How the internet has changed the world

The Internet’s history has just begun

But it was the creation of the World Wide Web in 1989 that revolutionized the way we communicate. The inventor of the World Wide Web was the British scientist Tim Berners-Lee, who created a system to share information through a network of computers. At the time he was working at CERN, the European physics laboratory near Geneva, on the Franco-Swiss border.

Here I want to look at the global expansion of the internet since then.

This chart shows the share and number of people using the internet, which in these statistics means everyone who has used the internet in the last three months. 1

The chart starts in 1990, one year before Berners-Lee released the first web browser and before the very first website went online (the site of CERN, which is still online). At that time very few computers around the world were connected to a network; estimates for 1990 suggest that only half a percent of the world population was online.

As the chart shows, this started to change in the 1990s, at least in some parts of the world: by the year 2000 almost half of the US population was accessing information through the internet. But across most of the world the internet had not yet had much influence – 93% of people in the East Asia and Pacific region, and 99% in South Asia and in Sub-Saharan Africa, were still offline in 2000. At the time of the dot-com crash, less than 7% of the world was online.

Sixteen years later, in 2016, three-quarters (76%) of people in the US were online, and during these years countries in many parts of the world caught up: in Malaysia 79% used the internet; in Spain and Singapore 81%; in France 86%; in South Korea and Japan 93%; in Denmark and Norway 97%; and Iceland tops the ranking with 98% of the population online. 2

At the other end of the spectrum, there are still countries where almost nothing has changed since 1990. In the very poorest countries – including Eritrea, Somalia, Guinea-Bissau, the Central African Republic, Niger, and Madagascar – fewer than 5% are online. At the very bottom is North Korea, where the country’s oppressive regime restricts most people to the walled-off North Korean intranet, Kwangmyong, and grants access to the global internet only to a very small elite.

But the overarching trend globally – and, as the chart shows, in all world regions – is clear: more and more people are online every year. The world is changing remarkably fast: on an average day over the last five years, 640,000 people went online for the first time. 3

That is roughly 27,000 every hour (640,000 ÷ 24 ≈ 26,700).

For those who are online most days it is easy to forget how young the internet still is. The timeline below the chart is a reminder of how recently websites and technologies that are now part of millions of people’s everyday lives became available: in the 1990s there was no Wikipedia, Twitter launched in 2006, and Our World in Data is only four years old (and look how many people have joined since then 4).

And while many of us cannot imagine our lives without the services the internet provides, the key message for me from this overview of the global history of the internet is that we are still in its very early stages. It was only in 2017 that half of the world population was online, which means that in 2018 close to half of the world population is still not using the internet. 5

The internet has already changed the world, but the big changes that the internet will bring still lie ahead. Its history has just begun.

3 Ways the Internet Has Changed the World – And Created New Opportunities

The rapid advancement of the internet has created unmistakable, significant changes to our everyday lives. The impact the Internet has on society is felt in almost everything we do — from ordering a pizza to starting a romantic relationship. It has affected how we communicate, how we learn about global events, and even how our brains function.

At this point, society is racing to catch up with developing web technology, creating a social evolution that has impacted how we celebrate significant life events. From weddings to graduations to baby announcements, there’s no denying that the way we celebrate is impacted by the web. Here are a few ways that the Internet has changed how we organize, participate in, and document the most significant aspects of our lives.

The Impact the Internet Has on Society

We Treat Intimacy Differently

The internet has affected the way we form and maintain relationships with friends, family, romantic partners, and acquaintances. Now that we can interact with each other and keep each other updated on our lives more easily than in the past, the notion of intimate relationships has changed.

For instance, social media allows people to constantly update their networks about their lives. Using photos, videos, text posts, and more, we present ourselves to everyone around us, staying in touch from moment to moment both with people we care about and with people we don’t. Casual acquaintances from years past know our political leanings, where we went to school, who we’re marrying, who our kids are, and even where we live.

This can create a new sense of intimacy that didn’t exist in the past, since people feel that they know all there is to know about the people in their lives. This, in turn, may have reduced the significance of personal life events such as family, college, and high school reunions: if we all know what everyone else is up to anyway, why spend the money and travel time to catch up in person?

Everything’s Crowd-Based

The beginning of the 21st century saw a huge rise in social media and other interactive, crowd-based communication platforms. This revolution upended the way we think about personal life events, because the internet gives us access to a huge variety of ideas and options – and now everyone has a say.

For one, as we grow more globally connected, more cultural ideas and traditions are being shared than ever. Culture flows easily across oceans and borders, so more people than ever before are picking up ideas about how to celebrate significant life events. American couples are including aspects of Indian culture in their weddings; European youth are beginning to throw American-style graduation parties. Now that ideas are shared publicly on crowd-based platforms like social media, people can pick and choose celebration ideas in ways that were unheard of even 20 years ago.

A quick scroll through social media also shows how personal life events are growing increasingly crowd-based. As young couples announce their wedding engagements on Facebook, graduations on Instagram, and pregnancies on Twitter, it’s easy to pick out comments from interested family members, friends, and even acquaintances giving their opinions, wanted or not. Everything from wedding colors to baby shower themes has become a sort of collective event, as social media invites everyone to participate. In other words, your engagement photo shoot is no longer yours; it’s part of the collective “ours” to comment on as we like.

We’re Constantly Connected

The rise of mobile communication, especially through smartphones, means that most people have constant access to the Internet. We are no longer tied to desktop computers; we can get online almost anytime, anywhere, which has created noticeable impacts on how we celebrate personal life events.

For instance, being constantly connected to the internet has created a need for near-constant documentation of our daily lives. Since social media has become such an integral part of how we communicate, many people feel a need to post about everything from their breakfast to the birth of their friend’s child online, all the time. People live-tweet arguments they overhear at restaurants, Snapchat clips from concerts in real time, and even pose for social media shots at funerals.

In response, people are starting to actively discourage social media documentation during celebrations of life events like weddings. It’s growing increasingly popular for engaged couples to ban cell phone photos and videos during their wedding ceremonies because of issues such as guests stepping out into the aisle for a photo or getting in the professional photographer’s way to snap that perfect shot.

While it’s difficult to say that the internet is the sole cause of these developments, there’s no denying that it has made a significant impact on how we celebrate personal life events. As we navigate the social evolution that comes with changes in technology, it will be interesting to pay attention to how these events continue to change with it.

As the CEO of one of the largest technology companies in the world puts it:

“Paper is no longer a big part of my day. I get 90% of my news online, and when I go to a meeting and want to jot things down, I bring my Tablet PC. It’s fully synchronized with my office machine so I have all the files I need.” – Bill Gates, Microsoft CEO

And Bill Gates isn’t alone. The past twenty-five years have seen a dramatic shift in the way people obtain information. Before the Internet was developed, print, radio and television news were the main ways people stayed informed about a variety of issues.

Throughout the 20th century, newspapers were gradually joined by radio, which was in turn joined by television. Each shift rendered the prior form of media slightly more obsolete and revealed a universal theme: people will always want to obtain news as quickly as possible and will embrace any new technology that lets them do so.

The Internet, specifically social media websites and blogs, can deliver information to the public in real time, and the public now expects it to.

However, as the methods of providing news have changed, so have the providers. In the past, journalists and reporters presented stories to the public that were extensively researched and fact-checked to ensure accuracy and maintain accountability; now there is increasing pressure to distribute news stories as quickly as possible to avoid being pre-empted by blogs. Unfortunately, the quality of the news is suffering.

The Future of The News

In years past, television, radio, newspapers, and magazines provided the daily information that people needed in order to have a sense of what was going on in the world, in this country and in their local community. If people wanted to research a topic, they would have to take a trip to the public library, where encyclopedias, card catalogs, microfilm, microfiche and books were commonplace tools that offered insight into any subject. The library was the place to access information.

With the advent of the Internet, life has changed. Most people are attached to their computers during the day and receive the bulk of their information from the Internet. Information is available 24 hours a day, seven days a week, and it is easy to reach.

People don’t have to go to the store to buy a newspaper or magazine because the public can access all of the information they could possibly need in the comfort of their own home or office.

Research is simple on the internet. A single Google search instantly returns hundreds of sources that can provide a wealth of information at any time, day or night.

Forty years of the internet: how the world changed for ever

Internet business cables in California. Photograph: Bob Sacha/Corbis

Towards the end of the summer of 1969 – a few weeks after the moon landings, a few days after Woodstock, and a month before the first broadcast of Monty Python’s Flying Circus – a large grey metal box was delivered to the office of Leonard Kleinrock, a professor at the University of California in Los Angeles. It was the same size and shape as a household refrigerator, and outwardly, at least, it had about as much charm. But Kleinrock was thrilled: a photograph from the time shows him standing beside it, in requisite late-60s brown tie and brown trousers, beaming like a proud father.

Had he tried to explain his excitement to anyone but his closest colleagues, they probably wouldn’t have understood. The few outsiders who knew of the box’s existence couldn’t even get its name right: it was an IMP, or «interface message processor», but the year before, when a Boston company had won the contract to build it, its local senator, Ted Kennedy, sent a telegram praising its ecumenical spirit in creating the first «interfaith message processor». Needless to say, though, the box that arrived outside Kleinrock’s office wasn’t a machine capable of fostering understanding among the great religions of the world. It was much more important than that.

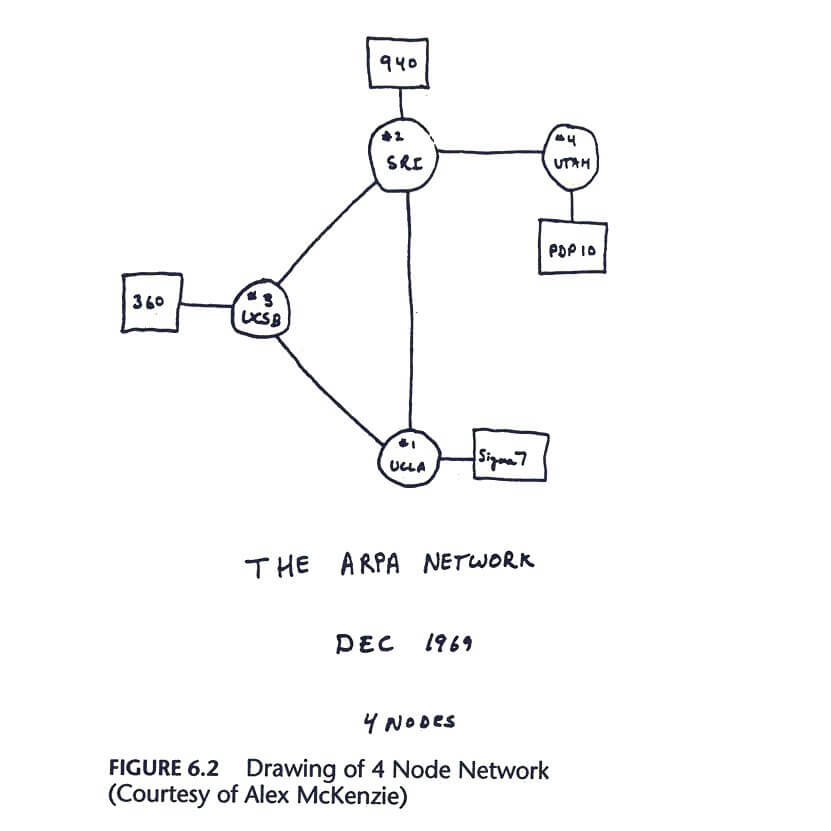

It’s impossible to say for certain when the internet began, mainly because nobody can agree on what, precisely, the internet is. (This is only partly a philosophical question: it is also a matter of egos, since several of the people who made key contributions are anxious to claim the credit.) But 29 October 1969 – 40 years ago next week – has a strong claim for being, as Kleinrock puts it today, «the day the infant internet uttered its first words». At 10.30pm, as Kleinrock’s fellow professors and students crowded around, a computer was connected to the IMP, which made contact with a second IMP, attached to a second computer, several hundred miles away at the Stanford Research Institute, and an undergraduate named Charley Kline tapped out a message. Samuel Morse, sending the first telegraph message 125 years previously, chose the portentous phrase: «What hath God wrought?» But Kline’s task was to log in remotely from LA to the Stanford machine, and there was no opportunity for portentousness: his instructions were to type the command LOGIN.

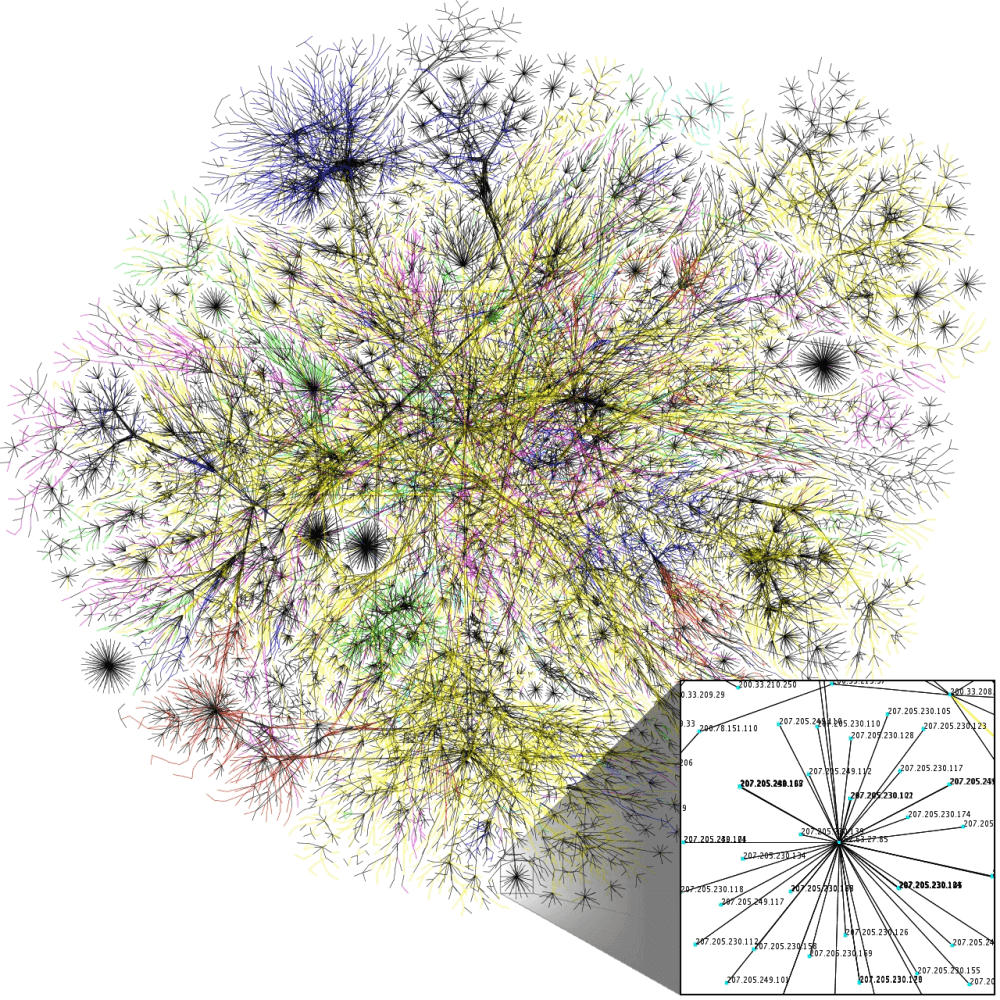

To say that the rest is history is the emptiest of cliches – but trying to express the magnitude of what began that day, and what has happened in the decades since, is an undertaking that quickly exposes the limits of language. It’s interesting to compare how much has changed in computing and the internet since 1969 with, say, how much has changed in world politics. Consider even the briefest summary of how much has happened on the global stage since 1969: the Vietnam war ended; the cold war escalated then declined; the Berlin Wall fell; communism collapsed; Islamic fundamentalism surged. And yet nothing has quite the power to make people in their 30s, 40s or 50s feel very old indeed as reflecting upon the growth of the internet and the world wide web. Twelve years after Charley Kline’s first message on the Arpanet, as it was then known, there were still only 213 computers on the network; but 14 years after that, 16 million people were online, and email was beginning to change the world; the first really usable web browser wasn’t launched until 1993, but by 1995 we had Amazon, by 1998 Google, and by 2001, Wikipedia, at which point there were 513 million people online. Today the figure is more like 1.7 billion.

Unless you are 15 years old or younger, you have lived through the dotcom bubble and bust, the birth of Friends Reunited and Craigslist and eBay and Facebook and Twitter, blogging, the browser wars, Google Earth, filesharing controversies, the transformation of the record industry, political campaigning, activism and campaigning, the media, publishing, consumer banking, the pornography industry, travel agencies, dating and retail; and unless you’re a specialist, you’ve probably only been following the most attention-grabbing developments. Here’s one of countless statistics that are liable to induce feelings akin to vertigo: on New Year’s Day 1994 – only yesterday, in other words – there were an estimated 623 websites. In total. On the whole internet. «This isn’t a matter of ego or crowing,» says Steve Crocker, who was present that day at UCLA in 1969, «but there has not been, in the entire history of mankind, anything that has changed so dramatically as computer communications, in terms of the rate of change.»

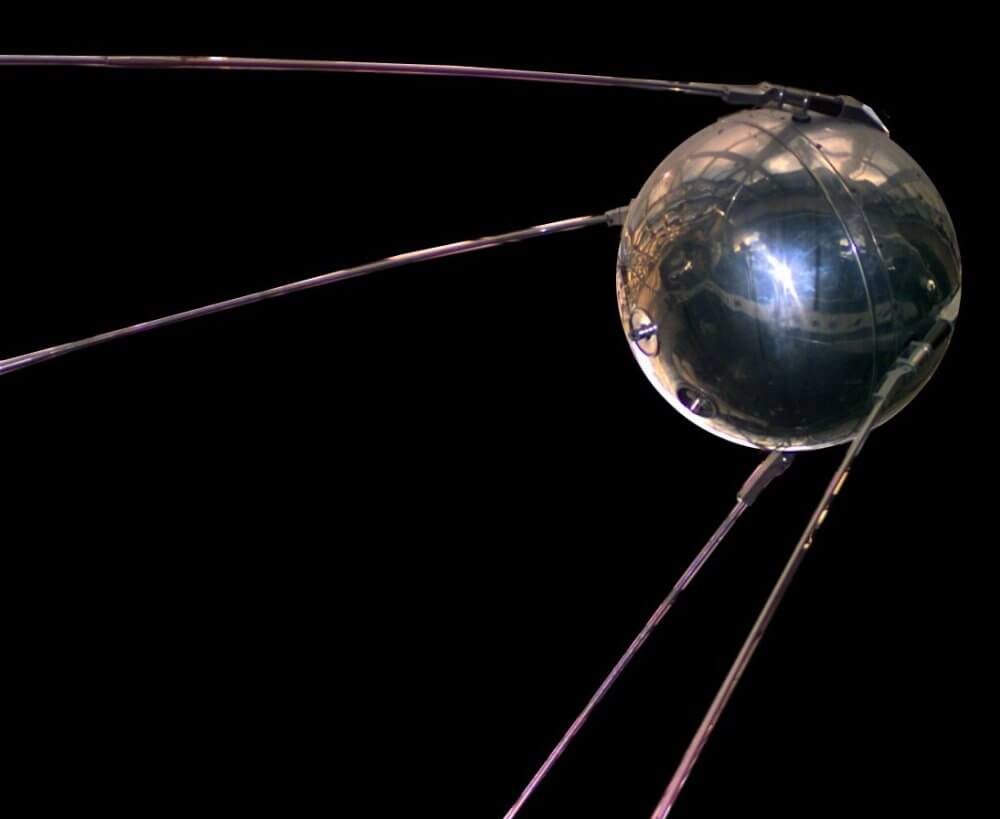

Looking back now, Kleinrock and Crocker are both struck by how, as young computer scientists, they were simultaneously aware that they were involved in something momentous and, at the same time, merely addressing a fairly mundane technical problem. On the one hand, they were there because of the Russian Sputnik satellite launch, in 1957, which panicked the American defence establishment, prompting Eisenhower to channel millions of dollars into scientific research, and establishing Arpa, the Advanced Research Projects Agency, to try to win the arms technology race. The idea was «that we would not get surprised again,» said Robert Taylor, the Arpa scientist who secured the money for the Arpanet, persuading the agency’s head to give him a million dollars that had been earmarked for ballistic missile research. With another pioneer of the early internet, JCR Licklider, Taylor co-wrote the paper, «The Computer As A Communication Device», which hinted at what was to come. «In a few years, men will be able to communicate more effectively through a machine than face to face,» they declared. «That is rather a startling thing to say, but it is our conclusion.»

On the other hand, the breakthrough accomplished that night in 1969 was a decidedly down-to-earth one. The Arpanet was not, in itself, intended as some kind of secret weapon to put the Soviets in their place: it was simply a way to enable researchers to access computers remotely, because computers were still vast and expensive, and the scientists needed a way to share resources. (The notion that the network was designed so that it would survive a nuclear attack is an urban myth, though some of those involved sometimes used that argument to obtain funding.) The technical problem solved by the IMPs wasn’t very exciting, either. It was already possible to link computers by telephone lines, but it was glacially slow, and every computer in the network had to be connected, by a dedicated line, to every other computer, which meant you couldn’t connect more than a handful of machines without everything becoming monstrously complex and costly. The solution, called «packet switching» – which owed its existence to the work of a British physicist, Donald Davies – involved breaking data down into blocks that could be routed around any part of the network that happened to be free, before getting reassembled at the other end.
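The packet-switching idea described above (break the data into blocks, let each block travel independently, reassemble at the far end) can be illustrated with a toy Python sketch. It is not how the IMPs were actually programmed: the packet size, the (sequence number, payload) packet format and the random shuffle standing in for blocks arriving out of order over different routes are all assumptions made for the example.

```python
import random


def packetize(message: bytes, size: int) -> list[tuple[int, bytes]]:
    """Split a message into numbered blocks ("packets") of at most `size` bytes.

    The (sequence number, payload) format is a simplification chosen for this sketch.
    """
    return [
        (seq, message[i:i + size])
        for seq, i in enumerate(range(0, len(message), size))
    ]


def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Restore the original order by sequence number and join the payloads."""
    return b"".join(payload for _, payload in sorted(packets))


if __name__ == "__main__":
    original = b"LOGIN"
    packets = packetize(original, size=2)  # [(0, b'LO'), (1, b'GI'), (2, b'N')]
    random.shuffle(packets)  # stand-in for packets taking different routes and arriving out of order
    print(reassemble(packets).decode())  # LOGIN
```

Numbering each block is what lets the receiver put the message back together regardless of the route each packet took; real networks also add addressing, error checking and retransmission, which this sketch leaves out.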

«I thought this was important, but I didn’t really think it was as challenging as what I thought of as the ‘real research’,» says Crocker, a genial Californian, now 65, who went on to play a key role in the expansion of the internet. «I was particularly fascinated, in those days, by artificial intelligence, and by trying to understand how people think. I thought that was a much more substantial and respectable research topic than merely connecting up a few machines. That was certainly useful, but it wasn’t art.»

Still, Kleinrock recalls a tangible sense of excitement that night as Kline sat down at the SDS Sigma 7 computer, connected to the IMP, and at the same time made telephone contact with his opposite number at Stanford. As his colleagues watched, he typed the letter L, to begin the word LOGIN.

«Have you got the L?» he asked, down the phone line. «Got the L,» the voice at Stanford responded.

Kline typed an O. «Have you got the O?»

«Got the O,» Stanford replied.

Kline typed a G, at which point the system crashed, and the connection was lost. The G didn’t make it through, which meant that, quite by accident, the first message ever transmitted across the nascent internet turned out, after all, to be fittingly biblical: «LO».

Frenzied visions of a global conscious brain

One of the most intriguing things about the growth of the internet is this: to a select group of technological thinkers, the surprise wasn’t how quickly it spread across the world, remaking business, culture and politics – but that it took so long to get off the ground. Even when computers were mainly run on punch-cards and paper tape, there were whispers that it was inevitable that they would one day work collectively, in a network, rather than individually. (Tracing the origins of online culture even further back is some people’s idea of an entertaining game: there are those who will tell you that the Talmud, the book of Jewish law, contains a form of hypertext, the linking-and-clicking structure at the heart of the web.) In 1945, the American presidential science adviser, Vannevar Bush, was already imagining the «memex», a device in which «an individual stores all his books, records, and communications», which would be linked to each other by «a mesh of associative trails», like weblinks. Others had frenzied visions of the world’s machines turning into a kind of conscious brain. And in 1946, an astonishingly complete vision of the future appeared in the magazine Astounding Science Fiction. In a story entitled A Logic Named Joe, the author Murray Leinster envisioned a world in which every home was equipped with a tabletop box that he called a «logic»:

Despite all these predictions, though, the arrival of the internet in the shape we know it today was never a matter of inevitability. It was a crucial idiosyncrasy of the Arpanet that its funding came from the American defence establishment – but that the millions ended up on university campuses, with researchers who embraced an anti-establishment ethic, and who in many cases were committedly leftwing; one computer scientist took great pleasure in wearing an anti-Vietnam badge to a briefing at the Pentagon. Instead of smothering their research in the utmost secrecy – as you might expect of a cold war project aimed at winning a technological battle against Moscow – they made public every step of their thinking, in documents known as Requests For Comments.

Deliberately or not, they helped encourage a vibrant culture of hobbyists on the fringes of academia – students and rank amateurs who built their own electronic bulletin-board systems and eventually FidoNet, a network to connect them to each other. An argument can be made that these unofficial tinkerings did as much to create the public internet as did the Arpanet. Well into the 90s, by the time the Arpanet had been replaced by NSFNet, a larger government-funded network, it was still the official position that only academic researchers, and those affiliated to them, were supposed to use the network. It was the hobbyists, making unofficial connections into the main system, who first opened the internet up to allcomers.

What made all of this possible, on a technical level, was simultaneously the dullest-sounding and most crucial development since Kleinrock’s first message. This was the suite of protocols known as TCP/IP, which made it possible for networks to connect to other networks, creating a «network of networks», capable of expanding virtually infinitely – which is another way of defining what the internet is. It’s for this reason that the inventors of TCP/IP, Vint Cerf and Bob Kahn, are contenders for the title of fathers of the internet, although Kleinrock, understandably, disagrees. «Let me use an analogy,» he says. «You would certainly not credit the birth of aviation to the invention of the jet engine. The Wright Brothers launched aviation. Jet engines greatly improved things.»

The spread of the internet across the Atlantic, through academia and eventually to the public, is a tale too intricate to recount here, though it bears mentioning that British Telecom and the British government didn’t really want the internet at all: along with other European governments, they were in favour of a different networking technology, Open Systems Interconnection (OSI). Nevertheless, by July 1992, an Essex-born businessman named Cliff Stanford had opened Demon Internet, Britain’s first commercial internet service provider. Officially, the public still wasn’t meant to be connecting to the internet. «But it was never a real problem,» Stanford says today. «The people trying to enforce that weren’t working very hard to make it happen, and the people working to do the opposite were working much harder.» The French consulate in London was an early customer, paying Demon £10 a month instead of thousands of pounds to lease a private line to Paris from BT.

After a year or so, Demon had between 2,000 and 3,000 users, but they weren’t always clear why they had signed up: it was as if they had sensed the direction of the future, in some inchoate fashion, but hadn’t thought things through any further than that. «The question we always got was: ‘OK, I’m connected – what do I do now?'» Stanford recalls. «It was one of the most common questions on our support line. We would answer with ‘Well, what do you want to do? Do you want to send an email?’ ‘Well, I don’t know anyone with an email address.’ People got connected, but they didn’t know what was meant to happen next.»

Fortunately, a couple of years previously, a British scientist based at Cern, the physics laboratory outside Geneva, had begun to answer that question, and by 1993 his answer was beginning to be known to the general public. What happened next was the web.

The birth of the web

I sent my first email in 1994, not long after arriving at university, from a small, under-ventilated computer room that smelt strongly of sweat. Email had been in existence for decades by then – the @ symbol was introduced in 1971, and the first message, according to the programmer who sent it, Ray Tomlinson, was «something like QWERTYUIOP». (The test messages, Tomlinson has said, «were entirely forgettable, and I have, therefore, forgotten them».) But according to an unscientific poll of friends, family and colleagues, 1994 seems fairly typical: I was neither an early adopter nor a late one. A couple of years later I got my first mobile phone, which came with two batteries: a very large one, for normal use, and an extremely large one, for those occasions on which you might actually want a few hours of power. By the time I arrived at the Guardian, email was in use, but only as an add-on to the internal messaging system, operated via chunky beige terminals with green-on-black screens. It took for ever to find the @ symbol on the keyboard, and I don’t remember anything like an inbox, a sent-mail folder, or attachments. I am 34 years old, but sometimes I feel like Methuselah.

I have no recollection of when I first used the world wide web, though it was almost certainly when people still called it the world wide web, or even W3, perhaps in the same breath as the phrase «information superhighway», made popular by Al Gore. (Or «infobahn»: did any of us really, ever, call the internet the «infobahn»?) For most of us, though, the web is in effect synonymous with the internet, even if we grasp that in technical terms that’s inaccurate: the web is simply a system that sits on top of the internet, making it greatly easier to navigate the information there, and to use it as a medium of sharing and communication. But the distinction rarely seems relevant in everyday life now, which is why its inventor, Tim Berners-Lee, has his own legitimate claim to be the progenitor of the internet as we know it. The first ever website was his own, at CERN: info.cern.ch.

The idea that a network of computers might enable a specific new way of thinking about information, instead of just allowing people to access the data on each other’s terminals, had been around for as long as the idea of the network itself: it’s there in Vannevar Bush’s memex, and Murray Leinster’s logics. But the grandest expression of it was Project Xanadu, launched in 1960 by the American philosopher Ted Nelson, who imagined – and started to build – a vast repository for every piece of writing in existence, with everything connected to everything else according to a principle he called «transclusion». It was also, presciently, intended as a method for handling many of the problems that would come to plague the media in the age of the internet, automatically channelling small royalties back to the authors of anything that was linked. Xanadu was a mind-spinning vision – and at least according to an unflattering portrayal by Wired magazine in 1995, over which Nelson threatened to sue, led those attempting to create it into a rabbit-hole of confusion, backbiting and «heart-slashing despair». Nelson continues to develop Xanadu today, arguing that it is a vastly superior alternative to the web. «WE FIGHT ON,» the Xanadu website declares, sounding rather beleaguered, not least since the declaration is made on a website.

Web browsers crossed the border into mainstream use far more rapidly than had been the case with the internet itself: Mosaic launched in 1993 and Netscape followed soon after, though it was an embarrassingly long time before Microsoft realised the commercial necessity of getting involved at all. Amazon and eBay were online by 1995. And in 1998 came Google, offering a powerful new way to search the proliferating mass of information on the web. Until not too long before Google, it had been common for search or directory websites to boast about how much of the web’s information they had indexed – the relic of a brief period, hilarious in hindsight, when a user might genuinely have hoped to check all the webpages that mentioned a given subject. Google, and others, saw that the key to the web’s future would be helping users exclude almost everything on any given topic, restricting search results to the most relevant pages.

Without most of us quite noticing when it happened, the web went from being a strange new curiosity to a background condition of everyday life: I have no memory of there being an intermediate stage, when, say, half the information I needed on a particular topic could be found online, while the other half still required visits to libraries. «I remember the first time I saw a web address on the side of a truck, and I thought, huh, OK, something’s happening here,» says Spike Ilacqua, who years beforehand had helped found The World, the first commercial internet service provider in the US. Finally, he stopped telling acquaintances that he worked in «computers», and started to say that he worked on «the internet», and nobody thought that was strange.

It is absurd – though also unavoidable here – to compact the whole of what happened from then onwards into a few sentences: the dotcom boom, the historically unprecedented dotcom bust, the growing «digital divide», and then the hugely significant flourishing, over the last seven years, of what became known as Web 2.0. It is only this latter period that has revealed the true capacity of the web for «generativity», for the publishing of blogs by anyone who could type, for podcasting and video-sharing, for the undermining of totalitarian regimes, for the use of sites such as Twitter and Facebook to create (and ruin) friendships, spread fashions and rumours, or organise political resistance. But you almost certainly know all this: it’s part of what these days, in many parts of the world, we call «just being alive».

The most confounding thing of all is that in a few years’ time, all this stupendous change will probably seem like not very much change at all. As Crocker points out, when you’re dealing with exponential growth, the distance from A to B looks huge until you get to point C, whereupon the distance between A and B looks like almost nothing; when you get to point D, the distance between B and C looks similarly tiny. One day, presumably, everything that has happened in the last 40 years will look like early throat-clearings — mere preparations for whatever the internet is destined to become. We will be the equivalents of the late-60s computer engineers, in their horn-rimmed glasses, brown suits, and brown ties, strange, period-costume characters populating some dimly remembered past.

Will you remember when the web was something you accessed primarily via a computer? Will you remember when there were places you couldn’t get a wireless connection? Will you remember when «being on the web» was still a distinct concept, something that described only a part of your life, instead of permeating all of it? Will you remember Google?

A Brief History of the Internet – Who Invented It, How it Works, and How it Became the Web We Use Today

Let’s start by clearing up some misconceptions about the Internet. The Internet is not the Web. The Internet is not a cloud. And the Internet is not magic.

It may seem like something automatic that we take for granted, but there is a whole process that happens behind the scenes that makes it run.

So. The Internet. What is it?

The Internet is actually a wire. Well, many wires that connect computers all around the world.

The Internet is also infrastructure. It’s a global network of interconnected computers that communicate in a standardised way, using agreed protocols.

Really, it’s a network of networks. It’s a fully distributed system of computing devices that provides end-to-end connectivity across every part of the network. The aim is for every device to be able to communicate with any other device.

The Internet is something we all use every day, and many of us can’t imagine our lives without it. The Internet and all the technological advances it offers have changed our society. It has changed our jobs, the way we consume news and share information, and the way we communicate with one another.

It has also created countless opportunities, helped humanity progress, and shaped our human experience.

There is nothing else like it: it’s one of the greatest inventions of all time. But do we ever stop to think why it was created in the first place, how it all happened, or who created it? Or how the Internet became what it is today?

This article is more of a journey back in time. We’ll learn about the origins of the Internet and how far it has come throughout the years, as this can be beneficial in our coding journeys.

Learning about the history of how the Internet was created has made me realise that everything comes down to problem solving. And that is what coding is all about. Having a problem, trying to find a solution to it, and improving upon it once that solution is found.

The Internet, a technology so expansive and ever-changing, wasn’t the work of just one person or institution. Many people contributed to its growth by developing new features.

So it has developed over time. It was at least 40 years in the making and kept (well, still keeps) on evolving.

And it wasn’t created just for the sake of creating something. The Internet we know and use today was a result of an experiment, ARPANET, the precursor network to the internet.

And it all started because of a problem.

Scared of Sputnik

It was in the midst of the Cold War, on October 4, 1957, that the Soviets launched Sputnik, the first man-made satellite, into space.

As it was the world’s first artificial object to orbit the Earth, this was alarming for Americans.

The Soviets appeared to be not only ahead in science and technology but also a threat. Americans feared that the Soviets would spy on them, that they would win the Cold War, and that nuclear attacks on American soil were possible.

So Americans started to think more seriously about science and technology. After the Sputnik wake-up call, the space race began. Not long after, in 1958, the US government set up several new agencies, one of them being ARPA.

ARPA stands for Advanced Research Projects Agency. It was a Department of Defense agency that funded research, including research in computer science, and it gave scientists and researchers a way to share information, findings, and knowledge, and to communicate. It also helped the field of computer science develop and evolve.

It was there that the vision of J.C.R. Licklider, who led ARPA’s computer research program from 1962, would start to take shape in the years to come.

Without ARPA the Internet would not exist. It was because of this institution that the very first version of the Internet was created – ARPANET.

Creating a Global Network of Computers

Although Licklider left ARPA a few years before ARPANET was created, his ideas and vision laid the foundation for the Internet. Today we may take what it has become for granted.

Computers at the time were not as we know them now. They were massive and extremely expensive. They were seen mostly as number-crunching calculators, and they could only perform a limited number of tasks.

So in the era of mainframe computers, each one could only run a specific task. For an experiment to take place that required multiple tasks, it would require more than one computer. But that meant buying more expensive hardware.

The solution to that?

Connecting multiple computers to the same network and getting those different systems to speak the same language in order to communicate with one another.

The idea of multiple computers connected to a network was not new. Such networks existed in the 1950s and were called WANs (Wide Area Networks).

However, WANs had many technological limitations and were constrained both in the areas they covered and in what they could do. Each machine spoke its own language, which made it impossible for it to communicate with other machines.

So this idea of a ‘global network’ that Licklider proposed and then popularised in the early 1960s was revolutionary. It tied in with his greater vision of a perfect symbiosis between computers and humans.

He was certain that in the future computers would improve the quality of life and get rid of repetitive tasks, leaving room and time for humans to think creatively, more in-depth, and let their imagination flow.

That could only come to fruition if different systems broke the language barrier and integrated into a wider network. This idea of «networking» is the foundation of the Internet we use today. At its core, it is the need for common standards that let different systems communicate.

Building a Distributed Packet Switched Network

Up until this point (the end of the 1960s), when you wanted to run tasks on computers, data was sent over the telephone line using a method called «circuit switching».

This method worked just fine for phone calls but was very inefficient for computers and the Internet.

With this method, a dedicated connection had to be set up between two machines, and data could only travel over that one connection, to one computer at a time. It was common for information to get lost and for the whole procedure to have to restart from the beginning. It was time-consuming, ineffective, and costly.

And in the Cold War era, it was also dangerous. An attack on the telephone system could destroy the whole communication system.

The answer to that problem was packet switching.

It was a simple and efficient method of transferring data. Instead of sending data as one big stream, it cuts it up into pieces.

Each of these pieces, or packets, is then forwarded as fast as possible along whatever routes are available. Packets from the same message can each take a different route through the network until they reach their destination.

Once there, they are reassembled. That’s possible because each packet carries information about the sender, the destination, and a sequence number. This allows the receiver to put them back together in their original order.
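To make the idea concrete, here is a minimal Python sketch of packet switching, assuming fixed-size pieces and a made-up `Packet` record carrying the three labels described above (sender, destination, and a sequence number). The names `split_into_packets` and `reassemble` are hypothetical, and shuffling the list only stands in for packets taking different routes; it is not a model of any real network.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    sender: str       # where the packet came from
    destination: str  # where the packet is going
    seq: int          # position of this piece in the original message
    payload: bytes    # the piece of data itself


def split_into_packets(data: bytes, sender: str, destination: str, size: int) -> list[Packet]:
    """Cut a message into pieces of at most `size` bytes and label each one."""
    return [
        Packet(sender, destination, seq, data[start:start + size])
        for seq, start in enumerate(range(0, len(data), size))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Put the pieces back into their original order using the sequence numbers."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


message = b"Packets can travel by different routes"
packets = split_into_packets(message, sender="host-a", destination="host-b", size=8)
random.shuffle(packets)  # stand-in for packets arriving out of order
assert reassemble(packets) == message
```

However the packets are shuffled, the sequence numbers are enough for the receiver to rebuild the original message.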

This method was researched by different scientists, but the ideas of Paul Baran on distributed networks were later adopted by ARPANET.

Baran was trying to figure out a communication system that could survive a nuclear attack. Essentially he wanted to discover a communication system that could handle failure.

He came to the conclusion that networks can be built around two types of structures: centralised and distributed.

From those structures there came three types of networks: centralised, decentralised, and distributed. Out of those three, it was only the last one that was fit to survive an attack.

If a part of that kind of network was destroyed, the rest of it would still function and the task would simply be moved to another part.
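Baran’s argument can be illustrated with a toy simulation. The sketch below builds two small, hypothetical networks, a centralised star with one hub and a distributed mesh where every node has several links, then removes one node from each and uses a breadth-first search to check which nodes can still be reached. The topologies and node names are illustrative assumptions, not Baran’s own models.

```python
from collections import deque


def undirected(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Build an adjacency map where every link works in both directions."""
    graph: dict[str, set[str]] = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph: dict[str, set[str]], start: str, removed: set[str]) -> set[str]:
    """Breadth-first search from `start`, skipping any node in `removed` (destroyed nodes)."""
    if start in removed:
        return set()
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in removed and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


# Centralised: every node hangs off a single hub.
star = undirected([("hub", n) for n in "ABCD"])
# Distributed: a ring of five nodes plus two cross-links, so each node has several paths.
mesh = undirected([("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"), ("E", "A"), ("A", "C"), ("B", "D")])

# Destroy one node in each network and see what A can still reach.
print(sorted(reachable(star, "A", removed={"hub"})))  # ['A']
print(sorted(reachable(mesh, "A", removed={"C"})))    # ['A', 'B', 'D', 'E']
```

In the star, losing the hub cuts every node off; in the mesh, traffic can still find a path around the missing node, which is the property Baran was after.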

At the time, they didn’t have rapid expansion of the network in mind; there was no need for it yet. It was only in the years to come that this expansion started to take shape. Baran’s ideas were ahead of their time, and they laid the foundation for how the Internet works now.

The experimental packet switched network was a success. It led to the early creation of the ARPANET architecture which adopted this method.

How ARPANET Was Built

What started off as a response to a Cold War threat was turning into something different. The first prototype of the Internet slowly began to take shape and the first computer network was built, ARPANET.

The goal now was resource sharing, whether that was data, findings, or applications. It would allow people, no matter where they were, to harness the power of expensive computing that was far away, as if they were right in front of them.

Up until this point, scientists couldn’t use resources available on computers in another location. Each mainframe computer spoke its own language, so the systems were incompatible and couldn’t communicate.

In order for computers to be effective, though, they needed to speak the same language and be linked together into a network.

So the solution to that was to build a network that established communication links between multiple resource-sharing mainframe supercomputers that were miles apart.

Work began on an experimental, nationwide packet-switched network linking centers run by agencies and universities.

On October 29, 1969, two computers made their first connection and spoke: ‘node to node’ communication from one computer to another. It was an experiment that was about to revolutionize communication.

The first ever message was delivered from UCLA (University of California, Los Angeles) to SRI (the Stanford Research Institute).

It read simply «LO».

The intended message was «LOGIN», but the system crashed after the first two letters and had to be rebooted. Still, it worked! The first step had been taken and the language barrier had been broken.

By the end of 1969 the network connected four nodes: UCLA, SRI, UCSB (University of California, Santa Barbara), and the University of Utah.

The network grew steadily over the following years, and more and more universities joined.

By 1973 there were even nodes connecting to England and Norway. ARPANET managed to connect these supercomputing centers run by universities together into its network.

One of the greatest achievements of that time was the emergence of a new culture, one that revolved around sharing and collectively finding the best possible solution to a problem through networking.

During that time scientists and researchers were questioning every aspect of the network – technical aspects as well as the moral side of things, too.

The environments where these discussions were taking place were welcoming for all and free of hierarchies. Everyone was free to express their opinion and collaborate to solve the big issues that arose.

We see that kind of culture carrying over to the Internet of today. Through forums, social media, and the like, people ask questions to get answers or come together to deal with problems, whatever they may be, that affect the human condition and experience.

Meanwhile, other networks were being built, each with its own dialect and its own standards for how data was transferred. It was impossible for them to integrate into a larger network like the Internet we know today.

Getting these different networks to speak to one another – or Internetworking, a term scientists used for this process – proved to be a challenge.

A Need for Common Standards

Now our devices are designed so that they can connect to the wider global network automatically. But back then this process was a complex task.

This worldwide infrastructure, the network of networks that we call the Internet, is based on certain agreed-upon protocols. These define how networks communicate and exchange data.

Even in its early days, ARPANET was a secure and reliable packet-switched network, but it lacked a common language that would let computers outside the network communicate with computers on it.

How could these early networks communicate with one another? The network needed to expand even further for the vision of a ‘global network’ to become a reality.

To build an open network of networks, a general protocol was needed. That is, a set of rules.

Those rules had to be strict enough for secure data transfer but also loose enough to accommodate all the ways that data was transferred.

TCP/IP Saves the Day

Vint Cerf and Bob Kahn began working on the design of what we now call the Internet. In 1978 their original protocol was split into the Transmission Control Protocol and the Internet Protocol, together known as TCP/IP.

The rules for the Interconnection were:

As Cerf explained:

The job of TCP is merely to take a stream of messages produced by one HOST and reproduce the stream at a foreign receiving HOST without change.

The Internet Protocol (IP) makes locating information possible when looking among the plethora of machines available.
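One way to picture how IP locates a machine is the forwarding table each router keeps. The sketch below uses Python’s standard `ipaddress` module and a small, hypothetical table: the router names are made up, and the addresses come from the ranges reserved for documentation (RFC 5737). It applies longest-prefix matching, the rule modern IP routers use to pick the most specific route, though real routers use specialised data structures rather than a linear scan.

```python
import ipaddress

# Hypothetical forwarding table: (destination network, next hop).
FORWARDING_TABLE = [
    (ipaddress.ip_network("0.0.0.0/0"), "default-gateway"),
    (ipaddress.ip_network("198.51.100.0/24"), "router-west"),
    (ipaddress.ip_network("198.51.100.128/25"), "router-west-2"),
    (ipaddress.ip_network("203.0.113.0/24"), "router-east"),
]


def next_hop(destination: str) -> str:
    """Return the next hop for the most specific (longest-prefix) route containing the address."""
    address = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in FORWARDING_TABLE if address in net]
    # The 0.0.0.0/0 default route matches every IPv4 address, so `matches` is never empty.
    _, best_hop = max(matches, key=lambda match: match[0].prefixlen)
    return best_hop


print(next_hop("198.51.100.7"))    # router-west
print(next_hop("198.51.100.200"))  # router-west-2 (the /25 is more specific than the /24)
print(next_hop("192.0.2.1"))       # default-gateway
```

Each router only needs to decide the next hop; repeated at every router along the way, these local decisions carry a packet across the network.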

So how does data travel?

So how does a packet get from its source to its destination, say from the sending computer to the receiving one? What role does TCP/IP play in this, and how does it make the journey possible?

When a user sends or receives information, the first step is for TCP on the sender’s machine to break that data into packets and distribute them. Those packets travel from router to router over the Internet.

Along the way, IP is in charge of addressing and forwarding those packets. At the other end, TCP on the receiving machine reassembles the packets into their original form.
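Cerf’s description of TCP, reproducing a stream «without change» even when packets arrive out of order or more than once, can be sketched as follows. This is a simplified illustration, assuming the sender labels each segment with its byte offset in the stream (real TCP sequence numbers also count bytes, but start from an initial value chosen when the connection is set up). It leaves out acknowledgements, retransmission, and checksums, and the `segment` function and `Receiver` class are hypothetical.

```python
import random


def segment(data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Sender side: cut the byte stream into (offset, chunk) segments."""
    return [(offset, data[offset:offset + size]) for offset in range(0, len(data), size)]


class Receiver:
    """Receiver side: hand bytes to the application strictly in order."""

    def __init__(self) -> None:
        self.next_offset = 0                # first byte not yet delivered
        self.buffer: dict[int, bytes] = {}  # early segments waiting for a gap to be filled
        self.delivered = bytearray()        # the stream as the application sees it

    def receive(self, offset: int, chunk: bytes) -> None:
        if offset < self.next_offset:
            return  # duplicate of data already delivered: ignore it
        self.buffer[offset] = chunk
        # Deliver every buffered segment that now lines up with the end of the stream.
        while self.next_offset in self.buffer:
            piece = self.buffer.pop(self.next_offset)
            self.delivered += piece
            self.next_offset += len(piece)


message = b"The job of TCP is merely to take a stream of messages"
segments = segment(message, size=8)
arrivals = segments + [segments[2]]  # simulate one packet being delivered twice
random.shuffle(arrivals)             # and packets arriving out of order

receiver = Receiver()
for offset, chunk in arrivals:
    receiver.receive(offset, chunk)
assert bytes(receiver.delivered) == message
```

The receiver holds back any segment that arrives early and releases data only once every earlier byte is present, so the application always sees the original stream.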

What Happened Next with the Internet?

Throughout the ’80s these protocols were tested thoroughly and adopted by many networks. The Internet continued to grow and scale rapidly.

The interconnected global network of networks was finally starting to happen. It was still used mainly by researchers, scientists, and programmers to exchange messages and information. The general public was largely unaware of it.

But that was about to change in the late ’80s when the Internet morphed again.

This was thanks to Tim Berners-Lee, who introduced the Web: how we know and use the Internet today.

The internet went from just sending messages from one computer to another to creating an accessible and intuitive way for people to browse what was at first a collection of interlinked websites. The Web was built on top of the Internet. The Internet is its backbone.

I hope this article gave some context and insight into the origins of this galaxy of information we use today. And I hope you enjoyed learning about how it actually all started and the path it took to becoming the Internet we know and use today.