How to Create a Kafka Topic


Create Kafka Topics in 3 Easy Steps

Creating a topic in production is an operational task that requires awareness and preparation. In this tutorial, we’ll explain the parameters to consider when creating a new topic in production.

Setting the partition count and replication factor is required when creating a new topic, and the choices described below affect the performance and reliability of your system.

PARTITIONS

Kafka topics are divided into a number of partitions, each of which contains messages in an immutable sequence. Each message in a partition is assigned, and identified by, a unique offset.

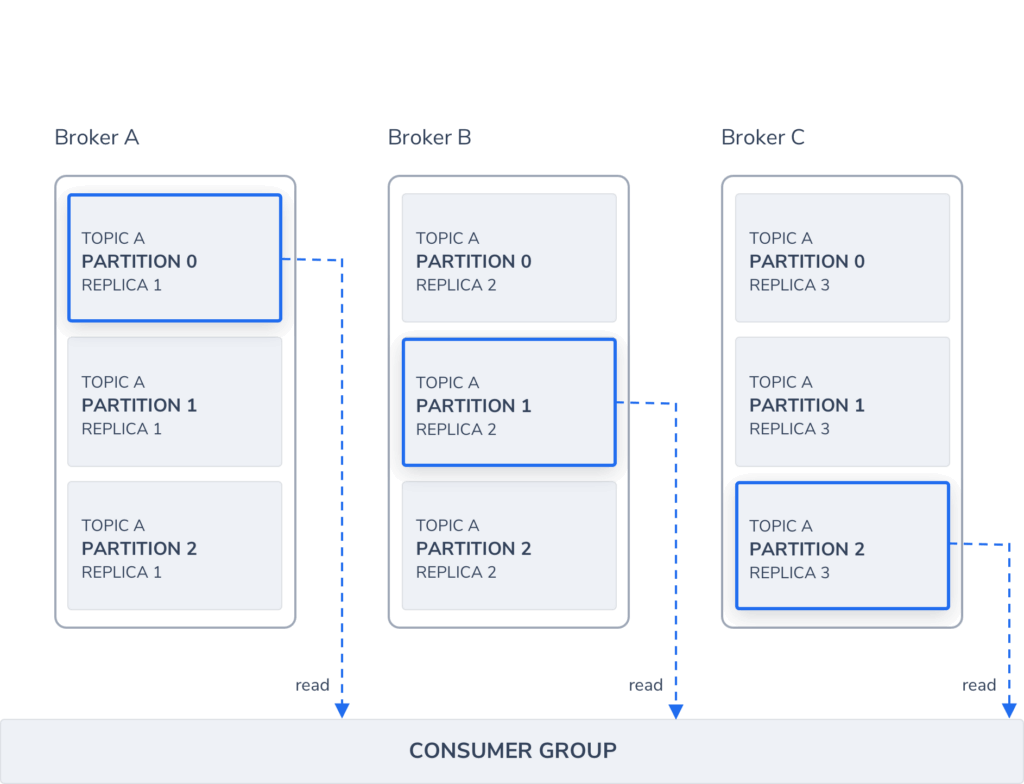

Partitions allow us to split the data of a topic across multiple brokers and balance the load between them. Within a consumer group, each partition can be consumed by only one consumer, so the parallelism of your service is bounded by the number of partitions the topic has.

The number of partitions is driven by two main factors: the number of messages and the average size of each message. When the volume is high, use the number of brokers as a multiplier so that the load is shared evenly and no hot partition puts a high load on a specific broker. We aim to keep the throughput of each partition at or below 1 MB per second.
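The sizing rule above can be sketched as a small calculation. This is only an illustration under stated assumptions: the function name `estimate_partitions` and the sample traffic figures are hypothetical, and rounding up to a multiple of the broker count is one possible reading of "use the number of brokers as a multiplier".

```python
import math

# Target throughput per partition, taken from the 1 MB per second guideline above.
TARGET_BYTES_PER_SEC = 1_000_000


def estimate_partitions(messages_per_sec, avg_message_bytes, broker_count):
    """Estimate a partition count, rounded up to a multiple of the broker count."""
    throughput = messages_per_sec * avg_message_bytes
    # Minimum number of partitions that keeps each one at or below the target rate.
    needed = max(1, math.ceil(throughput / TARGET_BYTES_PER_SEC))
    # Round up to a multiple of the broker count so partitions can spread evenly.
    return math.ceil(needed / broker_count) * broker_count


# Hypothetical workload: 20,000 messages/s of 500 bytes each on 3 brokers.
print(estimate_partitions(20_000, 500, 3))  # prints 12
```

Treat the result as a starting point; measured throughput in your own cluster should drive the final number.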

Set Number of Partitions

REPLICAS

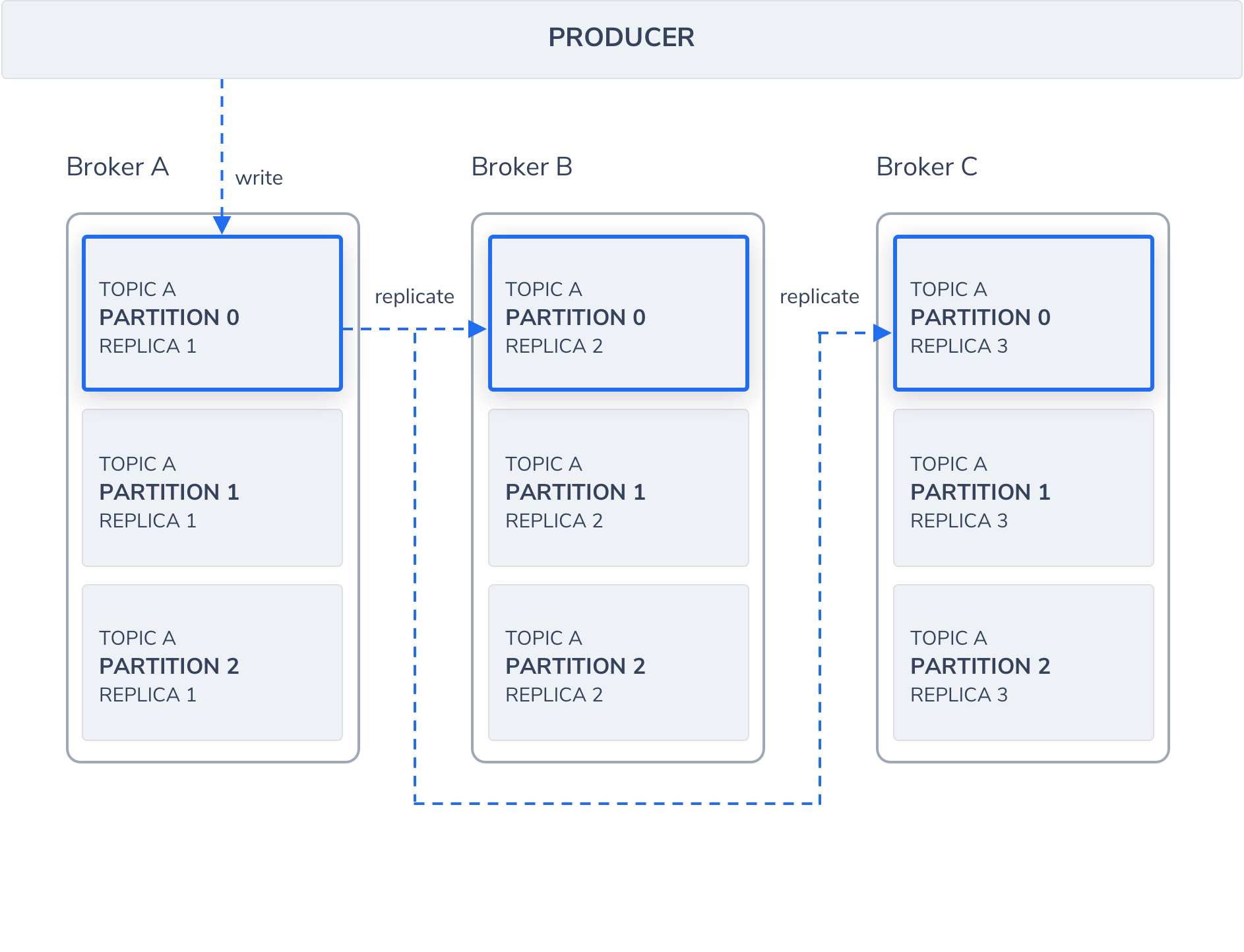

Kafka optionally replicates topic partitions so that, if the leader partition fails, a follower replica can replace it and become the leader. When configuring a topic, recall that partitions are designed for fast read and write speeds, scalability, and for distributing large amounts of data. The replication factor (RF), on the other hand, is designed to ensure a specified target of fault tolerance. Replicas do not directly affect performance since, at any given time, only one leader partition is responsible for handling producer and consumer requests via the broker servers.

Another consideration when deciding the replication factor is the number of consumers that your service needs in order to meet the production volume.

Set Replication Factor (RF)

If your topic uses keys, consider a replication factor of 3; otherwise, 2 should be sufficient.

RETENTION

Retention is the time to keep messages in a topic, or the maximum size the topic may reach before old messages are discarded. Decide according to your use case. By default, retention is 7 days, but this is configurable.
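As a rough illustration of setting retention at creation time, the sketch below converts a retention period in days to milliseconds and assembles a `kafka-topics.sh` command line without running it. The helper names, the `bin/kafka-topics.sh` path, the broker address `localhost:9092` and the topic name are assumptions; `--bootstrap-server` requires a reasonably recent Kafka release, and `retention.ms` is the topic-level setting for time-based retention.

```python
import shlex
from datetime import timedelta


def retention_ms(days):
    """Convert a retention period in days to milliseconds."""
    return int(timedelta(days=days).total_seconds() * 1000)


def create_topic_with_retention(topic, days, bootstrap="localhost:9092"):
    """Build (but do not run) a kafka-topics.sh command that sets retention.ms."""
    return [
        "bin/kafka-topics.sh", "--create",
        "--bootstrap-server", bootstrap,  # assumed broker address
        "--topic", topic,
        "--partitions", "1",
        "--replication-factor", "1",
        "--config", f"retention.ms={retention_ms(days)}",
    ]


command = create_topic_with_retention("sampleTopic", 7)
print(shlex.join(command))
```

To actually create the topic, the list could be passed to `subprocess.run(command, check=True)` from the Kafka installation directory.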

Set Retention

COMPACTION

To free up space and clean up unneeded records, Kafka can delete old records based on their age and on the size of the log. With compaction, it instead removes older records that share a key while retaining the most recent record for each key. Key-based compaction is useful for keeping the size of a topic under control when only the latest version of each record matters.
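To make key-based compaction concrete, here is a minimal in-memory sketch. It is a simplification, not Kafka's implementation: real compaction runs in the background on log segments, and the `compact` function and the sample records are made up for illustration.

```python
def compact(log):
    """Keep only the latest record for each key, in offset order."""
    latest = {}
    for offset, key, value in log:
        # A later offset overwrites any earlier record with the same key.
        latest[key] = (offset, key, value)
    return sorted(latest.values())


# (offset, key, value) records as they were appended to a partition.
log = [
    (0, "user-1", "a"),
    (1, "user-2", "b"),
    (2, "user-1", "c"),
    (3, "user-3", "d"),
    (4, "user-2", "e"),
]

compacted = compact(log)
print(compacted)
# [(2, 'user-1', 'c'), (3, 'user-3', 'd'), (4, 'user-2', 'e')]
```

Note that the surviving records keep their original offsets; compaction removes entries but does not renumber the log.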

Enable Compaction

For an introduction on Kafka, see this tutorial.

Apache Kafka – Create Topic – Syntax and Examples

Kafka – Create Topic

In Zookeeper-based clusters, all the information about Kafka topics is stored in Zookeeper (the cluster manager). For each topic, you may specify the replication factor and the number of partitions. A topic is identified by its name. All this information has to be provided as arguments to the shell script kafka-topics.sh when creating a new Kafka topic.

Syntax to create Kafka Topic

Following is the syntax to create a topic :

where the arguments are :

| Argument | Description |
| --- | --- |
| --zookeeper | IP and port at which Zookeeper is running. |
| --replication-factor | Number of replicas that the topic has to maintain in the Kafka cluster. |
| --partitions | Number of partitions into which the topic has to be partitioned. |
| --topic | Name of the Kafka topic to be created. |
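The arguments described in the table can be put together roughly as follows. The sketch only builds and prints the command line; it does not run it. The function name and the `bin/kafka-topics.sh` path are assumptions, and the `--zookeeper` option applies to older, Zookeeper-based releases (newer releases take `--bootstrap-server` with a broker address instead).

```python
import shlex


def create_topic_command(topic, partitions=1, replication_factor=1,
                         zookeeper="localhost:2181"):
    """Build (but do not run) the kafka-topics.sh command that creates a topic."""
    return [
        "bin/kafka-topics.sh", "--create",
        "--zookeeper", zookeeper,
        "--replication-factor", str(replication_factor),
        "--partitions", str(partitions),
        "--topic", topic,
    ]


command = create_topic_command("sampleTopic")
print(shlex.join(command))
# bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic sampleTopic
```

Printing the command first makes it easy to review before handing it to `subprocess.run` or pasting it into a terminal.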

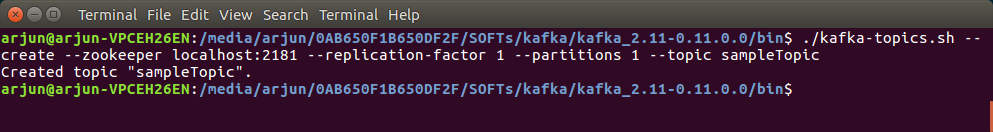

Example to Create a Kafka Topic named sampleTopic

To create a topic in Apache Kafka, Zookeeper and Kafka have to be up and running.

Start Zookeeper and Kafka Cluster

Navigate to the root of Kafka directory and run each of the following commands in separate terminals to start Zookeeper and Kafka Cluster.

Run kafka-topics.sh with required arguments

Kafka provides a script, kafka-topics.sh, in the /bin/ directory, to create a topic in the Kafka cluster.

An example is given below :

Running the script creates a topic named sampleTopic with a replication factor of 1 and 1 partition, with its metadata maintained in the Zookeeper instance running at localhost:2181.

Open a terminal from bin directory and run the shell script kafka-topics.sh as shown below :

The message Created topic “sampleTopic”. confirms that sampleTopic has been created.

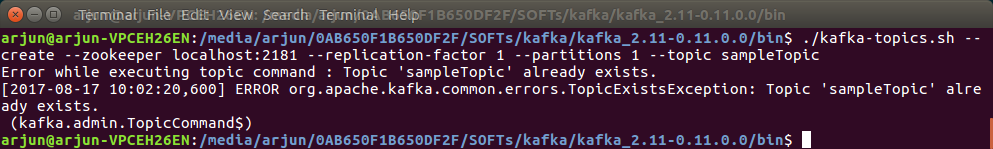

Error : Topic already exists.

When you try to create a duplicate topic, you could get an error saying the topic already exists. An example is given below :

Conclusion

In this Apache Kafka Tutorial – Kafka Create Topic, we have successfully created a Topic in the Kafka cluster.

How to create topics in apache kafka?

What is the best way to create topics in Kafka?


All available config options are defined in the Kafka documentation. IMHO, a simple rule for creating topics is the following: the number of replicas cannot be more than the number of nodes that you have. The number of topics and partitions is unaffected by the number of nodes in your cluster.

Partition number determines the parallelism of the topic since one partition can only be consumed by one consumer in a consumer group. For example, if you only have 10 partitions for a topic and 20 consumers in a consumer group, 10 consumers are idle, not receiving any messages. The number really depends on your application, but 1-1000s are all reasonable.
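The 10 partitions versus 20 consumers example can be checked with a toy assignment. This is a simplified round-robin sketch, not one of Kafka's actual assignors, and the consumer names are invented; the only property it relies on is that each partition has exactly one owner within the group.

```python
def assign_partitions(num_partitions, consumers):
    """Give each partition to exactly one consumer in the group, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


consumers = [f"consumer-{i}" for i in range(20)]
assignment = assign_partitions(10, consumers)

# Consumers that received no partition sit idle.
idle = [name for name, partitions in assignment.items() if not partitions]
print(len(idle))  # prints 10
```

Adding consumers beyond the partition count therefore adds no throughput; only more partitions would.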

Replica number is determined by your durability requirement. For a topic with replication factor N, Kafka can tolerate up to N-1 server failures without losing any messages committed to the log. Three replicas are a common configuration. Of course, the replica number has to be less than or equal to your broker number.
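Both rules from this answer fit in a few lines. The helper below is a hypothetical illustration, not part of any Kafka API: it rejects a replication factor larger than the broker count and returns the N-1 failures that can be tolerated.

```python
def tolerated_failures(replication_factor, broker_count):
    """Return how many broker failures a topic survives without losing committed data."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    if replication_factor > broker_count:
        # Each replica of a partition must live on a different broker.
        raise ValueError("replication factor cannot exceed the number of brokers")
    return replication_factor - 1


print(tolerated_failures(3, 5))  # prints 2
```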

The auto.create.topics.enable property controls whether Kafka automatically creates topics on the server. If this is set to true, when applications attempt to produce, consume, or fetch metadata for a non-existent topic, Kafka will automatically create the topic with the default replication factor and number of partitions. I would recommend turning it off in production and creating topics in advance.

I’d like to share my recent experience I described on my blog The Side Effect of Fetching Kafka Topic Metadata and also give my answers to certain questions brought up here.

1) What is the best way to create topics in kafka? Do we need to create topic prior to publish messages?

I think if we know we are going to use a fixed name Kafka topic in advance, we would be better off to create the topic before we write or read messages from it. This typically can be done in a post startup script by using bin/kafka-topics.sh see the official documentation for example. Or we can use KafkaAdminClient which was introduced in Kafka 0.11.0.0.

On the other hand, I do see certain cases where we would need to generate a topic name on the fly. In these cases, we wouldn’t be able to know the fixed topic name and we can rely on the “auto.create.topics.enable” property. When it is enabled, a topic would be created automatically. And this brings up the second question:

2) Which actions would cause the creation when auto.create.topics.enable is true

Actually as @Lan already pointed out

If this is set to true, when applications attempt to produce, consume, or fetch metadata for a non-existent topic, Kafka will automatically create the topic with the default replication factor and number of partitions.

I would like to put it even simpler:

If auto topic creation is enabled for Kafka brokers, whenever a Kafka broker sees a specific topic name, that topic will be created if it does not already exist

Also, the fact that fetching metadata automatically creates the topic is often overlooked, including by me. A specific example is the consumer.partitionsFor(topic) API: this method creates the given topic if it does not exist.

For anyone who is interested in more details I mentioned above, you can take a look at my own blog post on this same topic too The Side Effect of Fetching Kafka Topic Metadata.

What is a Kafka Topic and How to Create it?

Apache Kafka is an Open-source Stream Processing and Management Platform that receives, stores, organizes, and distributes data across different end-users or applications. As users can push hundreds and thousands of messages or data into Kafka Servers, there can be issues like Data Overloading and Data Duplication. Due to these problems, data present in the Kafka Servers often remains unorganized and confounded.

Consequently, consumers or end-users cannot effectively fetch the desired data from Kafka Servers. To eliminate the complications of having messy and unorganized data in the Kafka Servers, users can create different Kafka Topics in a Kafka Server.

Kafka Topic allows users to store and organize data according to different categories and use cases, allowing users to easily produce and consume messages to and from the Kafka Servers.

In this article, you will learn about Kafka, Kafka Topics, and steps for creating Kafka Topics in the Kafka server. You will also know about the process of Kafka Topic Configuration.


What is Apache Kafka?

Developed initially by LinkedIn, Apache Kafka is an Open-Source and Distributed Stream Processing platform that stores and handles real-time data. In other words, Kafka is an Event Streaming service that allows users to build event-driven or data-driven applications.

Since Kafka is used for sending (publish) and receiving (subscribe) messages between processes, servers, and applications, it is also called a Publish-Subscribe Messaging System.

By default, Kafka has effective built-in features of partitioning, fault tolerance, data replication, durability, and scalability. Because of such effective capabilities, Apache Kafka is being used by the world’s most prominent companies, including Netflix, Uber, Cisco, and Airbnb.


What are Apache Kafka Topics?

Apache Kafka has a dedicated and fundamental unit for Event or Message organization, called Topics. In other words, Kafka Topics are Virtual Groups or Logs that hold messages and events in a logical order, allowing users to send and receive data between Kafka Servers with ease.

When a Producer sends messages or events into a specific Kafka Topic, the topics will append the messages one after another, thereby creating a Log File. Furthermore, producers can Push Messages into the tail of these newly created logs while consumers Pull Messages off from a specific Kafka Topic.

By creating Kafka Topics, users can perform Logical Segregation between Messages and Events, which works the same as the concept of different tables having different types of data in a database.

In Apache Kafka, you can create any number of topics based on your use cases. However, each topic should have a unique and identifiable name to differentiate it across various Kafka Brokers in a Kafka Cluster.

Kafka Topic Partition

Apache Kafka’s single biggest advantage is its ability to scale. If Kafka Topics were constrained on a single Machine or on a single Cluster, it would pretty much become its biggest hindrance to scale. Luckily, Apache Kafka has a solution for this.

Apache Kafka divides Topics into several Partitions. For better understanding, you can imagine Kafka Topic as a giant set and Kafka Partitions to be smaller subsets of Records that are owned by Kafka Topics. Each Record holds a unique sequential identifier called the Offset, which gets assigned incrementally by Apache Kafka. This helps Kafka Partition to work as a single log entry, which gets written in append-only mode.

The way Kafka Partitions are structured gives Apache Kafka the ability to scale with ease. Kafka Partitions allow Topics to be parallelized by splitting the data of a particular Kafka Topic across multiple Brokers. Each Broker holds a subset of Records that belongs to the entire Kafka Cluster.

Apache Kafka achieves replication at the Partition level. Redundant units in Topic Partitions are referred to as Replicas, and each Partition generally contains one or more Replicas. That is, the Partition contains messages that are replicated through several Kafka Brokers in the Cluster. Each Partition (Replica) has one Server that acts as a Leader and another set of Servers that act as Followers.

A Kafka Leader replica handles all read/write requests for a particular Partition, and Kafka Followers replicate the Leader. If the Leader Server goes down for any reason, Kafka automatically promotes one of the Follower Servers to Leader. As a good practice, you should try to balance the Leaders so that each Broker is the Leader of the same number of Partitions.

When a Producer publishes a Record to a Topic, it is assigned to its Leader. The Leader adds the record to the commit log and increments the Record Offset. Kafka makes Records available to consumers only after they have been committed, and all incoming data is stacked in the Kafka Cluster.

Each Kafka Producer uses metadata about the Cluster to recognize the Leader Broker and destination for each Partition. Kafka Producers can also add a key to a Record that points to the Partition that the Record will be in, and use the hash of the key to calculate Partition. As an alternative, you can skip this step and specify Partition by yourself.
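The idea of deriving a Partition from the hash of a key can be sketched as below. This is illustrative only: `zlib.crc32` stands in for the hash function (Kafka's Java client uses murmur2 in its default partitioner), so the partition numbers will not match what a real Producer computes. The function name and the keys are made up.

```python
import zlib


def partition_for(key, num_partitions):
    """Map a record key to a partition using a hash of the key."""
    # crc32 is a stand-in hash; Kafka's Java client uses murmur2 instead.
    return zlib.crc32(key) % num_partitions


for key in (b"user-1", b"user-2", b"user-1"):
    # The same key always maps to the same partition.
    print(key, partition_for(key, 6))
```

Because the mapping depends on the partition count, changing the number of Partitions later can send existing keys to different Partitions.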

How to Create Apache Kafka Topics?

Here are the 3 simple steps to create an Apache Kafka Topic:

Step 1: Setting up the Apache Kafka Environment

In this method, you will be creating Kafka Topics using the default command-line tool, i.e., command prompt. In other words, you can write text commands in the command prompt terminal to create and configure Kafka Topics.

Below are the steps for creating Kafka topics and configuring the newly created topics to send and receive messages.

Now, Kafka and Zookeeper have started and are running successfully.

Step 2: Creating and Configuring Apache Kafka Topics

When the above command is executed successfully, you will see a message in your command prompt saying, “Created Topic Test.” With the above command, you have created a new topic called Topic Test with a single partition and one replication factor.

The command consists of attributes like Create, Zookeeper, localhost:2181, Replication-factor, Partitions:

Kafka Topics should always have a unique name for differentiating and uniquely identifying between other topics to be created in the future. In this case, you are giving a “Topic Test” as a unique name to the Topic. This name can be used to configure or customize the topic elements in further steps.

In the above steps, you have successfully created a new Kafka Topic. You can list the previously created Kafka Topics using the command given below.

You can also get the information about the newly created Topic by using the following command. The below-given command describes the information of Kafka Topics like topic name, number of partitions, and replicas.
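The list and describe operations mentioned above correspond to the `--list` and `--describe` options of the kafka-topics script. The sketch below only assembles the command lines. The script path, the Zookeeper address and the topic name `Test` are assumptions for illustration; on Windows the equivalent `.bat` script would be used, and newer releases take `--bootstrap-server` instead of `--zookeeper`.

```python
import shlex

KAFKA_TOPICS = "bin/kafka-topics.sh"  # assumed path; use the .bat script on Windows


def list_topics_command(zookeeper="localhost:2181"):
    """Build the command that lists all topics in the cluster."""
    return [KAFKA_TOPICS, "--list", "--zookeeper", zookeeper]


def describe_topic_command(topic, zookeeper="localhost:2181"):
    """Build the command that shows partitions and replicas of one topic."""
    return [KAFKA_TOPICS, "--describe", "--zookeeper", zookeeper, "--topic", topic]


print(shlex.join(list_topics_command()))
print(shlex.join(describe_topic_command("Test")))
```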

After creating topics in Kafka, you can start producing and consuming messages in the further steps. By default, you have “bin/kafka-console-producer.bat” and “bin/kafka-console-consumer.bat” scripts in your main Kafka Directory. Such pre-written Producer and Consumer Scripts are responsible for running Kafka Producer and Kafka Consumer consoles, respectively.

Initially, you have to use a Kafka Producer for sending or producing Messages into the Kafka Topic. Then, you will use Kafka Consumer for receiving or consuming messages from Kafka Topics.

For that, open a new command prompt and enter the following command.

After the command runs, you will see a blinking “>” prompt, which confirms that you are in the Producer Console.

Once the Kafka producer is started, you have to start the Kafka consumer. Open a new command window and enter the command that matches your Kafka version. If you are using an old Kafka version (earlier than 2.0), enter the command given below.

In the above commands, the Topic Test is the name of the Topic inside which users will produce and store messages in the Kafka server. The same Topic name will be used on the Consumer side to Consume or Receive messages from the Kafka Server.
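As a hedged sketch of the console commands discussed above, the snippet below assembles producer and consumer command lines for the same Topic. The script paths, the broker address `localhost:9092` and the topic name `Test` are assumptions. The `--bootstrap-server` option shown here is what recent releases accept for both tools; older producer versions may expect `--broker-list`, and very old consumer versions took `--zookeeper` instead.

```python
import shlex


def producer_command(topic, broker="localhost:9092"):
    """Build the console producer command for a topic."""
    return ["bin/kafka-console-producer.sh",
            "--bootstrap-server", broker, "--topic", topic]


def consumer_command(topic, broker="localhost:9092", from_beginning=True):
    """Build the console consumer command for the same topic."""
    command = ["bin/kafka-console-consumer.sh",
               "--bootstrap-server", broker, "--topic", topic]
    if from_beginning:
        # Read messages already stored in the topic, not only new ones.
        command.append("--from-beginning")
    return command


print(shlex.join(producer_command("Test")))
print(shlex.join(consumer_command("Test")))
```

Both commands must name the same Topic, otherwise the Consumer will not see what the Producer writes.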

After implementing the above steps, you have successfully started the Producer and Consumer Consoles of Apache Kafka. Now, you can write messages in the Producer Panel and receive Messages in the Consumer Panel.


Step 3: Send and Receive Messages using Apache Kafka Topics

Open both the Apache Kafka Producer Console and Consumer Console parallel to each other. Start typing any text or messages in the Producer Console. You can see that the messages you are posting in the Producer Console are Received and Displayed in the Consumer Console.

By this method, you have configured the Apache Kafka Producer and Consumer to write and read messages successfully.

You can navigate to the Data Directories of Apache Kafka to ensure whether the Topic Creation is successful. When you open the Apache Kafka Data Directory, you can find the topics created earlier to store messages. In the directory, such Topics are represented in the form of folders.

If you have created Partitions for your Topics, you can see one folder per Partition, named after the Topic and the Partition number, inside the same directory. In the Kafka Data Directory, you will also see several folders whose names start with “__consumer_offsets”; they belong to the internal Topic in which Kafka stores the offsets committed by Consumers.

From these steps, you can confirm and ensure that Apache Kafka is properly working for message Producing and Consuming operations.

Conclusion

In this article, you have learned about Apache Kafka, Apache Kafka Topics, and steps to create Apache Kafka Topics. You have learned the Manual Method of creating Topics and customizing Topic Configurations in Apache Kafka by the command-line tool or command prompt.

However, you can also create Topics programmatically, for example with the Kafka Admin API or, in Spring for Apache Kafka, the TopicBuilder class. By learning the manual method as a base, you can explore the programmatic approach later.


Kafka topics – Create, List, Configure, Delete

In this article, we are going to look into details about Kafka topics. We will see what exactly are Kafka topics, how to create them, list them, change their configuration and if needed delete topics.

Kafka topics:

Let’s understand the basics of Kafka topics. Topics are categories or feeds to which messages, or streams of data, are published. You can think of a Kafka topic as a file to which one or more source systems write data. Kafka topics are always multi-subscriber, which means each topic can be read by one or more consumers. Just like a file name, a topic name should be unique.

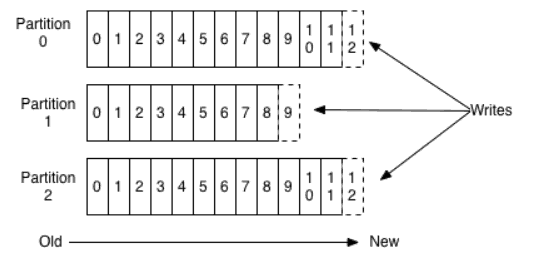

Each topic is split into one or more partitions. Each partition is an ordered, immutable sequence of records. Ordered means that when a new message is appended to a partition, it is assigned an incremental id called the offset. Each partition has its own offsets, starting from 0. Immutable means that once a message is appended to a partition, it cannot be modified. The following image represents partition data for some topic.
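The offset behaviour described above can be modelled with a tiny append-only log. This is a toy in-memory model for illustration, not how Kafka stores data on disk, and the class name `PartitionLog` is invented.

```python
class PartitionLog:
    """Toy model of one partition: an append-only list of records."""

    def __init__(self):
        self._records = []

    def append(self, value):
        """Append a record and return the offset assigned to it."""
        offset = len(self._records)  # offsets start at 0 and grow by 1
        self._records.append(value)
        return offset

    def read(self, offset):
        """Return the record stored at the given offset."""
        return self._records[offset]


log = PartitionLog()
offsets = [log.append(message) for message in ("a", "b", "c")]
print(offsets)      # prints [0, 1, 2]
print(log.read(1))  # prints b
```

The class deliberately offers no way to update or delete a record, mirroring the immutability of a partition.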

As we know, Kafka has many servers known as brokers. Each broker contains some of the partitions of Kafka topics. Each partition has one broker that acts as the leader and one or more brokers that act as followers. All reads and writes for that partition are handled by the leader, and changes are replicated to all followers. If the leader goes down for some reason, one of the followers automatically becomes the new leader for that partition.

Kafka replicates each message multiple times on different servers for fault tolerance. So, even if one of the servers goes down, we can use the replicated data from another server. Each topic has its own replication factor. Ideally, 3 is a safe replication factor in Kafka. Note that you cannot have a replication factor greater than the number of servers in your Kafka cluster, because Kafka will not keep two copies of the same data on the same server.

Creating a Topic:

Now that we have seen some basic information about Kafka topics, let’s create our first topic using Kafka commands. We can type kafka-topics in the command prompt, and it will show us details about how to create a topic in Kafka.

For creating topic we need to use the following command