How to find all pages on a website

3 Ways to Find Number of Pages on a Website

Sometimes you may want to find out how many pages a website has. It could be your own site, someone else's, or a competitor's site you want to check. If it is your own site, you can easily find the number of pages in your content management system. If the site is not yours, you can still get an approximate count with a few simple tricks. Here are three ways to find the number of pages on a website.

How to Find Number of Pages on a Website?

1. XML Sitemap

The XML Sitemap is usually the most precise way to count the number of pages without much effort. Since the Sitemap is normally publicly accessible, you can use this method for almost any website. Once you have the site URL, open the Sitemap in the browser using one of the URLs below:

http://sitename.com/sitemap.xml – For a site having single Sitemap.

http://sitename.com/sitemap_index.xml – For a site having multiple Sitemaps.

If the site uses advanced Sitemap plugins (like Yoast WordPress SEO) the count of URLs under each Sitemap will be shown.

If the count is not shown on the Sitemap, you may need to count the URLs manually, either one by one or by copying the XML into an Excel sheet. For smaller sites, you can also use online Sitemap or broken link checker tools for this purpose.
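If you'd rather not count URLs by hand, a short script can do the counting for you. Below is a minimal Python sketch, assuming the sitemap follows the standard sitemaps.org format, is publicly reachable and is not gzip-compressed; the function names are our own. It reads a sitemap and, if it turns out to be a sitemap index, follows each child sitemap and adds up the URLs.

```python
import xml.etree.ElementTree as ET
from urllib.request import urlopen

# Namespace defined by the sitemaps.org protocol (assumed; most sitemaps use it).
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def parse_sitemap(xml_data):
    """Return the root type ("urlset" or "sitemapindex") and all <loc> values."""
    root = ET.fromstring(xml_data)
    kind = root.tag.replace(SITEMAP_NS, "")
    locs = [el.text.strip() for el in root.iter(SITEMAP_NS + "loc") if el.text]
    return kind, locs


def count_pages(sitemap_url):
    """Count page URLs, recursing into child sitemaps listed in a sitemap index."""
    with urlopen(sitemap_url, timeout=10) as resp:
        kind, locs = parse_sitemap(resp.read())
    if kind == "sitemapindex":
        # Each <loc> in an index points to another sitemap, not to a page.
        return sum(count_pages(child) for child in locs)
    return len(locs)
```

For example, `count_pages("http://sitename.com/sitemap_index.xml")` would return the total number of URLs across all child sitemaps. Keep in mind that it only counts what the sitemap lists: pages missing from the sitemap won't show up.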

2. Using site: Operator

Open Google search and enter the query below, replacing sitename.com with the domain you want to check:

site:sitename.com

The results will show the number of pages Google has indexed. Though this may not be the actual count of webpages on a site, it gives an idea of how many pages are indexed on Google.

3. Search Console

Similar to the "site:" operator, Google Search Console has an option to see the total number of indexed pages on Google. But you need to be a verified owner of the site to use Search Console, or the owner needs to invite you to access the data. Once in your Search Console account, navigate to the "Google Index > Index Status" menu to see the total number of indexed pages.

The total number of indexed pages in Google search and Search Console includes different types of pages on a site. For example, the same content can be indexed via its direct URL as well as via category, tag and archive pages, and so appear multiple times in search results. You can also view the number of submitted and indexed pages for the XML Sitemap you submitted to Google under the "Crawl > Sitemaps" section of your Search Console account.

Find all pages on a website

To find the weaknesses of your site, you should perform a complete site audit. During this process, you will be able to find out the number of pages on your site. Every website owner needs to know the total number of pages to understand whether all of them have made it into the search engine index. So how do you see all the pages of a website?

You need to know how to check the number of pages on your site and on your competitor's site. How can you do this for free with a website page counter?

How many pages does a website have? In this article, we’ll take a look at four easy ways to figure this out.

Why do you need to find all the pages on a website?

By knowing how many pages are on a website, you can check whether they are all indexed and listed in the search engine database, since some pages may turn out to be non-indexable.

Find all the pages on a website and you will see whether you have many duplicate pages, which can negatively affect your site's ranking. It is also important to know which pages have errors so that you can detect and correct them.

Errors on your site's pages can significantly hinder your search engine rankings. Perform regular audits and find all URLs on a domain to know the status of your site and discover its weaknesses. There are also video guides online on how to run a site audit.

Another important piece of information is the link weight.

You should distribute link weight evenly across the pages of your site, because your search engine rankings depend on it. To do this, every page on your site needs to be linked to, and each page should carry links to other pages. This is how internal link weight is passed around your site.

Knowing the correct number of pages on your website is also essential if you want to create an XML sitemap, remove unnecessary pages or adjust internal linking.

With a complete list of pages, you can clear junk pages off the site, fix technical errors and improve its ranking.

Why are pages “isolated”?

The reasons may be different, for example:

With the help of different tools, we will find absolutely all pages. But let's take it one step at a time. First, let's export the list of all indexed and correctly working pages.

Why one tool is not enough for data collection

Let's try to collect data from three different tools: SE Ranking, Google Analytics and Google Search Console.

By comparing all the data we will get a complete list of pages on your site.

We will find the indexed URLs in the first stage. But we don't need only those: many sites have pages that no internal link leads to. These are called orphan pages.
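To see why crawlers miss orphan pages, here is a small Python sketch that models a crawler as a breadth-first traversal over internal links. The `SITE_LINKS` map is a made-up example; in practice, having a full list of pages is exactly the hard part, which is why we pull data from several tools.

```python
from collections import deque

# Hypothetical site: each page maps to the internal links it contains.
SITE_LINKS = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/post-1/", "/"],
    "/blog/post-1/": ["/blog/"],
    "/about/": ["/"],
    "/old-landing-page/": ["/"],  # links out, but no page links to it
}


def crawl(start, links):
    """Breadth-first traversal that only follows internal links, like a crawler."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


reachable = crawl("/", SITE_LINKS)
orphans = set(SITE_LINKS) - reachable
print(sorted(orphans))  # ['/old-landing-page/']
```

Starting from the home page, the traversal never reaches `/old-landing-page/`, even though that page itself links back to the site.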

Search for all pages with views in Google Analytics

Search engine crawlers find pages by clicking on internal links. So if no link on the site leads to a page, the crawler will not find it.

They can be found using data from Google Analytics, which stores information on visits to all pages. The bad news is that GA knows nothing about visits that happened before you connected analytics to your site.

Such pages will not have many views, because visitors cannot click through to them from your site. To find them, proceed as follows.

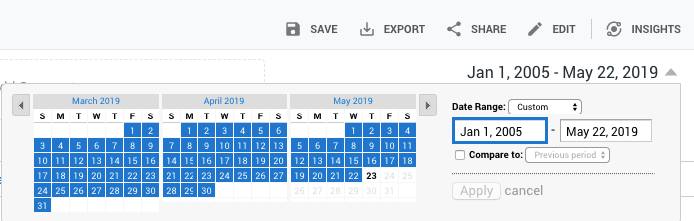

If your site has been around for a while, it's a good idea to specify the period you want data for. This matters because Google Analytics uses data sampling: it may analyze only a part of the data rather than all of it.

Next, click the Pageviews column header to sort the list in ascending order. As a result, the least viewed pages end up at the top, and the orphan pages are among them.

Move down the list until you see pages with significantly more views. These are pages that already have proper internal linking.

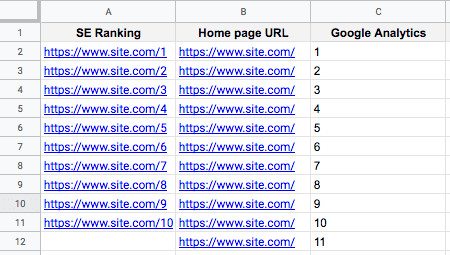
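If you export the report instead of scrolling through it, the same sort can be done in a few lines of Python. This sketch assumes a CSV export with columns named `Page` and `Pageviews` (adjust to match your file, and strip any comment lines GA adds at the top); `max_views` is a hypothetical cutoff standing in for "the point where views jump".

```python
import csv
import io


def least_viewed(csv_text, max_views):
    """Return (page, pageviews) pairs with at most max_views views, least viewed first.

    Assumes columns named "Page" and "Pageviews"; rename to match your export.
    """
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Exported numbers may use thousands separators, e.g. "1,204".
        views = int(row["Pageviews"].replace(",", ""))
        rows.append((row["Page"], views))
    rows.sort(key=lambda pair: pair[1])  # ascending, like clicking the column header
    return [pair for pair in rows if pair[1] <= max_views]
```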

Our next step is to compare the data from SE Ranking and Google Analytics, to understand which pages are not accessible by the search engine crawlers.

We exported only the end of the URL (the page path) from Google Analytics, and we want all the data in the same format. So in column B we insert the address of the site's home page, as shown in the screenshot.

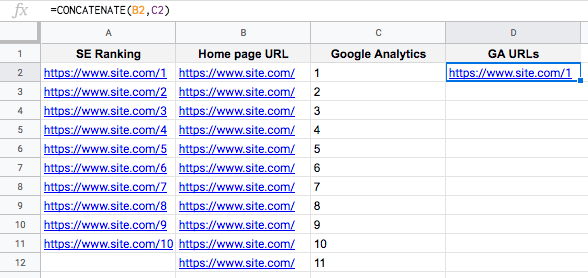

Next, use the CONCATENATE function to merge the values from columns B and C into column D, and drag the formula down to the end of the list.
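The same step in Python, as a sketch: `HOME` is a placeholder for your home page URL. We use `urljoin` rather than plain string concatenation so that a trailing slash on the home page and a leading slash on the path don't produce a double slash.

```python
from urllib.parse import urljoin

HOME = "https://sitename.com/"  # placeholder: use your own home page URL


def to_full_urls(paths, home=HOME):
    """Turn GA page paths such as "/blog/post-1/" into full URLs (like CONCATENATE)."""
    return [urljoin(home, path) for path in paths]
```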

And now for the fun part: we will compare the "SE Ranking" column with the "GA URLs" column to find orphan pages.

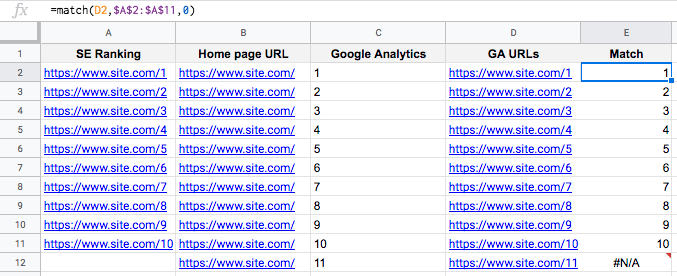

There will be a lot of pages, so analyzing them manually would take forever. Fortunately, the MATCH function lets you determine which values from the "GA URLs" column are present in the "SE Ranking" column. Enter the function in column E and drag it down to the end of the list.

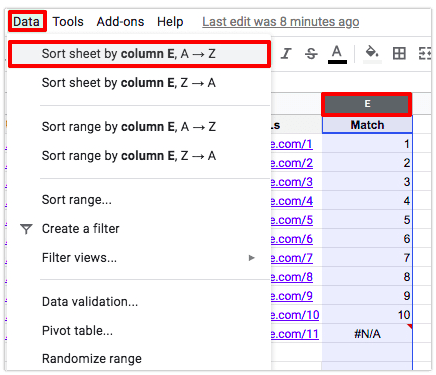

Column E shows which pages from GA are missing from the "SE Ranking" column: for those rows, the table shows an error (#N/A). To collect all the errors together, sort the data in column E alphabetically.
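Outside a spreadsheet, the MATCH check boils down to a set lookup. A minimal Python sketch, assuming both lists already contain full URLs in the same format (the function name is hypothetical):

```python
def find_orphans(crawled_urls, ga_urls):
    """Return GA URLs that never appear in the crawl (the rows where MATCH gives #N/A)."""
    crawled = set(crawled_urls)  # set membership stays fast even for thousands of URLs
    return [url for url in ga_urls if url not in crawled]
```

Any URL it returns is one that had visits but was never reached by the crawler, i.e. an orphan page candidate.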

You now have a complete list of pages that are not linked from anywhere on the site. Before moving on, examine each page: your goal is to understand what the page is, what role it plays, and why no links lead to it.

Next, there are three ways to proceed:

After working through the orphan pages, you can export and compare the lists from SE Ranking and GA once again to make sure you haven't missed anything.

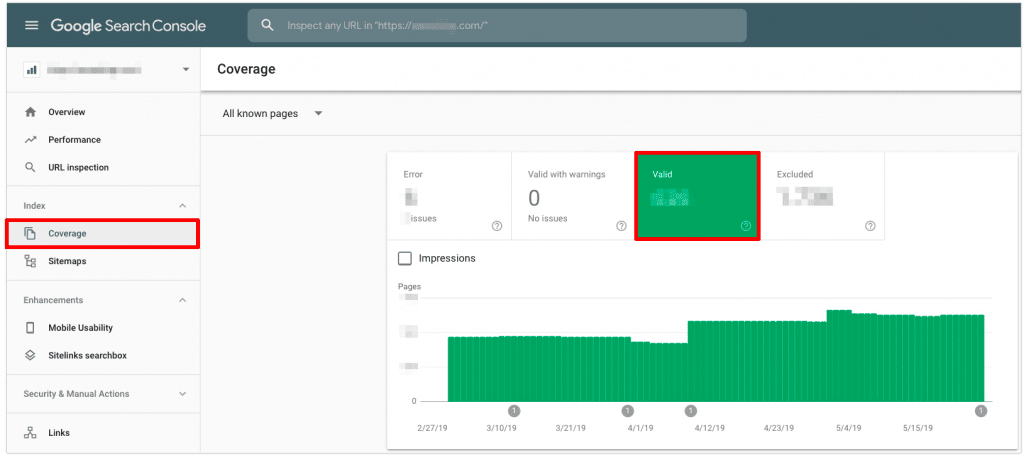

Searching for remaining pages in the Google Search Console

We have learned how to find pages that are not linked to the site. Let’s move on to the rest of the pages that Google knows about: we’ll analyze the data from Google Search Console.

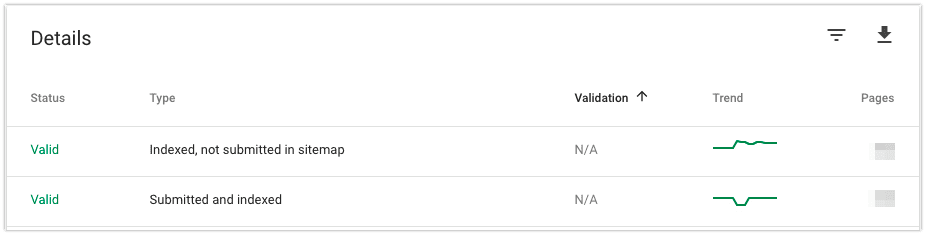

This lists the pages that are "Indexed, not submitted in sitemap", as well as those that are "Submitted and indexed".

Click a list to expand it. Examine the data carefully: there may be pages in it that you did not see in the SE Ranking and GA exports. If so, make sure they properly fulfill their role on your site.

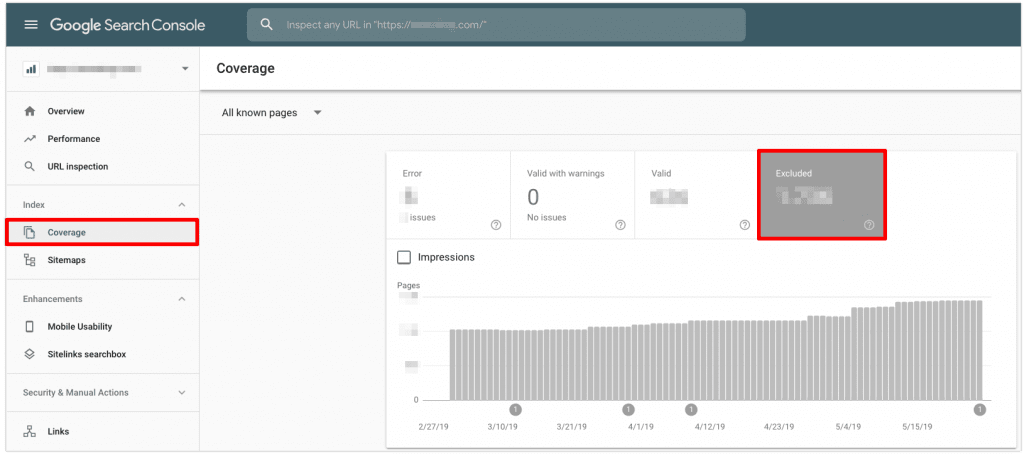

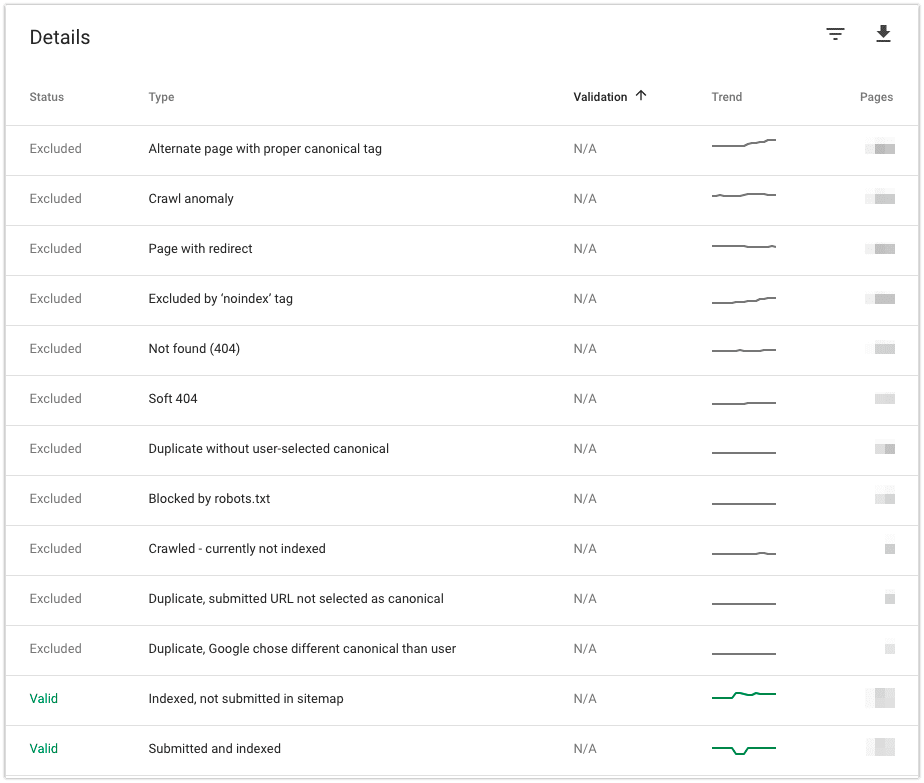

Now let’s go to the Excluded tab so that only non-indexed pages are displayed.

Most often, pages on this tab have been deliberately blocked by site owners: pages with redirects, pages with a "noindex" tag, pages blocked in the robots.txt file, and so on. This tab also helps you identify technical errors that need to be fixed.

If you find pages that you did not encounter in the previous steps, add them to the general list. This way, you will finally have a list of all the pages on your site without exception.
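Once you have URL lists from SE Ranking, Google Analytics and Search Console, merging them by hand invites duplicates. The Python sketch below is one way to do it; the normalization rules (lower-casing the scheme and host, dropping #fragments, treating an empty path as "/") are our own assumptions, so adapt them to how your site handles URLs. It also records which tool reported each URL, which helps spot pages that only one source knows about.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Lower-case scheme and host, drop the #fragment, and use "/" for an empty path."""
    parts = urlsplit(url.strip())
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, ""))


def merge_sources(**sources):
    """Map every normalized URL to the sorted list of sources that reported it."""
    merged = {}
    for source_name, urls in sources.items():
        for url in urls:
            merged.setdefault(normalize(url), set()).add(source_name)
    return {url: sorted(names) for url, names in sorted(merged.items())}
```

Usage: `merge_sources(se_ranking=crawl_urls, ga=ga_urls, gsc=gsc_urls)`, where the three lists are whatever you exported in the previous steps.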

Other ways to find out how many pages a website has

Besides a site page counter, there are other ways to find out how many pages your site or your competitor's site has. Let's look at the most popular ones.

You can create an XML sitemap file, which is very useful when you need to view all the pages of a website. The simplest way is to use a sitemap generator: it creates the file automatically, so you don't need any technical knowledge or experience with XML sitemaps.

Having an XML sitemap is an advantage for search engine ranking, because it helps search engines discover your pages. If a site audit discovers that you do not have a sitemap, the audit will flag it as a critical error.

If your site runs on a content management system (CMS) such as WordPress or Wix, you can generate a list of all your web pages from the CMS. Many plugins can collect all the links on your site in one click. This is usually simple and free, so it's worth trying when you count the pages of your website.

A log of all pages served to visitors is another way to find out how many pages your website has. Log into your cPanel and look for the raw log files. From them, you can list the pages of a website: the most frequently visited ones, the ones that have never been visited, and the ones with the highest abandonment rates.
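Raw log files can be long, so counting hits per page is easier with a script. This Python sketch assumes the logs use the Common or Combined Log Format commonly used by Apache and Nginx, and only counts successful (200) GET and HEAD requests. Note that logs include bot traffic, and a page that was never requested won't appear at all, so compare the result against your sitemap to find never-visited pages.

```python
import re
from collections import Counter

# Matches the request line and status code in Common/Combined Log Format entries,
# e.g. "GET /blog/post-1/ HTTP/1.1" 200
REQUEST_RE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3})')


def count_page_hits(lines):
    """Count successful (200) GET/HEAD requests per path."""
    hits = Counter()
    for line in lines:
        match = REQUEST_RE.search(line)
        if match and match.group("status") == "200":
            hits[match.group("path")] += 1
    return hits
```

For example, open a log file (here a placeholder named `access.log`) and pass the file object to `count_page_hits`; `hits.most_common()` then lists the most visited paths first.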

Another simple and popular way to find out how many pages a website has is to use site audit tools. There are many of them, so you can choose one your team already subscribes to, such as Netpeak Spider or Screaming Frog.

For smaller sites, a free version of such a tool may be enough to count all the pages, so you won't need to buy a subscription just for this task.

Conclusions

If you have access to the necessary tools, it is not difficult to collect all the pages of the site. Yes, you can’t do everything in two clicks, but in the process of collecting data, you will find pages that you may not have guessed existed.

Pages that neither search engine robots nor users can see bring the site no value, and neither do pages that are not indexed because of technical errors. If there are many such pages, they can hurt your SEO results.

The SEO guide to finding all web pages of a website

When running a website, SEO experts and site owners must be aware of all the web pages that are indexed by search engines. But this information alone is not enough. It’s also critical to know all the pages that aren’t visible.

Getting a list of all the web pages of a single website enables you to get a complete overview of that website, and empowers you to clean it up for improved SEO success.

In this blog post, we’ll look into why you need to be able to find all the web pages of a website, how exactly you can do that, and what to do once you have a list of all your web pages.

Why do I need to find every single page?

Search engines are constantly introducing new algorithms and applying manual penalties to pages and sites. So if you don’t have a thorough understanding of all your website’s pages — you’re tiptoeing through an SEO minefield.

To avoid a serious setback, you must keep a close eye on all the pages that make up your website. Doing so will not only cover the pages you already know about, but also help you find forgotten pages and pages you had no idea existed and would otherwise never see.

There are several possible scenarios when you have to know how to find all the web pages of a site, such as:

Now, while getting a list of all crawlable web pages isn’t that difficult of a task, obtaining a list of your lost, forgotten and orphaned pages is another story that we will focus on in more depth.

An orphan page is a web page without any internal links directing to it. In other words, such pages do not have a parent page. And without a parent, they don’t receive any authority and are left without any context, resulting in the search engines not being able to evaluate them.

For example, let’s say you were redesigning your website and accidentally removed the only link to a page without deleting the page itself. Consequently, you’ll have a page that is not connected to the website and its SEO performance will be greatly jeopardized.

However, we’re not only looking to find pages without any internal linking. We’re also tracking down other pages, such as duplicates, that may have slipped from your attention in some other way.

Common causes of abandoned pages

Let’s look into some of the most common reasons why orphaned, lost and forgotten pages may occur on your site:

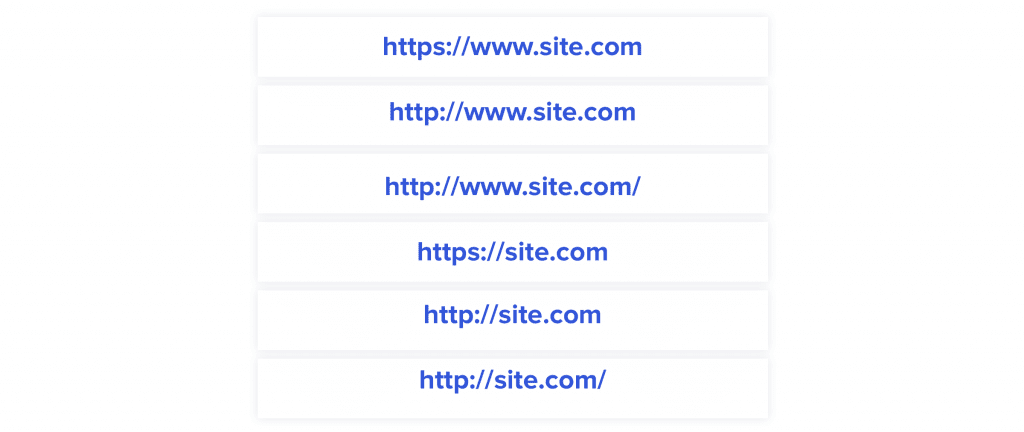

On top of that, if you don't use http or https, www or non-www, and trailing slashes consistently across every public page of your site, you may end up with new abandoned pages.

To see if everything's set up as it should be on your site, enter all the different variations of your home page into the browser (http and https, with and without www).

As long as each option redirects to the same URL, you’re good.
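If you want to check many spellings at once, a short Python script can generate the variants and follow their redirects. This is a sketch: `url_variants` and `final_destinations` are hypothetical helpers, and `example.com` is a placeholder for your bare domain (without "www."). The home page gets the four scheme and www combinations; inner pages also get a version with and without a trailing slash.

```python
from itertools import product
from urllib.error import URLError
from urllib.request import urlopen


def url_variants(domain, path=""):
    """Every http/https and www/non-www spelling of a page.

    Inner pages also get a with- and without-trailing-slash version; the home
    page only has one form ("/").
    """
    path = path.strip("/")
    variants = []
    for scheme, www in product(("http", "https"), ("", "www.")):
        base = f"{scheme}://{www}{domain}/{path}"
        variants.extend([base, base + "/"] if path else [base])
    return variants


def final_destinations(urls):
    """Follow redirects for each URL and record where it finally lands."""
    results = {}
    for url in urls:
        try:
            with urlopen(url, timeout=10) as resp:
                results[url] = resp.geturl()  # URL after any redirects
        except URLError as err:  # HTTPError (e.g. a 404) is a subclass of URLError
            results[url] = f"error: {err}"
    return results
```

If every value in `final_destinations(url_variants("example.com"))` is the same URL, your redirects are consistent.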

To recap, if you designed a web page with the ultimate goal of getting it ranked high organically — triple-check that it’s properly linked to your site so that it receives authority and has a chance of being discovered.

Using tools to find all pages of a website

Now, when it comes to finding all the web pages that belong to a single website, we're going to use three tools: SE Ranking's Website Audit, Google Analytics and Google Search Console.

Then, we’ll compare the data sets from these tools to find mismatches, and identify all the pages of your site, including those that aren’t linked to the website, and, therefore, aren’t discoverable via organic search.

Finding crawlable pages via SE Ranking’s Website Audit

Let's start by collecting all the URLs that both people and search engine crawlers can reach by following your site's internal links. Analyzing these pages should be your top priority, as they get the most attention.

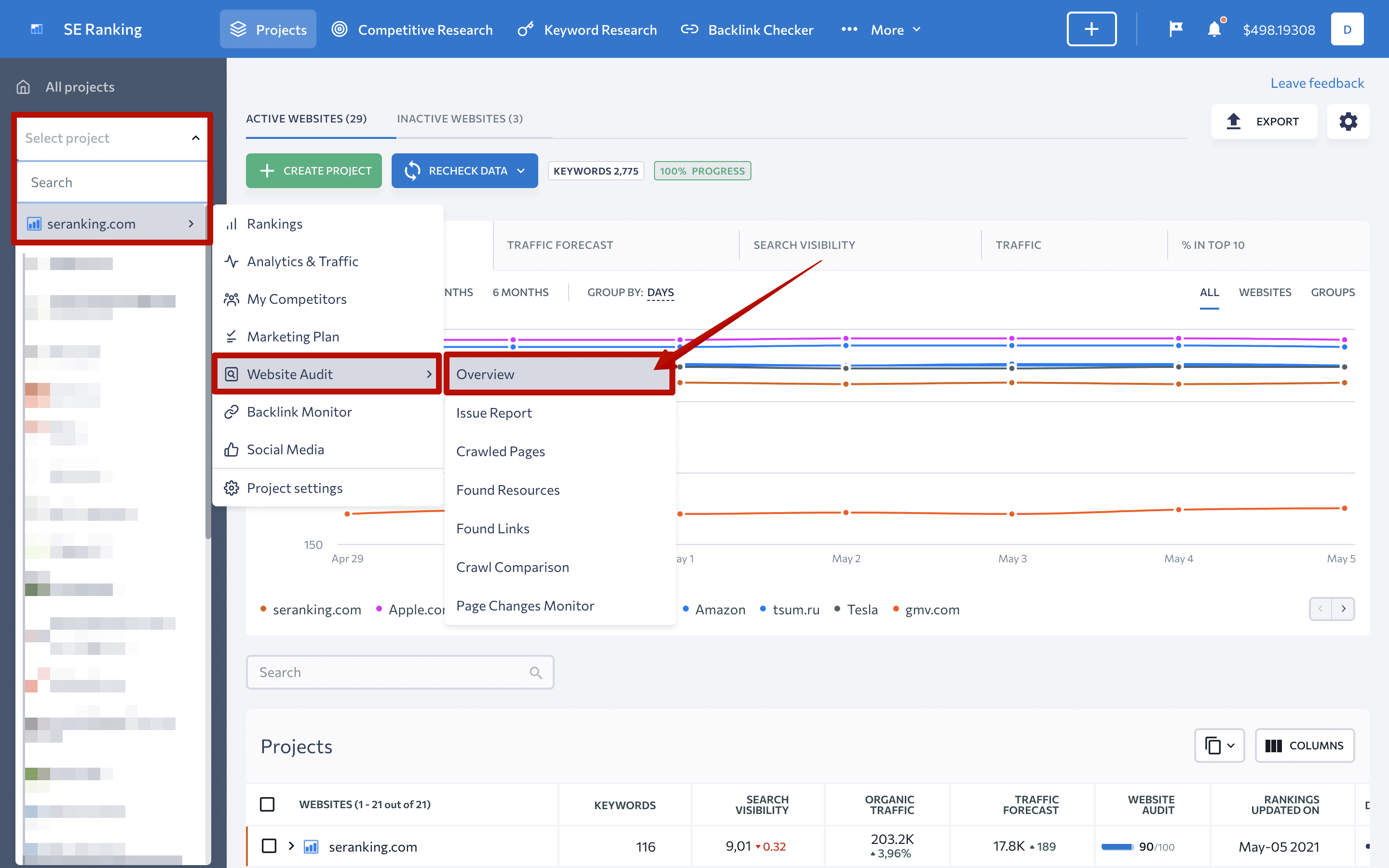

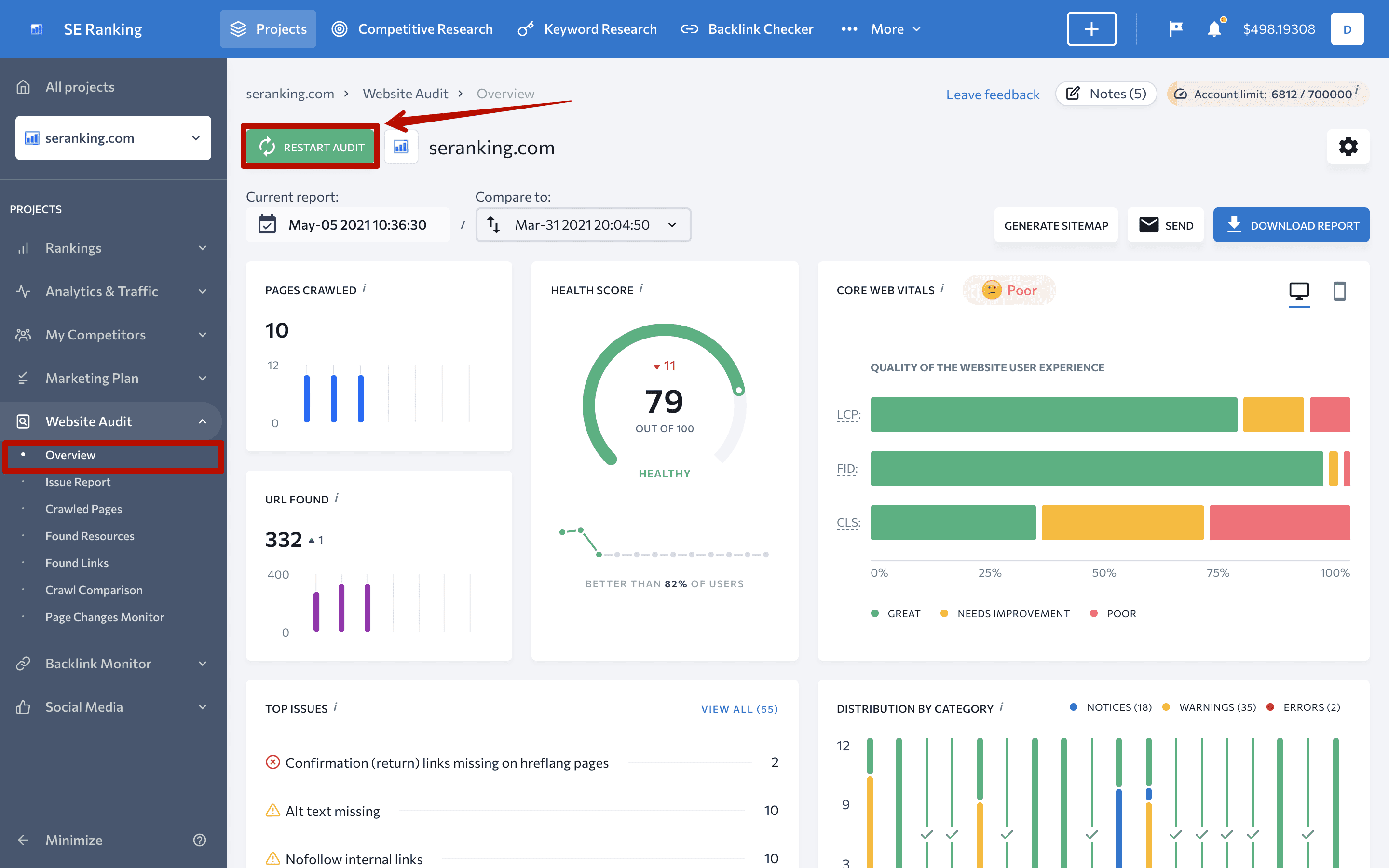

To do this, we’re going to first need to access SE Ranking, add a website or select an existing one, and then go to Website Audit → Overview.

Note: The 14-day free trial gives you access to all of SE Ranking’s available tools and features, including Website Audit.

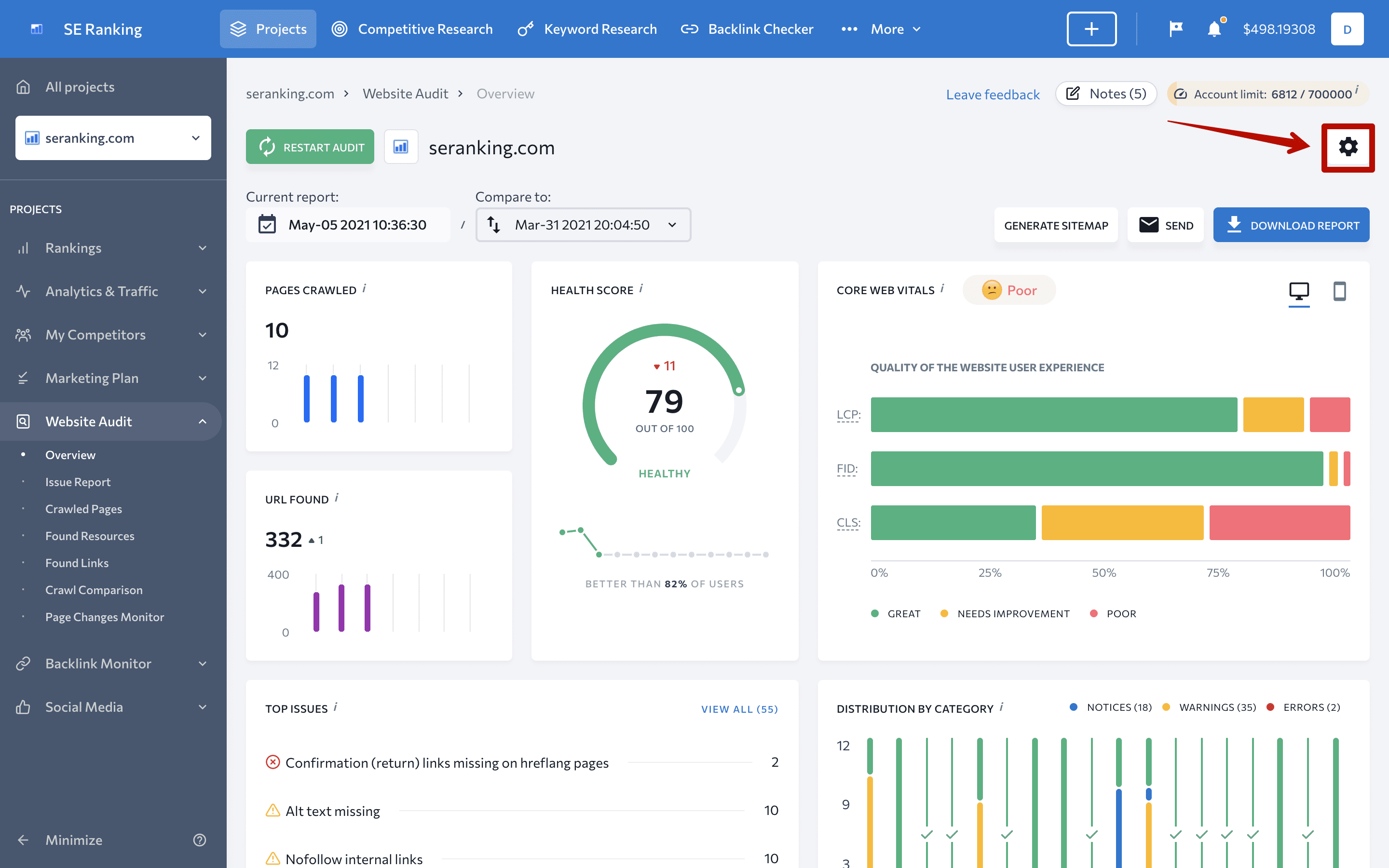

Next, let’s configure the settings to make sure we’re telling the crawler to go through the right pages. To access Website Audit settings, click on the Gear icon in the top right-hand corner:

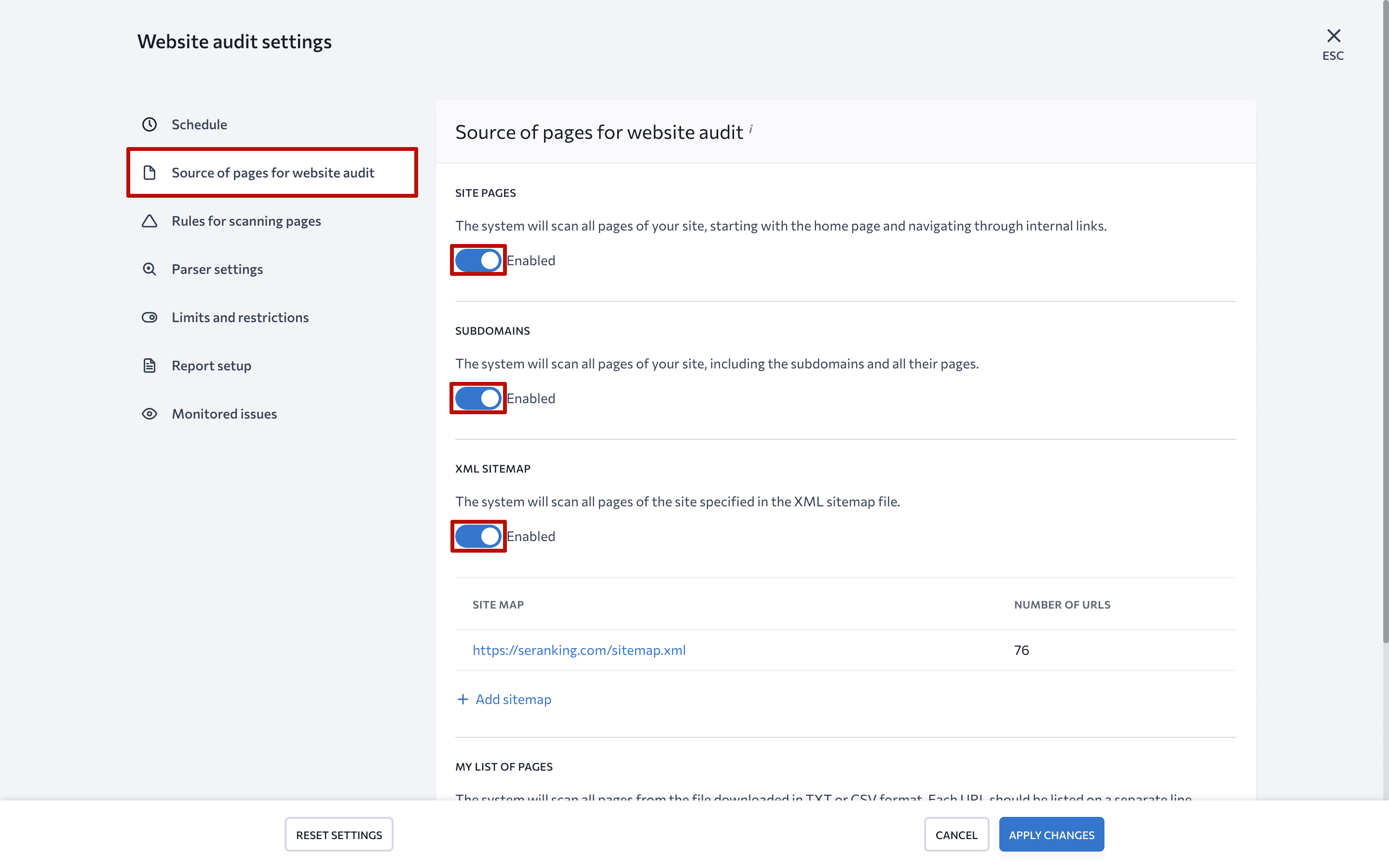

Under settings, go to the Source of pages for website audit tab and enable the system to scan Site pages, Subdomains and XML sitemap. This makes sure we're only scanning what's been explicitly specified, including the site's subdomains along with all their pages:

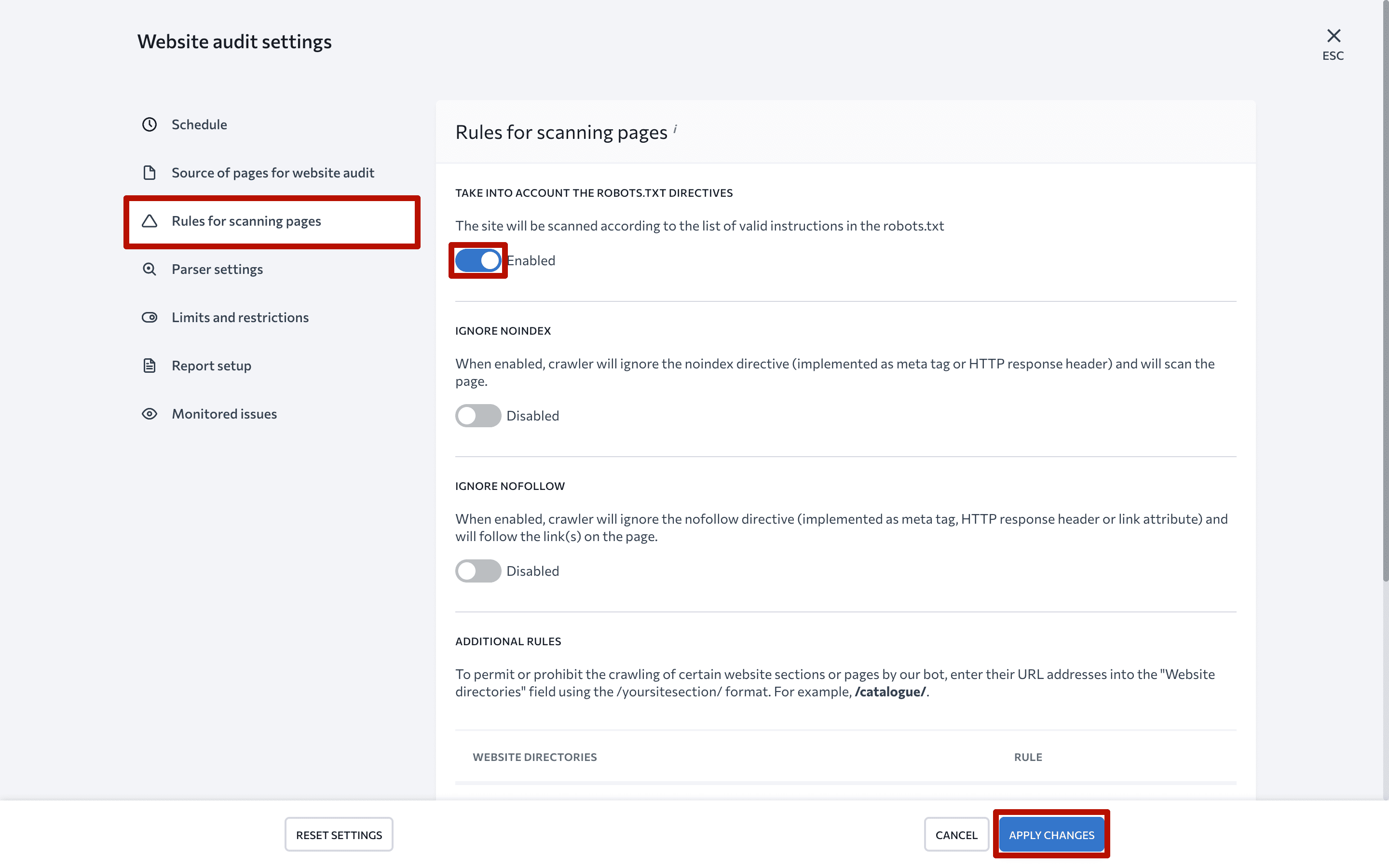

Then, go to Rules for scanning pages, and enable the Take into account the robots.txt directives option to tell the system to follow the instructions specified in the robots.txt file. Click ‘Apply Changes’ when you’re done:

Now, go back to the Overview tab and launch the audit with the new settings applied by clicking 'Restart audit':

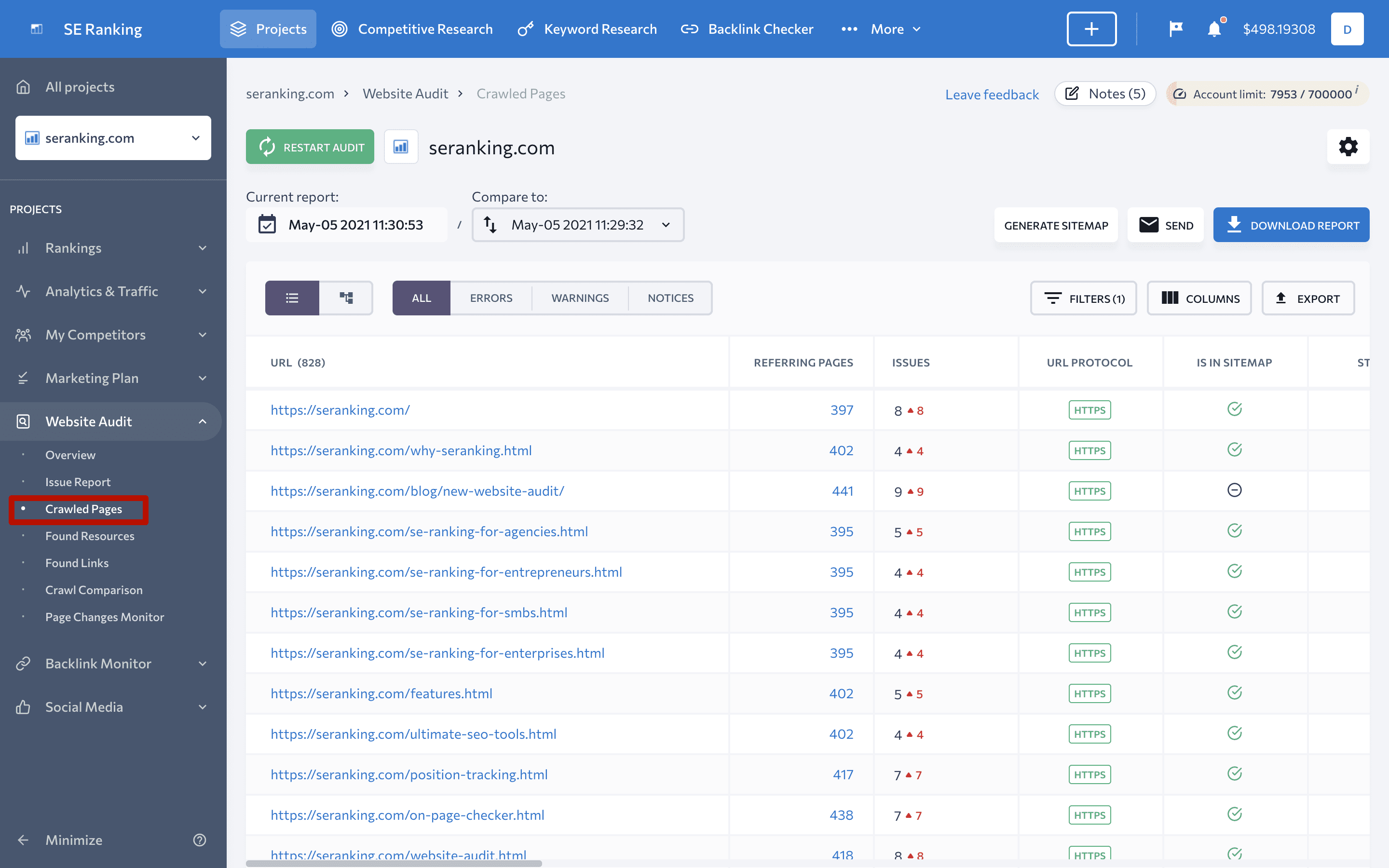

Once the audit is complete, go to Crawled Pages to view the full list of all crawlable pages:

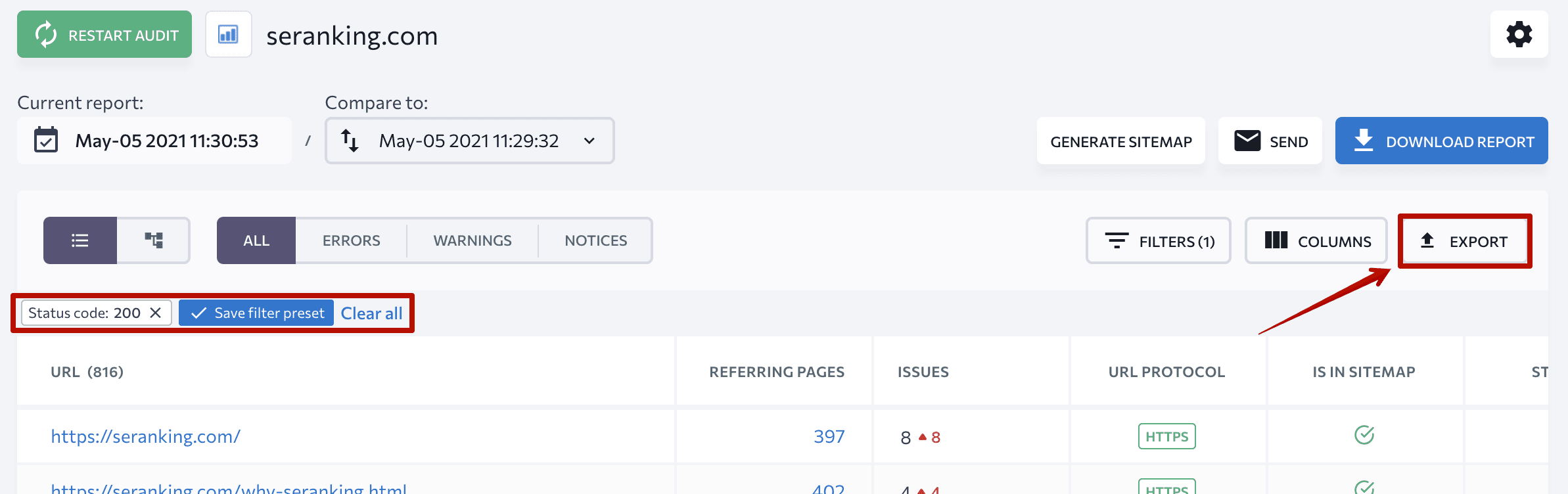

But since we only want to see pages that return a 200 status code, i.e. those that are working correctly, we need to add a filter like so:

Now it’s time to export the results:

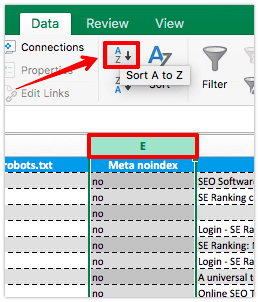

The last thing we need to do here is remove every URL that has the 'Yes' value in the Meta noindex column. In Excel, select the corresponding column and sort its data:

Finally, since we will have to compare data sets later on, we need to put the results somewhere we can easily do that. So, copy all the remaining URLs (those with the 'No' value under Meta noindex) into a spreadsheet.

(Note that you can use Excel as well, but I prefer Google Sheets.)
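If you'd rather script this filtering step, here is a Python sketch. The column names `URL`, `Status code` and `Meta noindex` are assumptions, so match them to the headers in your actual export.

```python
import csv
import io


def indexable_urls(csv_text, url_col="URL", status_col="Status code", noindex_col="Meta noindex"):
    """Keep URLs that returned 200 and whose Meta noindex value is "No".

    The default column names are assumptions; pass the headers from your export.
    """
    keep = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row[status_col].strip() == "200" and row[noindex_col].strip().lower() == "no":
            keep.append(row[url_col].strip())
    return keep
```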

Finding all pages with pageviews via Google Analytics

Since crawlers only go through pages they can reach via internal links or sitemaps, they can't find orphan pages that aren't listed in a sitemap.

For this reason, you should track down such pages by carefully studying the data in your Google Analytics account. There is only one condition: your website must be linked to your Google Analytics account from the get-go, so that it can collect data behind the scenes.

The logic here is simple: if someone has ever visited any page of your website, Google Analytics will have the data to prove it. And since these visits are made by people, we should ensure such pages serve a distinct SEO or marketing purpose.

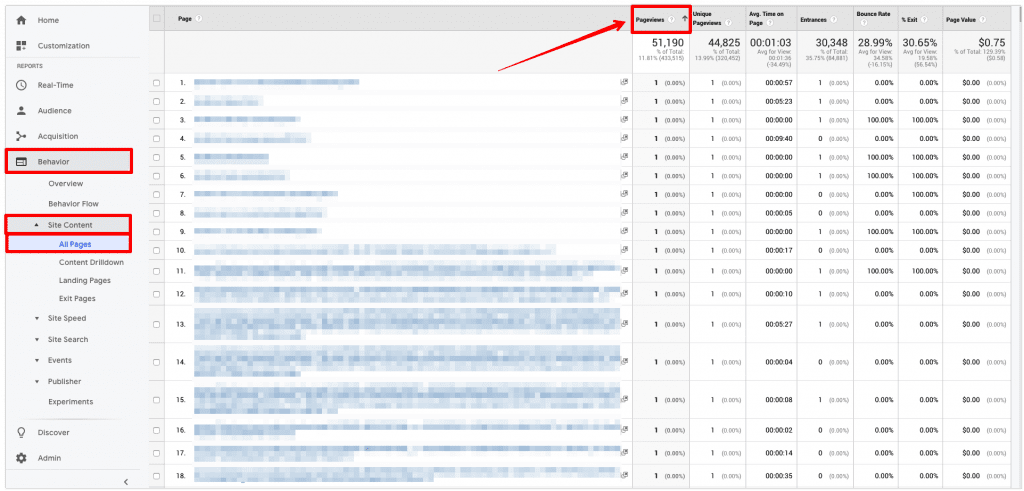

Start by going to Behavior → Site Content → All Pages. Now, we are looking for pages that are difficult (close to impossible) to find by navigating through the site. As a result, they won’t have many pageviews. Close to none, as a matter of fact.

Next, click on 'Pageviews' so the arrow points up, which sorts the page URLs from least to most pageviews. The least visited pages will then appear at the top of the list:

If your site's been up for some time, it's a good idea to set the time range to start from when you connected your site to Google Analytics, but mind the data sampling issue.

Now scroll down until you start seeing pages with far more visits than your orphan pages, and stop there. Since we sorted the pages from least to most pageviews, every orphan page that has had at least one visit should be in the rows above that point.

Singling out orphan pages

The next step is putting the data from SE Ranking and Google Analytics next to each other and comparing it to learn what web pages weren’t crawled.

The data we collected from Google Analytics contains only page paths, not full URLs, so we need to fix this. Start by inserting the URL of the home page into column B, as shown below:

Then, use the CONCATENATE() function to combine the values of columns B and C in column D, dragging cell D2 down to generate the complete list of URLs:

This is the exciting part: now we need to compare the “SE Ranking” column with the “GA URLs” column to find those lost, forgotten pages.

Obviously, the example above is just an example. In reality, you’ll have way more pages to go through and performing this task manually will take forever.

Luckily, the MATCH function checks whether each value in the "GA URLs" column is also present in the "SE Ranking" column. To use it, click cell E2, enter the function, and drag the cell all the way down to your last value.

Here’s what you should get:

As you can see, when a matching value is found, the cell shows its position in the range. But that's not what we came for: we're looking for cells where no match was found (#N/A), as is the case in cell E12.

In the example, the URL in D12 doesn't appear anywhere in the "SE Ranking" column, so E12 returns an error. This means that we've found our lucky winner: an orphan page.

Since your list will likely be much longer and not necessarily in any logical order, sort the data in column E as shown in the screenshot below to group all the errors together:

Finally, take the list of all errors, which are actually orphan pages, and insert them into a new spreadsheet. Now you can go through each page and figure out how to handle it.

What to do with orphan pages

Before you do anything else, you must look at each orphan page and understand the big picture — its role in your website and in your marketing efforts. That way, you’ll be able to decide what to do with it.

You have three ways of going about this situation:

To make sure you’ve got all your bases covered, you can run the process anew afterward using updated data.

Finding all other pages via Google Search Console

Now that we know how to find and manage all the pages of your site that have ever had any human visitors, let’s look at the pages that weren’t covered in the previous steps — those that are only accessible to Google.

We’re going to be using the data provided in your Google Search Console account to achieve this.

Start by opening your account and going to Coverage. Then, select 'All known pages' instead of 'All submitted pages' and enable only the 'Valid' status:

By doing so, you’ll get two lists of pages that have been successfully indexed by the search giant — Indexed, not submitted in sitemap and Submitted and indexed.

Click on a list to expand it and get the full list of the pages that fall under one of these two categories:

Take your time to closely study all the pages listed there to see whether any of them were not collected in the two previous steps. If there are any, check that they are set up properly within your site's structure.

Now, select 'Excluded' to view only the pages that were not indexed and won't appear in Google, whether intentionally or because of errors. Unfortunately, this is where you'll have to roll up your sleeves and do a lot of manual work:

As you scroll down, you’ll see several lists of excluded pages:

You can view pages with redirects, pages excluded by a 'noindex' tag, pages blocked by robots.txt, and so on.

Going through each one of them will give you unfiltered access to every single page of your site. Then, by comparing the orphan page data with the data in these lists, you’ll get a comprehensive overview of all of your site’s pages.

I recommend repeating this process once or twice a year to find new pages that may have gotten away from you.

Closing thoughts

In order for a search engine bot to fully crawl a website, it needs to follow the internal links one by one. But if a web page is not linked to the site in any way, be it accidentally or on purpose, then neither search engines nor humans will be able to reach the page. And this is not great for the site’s SEO performance.

If you're a site owner or an SEO specialist responsible for a site, seeing all of its pages can help you discover valuable pages you might have forgotten about.

By regularly making sure you’re aware of all the web pages of your site, including orphan pages, you will be able to stay on top of your SEO and marketing efforts.

How to Find All Pages on a Website (and Why You Need To)

Think about it. Why do you create a website? For your potential customers or audience to easily find you and for you to stand out among the competition, right? How does your content actually get to be seen? Is all the content on your site always seen?

Why you need to find all the pages on your website

It is possible that pages containing valuable information that needs to be seen do not get seen at all. If this is the case for your website, you are probably losing significant traffic, or even potential customers.

There could also be pages that are rarely seen, and when they are, users, visitors or potential customers hit a dead end because they cannot reach other pages. They can only leave. This is just as bad as pages that are never seen. Search engines may read this as a sign of poor user experience and question your site's credibility, which could push your pages lower and lower in the rankings.

How your content actually gets to be seen

For users, visitors or potential customers to see your content, it needs to be crawled and indexed, and this needs to happen frequently. What are crawling and indexing?

What is crawling and indexing?

For Google to show your content to users, visitors or potential customers, it first needs to know that the content exists. This happens through crawling: search engines look for new content and add it to their database of existing content.

What makes crawling possible?

Links:

When you add a link from an existing page to a new page, for example via anchor text, search engine bots (or spiders) can follow it to the new page and add the page to Google's 'database' for future reference.

Sitemaps:

These are also known as XML Sitemaps. Here, the site owner submits a list of all their pages to the search engine and can also include details such as the date each page was last modified. The pages are then crawled and added to the 'database'. However, this does not happen in real time: your new pages or content will not be crawled as soon as you submit your sitemap. Crawling may happen after days or weeks.

Most sites using a Content Management System (CMS) auto-generate these, so it’s a bit of a shortcut. The only time a site might not have the sitemap generated is if you created a website from scratch.
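If you do need to produce a sitemap yourself, the format is simple. This Python sketch, assuming you already have a list of page URLs and optional last-modified dates (the sample data and function name are hypothetical), builds a minimal sitemaps.org-style file with `<loc>` and `<lastmod>` entries:

```python
import xml.etree.ElementTree as ET

SITEMAP_XMLNS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is "YYYY-MM-DD" or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_XMLNS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes characters like &
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
print(build_sitemap([
    ("https://sitename.com/", "2024-01-15"),
    ("https://sitename.com/about/", None),
]))
```

Save the output as sitemap.xml at the root of your site, then submit it to the search engine as described above.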

CMS:

If your website is powered by a CMS like Blogger or Wix, the hosting provider (in this case the CMS) is able to ‘tell search engines to crawl any new pages or content on your website.’

Here’s some information to help you with the process:

What is indexing?

Indexing, in simple terms, is adding the crawled pages and content to Google's 'database', which is actually called Google's index.

Before the content and pages are added to the index, search engine bots try to understand each page and its content. They even catalog files such as images and videos.

This is why on-page SEO comes in handy for webmasters (page titles, headings and alt text, among others). When your pages have these elements, it becomes easier for Google to 'understand' your content, catalog it appropriately and index it correctly.

Using robots.txt

Sometimes you may not want some pages, or parts of a website, to be crawled or indexed. In that case, you need to give directives to search engine bots. Such directives also make crawling and indexing easier, as fewer pages need to be crawled. Learn more about robots.txt here.
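To check which of your URLs a robots.txt file blocks, Python's standard library includes a robots.txt parser. A minimal sketch, with a hypothetical helper name and made-up rules and URLs; note that `urllib.robotparser` uses simple prefix matching and doesn't implement the `*` and `$` wildcards Google supports inside paths, so treat its answers as approximate:

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules and URLs, for illustration only.
ROBOTS_TXT = "User-agent: *\nDisallow: /admin/\nDisallow: /cart\n"
print(blocked_urls(ROBOTS_TXT, [
    "https://sitename.com/blog/",
    "https://sitename.com/admin/login",
    "https://sitename.com/cart?id=1",
]))
```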

Using ‘noindex’

You can also use another directive, noindex, for pages that you do not want to appear in search results. Learn more about noindex.
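To find out whether a page already carries a noindex directive, you can look for the robots meta tag in its HTML. This Python sketch only checks `<meta name="robots">` and `<meta name="googlebot">` tags; it doesn't cover the `X-Robots-Tag` HTTP header, which can also carry noindex. The class and function names are our own.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lower-cases tag and attribute names; values keep their case.
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            for directive in values.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html):
    """True if the page's robots meta tags include a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives
```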

Before you start adding noindex, you’ll want to identify all of your pages so you can clean up your site and make it easier for crawlers to crawl and index your site properly.

What are some reasons why you need to find all your pages?

What are orphan pages?

An orphan page can be defined as one that has no links from other pages on your site. This makes it almost impossible for search engine bots, and for users, to find it. If the bots cannot find the page, they will not show it in search results, which further reduces the chances of users finding it.

How do orphan pages come about?

Orphan pages may result from an attempt to keep content private, syntax errors, typos, duplicate content or expired content that was not linked. Here are more ways:

How about dead-end pages?

Unlike orphan pages, dead-end pages are linked to from other pages on the website but do not link out to any other pages. Examples of dead-end pages include thank-you pages, service pages with no calls to action, and the "nothing found" pages users see when an on-site search returns no results.

When you have dead-end pages, people who visit them only have two options: leave the site or go back to the previous page. That means you are losing significant traffic, especially if these pages happen to be 'main pages' on your website. Worse still, users are left frustrated, confused or wondering 'what's next?'

If users leave your site feeling frustrated, confused or with any other negative emotion, they are unlikely to ever come back, just as unhappy customers are unlikely to buy from a brand again.
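If you have a map of your internal links (for example, from a site audit export), flagging dead-end candidates is straightforward. This Python sketch treats a page as a dead end when it has no links to any other page on the site; the link map and function name are made-up examples.

```python
def dead_end_pages(links):
    """Return pages that link to no other page on the site, sorted alphabetically."""
    dead_ends = []
    for page, targets in links.items():
        # A link back to the page itself doesn't give visitors anywhere new to go.
        onward = {target for target in targets if target != page}
        if not onward:
            dead_ends.append(page)
    return sorted(dead_ends)


# Hypothetical internal-link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/services/", "/about/"],
    "/about/": ["/"],
    "/services/": [],
    "/thank-you/": ["/thank-you/"],
}
print(dead_end_pages(SITE_LINKS))  # ['/services/', '/thank-you/']
```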

Where do dead-end pages come from?

Dead-end pages often result from a lack of calls to action. An example would be an About page that mentions the services your company offers but does not link to them. Once readers understand what drives your company, the values you uphold, how the company was founded and the services you offer, and are already excited, you need to tell them what to do next.

A simple 'View our services' call-to-action button will do the job. Make sure the button actually opens the services page when clicked: you do not want users to be served a 404, which will leave them frustrated as well.

What are hidden pages?

Hidden pages are those that are not accessible via a menu or navigation. Though a visitor may be able to view them, especially through anchor text or inbound links, they can be difficult to find.

Pages in the category section are also likely to be hidden pages, as they are located in the admin panel. Search engines may never be able to reach them, since crawlers do not access information stored in databases.

Hidden pages can also result from pages that were never added to the site’s sitemap but exist on the server.

Should all hidden pages be done away with?

Not really. Some hidden pages are absolutely necessary and should never be reachable from your navigation. Let's look at some examples:

Newsletter sign ups

You can have a page that breaks down the benefits of signing up to the newsletter, how frequently users should expect to receive it, or a graphic showing the newsletter (or previous newsletter). Remember to include the sign up link as well.

Pages containing user information

Pages that require users to share their information should definitely be hidden. Users need to create accounts before they can access them. Newsletter sign ups can also be categorized here.

How to find hidden pages

As mentioned, you can find hidden pages using all the methods used to find orphan or dead-end pages. Let's explore a few more.

Using robots.txt

Hidden pages are very likely to be hidden from search engines via robots.txt. To view a site's robots.txt, type [domain name]/robots.txt into a browser and press Enter, replacing 'domain name' with your site's domain name. Look out for lines beginning with 'Disallow'.
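To pull the Disallow rules out of a robots.txt file without reading it line by line, here's a short Python sketch. It is a simplified parser that ignores which `User-agent` group each rule belongs to; `fetch_robots` is a hypothetical helper that assumes the site is served over HTTPS.

```python
from urllib.request import urlopen


def disallowed_paths(robots_txt):
    """Return the value of every Disallow line, skipping comments and empty rules."""
    paths = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        field, sep, value = line.partition(":")
        # An empty "Disallow:" means "allow everything", so it is skipped.
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


def fetch_robots(domain):
    """Download robots.txt; assumes the site is reachable over HTTPS."""
    with urlopen(f"https://{domain}/robots.txt", timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

For example, `disallowed_paths(fetch_robots("sitename.com"))` lists the paths worth opening by hand to see what is behind them.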

Manually finding them

If you sell products on your website, for example, and suspect that one of your product categories may be hidden, you can look for it manually. To do this, copy another product's URL and edit it accordingly. If you don't find it, then you were right.

What if you have no idea what the hidden pages could be? If your website is organized into directories, you can enter yourdomain/folder-name into the browser's address bar and navigate through the pages and subdirectories.

Once you have found your hidden pages (and they don't need to stay hidden, as discussed above), add them to your sitemap and submit a crawl request.

How to find all the pages on your site

You need to find all your web pages in order to know which ones are dead-end or orphan. Let’s explore the different ways to achieve this:

Using your sitemap file

We have already looked at sitemaps. Your sitemap would come in handy when analyzing all of your web pages. If you do not have a sitemap, you can use a sitemap generator to generate one for you. All you need to do is enter your domain name and the sitemap will be generated for you.

Using your CMS

If your site is powered by a content management system (CMS) like WordPress and your sitemap doesn’t contain all the links, you can generate a list of all your web pages from the CMS. To do this, use a plugin like Export All URLs.
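If you can’t install a plugin, one alternative (not the plugin method above) is the WordPress REST API, which many WordPress sites expose under `/wp-json/wp/v2/`. The Python sketch below pages through the `posts` and `pages` endpoints and collects each item’s `link` field, using the `X-WP-TotalPages` response header to know when to stop. It assumes the REST API is enabled and publicly readable; it only sees published content, and custom post types would need their own endpoints. The function names are hypothetical.

```python
import json
from typing import Callable, Dict, List, Tuple
from urllib.request import urlopen

# A fetcher returns (lower-cased response headers, parsed JSON body).
Fetcher = Callable[[str], Tuple[Dict[str, str], list]]


def http_fetch(url: str) -> Tuple[Dict[str, str], list]:
    with urlopen(url) as resp:
        headers = {k.lower(): v for k, v in resp.headers.items()}
        return headers, json.load(resp)


def wordpress_urls(site: str, fetch: Fetcher = http_fetch,
                   post_types=("posts", "pages")) -> List[str]:
    """Collect the public URL of every published post and page."""
    base = site.rstrip("/")
    urls = []
    for post_type in post_types:
        page = 1
        while True:
            # per_page is capped at 100; _fields=link keeps responses small.
            endpoint = (f"{base}/wp-json/wp/v2/{post_type}"
                        f"?per_page=100&page={page}&_fields=link")
            headers, items = fetch(endpoint)
            urls.extend(item["link"] for item in items)
            # WordPress reports how many result pages exist in X-WP-TotalPages.
            if page >= int(headers.get("x-wp-totalpages", "1")):
                break
            page += 1
    return urls


# Usage (placeholder domain):
# print(len(wordpress_urls("https://example.com")))
```

The `fetch` parameter is there so the paging logic can be tried against canned responses before pointing it at a live site.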

Using a log

A log of all the pages served to visitors also comes in handy. To access it, log in to your cPanel and find ‘raw log files’, or ask your hosting provider to share it. The log shows which pages are visited most often and which have the highest drop-off rates, and comparing it with your full URL list shows which pages are never visited. Pages with high bounce rates or no visitors could be dead-end or orphan pages.
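Raw access logs are plain text, so they are easy to summarise with a script. The Python sketch below assumes the widely used Apache/Nginx “combined” log format (check what your host uses); it counts successful (2xx) hits per path and ignores query strings. The function name is hypothetical, and you may also want to filter out images, CSS and other non-page files.

```python
import re
from collections import Counter

# Matches the quoted request line and the status code in a combined-format log line.
REQUEST_RE = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3})')


def page_hits(log_lines) -> Counter:
    """Count successful (2xx) hits per path, ignoring query strings."""
    hits = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match and match.group("status").startswith("2"):
            path = match.group("path").split("?", 1)[0]
            hits[path] += 1
    return hits


sample_log = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [10/Oct/2023:13:56:01 +0000] "GET /blog/?page=2 HTTP/1.1" 200 4980 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [10/Oct/2023:13:57:12 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0"',
]
print(page_hits(sample_log).most_common())  # [('/blog/', 2)]
```

On a real log file you could pass the open file directly, for example `page_hits(open("access.log", encoding="utf-8", errors="replace"))`, where `access.log` is a placeholder name.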

Using Google Analytics

Here are the steps to follow:

Step 1: Log in to your Google Analytics account.

Step 2: Go to ‘Behavior’, then ‘Site Content’.

Step 3: Go to ‘All Pages’.

Step 4: Scroll to the bottom and, on the right, choose ‘Show rows’.

Step 5: Select 500 or 1000, depending on how many pages you estimate your site has.

Step 6: Scroll back up and, at the top right, choose ‘Export’.

Step 7: Choose ‘Excel (XLSX)’ as the export format.

Step 8: Once the Excel file is exported, open the ‘Dataset1’ sheet.

Step 9: Sort by ‘Unique Pageviews’.

Step 10: Delete all rows and columns except the one containing your URLs.

Step 11: Use this formula in the second column:

Step 12: Replace the domain with your site’s domain, then drag the formula down so that it applies to the other cells as well.

You now have all your URLs.

If you want to convert them to hyperlinks so you can click through to them easily, go on to step 13.

Step 13: Use this formula in the third column:

Drag the formula down so that it applies to the other cells as well.
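If you’d rather not work with spreadsheet formulas, the Python sketch below covers the same two jobs as steps 11 to 13: it prefixes each exported page path (such as /blog/post-1) with your domain, then wraps each URL in a spreadsheet `HYPERLINK` formula so it becomes clickable. `https://www.example.com` is a placeholder for your own domain, and the function names are hypothetical.

```python
from urllib.parse import urljoin


def absolute_urls(paths, domain="https://www.example.com"):
    """Turn page paths from an Analytics export into full URLs."""
    # urljoin takes care of the slash between the domain and the path.
    return [urljoin(domain, path.strip()) for path in paths if path.strip()]


def hyperlink_formulas(urls):
    """Build HYPERLINK formulas (Excel and Google Sheets) for each URL."""
    return [f'=HYPERLINK("{url}")' for url in urls]


urls = absolute_urls(["/", "/blog/post-1", "/contact"])
print(urls)
# ['https://www.example.com/', 'https://www.example.com/blog/post-1', 'https://www.example.com/contact']
print(hyperlink_formulas(urls)[1])
# =HYPERLINK("https://www.example.com/blog/post-1")
```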

Manually typing into Google’s search query

You can also type site:abc.com into Google’s search box, with no space after the colon, replacing ‘abc.com’ with your domain name. The results list the URLs on your domain that Google has crawled and indexed.

You can then copy each URL manually and paste it into an Excel spreadsheet.

What then do you do with your URL list?

At this point, you may be wondering what you need to do with your URL list. Let’s look at the available options:

Manual comparison with log data

One option is to manually compare your URL list with your server log and identify the pages that get no traffic at all or have the highest bounce rates. You can then use a tool like ours to check the inbound and outbound links of each page you suspect is an orphan or dead-end page.

Another approach is to copy both data sets, your log and your URL list, into Google Sheets, putting the log data in the first (left) column, A, and your URL list in column B. In column C, enter =VLOOKUP(B1, A:A, 1, FALSE) and drag it down to look up each URL from your list in the log. URLs that return #N/A are in your URL list but not in your log, and should be treated as likely orphan pages.
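The same lookup is a set difference, which is quick to do in Python if your lists are long. This is a minimal sketch assuming both lists hold paths (or full URLs) written the same way; the function name is hypothetical, and it treats `/about` and `/about/` as the same page, which may not hold on every site.

```python
def never_visited(url_list, log_urls):
    """Return URLs from the full list that never appear in the log, in list order."""
    # Normalise trailing slashes so "/about" and "/about/" match.
    seen = {u.strip().rstrip("/") for u in log_urls}
    return [u for u in url_list if u.strip().rstrip("/") not in seen]


all_urls = ["/", "/about/", "/blog/post-1", "/landing/spring-sale"]
log = ["/", "/about", "/blog/post-1"]
print(never_visited(all_urls, log))  # ['/landing/spring-sale']
```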

Using site crawling tools

The other option is to load your URL list into a tool that can crawl your site, wait for the crawl to finish, then copy the URLs into a spreadsheet and analyze them one by one to work out which ones are orphan or dead-end pages.
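To see what such a tool does under the hood, here is a minimal breadth-first crawler in Python. It is a sketch, not a replacement for a full crawler: it follows `<a href>` links on the starting host only, treats any HTTP error response as a broken page, ignores robots.txt and JavaScript-generated links, and stops after a hypothetical `limit` of 500 URLs. Pages that no other page links to are never reached this way, which is why comparing a crawl with your sitemap or log is useful.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.error import HTTPError
from urllib.parse import urldefrag, urljoin, urlsplit
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def http_get(url: str) -> Optional[str]:
    """Return the page HTML, or None if the server answers with an HTTP error."""
    try:
        with urlopen(url) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except HTTPError:
        return None


def crawl(start: str, fetch: Callable[[str], Optional[str]] = http_get, limit: int = 500):
    """Breadth-first crawl of one host; returns (pages found, broken URLs)."""
    host = urlsplit(start).netloc
    queue, seen = deque([start]), {start}
    found, broken = [], []
    while queue and len(found) + len(broken) < limit:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            broken.append(url)
            continue
        found.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            link = urldefrag(urljoin(url, href))[0]  # absolute URL without #fragment
            if urlsplit(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return found, broken


# Usage (placeholder domain):
# pages, errors = crawl("https://example.com/")
```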

These two options can be time-consuming, especially if you have many pages on your site, right?

Well, how about a tool that not only finds all your URLs but also lets you filter them and shows their status, so you know which ones are dead-end or orphan pages? In other words, if you want a shortcut to finding all of your site’s pages, try SEOptimer’s SEO Crawl Tool.

SEOptimer’s SEO Crawl Tool

This tool lets you access all the pages of your site. Start by going to “Website Crawls”, enter your website URL and hit “Crawl”.

Once the crawl is finished, click “View Report”.

Our crawl tool detects all the pages of your website and lists them in the “Pages Found” section of the crawl.

You can identify “404 Error” issues in the “Issues Found” section, just beneath the “Pages Found” section.

Our crawler can also identify other issues, such as pages with a missing title or meta description. Once you have found all of your pages, you can start filtering them and working on the issues at hand.
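As a rough illustration of this kind of check (not how SEOptimer’s tool works internally), here is a Python sketch that parses a page’s HTML with the standard library and reports a missing or empty `<title>` or meta description. The class and function names are hypothetical; you could run it over the pages a crawl returns.

```python
from html.parser import HTMLParser


class HeadChecker(HTMLParser):
    """Record a page's <title> text and meta description content."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""
        self.description = ""

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def missing_tags(html: str) -> list:
    """Return a list of problems found in the page's head tags."""
    checker = HeadChecker()
    checker.feed(html)
    problems = []
    if not checker.title.strip():
        problems.append("missing title")
    if not checker.description:
        problems.append("missing meta description")
    return problems


print(missing_tags("<html><head><title>Home</title></head></html>"))
# ['missing meta description']
```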

In conclusion

In this article we have looked at how to find all the pages on your site and why this is important. We have also explored orphan, dead-end and hidden pages, how they differ, and how to identify each of them among your URLs. There is no better time to find out whether you are losing out due to hidden, orphan or dead-end pages.

To know the weaknesses of your site, you need to do a full site audit. During this process, you will find out how many pages your site has. Every website owner needs to know the number of pages to check whether all of them have made it into the search engine index. So how can you see all the pages of a website?

You need ways to check the number of pages on both your own site and your competitors’ sites. How can you do this for free with a website page counter?

How many pages does a website have? In this article, we’ll look at four simple ways to find this out.

Why do you need to find all pages on a website?

Once you know how many pages a website has, you can check whether they are all indexed in the search engine’s database, since some pages may be non-indexable. Finding all the pages on a website also shows whether you have a lot of duplicate pages, which hurt your site’s rankings in search results. It’s also important to know which pages have errors, so you can detect and fix them.

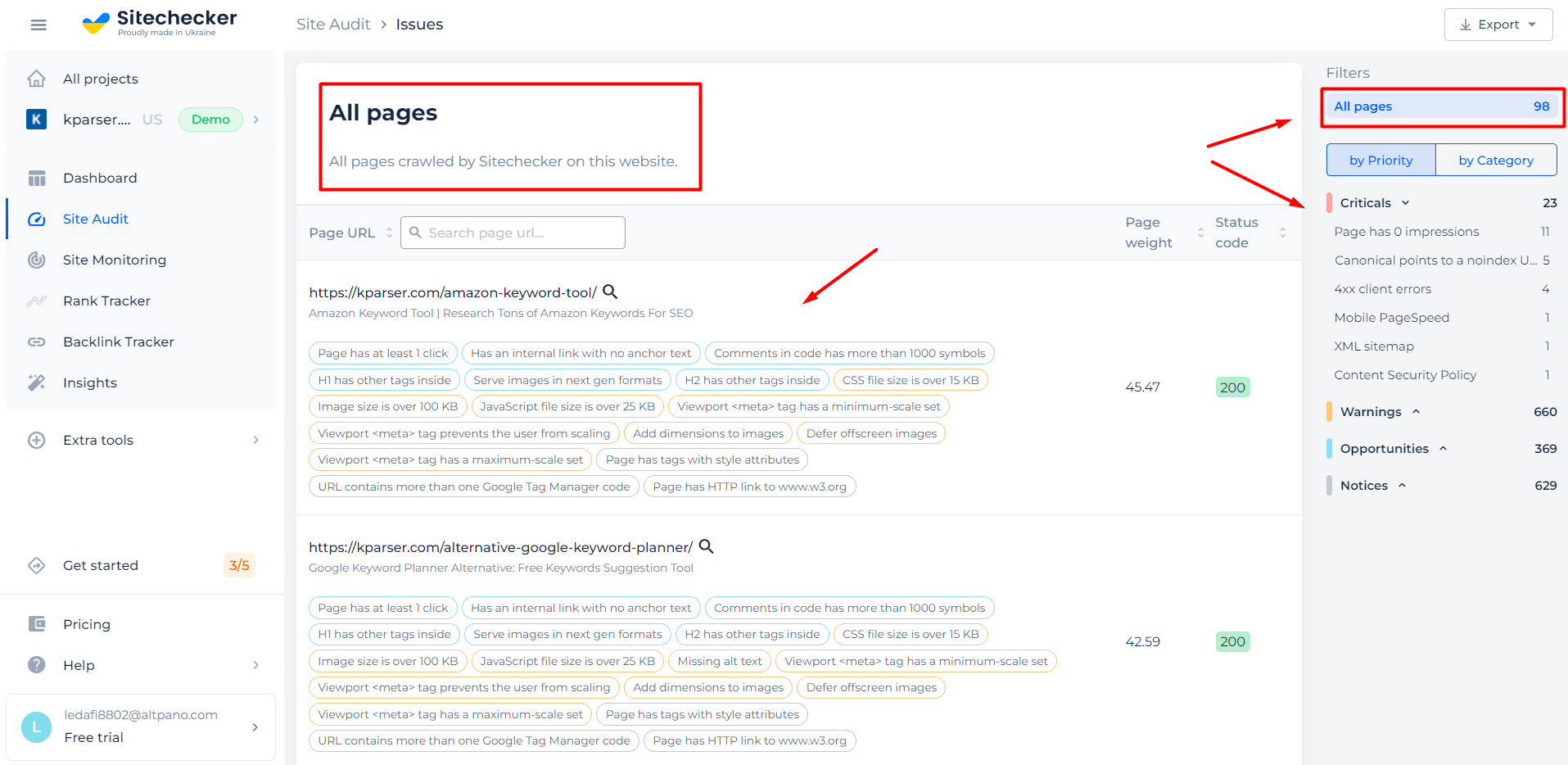

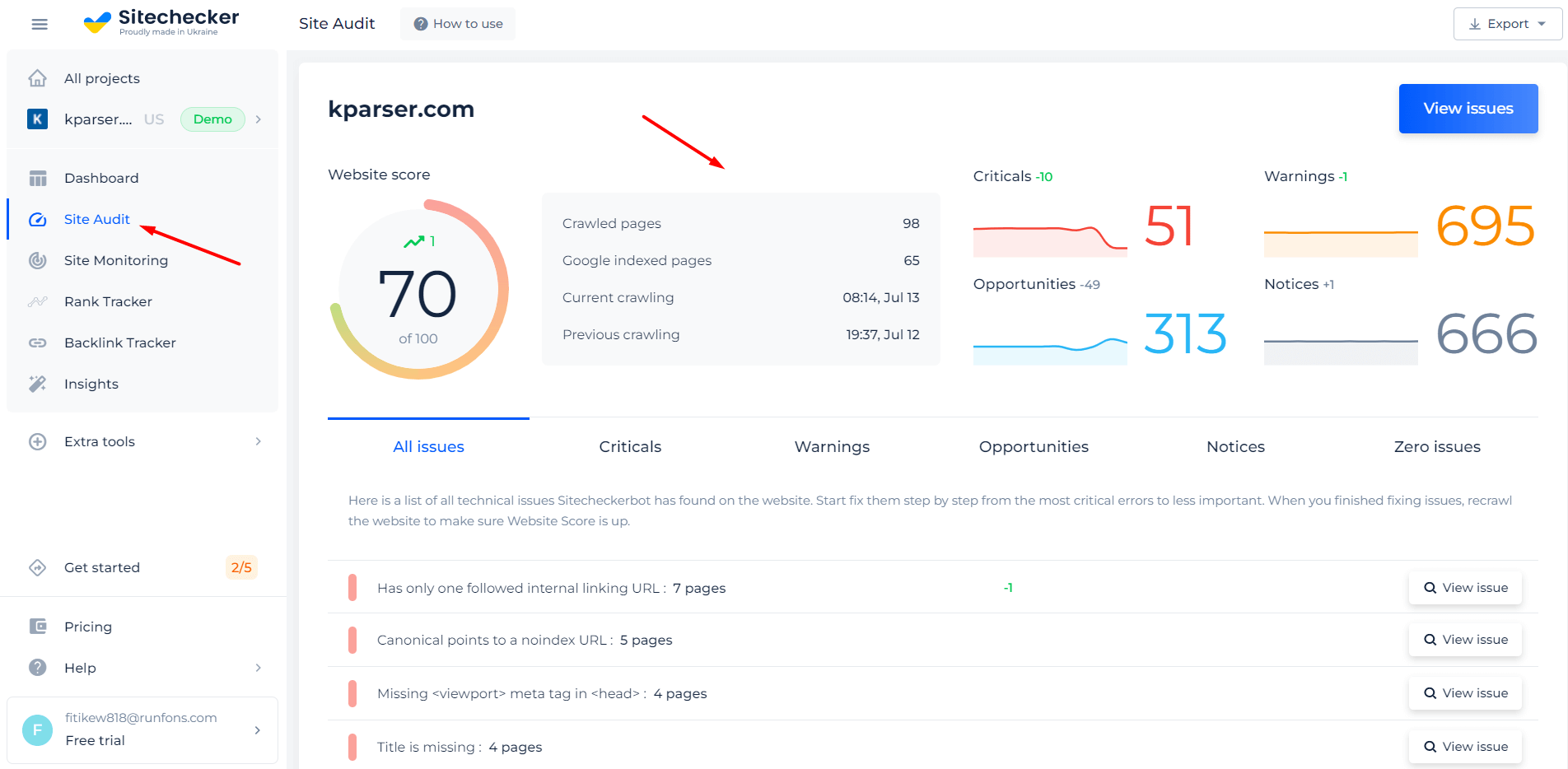

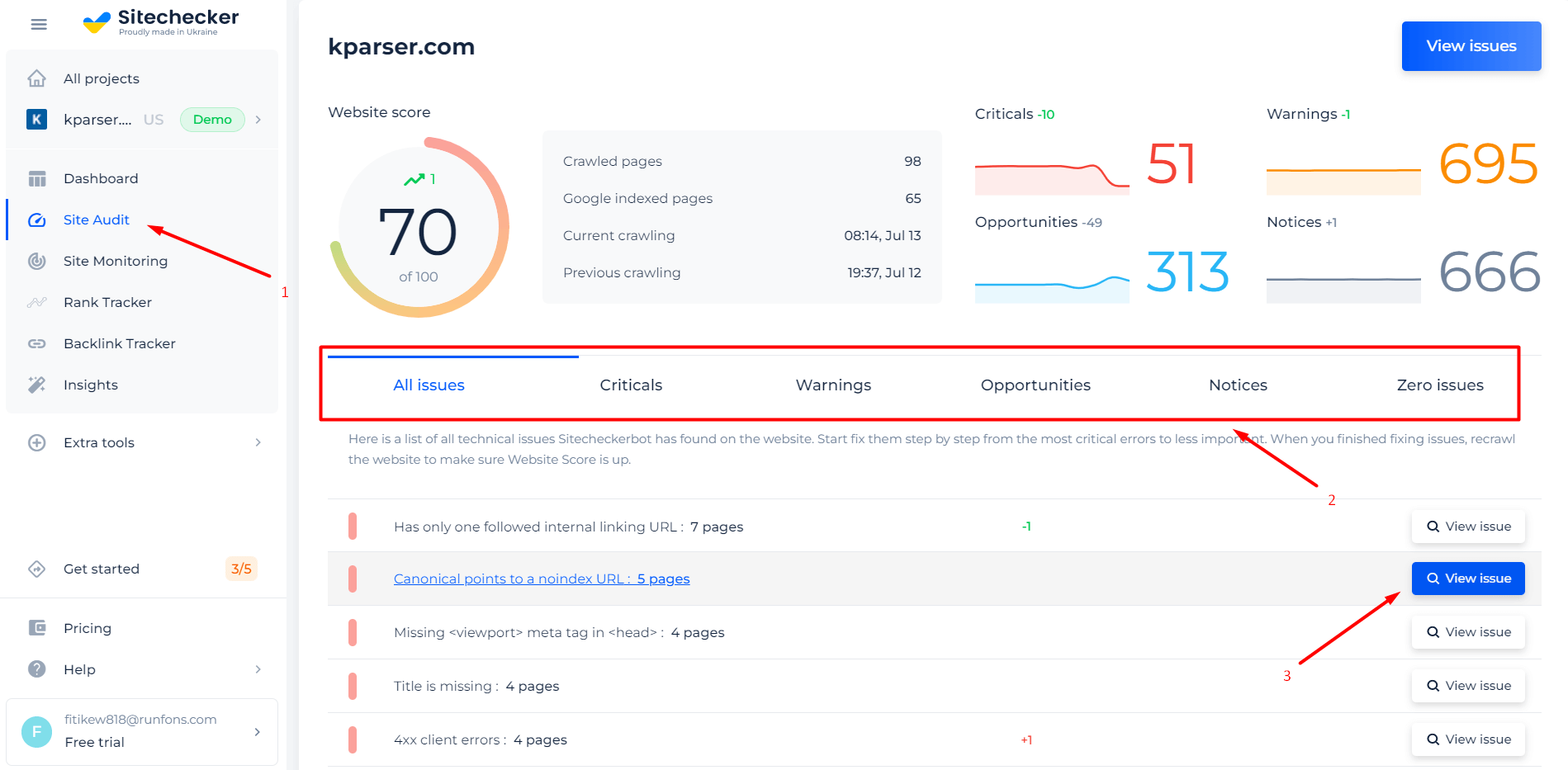

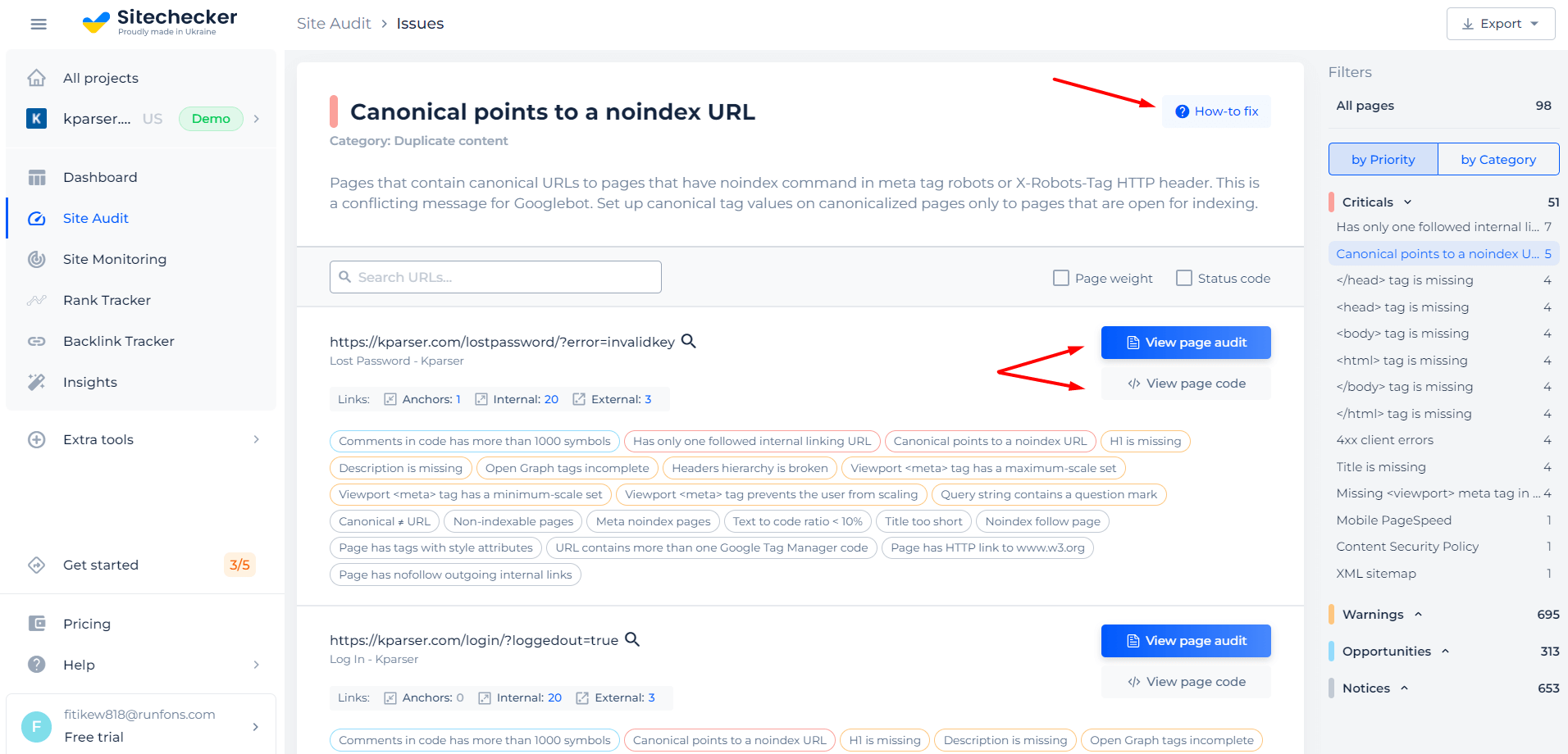

Errors on your site’s pages significantly hinder your search engine rankings. Run regular audits and find all the URLs on your domain to know the status of your site and discover its weaknesses. Check out our video guide on how to use the site audit on the Sitechecker platform.

Another important factor is link weight. You need to distribute link weight evenly across your pages, because your search engine rankings depend on it. To do this, you need to know every page on your site and make sure each page links to other pages; this is how internal link weight is passed around your site.
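Once you know each page’s internal links (for example, from a crawl), counting them shows how link weight can flow. This Python sketch takes a hypothetical mapping of each page to the pages it links to and reports inbound and outbound internal link counts, flagging pages nothing links to and pages that link nowhere. It only counts links; it does not reproduce any search engine’s actual weighting, and your home page may show up as an orphan if no page links back to it.

```python
def link_report(links: dict) -> dict:
    """links maps each page to the internal pages it links to."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    inbound = {page: 0 for page in pages}
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:
                inbound[target] += 1
    outbound = {page: len(set(links.get(page, [])) - {page}) for page in pages}
    return {
        "inbound": inbound,
        "outbound": outbound,
        "orphans": sorted(p for p in pages if inbound[p] == 0),
        "dead_ends": sorted(p for p in pages if outbound[p] == 0),
    }


site_links = {
    "/": ["/blog", "/about"],
    "/blog": ["/", "/blog/post-1"],
    "/about": ["/"],
    "/blog/post-1": [],
    "/promo": ["/"],
}
report = link_report(site_links)
print(report["orphans"], report["dead_ends"])  # ['/promo'] ['/blog/post-1']
```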

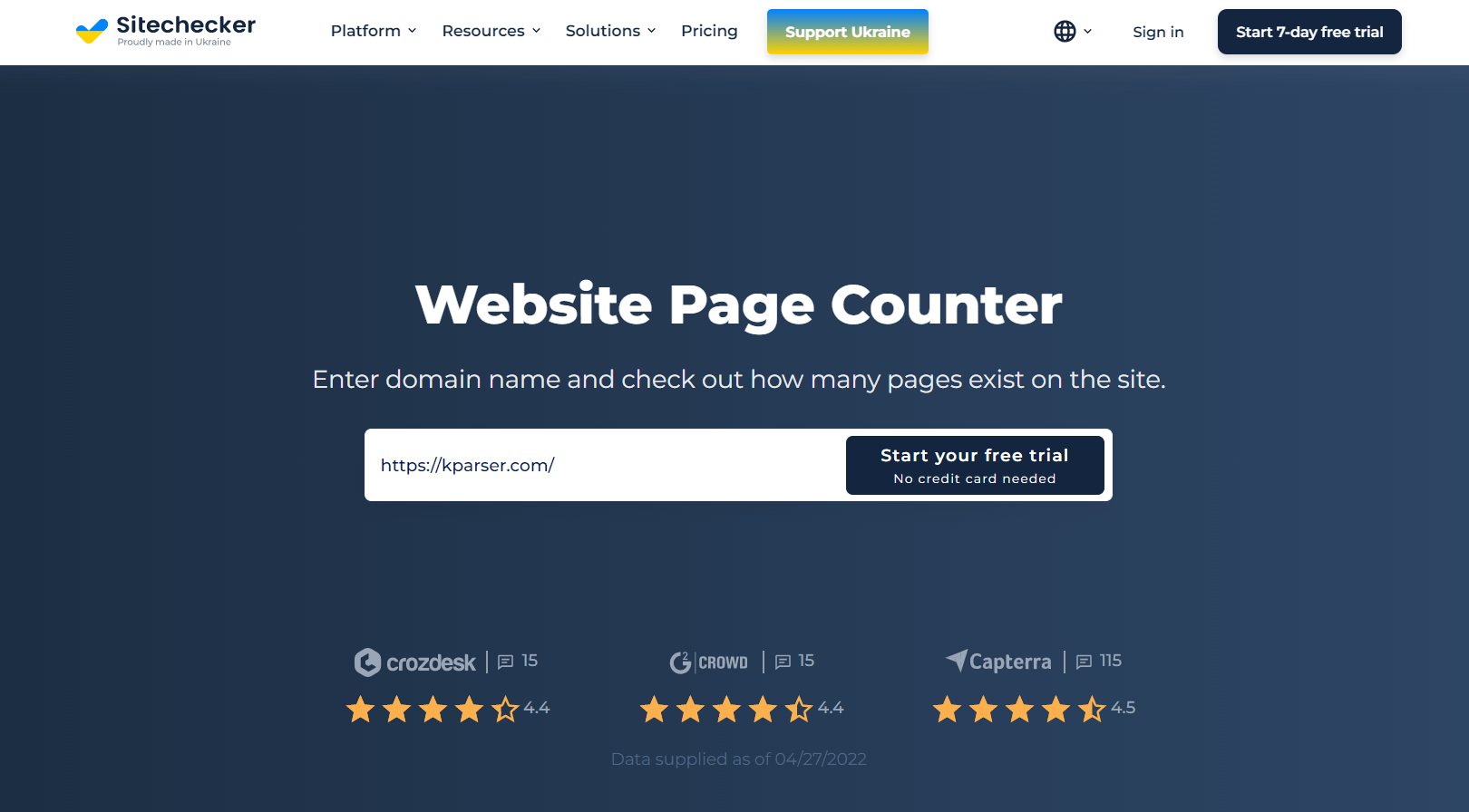

How to use the Website Page Counter?

Wondering how many pages your website has? Our tool will give you the answer.

Step 1: Insert Your Domain and Start Free Trial

All you have to do is enter the domain name and start a free trial; you can then view all the pages on the website. Starting the trial is fast and free.

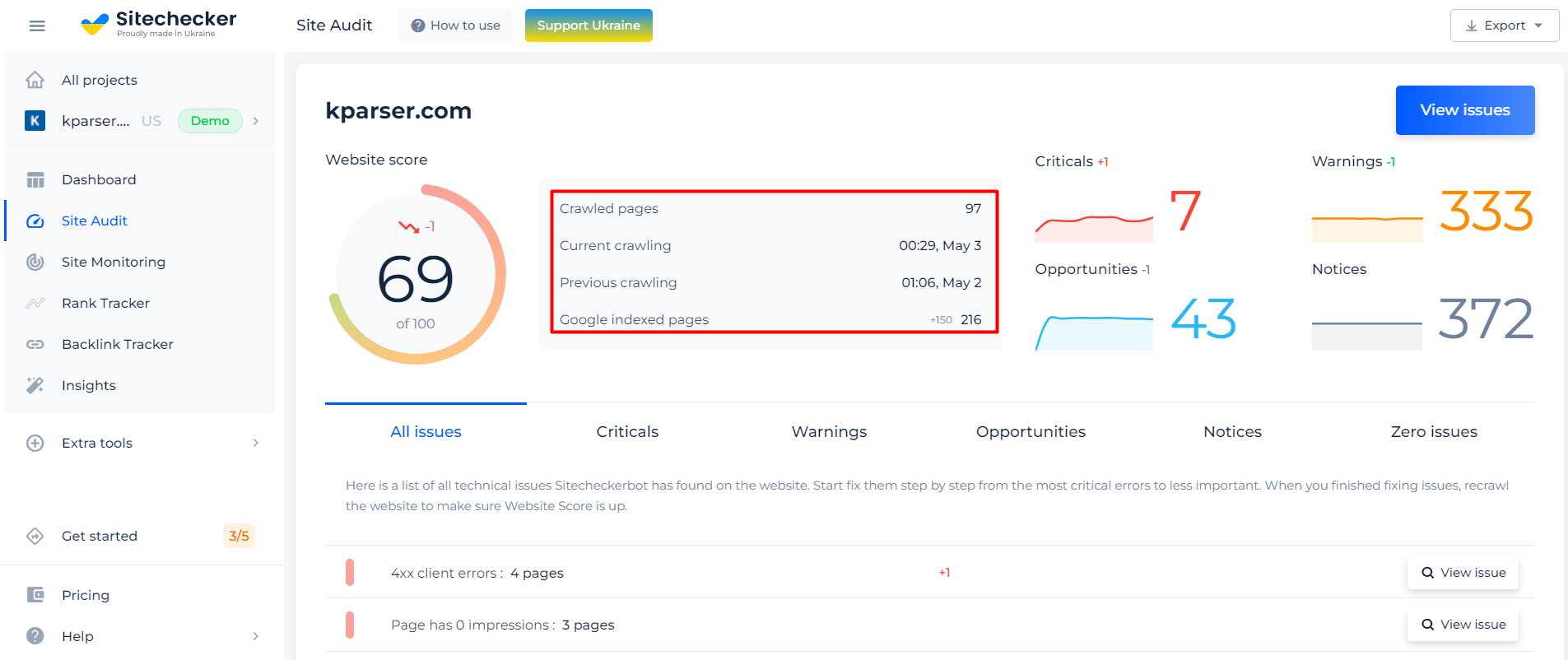

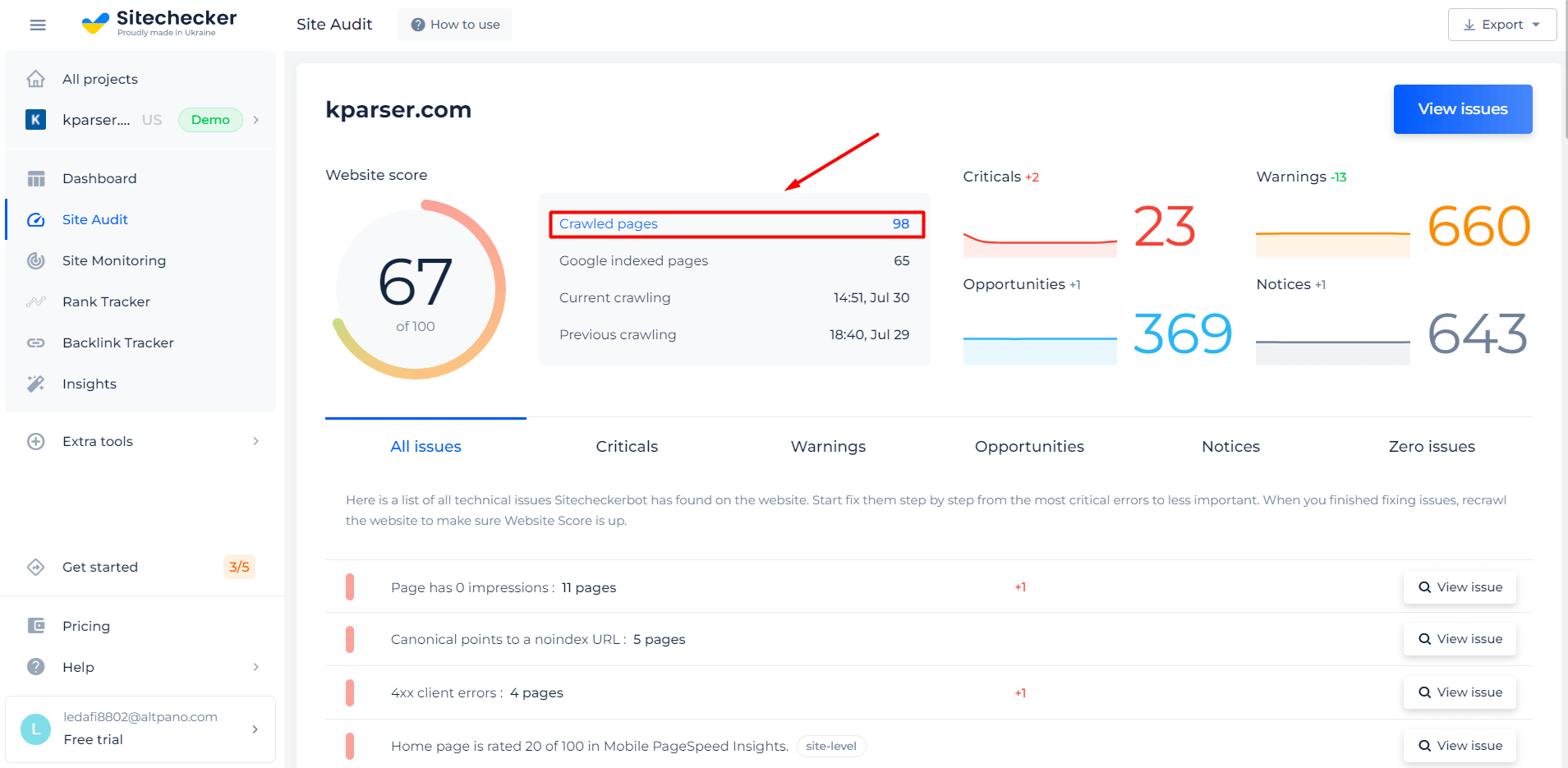

Step 2: Get Result

After the crawl, you can see how many web pages there are. This number shows how many pages exist on your site in total. You will also see how many of the site’s pages are already in the Google index.

By clicking “Crawled pages” or “Google indexed pages”, you can open a list of those pages along with their issues, status and other SEO audit information.

Note that search engines may not index every page on your site. If you use the tool to find out which of your pages made it into the index, take your crawling instructions into account. To give crawlers clear instructions, create a robots.txt file. A robots.txt file tells search engine crawlers which files they can or can’t request from your site, so some pages may be disallowed there.
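Python’s standard library includes a robots.txt parser, `urllib.robotparser`, that you can use to check which of your URLs a given crawler is allowed to fetch. In this sketch the robots.txt rules and page URLs are made-up examples; for a live site you would call `set_url()` with your robots.txt address and then `read()` instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

robots_txt = """User-agent: *
Disallow: /cart/
Disallow: /internal/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())  # load rules from text instead of a URL

pages = [
    "https://example.com/",
    "https://example.com/cart/checkout",
    "https://example.com/blog/",
]
# Pages Googlebot is asked not to crawl under these rules.
blocked = [url for url in pages if not parser.can_fetch("Googlebot", url)]
print(blocked)  # ['https://example.com/cart/checkout']
```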

Features of Page Counter Tool

With the website page counter tool, you can find all the pages on a website for free. It is a convenient way to check whether the search engine has indexed every part of your site. This and other information is updated after each crawl of your site and is available on the site audit page in your account.

You can also find all kinds of technical problems on your site: view the list of pages that have a specific issue and find instructions on how to fix them.

So our tool is not just about checking the number of pages and their indexing status; it also helps you make those pages technically sound to improve your SEO.

Find out not only the number of pages but also the presence of technical errors on them!

Run a full SEO audit to check the technical condition of all the pages on your website.

When do you need the Website page counter?

For users to reach your site’s pages from a search engine, your site must be indexed. During indexing, search engine robots crawl your site and add its pages to the index, so users can find you in search results. Find out the total number of pages on your site with our tool.

Use the page counter tool to find all the pages on a website, see which of your site’s pages are accessible to users, and check whether all of them are indexed. You can also use it to check the number of pages on a competitor’s site, which is a useful way to analyze your competitors for free!

Other ways to find out how many pages a website has

Besides the page counter, there are other ways to find out how many pages your site or a competitor’s site has. Let’s look at the most popular ones.

Look at the XML sitemap file

You should create an XML sitemap file; it is very useful when you need to see all the pages on a website. The simplest way is to use a sitemap generator: it works automatically, and you don’t need any technical knowledge or expertise in XML sitemap creation.

An XML sitemap helps search engines find your pages, which works in your favor in search rankings. If a site audit finds that you don’t have a sitemap, it marks this as a critical error.
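For a small site, you can also write the file yourself. The Python sketch below builds a minimal sitemap (only `<loc>` entries, none of the optional tags such as `<lastmod>`) from a list of URLs you supply; the URLs and the function name are placeholders. Large sites would need to split the list, since the sitemaps.org protocol allows at most 50,000 URLs per sitemap file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return a minimal sitemaps.org <urlset> listing each URL once."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url in dict.fromkeys(urls):  # drop duplicates, keep the original order
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # ElementTree escapes & and <
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/",
    "https://example.com/",  # duplicate, listed only once
])
print(sitemap)
```

You would then save the output as sitemap.xml in your site’s root folder.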

Using your CMS

If your site runs on a content management system (CMS) such as WordPress or Wix, you can generate a list of all your web pages from the CMS. Many plugins can collect all the links on your site in one click, and it’s simple and free. Just give it a try to count your website’s pages!

Using a log

A log of all the pages served to visitors is another way to find the number of pages on your website. Just log in to your cPanel and find the raw log files. From them you can list the most frequently visited pages and those with the highest drop-off rates; any page on your site that never appears in the log is one that nobody visits.

Using site crawling tools

Another simple and popular way to find out how many pages a website has is to use a site audit tool. There are many of them, so you can choose one your team already has a subscription to, for example Netpeak Spider or Screaming Frog.

A free version of such a tool is often enough to count all the pages on your site, so you won’t need to buy a subscription just for this task.

Final thoughts on website page counter tool

Well, we’ve figured out how to view all the pages of a website quickly and for free. As a responsible site owner, you should understand how important it is to know all the pages of both your own site and your competitors’ sites.

Thanks to the free web page counter, you can easily find out how many pages your site has and see which ones have made it into the search engine index. Remember to run regular site audits to catch critical errors that interfere with your search engine promotion. See all the pages on a website with our tool!