How to learn machine learning

Getting started with Machine Learning

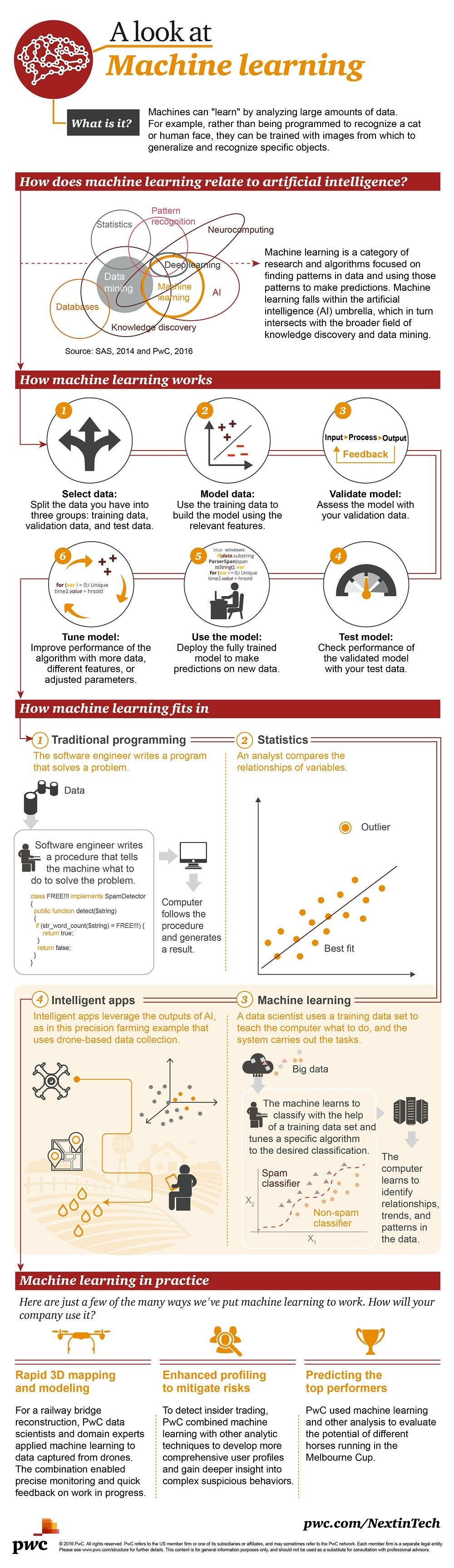

From translation apps to autonomous vehicles, machine learning powers it all. It offers a way to solve problems and answer complex questions. It is basically the process of training a piece of software, called an algorithm or model, to make useful predictions from data. This article discusses the categories of machine learning problems and the terminology used in the field of machine learning.

Types of machine learning problems

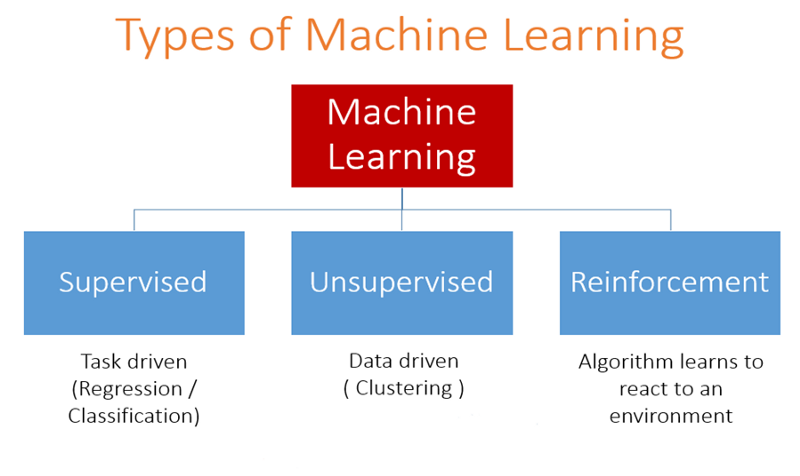

There are various ways to classify machine learning problems. Here, we discuss the most obvious ones.

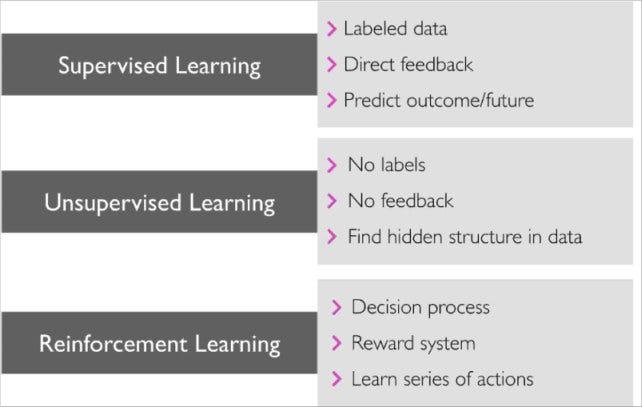

1. On the basis of the nature of the learning “signal” or “feedback” available to a learning system

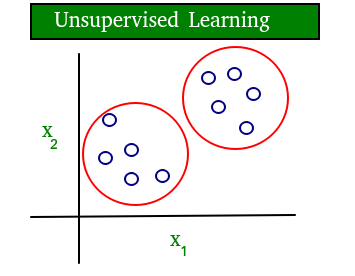

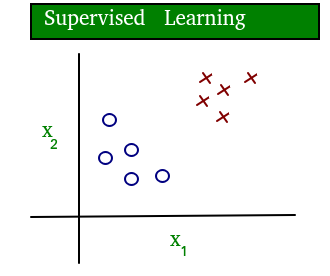

A simple diagram which clarifies the concepts of supervised and unsupervised learning is shown below:

As you can see, the data in supervised learning is labelled, whereas the data in unsupervised learning is unlabelled.
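To make the distinction concrete, here is a minimal sketch in Python. The feature values and label names are made up purely for illustration; the only point is that labelled data pairs each row with a known answer, while unlabelled data does not.

```python
# Hypothetical toy data: each row is (height_cm, weight_kg).
features = [(150, 50), (160, 58), (180, 82), (185, 90)]

# Supervised learning: every row comes with a known label (the "answer").
labels = ["small", "small", "large", "large"]
labelled_data = list(zip(features, labels))

# Unsupervised learning: the same rows, but with no labels attached.
unlabelled_data = list(features)

print(labelled_data[0])    # ((150, 50), 'small')
print(unlabelled_data[0])  # (150, 50)
```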

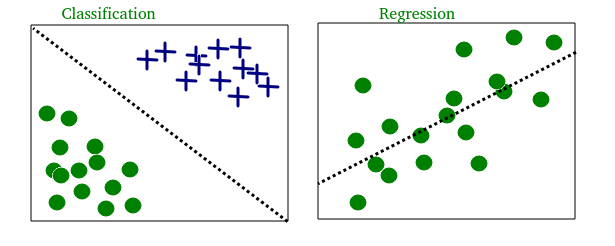

2. The two most common use cases of supervised learning are:

An example of classification and regression on two different datasets is shown below:

3. The most common unsupervised learning tasks are:

On the basis of these machine learning tasks/problems, we have a number of algorithms which are used to accomplish them. Some commonly used machine learning algorithms are Linear Regression, Logistic Regression, Decision Tree, SVM (Support Vector Machines), Naive Bayes, KNN (K-Nearest Neighbors), K-Means, Random Forest, etc. Note: All these algorithms will be covered in upcoming articles.

Terminologies of Machine Learning

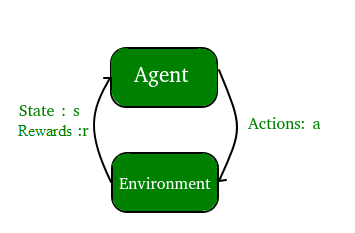

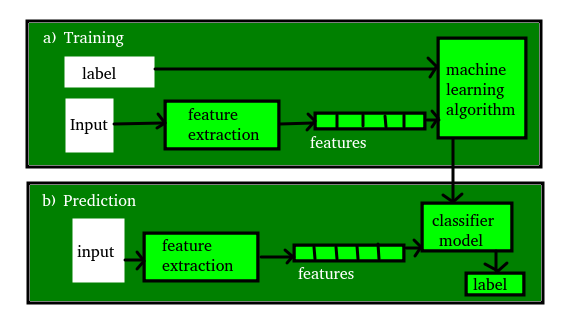

The figure below illustrates the above concepts:

This blog is contributed by Nikhil Kumar.

Chapter-1 Machine Learning Introduction

Machine learning is becoming popular because of its ability to handle data; it is a very powerful tool that can make human life easier. When I started machine learning, I was stuck on “how to start machine learning?” Then I started reading some Analytics Vidhya blog material, and it made the picture much clearer. I followed the learning paths of some websites, but without background knowledge you can never get started. So, in this chapter I write about the background knowledge we need and some basics of machine learning.

Machine learning is a part of AI that uses statistical techniques to give machines the intelligence to perform specific tasks accurately.

Data Mining is the process of extracting useful information or knowledge (not so obvious patterns or insights) from huge sets of data. This knowledge could further be used for business applications such as Market analysis, Risk management, Fraud detection etc. Data mining could be accomplished by analysis, visualization or Machine Learning modelling.

Machine Learning is the process of gaining knowledge from past data, and using that knowledge to make future predictions. Example Algorithm: Support Vector Machines

Deep learning is a type of machine learning technique with more capabilities, since it tries to mimic the neurons in the human brain. It tries to learn a phenomenon as a nested hierarchy of concepts, with each concept defined in relation to simpler concepts. Example Algorithm: Convolutional Neural Networks

How Does Machine Learning Work?

Machine learning systems are made up of three major parts, which are:

But let me show you the big picture of machine learning in the infographic below.

Step 1: Adjust Mindset. Believe you can practice and apply machine learning.

Step 2: Pick a Process. Use a systematic process to work through problems.

Step 3: Pick a Tool. Select a tool for your level and map it onto your process.

Step 4: Practice on Datasets. Select datasets to work on and practice the process.

Step 5: Build a Portfolio. Gather results and demonstrate your skills.

Machine Learning Types

There are several types of machine learning, based on the nature of the problem they solve.

other resources to learn:

What are the steps used in Machine Learning?

There are 5 basic steps used to perform a machine learning task:

Be it any model, these 5 steps can be used to structure the technique and when we discuss the algorithms, you shall then find how these five steps appear in every model!

Application of Machine Learning

1. Virtual Personal Assistants

Virtual Assistants are integrated into a variety of platforms. For example:

2. Predictions while Commuting

3. Video Surveillance

4. Social Media Services

5. Email Spam and Malware Filtering

How to Start Learning Machine Learning?

Arthur Samuel coined the term “Machine Learning” in 1959 and defined it as a “Field of study that gives computers the capability to learn without being explicitly programmed”.

And that was the beginning of Machine Learning! In modern times, Machine Learning is one of the most popular (if not the most!) career choices. According to Indeed, Machine Learning Engineer Is The Best Job of 2019 with a 344% growth and an average base salary of $146,085 per year.

But there is still a lot of doubt about what exactly Machine Learning is and how to start learning it. So this article deals with the Basics of Machine Learning and also the path you can follow to eventually become a full-fledged Machine Learning Engineer. Now let’s get started.

What is Machine Learning?

Machine Learning involves the use of Artificial Intelligence to enable machines to learn a task from experience without programming them specifically for that task. (In short, machines learn automatically without human hand-holding.) This process starts with feeding them good-quality data and then training the machines by building various machine learning models using the data and different algorithms. The choice of algorithm depends on what type of data we have and what kind of task we are trying to automate.

How to start learning ML?

This is a rough roadmap you can follow on your way to becoming an insanely talented Machine Learning Engineer. Of course, you can always modify the steps according to your needs to reach your desired end-goal!

Step 1 – Understand the Prerequisites

In case you are a genius, you could start ML directly but normally, there are some prerequisites that you need to know which include Linear Algebra, Multivariate Calculus, Statistics, and Python. And if you don’t know these, never fear! You don’t need a Ph.D. degree in these topics to get started but you do need a basic understanding.

(a) Learn Linear Algebra and Multivariate Calculus

Both Linear Algebra and Multivariate Calculus are important in Machine Learning. However, the extent to which you need them depends on your role as a data scientist. If you are more focused on application heavy machine learning, then you will not be that heavily focused on maths as there are many common libraries available. But if you want to focus on R&D in Machine Learning, then mastery of Linear Algebra and Multivariate Calculus is very important as you will have to implement many ML algorithms from scratch.

(b) Learn Statistics

Data plays a huge role in Machine Learning. In fact, around 80% of your time as an ML expert will be spent collecting and cleaning data. And statistics is a field that handles the collection, analysis, and presentation of data. So it is no surprise that you need to learn it.

Some of the key concepts in statistics are Statistical Significance, Probability Distributions, Hypothesis Testing, Regression, etc. Bayesian Thinking is also a very important part of ML; it deals with concepts like Conditional Probability, Priors and Posteriors, Maximum Likelihood, etc.

(c) Learn Python

Some people prefer to skip Linear Algebra, Multivariate Calculus and Statistics and learn them as they go along with trial and error. But the one thing that you absolutely cannot skip is Python! While there are other languages you can use for Machine Learning, like R and Scala, Python is currently the most popular language for ML. In fact, there are many Python libraries that are specifically useful for Artificial Intelligence and Machine Learning, such as Keras, TensorFlow, Scikit-learn, etc.

So if you want to learn ML, it’s best if you learn Python! You can do that using various online resources and courses such as Fork Python available Free on GeeksforGeeks.

Step 2 – Learn Various ML Concepts

Now that you are done with the prerequisites, you can move on to actually learning ML (which is the fun part). It’s best to start with the basics and then move on to the more complicated stuff. Some of the basic concepts in ML are:

(a) Terminologies of Machine Learning

(b) Types of Machine Learning

(c) How to Practise Machine Learning?

(d) Resources for Learning Machine Learning:

There are various online and offline resources (both free and paid!) that can be used to learn Machine Learning. Some of these are provided here:

Step 3 – Take part in Competitions

After you have understood the basics of Machine Learning, you can move on to the crazy part. Competitions! These will basically make you even more proficient in ML by combining your mostly theoretical knowledge with practical implementation. Some of the basic competitions that you can start with on Kaggle that will help you build confidence are given here:

After you have completed these competitions and other such simple challenges …Congratulations. You are well on your way to becoming a full-fledged Machine Learning Engineer and you can continue enhancing your skills by working on more and more challenges and eventually creating more and more creative and difficult Machine Learning projects.

Machine Learning is Fun!

The world’s easiest introduction to Machine Learning

Have you heard people talking about machine learning but only have a fuzzy idea of what that means? Are you tired of nodding your way through conversations with co-workers? Let’s change that!

This guide is for anyone who is curious about machine learning but has no idea where to start. I imagine there are a lot of people who tried reading the Wikipedia article, got frustrated and gave up wishing someone would just give them a high-level explanation. That’s what this is.

The goal is to be accessible to anyone, which means that there are a lot of generalizations. But who cares? If this gets anyone more interested in ML, then mission accomplished.

What is machine learning?

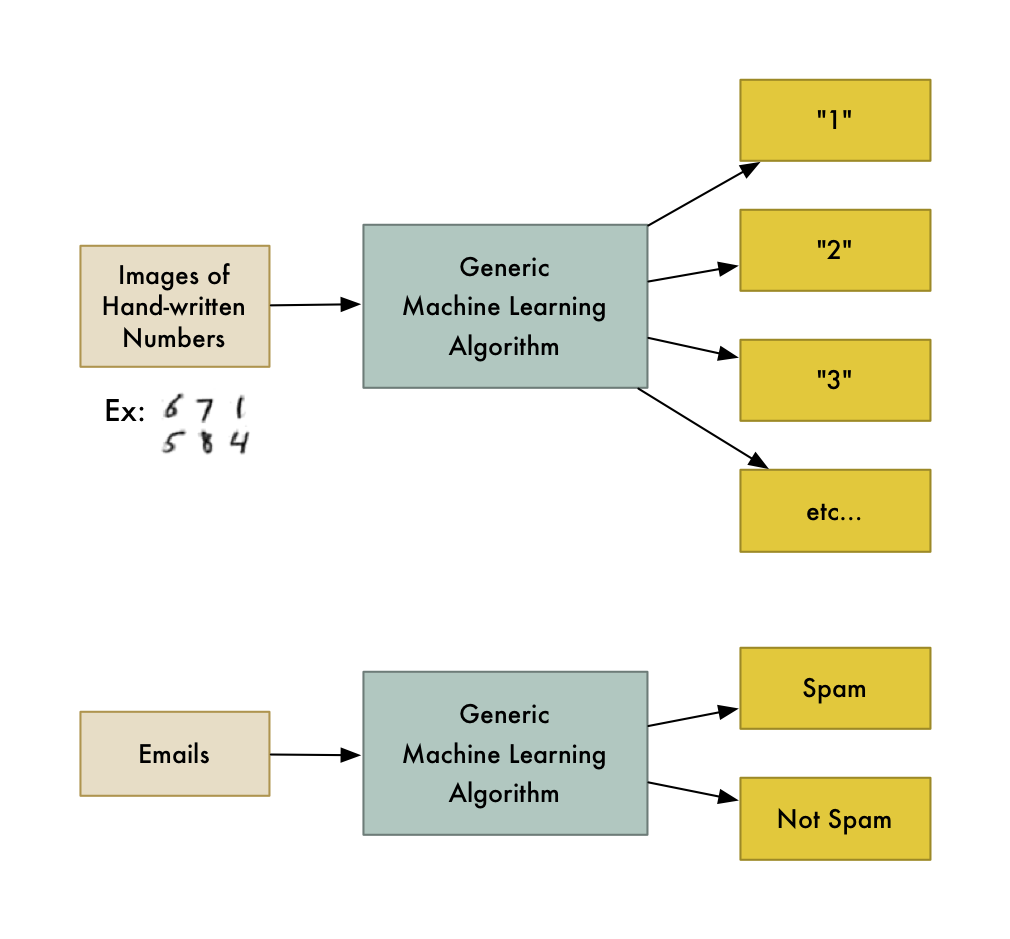

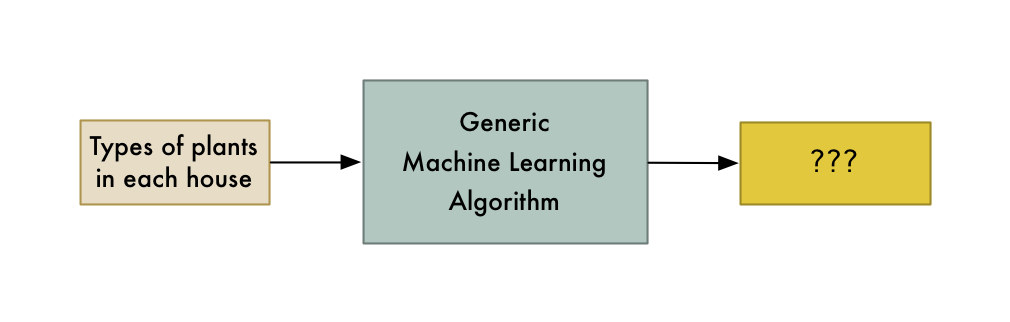

Machine learning is the idea that there are generic algorithms that can tell you something interesting about a set of data without you having to write any custom code specific to the problem. Instead of writing code, you feed data to the generic algorithm and it builds its own logic based on the data.

For example, one kind of algorithm is a classification algorithm. It can put data into different groups. The same classification algorithm used to recognize handwritten numbers could also be used to classify emails into spam and not-spam without changing a line of code. It’s the same algorithm but it’s fed different training data so it comes up with different classification logic.
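As a rough illustration of that idea, the sketch below uses a very simple classifier (one-nearest-neighbor, chosen here only because it fits in a few lines) on two unrelated, made-up data sets. The function name, features and values are all hypothetical. The code of the classifier is identical in both cases; only the training data changes.

```python
def nearest_neighbor_classify(training_data, query):
    """Return the label of the training example closest to `query`.

    training_data is a list of (feature_vector, label) pairs.
    """
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    closest_features, closest_label = min(
        training_data, key=lambda pair: squared_distance(pair[0], query)
    )
    return closest_label


# Made-up data set 1: (number of links, number of exclamation marks) per email.
emails = [((0, 0), "not-spam"), ((1, 0), "not-spam"),
          ((7, 9), "spam"), ((9, 6), "spam")]

# Made-up data set 2: (number of loops, number of straight strokes) per digit.
digits = [((2, 0), "8"), ((0, 1), "1"), ((1, 1), "9")]

print(nearest_neighbor_classify(emails, (8, 8)))  # spam
print(nearest_neighbor_classify(digits, (0, 1)))  # 1
```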

“Machine learning” is an umbrella term covering lots of these kinds of generic algorithms.

Two kinds of Machine Learning Algorithms

You can think of machine learning algorithms as falling into one of two main categories — supervised learning and unsupervised learning. The difference is simple, but really important.

Supervised Learning

Let’s say you are a real estate agent. Your business is growing, so you hire a bunch of new trainee agents to help you out. But there’s a problem — you can glance at a house and have a pretty good idea of what a house is worth, but your trainees don’t have your experience so they don’t know how to price their houses.

To help your trainees (and maybe free yourself up for a vacation), you decide to write a little app that can estimate the value of a house in your area based on its size, neighborhood, etc., and what similar houses have sold for.

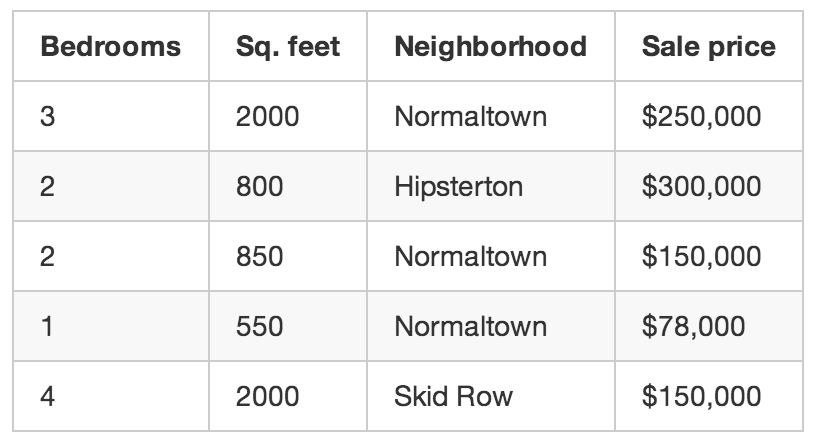

So you write down every time someone sells a house in your city for 3 months. For each house, you write down a bunch of details — number of bedrooms, size in square feet, neighborhood, etc. But most importantly, you write down the final sale price:

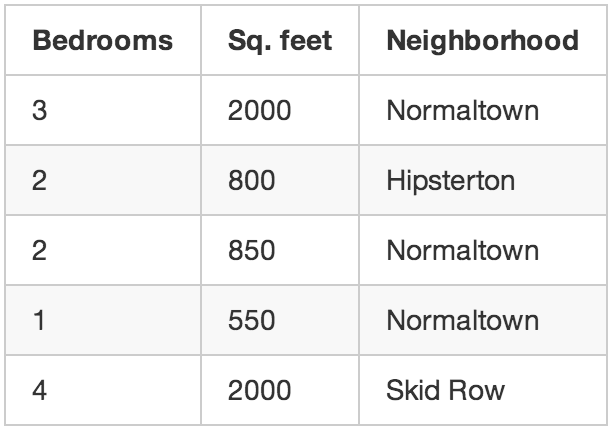

Using that training data, we want to create a program that can estimate how much any other house in your area is worth:

This is called supervised learning. You knew how much each house sold for, so in other words, you knew the answer to the problem and could work backwards from there to figure out the logic.

To build your app, you feed your training data about each house into your machine learning algorithm. The algorithm is trying to figure out what kind of math needs to be done to make the numbers work out.

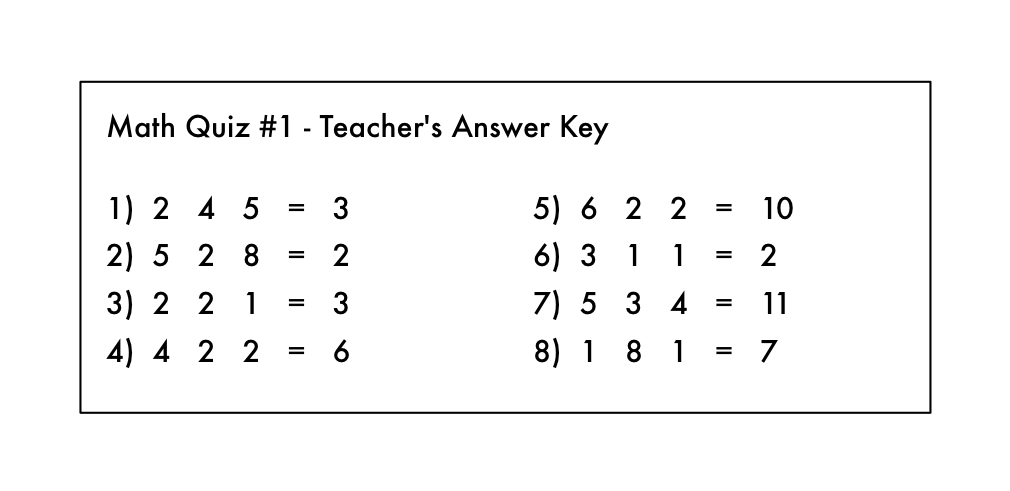

This is kind of like having the answer key to a math test with all the arithmetic symbols erased:

From this, can you figure out what kind of math problems were on the test? You know you are supposed to “do something” with the numbers on the left to get each answer on the right.

In supervised learning, you are letting the computer work out that relationship for you. And once you know what math was required to solve this specific set of problems, you could answer any other problem of the same type!

Unsupervised Learning

Let’s go back to our original example with the real estate agent. What if you didn’t know the sale price for each house? Even if all you know is the size, location, etc of each house, it turns out you can still do some really cool stuff. This is called unsupervised learning.

This is kind of like someone giving you a list of numbers on a sheet of paper and saying “I don’t really know what these numbers mean but maybe you can figure out if there is a pattern or grouping or something — good luck!”

So what could you do with this data? For starters, you could have an algorithm that automatically identified different market segments in your data. Maybe you’d find out that home buyers in the neighborhood near the local college really like small houses with lots of bedrooms, but home buyers in the suburbs prefer 3-bedroom houses with lots of square footage. Knowing about these different kinds of customers could help direct your marketing efforts.
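One common way to find such segments is clustering. The sketch below is a deliberately tiny k-means implementation, offered as one possible approach rather than the method the article has in mind. The house data (size in hundreds of square feet, number of bedrooms) is invented, and the starting centroids are simply picked by hand.

```python
def k_means(points, centroids, iterations=10):
    """Very small k-means: returns (centroids, cluster index for each point)."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    assignments = []
    for _ in range(iterations):
        # Assign every point to its nearest centroid.
        assignments = [
            min(range(len(centroids)),
                key=lambda c: squared_distance(point, centroids[c]))
            for point in points
        ]
        # Move each centroid to the mean of the points assigned to it.
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        centroids = new_centroids
    return centroids, assignments


# Made-up houses: (size in hundreds of sqft, number of bedrooms).
houses = [(9, 4), (10, 5), (8, 4), (25, 3), (28, 3), (30, 3)]

# Hand-picked starting centroids: the first and the last house.
centroids, segments = k_means(houses, [houses[0], houses[-1]])
print(segments)  # [0, 0, 0, 1, 1, 1]
```

No sale prices or labels were needed: the two groups fall out of the sizes and bedroom counts alone.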

Another cool thing you could do is automatically identify any outlier houses that were way different than everything else. Maybe those outlier houses are giant mansions and you can focus your best sales people on those areas because they have bigger commissions.
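A minimal sketch of one simple way to flag outliers: measure how many standard deviations each house size is from the average and flag anything beyond a threshold. The sizes and the threshold of 2.0 are assumptions chosen for illustration; real outlier detection usually needs more care.

```python
import statistics


def find_outliers(values, threshold=2.0):
    """Return the values that lie more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    spread = statistics.pstdev(values)
    return [v for v in values if spread > 0 and abs(v - mean) / spread > threshold]


# Made-up house sizes in square feet; one of them is a mansion.
sizes_sqft = [1500, 1600, 1550, 1700, 1650, 1580, 1620, 9000]
print(find_outliers(sizes_sqft))  # [9000]
```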

Supervised learning is what we’ll focus on for the rest of this post, but that’s not because unsupervised learning is any less useful or interesting. In fact, unsupervised learning is becoming increasingly important as the algorithms get better because it can be used without having to label the data with the correct answer.

Side note: There are lots of other types of machine learning algorithms. But this is a pretty good place to start.

That’s cool, but does being able to estimate the price of a house really count as “learning”?

As a human, your brain can approach most any situation and learn how to deal with that situation without any explicit instructions. If you sell houses for a long time, you will instinctively have a “feel” for the right price for a house, the best way to market that house, the kind of client who would be interested, etc. The goal of Strong AI research is to be able to replicate this ability with computers.

But current machine learning algorithms aren’t that good yet. They only work when focused on a very specific, limited problem. Maybe a better definition for “learning” in this case is “figuring out an equation to solve a specific problem based on some example data”.

Unfortunately “Machine Figuring out an equation to solve a specific problem based on some example data” isn’t really a great name. So we ended up with “Machine Learning” instead.

Of course if you are reading this 50 years in the future and we’ve figured out the algorithm for Strong AI, then this whole post will all seem a little quaint. Maybe stop reading and go tell your robot servant to go make you a sandwich, future human.

Let’s write that program!

So, how would you write the program to estimate the value of a house like in our example above? Think about it for a second before you read further.

If you didn’t know anything about machine learning, you’d probably try to write out some basic rules for estimating the price of a house like this:
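A sketch of what such hand-written rules might look like. Every neighborhood name, threshold and dollar amount below is invented for illustration.

```python
def estimate_house_sales_price(num_of_bedrooms, sqft, neighborhood):
    """Hand-written pricing rules; every number here is invented for illustration."""
    # Assume an average price per square foot that depends on the neighborhood.
    if neighborhood == "downtown":
        price_per_sqft = 400
    elif neighborhood == "riverside":
        price_per_sqft = 100
    else:
        price_per_sqft = 200

    price = price_per_sqft * sqft

    # Adjust the estimate based on the number of bedrooms.
    if num_of_bedrooms == 0:
        price = price - 20000  # assume studio apartments sell for less
    else:
        price = price + num_of_bedrooms * 1000

    return price


print(estimate_house_sales_price(3, 2000, "downtown"))  # 803000
```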

If you fiddle with this for hours and hours, you might end up with something that sort of works. But your program will never be perfect and it will be hard to maintain as prices change.

Wouldn’t it be better if the computer could just figure out how to implement this function for you? Who cares what exactly the function does as long as it returns the correct number:

One way to think about this problem is that the price is a delicious stew and the ingredients are the number of bedrooms, the square footage and the neighborhood. If you could just figure out how much each ingredient impacts the final price, maybe there’s an exact ratio of ingredients to stir in to make the final price.

That would reduce your original function (with all those crazy if’s and else’s) down to something really simple like this:
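A sketch of such a simplified function, in which each input is multiplied by a weight and the results are added up. The parameter names, the numeric `neighborhood_score` (a stand-in for the neighborhood, which would need to be turned into a number somehow) and the default weights of 1.0 are assumptions for illustration.

```python
def estimate_house_sales_price(num_of_bedrooms, sqft, neighborhood_score,
                               weights=(1.0, 1.0, 1.0), base_price=0.0):
    """Weighted sum of the inputs; the weights are what we want the computer to find."""
    price = base_price
    price += num_of_bedrooms * weights[0]     # a little pinch of this
    price += sqft * weights[1]                # a big pinch of that
    price += neighborhood_score * weights[2]  # maybe a handful of this
    return price


# With every weight at 1.0 the estimate is just the sum of the inputs.
print(estimate_house_sales_price(3, 2000, 5))  # 2008.0
```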

A dumb way to figure out the best weights would be something like this:

Step 1:

Start with each weight set to 1.0:

Step 2:

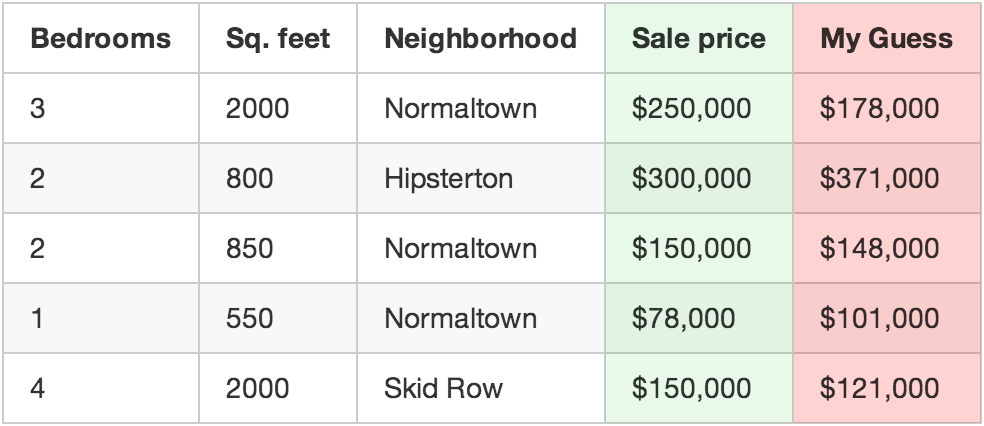

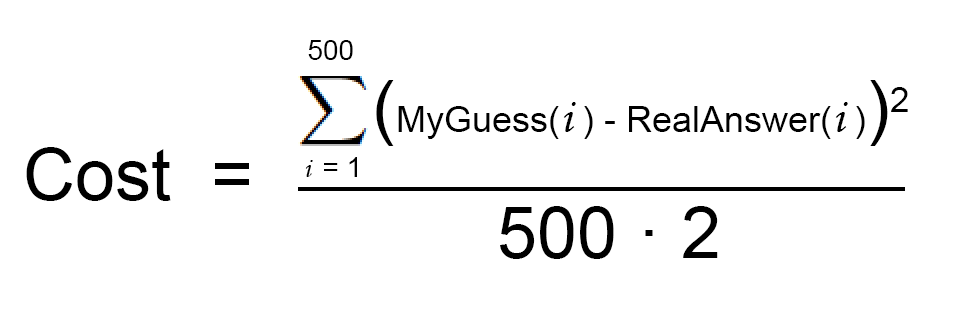

Run every house you know about through your function and see how far off the function is at guessing the correct price for each house:

Now, take that sum total and divide it by 500 (the number of houses in this example data set) to get an average of how far off you are for each house. Call this average error amount the cost of your function.

If you could get this cost to be zero by playing with the weights, your function would be perfect. It would mean that in every case, your function perfectly guessed the price of the house based on the input data. So that’s our goal — get this cost to be as low as possible by trying different weights.
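Step 2 can be sketched as a small cost function. This version squares each error before averaging (a common choice, assumed here, so that over-estimates and under-estimates do not cancel out). The three houses, with features (bedrooms, size in hundreds of sqft) and prices in thousands of dollars, are made up so that the weights (10, 12) fit them exactly.

```python
def predict(weights, features):
    """Weighted sum of the features, as in the simplified price function."""
    return sum(w * x for w, x in zip(weights, features))


def cost(weights, houses):
    """Average squared error of the predictions over all houses."""
    total = 0.0
    for features, actual_price in houses:
        error = predict(weights, features) - actual_price
        total += error ** 2
    return total / len(houses)


# Made-up data: ((bedrooms, size in hundreds of sqft), price in $1000s).
houses = [((3, 20), 270), ((2, 8), 116), ((4, 30), 400)]

print(cost((1.0, 1.0), houses))    # large: these weights guess badly
print(cost((10.0, 12.0), houses))  # 0.0: these weights fit the data exactly
```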

Step 3:

Repeat Step 2 over and over with every single possible combination of weights. Whichever combination of weights makes the cost closest to zero is what you use. When you find the weights that work, you’ve solved the problem!
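The brute-force idea in Step 3 can be sketched by trying every pair of weights from a small grid and keeping the pair with the lowest cost. The data and the grid of whole numbers from 0 to 20 are assumptions for illustration; a real search space would be far too large for this.

```python
from itertools import product


def cost(weights, houses):
    """Average squared error of a weighted-sum price estimate."""
    total = 0.0
    for features, actual_price in houses:
        guess = sum(w * x for w, x in zip(weights, features))
        total += (guess - actual_price) ** 2
    return total / len(houses)


def brute_force_search(houses, candidate_values):
    """Try every combination of two weights and return the best one found."""
    best_weights, best_cost = None, float("inf")
    for weights in product(candidate_values, repeat=2):
        current = cost(weights, houses)
        if current < best_cost:
            best_weights, best_cost = weights, current
    return best_weights, best_cost


# Made-up data: ((bedrooms, size in hundreds of sqft), price in $1000s).
houses = [((3, 20), 270), ((2, 8), 116), ((4, 30), 400)]

best_weights, best_cost = brute_force_search(houses, range(0, 21))
print(best_weights, best_cost)  # (10, 12) 0.0
```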

Mind Blowage Time

That’s pretty simple, right? Well think about what you just did. You took some data, you fed it through three generic, really simple steps, and you ended up with a function that can guess the price of any house in your area. Watch out, Zillow!

But here are a few more facts that will blow your mind:

Pretty crazy, right?

What about that whole “try every number” bit in Step 3?

Ok, of course you can’t just try every combination of all possible weights to find the combo that works the best. That would literally take forever since you’d never run out of numbers to try.

To avoid that, mathematicians have figured out lots of clever ways to quickly find good values for those weights without having to try very many. Here’s one way:

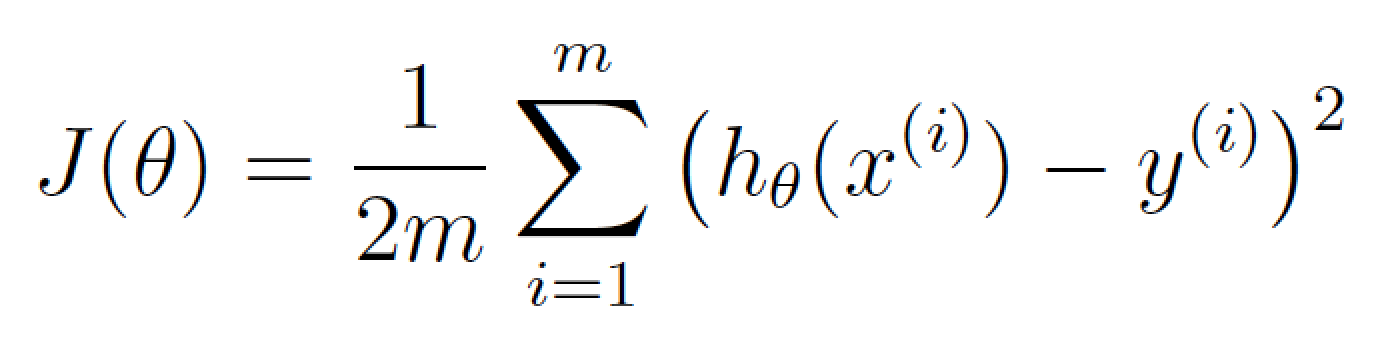

First, write a simple equation that represents Step #2 above:

Now let’s re-write exactly the same equation, but using a bunch of machine learning math jargon (that you can ignore for now):

This equation represents how wrong our price estimating function is for the weights we currently have set.
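As a sketch, the two forms of the cost might be written as follows, assuming the error for each house is squared before averaging and that there are 500 houses. The names MyGuess and RealAnswer are placeholders; in the second form, m is the number of houses, h_θ(x^(i)) is the function's guess for house i given the weights θ, and y^(i) is its real sale price. Some texts also divide by an extra factor of 2 to simplify the derivative, which does not change where the minimum is.

```latex
\text{Cost} = \frac{1}{500} \sum_{i=1}^{500} \bigl( \text{MyGuess}(i) - \text{RealAnswer}(i) \bigr)^2

J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \bigl( h_\theta(x^{(i)}) - y^{(i)} \bigr)^2
```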

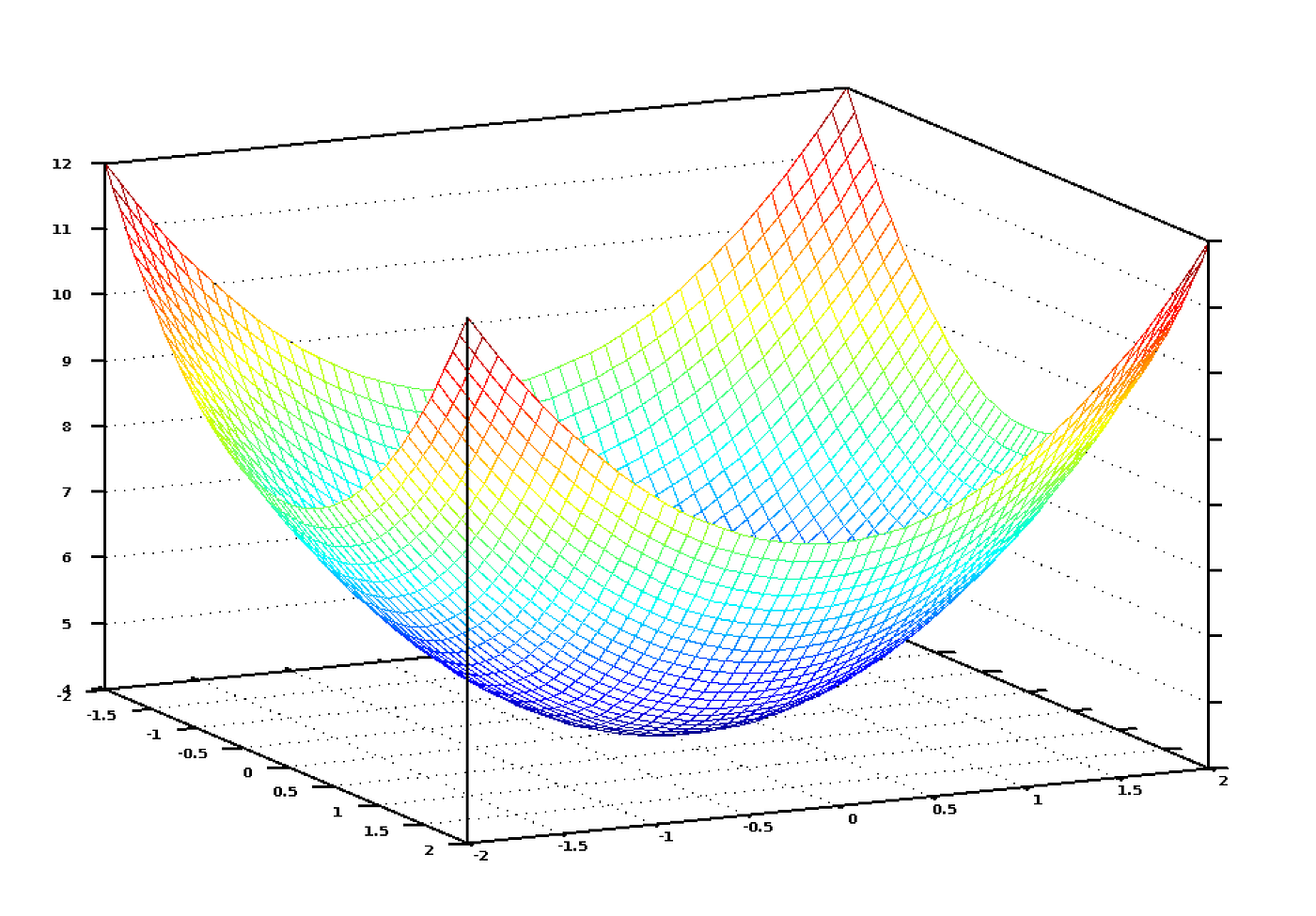

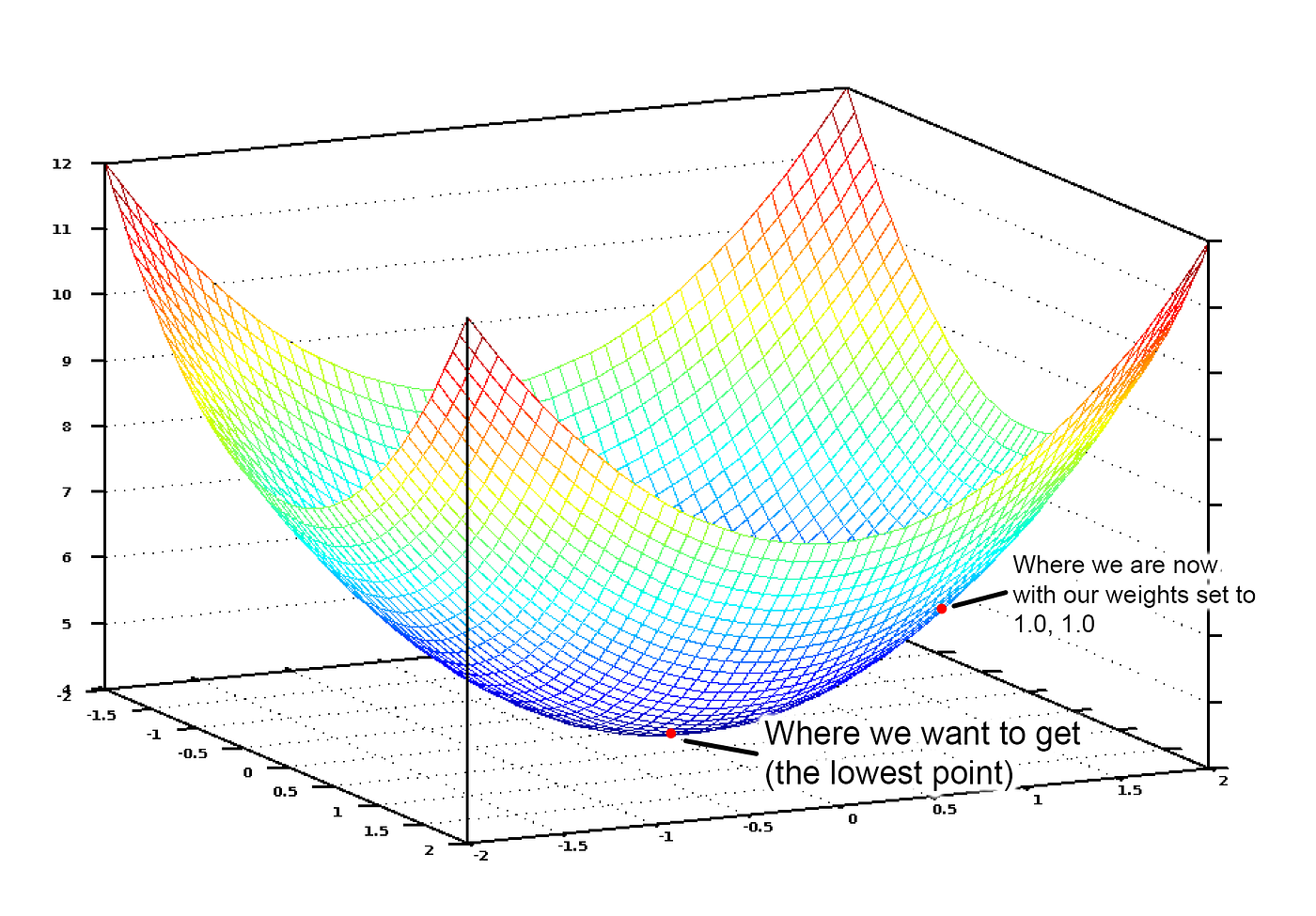

If we graph this cost equation for all possible values of our weights for number_of_bedrooms and sqft, we’d get a graph that might look something like this:

In this graph, the lowest point in blue is where our cost is the lowest — thus our function is the least wrong. The highest points are where we are most wrong. So if we can find the weights that get us to the lowest point on this graph, we’ll have our answer!

So we just need to adjust our weights so we are “walking down hill” on this graph towards the lowest point. If we keep making small adjustments to our weights that are always moving towards the lowest point, we’ll eventually get there without having to try too many different weights.

If you remember anything from Calculus, you might remember that if you take the derivative of a function, it tells you the slope of the function’s tangent at any point. In other words, it tells us which way is downhill for any given point on our graph. We can use that knowledge to walk downhill.

So if we calculate a partial derivative of our cost function with respect to each of our weights, then we can subtract that value from each weight. That will walk us one step closer to the bottom of the hill. Keep doing that and eventually we’ll reach the bottom of the hill and have the best possible values for our weights. (If that didn’t make sense, don’t worry and keep reading).
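A minimal sketch of this walk downhill, assuming a cost equal to the average squared error. The partial derivative for each weight is computed over the whole data set, scaled by a small step size (the `learning_rate`, an assumption added here so that the steps do not overshoot), and subtracted from the weight. The data is made up so that the best weights are 10 and 12.

```python
def gradient_descent(houses, weights, learning_rate=0.001, steps=50000):
    """Batch gradient descent for a weighted-sum model with squared-error cost."""
    m = len(houses)
    weights = list(weights)
    for _ in range(steps):
        # Partial derivative of the cost with respect to each weight.
        gradient = [0.0] * len(weights)
        for features, actual_price in houses:
            error = sum(w * x for w, x in zip(weights, features)) - actual_price
            for j, x in enumerate(features):
                gradient[j] += 2.0 * error * x / m
        # Take one small step downhill.
        weights = [w - learning_rate * g for w, g in zip(weights, gradient)]
    return weights


# Made-up data: ((bedrooms, size in hundreds of sqft), price in $1000s).
houses = [((3, 20), 270), ((2, 8), 116), ((4, 30), 400)]

# Start with each weight set to 1.0.
learned = gradient_descent(houses, [1.0, 1.0])
print([round(w, 3) for w in learned])  # approximately [10.0, 12.0]
```

If the step size is too large the weights bounce further away from the bottom instead of settling, which is why it is kept small here.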

That’s a high-level summary of one way to find the best weights for your function, called batch gradient descent. Don’t be afraid to dig deeper if you are interested in learning the details.

When you use a machine learning library to solve a real problem, all of this will be done for you. But it’s still useful to have a good idea of what is happening.

What else did you conveniently skip over?

The three-step algorithm I described is called multivariate linear regression. You are estimating the equation for a line that fits through all of your house data points. Then you are using that equation to guess the sales price of houses you’ve never seen before based on where that house would appear on your line. It’s a really powerful idea and you can solve “real” problems with it.

But while the approach I showed you might work in simple cases, it won’t work in all cases. One reason is that house prices aren’t always simple enough to follow a continuous line.

But luckily there are lots of ways to handle that. There are plenty of other machine learning algorithms that can handle non-linear data (like neural networks or SVMs with kernels). There are also ways to use linear regression more cleverly that allow for more complicated lines to be fit. In all cases, the same basic idea of needing to find the best weights still applies.

Also, I ignored the idea of overfitting. It’s easy to come up with a set of weights that always works perfectly for predicting the prices of the houses in your original data set but never actually works for any new houses that weren’t in your original data set. But there are ways to deal with this (like regularization and using a cross-validation data set). Learning how to deal with this issue is a key part of learning how to apply machine learning successfully.
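To see overfitting in miniature, the sketch below holds back half of a made-up data set as a validation set. A "memorizer" model that simply looks up the training prices is perfect on the houses it has seen and poor on new ones, while a simple one-weight linear model does reasonably on both. The data, the split and both models are assumptions chosen for illustration.

```python
def mean_squared_error(model, data):
    """Average squared difference between the model's guesses and the real prices."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


# Made-up data: (size in hundreds of sqft, price in $1000s).
data = [(8, 100), (10, 118), (12, 150), (15, 176),
        (20, 245), (22, 260), (25, 305), (30, 356)]

# Hold back every second house as a validation set the models never train on.
training, validation = data[::2], data[1::2]

# Overfit model: memorizes the training prices, falls back to their mean otherwise.
lookup = dict(training)
mean_price = sum(y for _, y in training) / len(training)

def memorizer(sqft):
    return lookup.get(sqft, mean_price)

# Simple model: one weight fitted by least squares on the training set only.
weight = sum(x * y for x, y in training) / sum(x * x for x, _ in training)

def linear_model(sqft):
    return weight * sqft

for name, model in [("memorizer", memorizer), ("linear", linear_model)]:
    print(name,
          mean_squared_error(model, training),
          mean_squared_error(model, validation))
```

The memorizer's training error is zero, which looks perfect until it is compared with its validation error.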

In other words, while the basic concept is pretty simple, it takes some skill and experience to apply machine learning and get useful results. But it’s a skill that any developer can learn!

Is machine learning magic?

Once you start seeing how easily machine learning techniques can be applied to problems that seem really hard (like handwriting recognition), you start to get the feeling that you could use machine learning to solve any problem and get an answer as long as you have enough data. Just feed in the data and watch the computer magically figure out the equation that fits the data!

But it’s important to remember that machine learning only works if the problem is actually solvable with the data that you have.

For example, if you build a model that predicts home prices based on the type of potted plants in each house, it’s never going to work. There just isn’t any kind of relationship between the potted plants in each house and the home’s sale price. So no matter how hard it tries, the computer can never deduce a relationship between the two.

So remember, if a human expert couldn’t use the data to solve the problem manually, a computer probably won’t be able to either. Instead, focus on problems where a human could solve the problem, but where it would be great if a computer could solve it much more quickly.

How to learn more about Machine Learning

In my mind, the biggest problem with machine learning right now is that it mostly lives in the world of academia and commercial research groups. There isn’t a lot of easy to understand material out there for people who would like to get a broad understanding without actually becoming experts. But it’s getting a little better every day.

If you want to try out what you’ve learned in this article, I made a course that walks you through every step of this article, including writing all the code. Give it a try!

If you want to go deeper, Andrew Ng’s free Machine Learning class on Coursera is pretty amazing as a next step. I highly recommend it. It should be accessible to anyone who has a Comp. Sci. degree and who remembers a very minimal amount of math.

Also, you can play around with tons of machine learning algorithms by downloading and installing scikit-learn. It’s a Python library that has “black box” versions of all the standard algorithms.


You can also follow me on Twitter at @ageitgey, email me directly or find me on linkedin. I’d love to hear from you if I can help you or your team with machine learning.

Start Here with Machine Learning

These are the Step-by-Step Guides that You’ve Been Looking For!


The most common question I’m asked is: “how do I get started?”

My best advice for getting started in machine learning is broken down into a 5-step process:

For more on this top-down approach, see:

Many of my students have used this approach to go on and do well in Kaggle competitions and get jobs as Machine Learning Engineers and Data Scientists.

The benefits of machine learning are the predictions and the models that make predictions.

To have skill at applied machine learning means knowing how to consistently and reliably deliver high-quality predictions on problem after problem. You need to follow a systematic process.

Below is a 5-step process that you can follow to consistently achieve above average results on predictive modeling problems:

For a good summary of this process, see the posts:

Probability is the mathematics of quantifying and harnessing uncertainty. It is the bedrock of many fields of mathematics (like statistics) and is critical for applied machine learning.

Below is the 3 step process that you can use to get up-to-speed with probability for machine learning, fast.

You can see all of the tutorials on probability here. Below is a selection of some of the most popular tutorials.

Probability Foundations

Bayes Theorem

Probability Distributions

Information Theory

Statistical methods are an important foundation area of mathematics required for achieving a deeper understanding of the behavior of machine learning algorithms.

Below is the 3 step process that you can use to get up-to-speed with statistical methods for machine learning, fast.

You can see all of the statistical methods posts here. Below is a selection of some of the most popular tutorials.

Summary Statistics

Statistical Hypothesis Tests

Resampling Methods

Estimation Statistics

Linear algebra is an important foundation area of mathematics required for achieving a deeper understanding of machine learning algorithms.

Below is the 3 step process that you can use to get up-to-speed with linear algebra for machine learning, fast.

You can see all linear algebra posts here. Below is a selection of some of the most popular tutorials.

Linear Algebra in Python

Matrices

Vectors

Matrix Factorization

Optimization is the core of all machine learning algorithms. When we train a machine learning model, it is doing optimization with the given dataset.

You can get familiar with optimization for machine learning in 3 steps, fast.

You can see all optimization posts here. Below is a selection of some of the most popular tutorials.

Local Optimization

Global Optimization

Gradient Descent

Applications of Optimization

Calculus is the hidden driver for the success of many machine learning algorithms. When we talk about the gradient descent optimization part of a machine learning algorithm, the gradient is found using calculus.

You can get familiar with calculus for machine learning in 3 steps.

You can see all calculus posts here. Below is a selection of some of the most popular tutorials.

Basic Calculus

Multivariate Calculus

Calculus for Optimization

Applications of Calculus

Python is the lingua franca of machine learning projects. Not only are a lot of machine learning libraries written in Python, but it also helps us finish our machine learning projects quickly and neatly. Having good Python programming skills lets you get more done in less time!

You can get familiar with Python for machine learning in 3 steps.

You can see all Python posts here. But don’t miss Python for Machine Learning (my book). Below is a selection of some of the most popular tutorials.

Basic Language

Troubleshooting

Language Techniques

Libraries

Machine learning is about machine learning algorithms.

You need to know what algorithms are available for a given problem, how they work, and how to get the most out of them.

Here’s how to get started with machine learning algorithms:

You can see all machine learning algorithm posts here. Below is a selection of some of the most popular tutorials.

Linear Algorithms

Nonlinear Algorithms

Ensemble Algorithms

How to Study/Learn ML Algorithms

Weka is a platform that you can use to get started in applied machine learning.

It has a graphical user interface meaning that no programming is required and it offers a suite of state of the art algorithms.

Here’s how you can get started with Weka:

You can see all Weka machine learning posts here. Below is a selection of some of the most popular tutorials.

Prepare Data in Weka

Weka Algorithm Tutorials

Python is one of the fastest growing platforms for applied machine learning.

You can use the same tools like pandas and scikit-learn in the development and operational deployment of your model.

Below are the steps that you can use to get started with Python machine learning:

You can see all Python machine learning posts here. Below is a selection of some of the most popular tutorials.

Prepare Data in Python

Machine Learning in Python

R is a platform for statistical computing and is the most popular platform among professional data scientists.

It’s popular because of the large number of techniques available, and because of excellent interfaces to these methods such as the powerful caret package.

Here’s how to get started with R machine learning:

You can see all R machine learning posts here. Below is a selection of some of the most popular tutorials.

Data Preparation in R

Applied Machine Learning in R

You can learn a lot about machine learning algorithms by coding them from scratch.

Learning via coding is the preferred learning style for many developers and engineers.
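As a small illustration of what coding an algorithm from scratch can look like, the sketch below fits simple linear regression by ordinary least squares in plain Python. The function names and the toy data are assumptions made for this example and are not taken from a specific tutorial.

```python
# Simple linear regression (one input variable) coded from scratch.
# Function names and the toy data are illustrative assumptions.

def mean(values):
    return sum(values) / len(values)

def fit_simple_linear_regression(x, y):
    """Return (intercept, slope) that minimise the squared error."""
    x_mean, y_mean = mean(x), mean(y)
    # Covariance of x and y, and variance of x (both unnormalised).
    covariance = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    variance = sum((xi - x_mean) ** 2 for xi in x)
    slope = covariance / variance
    intercept = y_mean - slope * x_mean
    return intercept, slope

def predict(x, intercept, slope):
    return [intercept + slope * xi for xi in x]

# Toy data generated from y = 1 + 2x, so the fit should recover those values.
x = [1, 2, 3, 4, 5]
y = [3, 5, 7, 9, 11]
intercept, slope = fit_simple_linear_regression(x, y)
print(intercept, slope)
print(predict([6], intercept, slope))
```

Writing the two sums out by hand makes it clear that the slope is just the covariance of the inputs and outputs divided by the variance of the inputs.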

Here’s how to get started with machine learning by coding everything from scratch.

You can see all of the Code Algorithms from Scratch posts here. Below is a selection of some of the most popular tutorials.

Prepare Data

Linear Algorithms

Algorithm Evaluation

Nonlinear Algorithms

Time series forecasting is an important topic in business applications.

Many datasets contain a time component, but the topic of time series is rarely covered in much depth from a machine learning perspective.
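One common way to bring a time series into a machine learning framing is to turn it into input/output pairs with a sliding window of lagged values. The sketch below shows the idea in plain Python; the function name series_to_supervised, the window size, and the toy series are assumptions for illustration.

```python
# Reframe a univariate time series as a supervised learning problem.
# The function name, window size, and data are illustrative assumptions.

def series_to_supervised(series, n_lags):
    """Return a list of (lagged_inputs, next_value) pairs."""
    samples = []
    for i in range(n_lags, len(series)):
        inputs = series[i - n_lags:i]   # the previous n_lags observations
        target = series[i]              # the value to predict
        samples.append((inputs, target))
    return samples

series = [10, 20, 30, 40, 50]
samples = series_to_supervised(series, n_lags=2)
for inputs, target in samples:
    print(inputs, "->", target)
```

Each row uses only observations that precede its target, which keeps the temporal order intact when the rows are later split into training and test sets.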

Here’s how to get started with Time Series Forecasting:

You can see all Time Series Forecasting posts here. Below is a selection of some of the most popular tutorials.

Data Preparation Tutorials

Forecasting Tutorials

The performance of your predictive model is only as good as the data that you use to train it.

As such, data preparation may be the most important part of your applied machine learning project.
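A minimal sketch of one data preparation step, min-max scaling, is given below. It assumes a single numeric column and hypothetical helper names; the point it illustrates is that the scaling parameters are learned from the training data only and then reused on new data.

```python
# Min-max scaling of one numeric column, fitted on training data only.
# Helper names and the toy values are illustrative assumptions.

def fit_min_max(column):
    """Learn the scaling parameters from the training column."""
    return min(column), max(column)

def apply_min_max(column, lo, hi):
    """Rescale values so the training range maps onto [0, 1]."""
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(value - lo) / (hi - lo) for value in column]

train = [50.0, 20.0, 80.0, 35.0]
test = [65.0, 95.0]

lo, hi = fit_min_max(train)             # parameters come from train only
train_scaled = apply_min_max(train, lo, hi)
test_scaled = apply_min_max(test, lo, hi)
print(train_scaled)
print(test_scaled)
```

Values in the test column can fall outside [0, 1] when they lie beyond the range seen in training, which is expected behaviour rather than an error.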

Here’s how to get started with Data Preparation for machine learning:

You can see all Data Preparation tutorials here. Below is a selection of some of the most popular tutorials.

Data Cleaning

Feature Selection

Data Transforms

Dimensionality Reduction

XGBoost is a highly optimized implementation of gradient boosted decision trees.

It is popular because it is being used by some of the best data scientists in the world to win machine learning competitions.

Here’s how to get started with XGBoost:

You can see all XGBoost posts here. Below is a selection of some of the most popular tutorials.

XGBoost Basics

XGBoost Tuning

Imbalanced classification refers to classification tasks where there are many more examples for one class than another class.

These types of problems often require the use of specialized performance metrics and learning algorithms as the standard metrics and methods are unreliable or fail completely.
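To see why a standard metric such as accuracy can mislead on imbalanced data, consider the sketch below. It assumes a made-up dataset with 95 negative and 5 positive examples and a trivial model that always predicts the majority class; the function names are illustrative.

```python
# Accuracy versus precision and recall on an imbalanced toy dataset.
# The class counts and function names are illustrative assumptions.

def accuracy(y_true, y_pred):
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

def precision_recall(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Guard against division by zero when nothing is predicted positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

y_true = [0] * 95 + [1] * 5        # 95 majority, 5 minority examples
always_negative = [0] * 100        # a model that ignores the minority class

acc = accuracy(y_true, always_negative)
precision, recall = precision_recall(y_true, always_negative)
print(acc, precision, recall)
```

The trivial model reaches 95% accuracy while finding none of the minority examples, which is why metrics that focus on the minority class, such as precision and recall, are usually preferred for these problems.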

Here’s how you can get started with Imbalanced Classification:

You can see all Imbalanced Classification posts here. Below is a selection of some of the most popular tutorials.

Performance Measures

Cost-Sensitive Algorithms

Data Sampling

Advanced Methods

Deep learning is a fascinating and powerful field.

State-of-the-art results are coming from the field of deep learning and it is a sub-field of machine learning that cannot be ignored.

Here’s how to get started with deep learning:

You can see all deep learning posts here. Below is a selection of some of the most popular tutorials.

Background

Multilayer Perceptrons

Convolutional Neural Networks

Recurrent Neural Networks

Although it is easy to define and fit a deep learning neural network model, it can be challenging to get good performance on a specific predictive modeling problem.

There are standard techniques that you can use to improve the learning, reduce overfitting, and make better predictions with your deep learning model.
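Early stopping is one such technique for reducing overfitting: training halts once the loss on a held-out validation set stops improving. The sketch below shows only the stopping logic in plain Python; the validation losses are made-up numbers, and the function name and patience value are assumptions for illustration.

```python
# Early stopping logic applied to a recorded list of validation losses.
# The loss values, function name, and patience are illustrative assumptions.

def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch) for the given validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best model: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out, so training runs to the final epoch.
    return len(val_losses) - 1, best_epoch

val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch, best_epoch)
```

In practice the weights from the best epoch are usually restored after stopping, since the epochs that follow it have already begun to overfit.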

Here’s how to get started with getting better deep learning performance:

You can see all better deep learning posts here. Below is a selection of some of the most popular tutorials.

Better Learning (fix training)

Better Generalization (fix overfitting)

Better Predictions (ensembles)

Tips, Tricks, and Resources

Predictive performance is the most important concern on many classification and regression problems. Ensemble learning algorithms combine the predictions from multiple models and are designed to perform better than any contributing ensemble member.
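The simplest way to combine predictions is a majority (hard) vote across models. The sketch below uses three hypothetical classifiers with hand-written predictions; the names and labels are assumptions chosen so that the models make different mistakes.

```python
# Hard-voting ensemble over the predictions of three hypothetical models.
# Model names, labels, and predictions are illustrative assumptions.
from collections import Counter

def majority_vote(predictions_per_model):
    """Combine per-model label lists into one list by majority vote."""
    combined = []
    for votes in zip(*predictions_per_model):
        label, _count = Counter(votes).most_common(1)[0]
        combined.append(label)
    return combined

def accuracy(y_true, y_pred):
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)

y_true  = [1, 0, 1, 1, 0, 1]
model_a = [1, 0, 0, 1, 0, 0]   # wrong on examples 2 and 5
model_b = [0, 0, 1, 1, 0, 1]   # wrong on example 0
model_c = [1, 1, 1, 0, 0, 1]   # wrong on examples 1 and 3

ensemble = majority_vote([model_a, model_b, model_c])
for name, preds in [("a", model_a), ("b", model_b), ("c", model_c), ("vote", ensemble)]:
    print(name, accuracy(y_true, preds))
```

The vote beats every member here only because the members' errors do not overlap; when models make the same mistakes, voting offers little or no gain.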

Here’s how to get started with getting better ensemble learning performance:

You can see all ensemble learning posts here. Below is a selection of some of the most popular tutorials.

Ensemble Basics

Stacking Ensembles

Bagging Ensembles

Boosting Ensembles

Long Short-Term Memory (LSTM) Recurrent Neural Networks are designed for sequence prediction problems and are a state-of-the-art deep learning technique for challenging prediction problems.

Here’s how to get started with LSTMs in Python:

You can see all LSTM posts here. Below is a selection of some of the most popular tutorials using LSTMs in Python with the Keras deep learning library.

Data Preparation for LSTMs

LSTM Behaviour

Modeling with LSTMs

LSTM for Time Series

Working with text data is hard because of the messy nature of natural language.

Text is not “solved”, but to get state-of-the-art results on challenging NLP problems, you need to adopt deep learning methods.

Here’s how to get started with deep learning for natural language processing:

You can see all deep learning for NLP posts here. Below is a selection of some of the most popular tutorials.

Bag-of-Words Model

Language Modeling

Text Summarization

Text Classification

Word Embeddings

Photo Captioning

Text Translation

Working with image data is hard because of the gulf between raw pixels and the meaning in the images.

Computer vision is not solved, but to get state-of-the-art results on challenging computer vision tasks like object detection and face recognition, you need deep learning methods.

Here’s how to get started with deep learning for computer vision:

You can see all deep learning for Computer Vision posts here. Below is a selection of some of the most popular tutorials.

Image Data Handling

Image Data Augmentation

Image Classification

Image Data Preparation

Basics of Convolutional Neural Networks

Object Recognition

Deep learning neural networks are able to automatically learn arbitrarily complex mappings from inputs to outputs and support multiple inputs and outputs.

Methods such as MLPs, CNNs, and LSTMs offer a lot of promise for time series forecasting.

Here’s how to get started with deep learning for time series forecasting:

You can see all deep learning for time series forecasting posts here. Below is a selection of some of the most popular tutorials.

Forecast Trends and Seasonality (univariate)

Human Activity Recognition (multivariate classification)

Forecast Electricity Usage (multivariate, multi-step)

Model Types

Time Series Case Studies

Forecast Air Pollution (multivariate, multi-step)

Generative Adversarial Networks, or GANs for short, are an approach to generative modeling using deep learning methods, such as convolutional neural networks.

GANs are an exciting and rapidly changing field, delivering on the promise of generative models in their ability to generate realistic examples across a range of problem domains, most notably in image-to-image translation tasks.

Here’s how to get started with deep learning for Generative Adversarial Networks:

You can see all Generative Adversarial Network tutorials listed here. Below is a selection of some of the most popular tutorials.

GAN Fundamentals

GAN Loss Functions

Develop Simple GAN Models

GANs for Image Translation

I’m here to help you become awesome at applied machine learning.

If you still have questions and need help, you have some options:

© 2022 Machine Learning Mastery. All Rights Reserved.
