How to make docker image

How to make docker image

Образы и контейнеры Docker в картинках

Перевод поста Visualizing Docker Containers and Images, от новичка к новичкам, автор на простых примерах объясняет базовые сущности и процессы в использовании docker.

Если вы не знаете, что такое Docker или не понимаете, как он соотносится с виртуальными машинами или с инструментами configuration management, то этот пост может показаться немного сложным.

Пост предназначен для тех, кто пытается освоить docker cli, понять, чем отличается контейнер и образ. В частности, будет объяснена разница между просто контейнером и запущенным контейнером.

В процессе освоения нужно представить себе некоторые лежащие в основе детали, например, слои файловой системы UnionFS. В течение последней пары недель я изучал технологию, я новичок в мире docker, и командная строка docker показалась мне довольно сложной для освоения.

По-моему, понимание того, как технология работает изнутри — лучший способ быстро освоить новый инструмент и правильно его использовать. Часто новая технология разрабатывает новые модели абстракций и привносит новые термины и метафоры, которые могут быть как будто бы понятны в начале, но без четкого понимания затрудняют последующее использование инструмента.

Хорошим примером является Git. Я не мог понять Git, пока не понял его базовую модель, включая trees, blobs, commits, tags, tree-ish и прочее. Я думаю, что люди, не понимающие внутренности Git, не могут мастерски использовать этот инструмент.

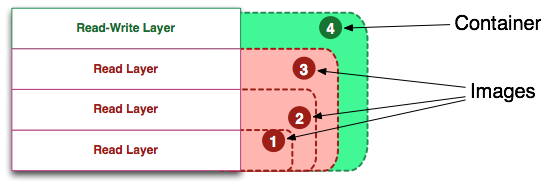

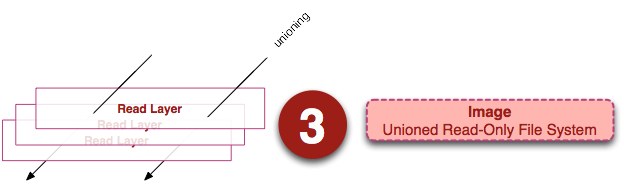

Определение образа (Image)

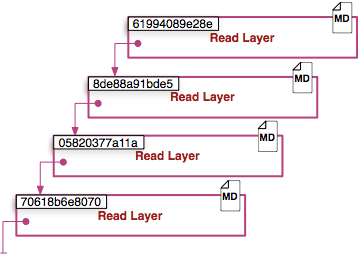

Визуализация образа представлена ниже в двух видах. Образ можно определить как «сущность» или «общий вид» (union view) стека слоев только для чтения.

Слева мы видим стек слоев для чтения. Они показаны только для понимания внутреннего устройства, они доступны вне запущенного контейнера на хост-системе. Важно то, что они доступны только для чтения (иммутабельны), а все изменения происходят в верхнем слое стека. Каждый слой может иметь одного родителя, родитель тоже имеет родителя и т.д. Слой верхнего уровня может быть использован как UnionFS (AUFS в моем случае с docker) и представлен в виде единой read-only файловой системы, в которой отражены все слои. Мы видим эту «сущность» образа на рисунке справа.

Если вы захотите посмотреть на эти слои в первозданном виде, вы можете найти их в файловой системе на хост-машине. Они не видны напрямую из запущенного контейнера. На моей хост-машине я могу найти образы в /var/lib/docker/aufs.

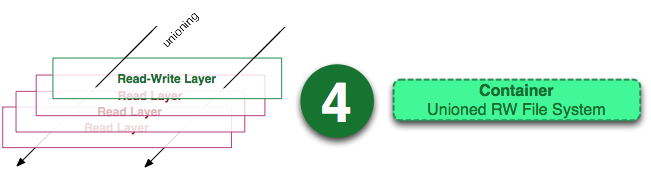

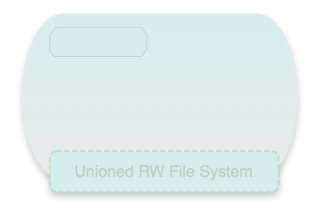

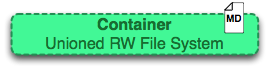

Определение контейнера (Container)

Контейнер можно назвать «сущностью» стека слоев с верхним слоем для записи.

На изображении выше показано примерно то же самое, что на изображении про образ, кроме того, что верхний слой доступен для записи. Вы могли заметить, что это определение ничего не говорит о том, запущен контейнер или нет и это неспроста. Разделение контейнеров на запущенные и не запущенные устранило путаницу в моем понимании.

Контейнер определяет лишь слой для записи наверху образа (стека слоев для чтения). Он не запущен.

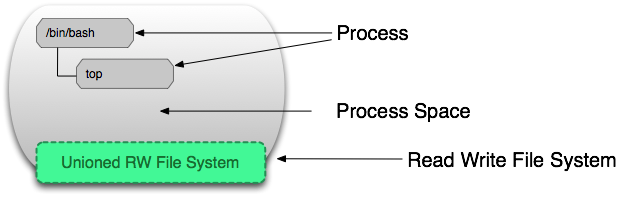

Определение запущенного контейнера

Запущенный контейнер — это «общий вид» контейнера для чтения-записи и его изолированного пространства процессов. Ниже изображен контейнер в своем пространстве процессов.

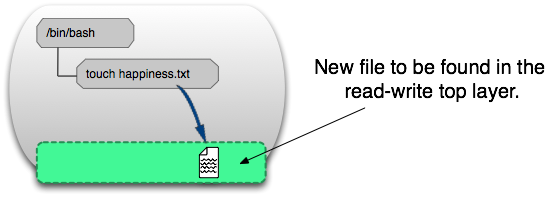

Изоляция файловой системы обеспечивается технологиями уровня ядра, cgroups, namespaces и другие, позволяют докеру быть такой перспективной технологией. Процессы в пространстве контейнера могут изменять, удалять или создавать файлы, которые сохраняются в верхнем слое для записи. Смотрите изображение:

Чтобы проверить это, выполните команду на хост-машине:

Вы можете найти новый файл в слое для записи на хост-машине, даже если контейнер не запущен.

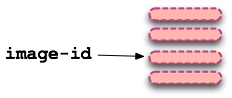

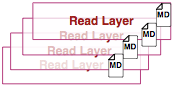

Определение слоя образа (Image layer)

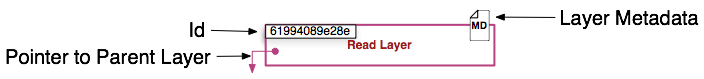

Наконец, мы определим слой образа. Изображение ниже представляет слой образа и дает нам понять, что слой — это не просто изменения в файловой системе.

Метаданные — дополнительная информация о слое, которая позволяет докеру сохранять информацию во время выполнения и во время сборки. Оба вида слоев (для чтения и для записи) содержат метаданные.

Кроме того, как мы уже упоминали раньше, каждый слой содержит указатель на родителя, используя id (на изображении родительские слои внизу). Если слой не указывает на родительский слой, значит он наверху стека.

Расположение метаданных

На данный момент (я понимаю, что разработчики docker могут позже сменить реализацию), метаданные слоев образов (для чтения) находятся в файле с именем «json» в папке /var/lib/docker/graph/id_слоя:

где «e809f156dc985. » — урезанный id слоя.

Свяжем все вместе

Теперь, давайте посмотрим на команды, иллюстрированные понятными картинками.

docker create

До:

После:

docker start

До:

После:

Команда ‘docker start’ создает пространство процессов вокруг слоев контейнера. Может быть только одно пространство процессов на один контейнер.

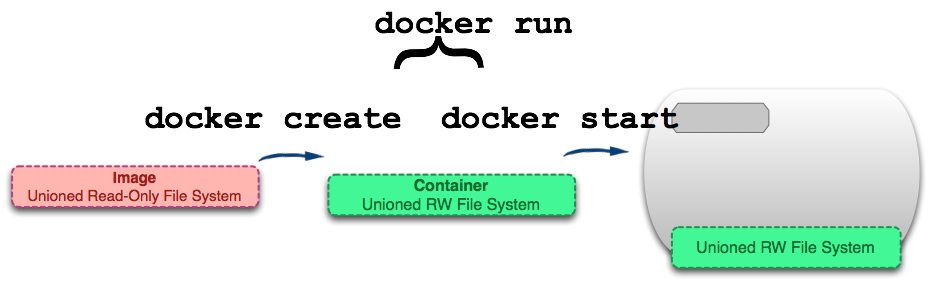

docker run

До:

После:

Один из первых вопросов, который задают люди (я тоже задавал): «В чем разница между ‘docker start’ и ‘docker run’?» Одна из первоначальных целей этого поста — объяснить эту тонкость.

Как мы видим, команда ‘docker run’ находит образ, создает контейнер поверх него и запускает контейнер. Это сделано для удобства и скрывает детали двух команд.

Продолжая сравнение с освоением Git, я скажу, что ‘docker run’ очень похожа на ‘git pull’. Так же, как и ‘git pull’ (который объединяет ‘git fetch’ и ‘git merge’), команда ‘docker run’ объединяет две команды, которые могут использоваться и независимо. Это удобно, но поначалу может ввести в заблуждение.

docker ps

Команда ‘docker ps’ выводит список запущенных контейнеров на вашей хост-машине. Важно понимать, что в этот список входят только запущенные контейнеры, не запущенные контейнеры скрыты. Чтобы посмотреть список всех контейнеров, нужно использовать следующую команду.

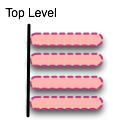

docker images

Команда ‘docker images’ выводит список образов верхнего уровня (top-level images). Фактически, ничего особенного не отличает образ от слоя для чтения. Только те образы, которые имеют присоединенные контейнеры или те, что были получены с помощью pull, считаются образами верхнего уровня. Это различие нужно для удобства, так как за каждым образом верхнего уровня может быть множество слоев.

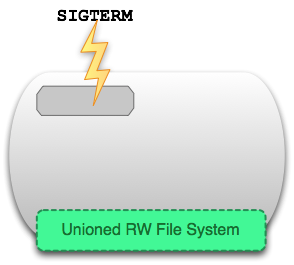

docker stop

До:

После:

Команда ‘docker stop’ посылает сигнал SIGTERM запущенному контейнеру, что мягко останавливает все процессы в пространстве процессов контейнера. В результате мы получаем не запущенный контейнер.

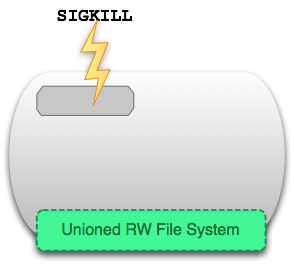

docker kill

До:

После:

Команда ‘docker kill’ посылает сигнал SIGKILL, что немедленно завершает все процессы в текущем контейнере. Это почти то же самое, что нажать Ctrl+\ в терминале.

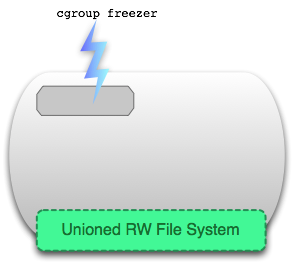

docker pause

До:

После:

В отличие от ‘docker stop’ и ‘docker kill’, которые посылают настоящие UNIX сигналы процессам контейнера, команда ‘docker pause’ используют специальную возможность cgroups для заморозки запущенного пространства процессов. Подробности можно прочитать здесь, если вкратце, отправки сигнала Ctrl+Z (SIGTSTP) не достаточно, чтобы заморозить все процессы в пространстве контейнера.

docker rm

До:

После:

Команда ‘docker rm’ удаляет слой для записи, который определяет контейнер на хост-системе. Должна быть запущена на остановленном контейнерах. Удаляет файлы.

docker rmi

До:

После:

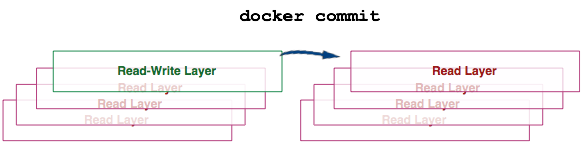

docker commit

До:

После:

Команда ‘docker commit’ берет верхний уровень контейнера, тот, что для записи и превращает его в слой для чтения. Это фактически превращает контейнер (вне зависимости от того, запущен ли он) в неизменяемый образ.

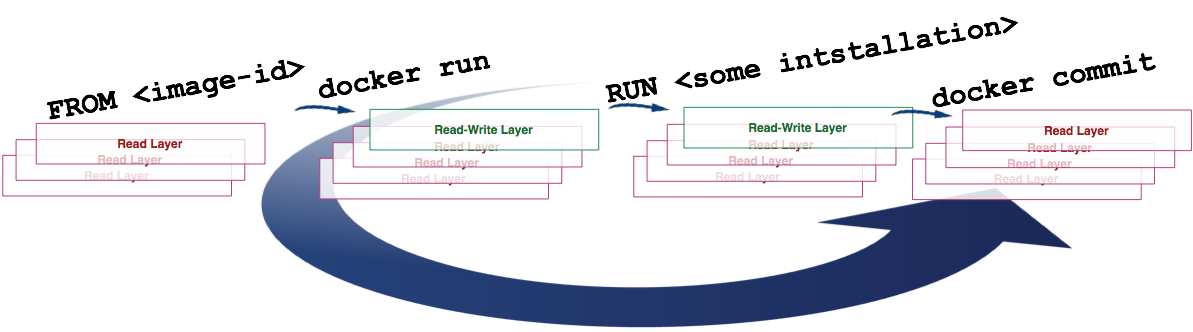

docker build

До:

Dockerfile

После:

Со многими другими слоями.

Команда ‘docker build’ интересна тем, что запускает целый ряд команд:

На изображении выше мы видим, как команда build использует значение инструкции FROM из файла Dockerfile как базовый образ после чего:

1) запускает контейнер (create и start)

2) изменяет слой для записи

3) делает commit

На каждой итерации создается новый слой. При исполнении ‘docker build’ может создаваться множество слоев.

docker exec

До:

После:

Команда ‘docker exec’ применяется к запущенному контейнеру, запускает новый процесс внутри пространства процессов контейнера.

docker inspect |

До:

После:

Команда ‘docker inspect’ получает метаданные верхнего слоя контейнера или образа.

docker save

До:

После:

Команда ‘docker save’ создает один файл, который может быть использован для импорта образа на другую хост-систему. В отличие от команды ‘export’, она сохраняет все слои и их метаданные. Может быть применена только к образам.

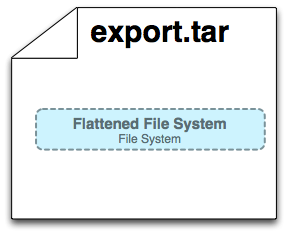

docker export

До:

После:

Команда ‘docker export’ создает tar архив с содержимым файлов контейнера, в результате получается папка, пригодная для использования вне docker. Команда убирает слои и их метаданные. Может быть применена только для контейнеров.

docker history

До:

После:

Команда ‘docker history’ принимает и рекурсивно выводит список всех слоев-родителей образа (которые тоже могут быть образами)

Creating a Docker Image for your Application

This is the recommended workflow for creating your own Docker image for your application:

Write the Dockerfile

Docker builds images automatically by reading the instructions from a Dockerfile. It is a text file that contains all commands needed to build a given image.

In the following example, we will build and run the Hello ZED tutorial application in a container.

First let’s prepare the host with the code:

Open a text editor and create a new Dockerfile with the following content:

We provide some extra arguments to CMake to ensure that CMake and GCC can find all the required CUDA libraries. We also tell the compiler to allow linking even if there are undefined symbols from libraries such as nvcuvid that are not yet available. These will be available at runtime using NVIDIA container toolkit.

For more information on writing dockerfiles, check Dockerfile reference documentation.

Build your Docker Image

Now that you have created a Dockerfile, it’s time to build your image using the docker build command.

Tips: On NVIDIA Jetson, we recommend building your Jetson Docker Container on x86 host, and running it on the target Jetson to avoid long compilation time on boards such as Jetson Nano.

Test your Image

Let’s start the container based on the new image we created using the docker run command.

On Jetson or older Docker versions, use these arguments:

You should now see the output of the terminal.

Optimize your Image Size

Docker images can get very large and become a problem when pulling over the network or pushing on devices with limited storage (such as Jetson Nano). Here are a few advice to keep your image size small:

Minimize the number of RUN commands. Each command adds a layer to the image, so consolidating the number of RUN can reduce the number of layers in the final image. Note that layers are designed to be reusable, and will not be pushed or pulled if they didn’t change.

Remove tarballs or other archive files that were copied during the installation. Each layer is added on top of the others, so files that were not removed in a given RUN step will be present in the final image even if they are removed in a later RUN step.

Similarly, clean package lists that are downloaded with apt-get update by removing /var/lib/apt/lists/* in the same RUN step.

Create separate images for development and production. Production images should not include all of the libraries and dependencies pulled in by the build.

Use multi-stage builds (see Docker docs) and push only your prod image.

Host your Docker Image

Now that you have created your image, you need to share it on a registry so it can be downloaded and run on any destination machine. A registry is a stateless, server-side application that stores and lets you distribute Docker images.

Use Docker Hub Registry

By default, Docker provides an official free-to-use registry, DockerHub, where you can push and pull your images. For example at Stereolabs, the ZED SDK Docker images are built automatically by a public Gitlab CI job and pushed to Stereolabs DockerHub repository.

There are situations where you will not want your image to be publicly available. In this case, you need to create your own private Docker Registry. You can get private repos from Docker, or from many other third-party providers.

Use Local Registry Server

For local development, if your host and target machines are on the same network, you can setup a local registry server and push your images there.

For more information on deploying your own registry server, please refer to Docker docs.

Save and Load Images as Files

Lastly it is also possible to export and load your Docker image as a file.

To export a Docker image simply use :

On the destination machine, simply load the Docker image using :

Next Steps

At this point, you have successfully created a Docker image for the “Hello ZED” application and learnt how to host and share it.

Let’s learn now how to Run and Build Jetson Docker Containers on x86 to speed up development and deployment on embedded boards such as Jetson Nano, without needing cross compilation.

Image-building best practices

Estimated reading time: 9 minutes

Security scanning

When you have built an image, it is a good practice to scan it for security vulnerabilities using the docker scan command. Docker has partnered with Snyk to provide the vulnerability scanning service.

For example, to scan the getting-started image you created earlier in the tutorial, you can just type

The scan uses a constantly updated database of vulnerabilities, so the output you see will vary as new vulnerabilities are discovered, but it might look something like this:

The output lists the type of vulnerability, a URL to learn more, and importantly which version of the relevant library fixes the vulnerability.

There are several other options, which you can read about in the docker scan documentation.

As well as scanning your newly built image on the command line, you can also configure Docker Hub to scan all newly pushed images automatically, and you can then see the results in both Docker Hub and Docker Desktop.

Image layering

Did you know that you can look at what makes up an image? Using the docker image history command, you can see the command that was used to create each layer within an image.

Use the docker image history command to see the layers in the getting-started image you created earlier in the tutorial.

You should get output that looks something like this (dates/IDs may be different).

Each of the lines represents a layer in the image. The display here shows the base at the bottom with the newest layer at the top. Using this, you can also quickly see the size of each layer, helping diagnose large images.

Layer caching

Now that you’ve seen the layering in action, there’s an important lesson to learn to help decrease build times for your container images.

Once a layer changes, all downstream layers have to be recreated as well

Let’s look at the Dockerfile we were using one more time.

Going back to the image history output, we see that each command in the Dockerfile becomes a new layer in the image. You might remember that when we made a change to the image, the yarn dependencies had to be reinstalled. Is there a way to fix this? It doesn’t make much sense to ship around the same dependencies every time we build, right?

Update the Dockerfile to copy in the package.json first, install dependencies, and then copy everything else in.

.dockerignore files are an easy way to selectively copy only image relevant files. You can read more about this here. In this case, the node_modules folder should be omitted in the second COPY step because otherwise, it would possibly overwrite files which were created by the command in the RUN step. For further details on why this is recommended for Node.js applications and other best practices, have a look at their guide on Dockerizing a Node.js web app.

You should see output like this.

You’ll see that all layers were rebuilt. Perfectly fine since we changed the Dockerfile quite a bit.

Now, make a change to the src/static/index.html file (like change the to say “The Awesome Todo App”).

Multi-stage builds

While we’re not going to dive into it too much in this tutorial, multi-stage builds are an incredibly powerful tool to help use multiple stages to create an image. There are several advantages for them:

Maven/Tomcat example

When building Java-based applications, a JDK is needed to compile the source code to Java bytecode. However, that JDK isn’t needed in production. Also, you might be using tools like Maven or Gradle to help build the app. Those also aren’t needed in our final image. Multi-stage builds help.

React example

When building React applications, we need a Node environment to compile the JS code (typically JSX), SASS stylesheets, and more into static HTML, JS, and CSS. If we aren’t doing server-side rendering, we don’t even need a Node environment for our production build. Why not ship the static resources in a static nginx container?

Here, we are using a node:12 image to perform the build (maximizing layer caching) and then copying the output into an nginx container. Cool, huh?

Recap

By understanding a little bit about how images are structured, we can build images faster and ship fewer changes. Scanning images gives us confidence that the containers we are running and distributing are secure. Multi-stage builds also help us reduce overall image size and increase final container security by separating build-time dependencies from runtime dependencies.

Build your Node image

Estimated reading time: 15 minutes

Prerequisites

Work through the orientation and setup in Get started Part 1 to understand Docker concepts.

Enable BuildKit

Before we start building images, ensure you have enabled BuildKit on your machine. BuildKit allows you to build Docker images efficiently. For more information, see Building images with BuildKit.

BuildKit is enabled by default for all users on Docker Desktop. If you have installed Docker Desktop, you don’t have to manually enable BuildKit. If you are running Docker on Linux, you can enable BuildKit either by using an environment variable or by making BuildKit the default setting.

To set the BuildKit environment variable when running the docker build command, run:

To enable docker BuildKit by default, set daemon configuration in /etc/docker/daemon.json feature to true and restart the daemon. If the daemon.json file doesn’t exist, create new file called daemon.json and then add the following to the file.

Restart the Docker daemon.

Overview

To complete this tutorial, you need the following:

Sample application

Let’s create a simple Node.js application that we can use as our example. Create a directory in your local machine named node-docker and follow the steps below to create a simple REST API.

Now, let’s add some code to handle our REST requests. We’ll use a mock server so we can focus on Dockerizing the application.

Open this working directory in your IDE and add the following code into the server.js file.

The mocking server is called Ronin.js and will listen on port 8000 by default. You can make POST requests to the root (/) endpoint and any JSON structure you send to the server will be saved in memory. You can also send GET requests to the same endpoint and receive an array of JSON objects that you have previously POSTed.

Test the application

Let’s start our application and make sure it’s running properly. Open your terminal and navigate to your working directory you created.

To test that the application is working properly, we’ll first POST some JSON to the API and then make a GET request to see that the data has been saved. Open a new terminal and run the following curl commands:

Switch back to the terminal where our server is running. You should now see the following requests in the server logs.

Great! We verified that the application works. At this stage, you’ve completed testing the server script locally.

Press CTRL-c from within the terminal session where the server is running to stop it.

We will now continue to build and run the application in Docker.

Create a Dockerfile for Node.js

A Dockerfile is a text document that contains the instructions to assemble a Docker image. When we tell Docker to build our image by executing the docker build command, Docker reads these instructions, executes them, and creates a Docker image as a result.

Let’s walk through the process of creating a Dockerfile for our application. In the root of your project, create a file named Dockerfile and open this file in your text editor.

What to name your Dockerfile?

The default filename to use for a Dockerfile is Dockerfile (without a file- extension). Using the default name allows you to run the docker build command without having to specify additional command flags.

We recommend using the default ( Dockerfile ) for your project’s primary Dockerfile, which is what we’ll use for most examples in this guide.

The first line to add to a Dockerfile is a # syntax parser directive. While optional, this directive instructs the Docker builder what syntax to use when parsing the Dockerfile, and allows older Docker versions with BuildKit enabled to upgrade the parser before starting the build. Parser directives must appear before any other comment, whitespace, or Dockerfile instruction in your Dockerfile, and should be the first line in Dockerfiles.

Next, we need to add a line in our Dockerfile that tells Docker what base image we would like to use for our application.

Docker images can be inherited from other images. Therefore, instead of creating our own base image, we’ll use the official Node.js image that already has all the tools and packages that we need to run a Node.js application. You can think of this in the same way you would think about class inheritance in object oriented programming. For example, if we were able to create Docker images in JavaScript, we might write something like the following.

class MyImage extends NodeBaseImage <>

In the same way, when we use the FROM command, we tell Docker to include in our image all the functionality from the node:12.18.1 image.

If you want to learn more about creating your own base images, see Creating base images.

To make things easier when running the rest of our commands, let’s create a working directory. This instructs Docker to use this path as the default location for all subsequent commands. This way we do not have to type out full file paths but can use relative paths based on the working directory.

Usually the very first thing you do once you’ve downloaded a project written in Node.js is to install npm packages. This ensures that your application has all its dependencies installed into the node_modules directory where the Node runtime will be able to find them.

Note that, rather than copying the entire working directory, we are only copying the package.json file. This allows us to take advantage of cached Docker layers. Once we have our files inside the image, we can use the RUN command to execute the command npm install. This works exactly the same as if we were running npm install locally on our machine, but this time these Node modules will be installed into the node_modules directory inside our image.

At this point, we have an image that is based on node version 12.18.1 and we have installed our dependencies. The next thing we need to do is to add our source code into the image. We’ll use the COPY command just like we did with our package.json files above.

The COPY command takes all the files located in the current directory and copies them into the image. Now, all we have to do is to tell Docker what command we want to run when our image is run inside of a container. We do this with the CMD command.

Here’s the complete Dockerfile.

Build image

Now that we’ve created our Dockerfile, let’s build our image. To do this, we use the docker build command. The docker build command builds Docker images from a Dockerfile and a “context”. A build’s context is the set of files located in the specified PATH or URL. The Docker build process can access any of the files located in the context.

Let’s build our first Docker image.

View local images

To see a list of images we have on our local machine, we have two options. One is to use the CLI and the other is to use Docker Desktop. Since we are currently working in the terminal let’s take a look at listing images with the CLI.

To list images, simply run the images command.

Your exact output may vary, but you should see the image we just built node-docker:latest with the latest tag.

Tag images

An image name is made up of slash-separated name components. Name components may contain lowercase letters, digits and separators. A separator is defined as a period, one or two underscores, or one or more dashes. A name component may not start or end with a separator.

An image is made up of a manifest and a list of layers. In simple terms, a “tag” points to a combination of these artifacts. You can have multiple tags for an image. Let’s create a second tag for the image we built and take a look at its layers.

To create a new tag for the image we built above, run the following command.

The Docker tag command creates a new tag for an image. It does not create a new image. The tag points to the same image and is just another way to reference the image.

Now run the docker images command to see a list of our local images.

Let’s remove the tag that we just created. To do this, we’ll use the rmi command. The rmi command stands for “remove image”.

Notice that the response from Docker tells us that the image has not been removed but only “untagged”. Verify this by running the images command.

Our image that was tagged with :v1.0.0 has been removed but we still have the node-docker:latest tag available on our machine.

Next steps

In this module, we took a look at setting up our example Node application that we will use for the rest of the tutorial. We also created a Dockerfile that we used to build our Docker image. Then, we took a look at tagging our images and removing images. In the next module, we’ll take a look at how to:

Feedback

Help us improve this topic by providing your feedback. Let us know what you think by creating an issue in the Docker Docs GitHub repository. Alternatively, create a PR to suggest updates.

How to make docker image

Copy raw contents

Build your own images

Docker images are the basis of containers. Each time you’ve used docker run you told it which image you wanted. In the previous sections of the guide you used Docker images that already exist, for example the ubuntu image and the training/webapp image.

You also discovered that Docker stores downloaded images on the Docker host. If an image isn’t already present on the host then it’ll be downloaded from a registry: by default the Docker Hub Registry.

In this section you’re going to explore Docker images a bit more including:

Listing images on the host

Let’s start with listing the images you have locally on our host. You can do this using the docker images command like so:

You can see the images you’ve previously used in the user guide. Each has been downloaded from Docker Hub when you launched a container using that image. When you list images, you get three crucial pieces of information in the listing.

Tip: You can use a third-party dockviz tool or the Image layers site to display

visualizations of image data.

A repository potentially holds multiple variants of an image. In the case of our ubuntu image you can see multiple variants covering Ubuntu 10.04, 12.04, 12.10, 13.04, 13.10 and 14.04. Each variant is identified by a tag and you can refer to a tagged image like so:

So when you run a container you refer to a tagged image like so:

If instead you wanted to run an Ubuntu 12.04 image you’d use:

Getting a new image

So how do you get new images? Well Docker will automatically download any image you use that isn’t already present on the Docker host. But this can potentially add some time to the launch of a container. If you want to pre-load an image you can download it using the docker pull command. Suppose you’d like to download the centos image.

You can see that each layer of the image has been pulled down and now you can run a container from this image and you won’t have to wait to download the image.

One of the features of Docker is that a lot of people have created Docker images for a variety of purposes. Many of these have been uploaded to Docker Hub. You can search these images on the Docker Hub website.

Pulling our image

The team can now use this image by running their own containers.

Creating our own images

The team has found the training/sinatra image pretty useful but it’s not quite what they need and you need to make some changes to it. There are two ways you can update and create images.

Updating and committing an image

To update an image you first need to create a container from the image you’d like to update.

Inside our running container first let’s update Ruby:

Now let’s add the json gem.

Once this has completed let’s exit our container using the exit command.

Now you have a container with the change you want to make. You can then commit a copy of this container to an image using the docker commit command.

You’ve also specified the container you want to create this new image from, 0b2616b0e5a8 (the ID you recorded earlier) and you’ve specified a target for the image:

You can then look at our new ouruser/sinatra image using the docker images command.

To use our new image to create a container you can then:

Building an image from a Dockerfile

To do this you create a Dockerfile that contains a set of instructions that tell Docker how to build our image.

Each instruction creates a new layer of the image. Try a simple example now for building your own Sinatra image for your fictitious development team.

Examine what your Dockerfile does. Each instruction prefixes a statement and is capitalized.

Note: You use # to indicate a comment

The first instruction FROM tells Docker what the source of our image is, in this case you’re basing our new image on an Ubuntu 14.04 image. The instruction uses the MAINTAINER instruction to specify who maintains the new image.

Lastly, you’ve specified two RUN instructions. A RUN instruction executes a command inside the image, for example installing a package. Here you’re updating our APT cache, installing Ruby and RubyGems and then installing the Sinatra gem.

Now let’s take our Dockerfile and use the docker build command to build an image.

Now you can see the build process at work. The first thing Docker does is upload the build context: basically the contents of the directory you’re building in. This is done because the Docker daemon does the actual build of the image and it needs the local context to do it.

Note: An image can’t have more than 127 layers regardless of the storage driver. This limitation is set globally to encourage optimization of the overall size of images.

You can then create a container from our new image.

Setting tags on an image

You can also add a tag to an existing image after you commit or build it. We can do this using the docker tag command. Now, add a new tag to your ouruser/sinatra image.

Now, see your new tag using the docker images command.

When pushing or pulling to a 2.0 registry, the push or pull command output includes the image digest. You can pull using a digest value.

Push an image to Docker Hub

Once you’ve built or created a new image you can push it to Docker Hub using the docker push command. This allows you to share it with others, either publicly, or push it into a private repository.

Remove an image from the host

You can also remove images on your Docker host in a way similar to containers using the docker rmi command.

Delete the training/sinatra image as you don’t need it anymore.

Note: To remove an image from the host, please make sure that there are no containers actively based on it.

Until now you’ve seen how to build individual applications inside Docker containers. Now learn how to build whole application stacks with Docker by networking together multiple Docker containers.