How to normalize data

How to Normalize Data Between 0 and 1

To normalize the values in a dataset to be between 0 and 1, you can use the following formula:

zi = (xi – min(x)) / (max(x) – min(x))
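As a minimal sketch, the formula above can be written as a small Python function. The function name and the sample values are made up for illustration; they are not part of the original tutorial.

```python
def min_max_normalize(values):
    """Rescale values to [0, 1] using (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # The range is zero, so the formula would divide by zero.
        raise ValueError("all values are equal; the range is zero")
    return [(x - lo) / (hi - lo) for x in values]


# Hypothetical sample data, chosen only to keep the arithmetic simple.
sample = [2, 4, 6, 10]
normalized = min_max_normalize(sample)
print(normalized)  # [0.0, 0.25, 0.5, 1.0]
```

The guard for a zero range is a design choice of this sketch; a constant column has no spread to rescale, so raising an error makes that case visible.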

For example, suppose we have a dataset whose minimum value is 13 and whose maximum value is 71.

To normalize the first value of 13, we would apply the formula shared earlier:

zi = (13 – 13) / (71 – 13) = 0

To normalize the second value of 16, we would use the same formula:

zi = (16 – 13) / (71 – 13) = 0.0517

To normalize the third value of 19, we would use the same formula:

zi = (19 – 13) / (71 – 13) = 0.1034

We can use this exact same formula to normalize each value in the original dataset to be between 0 and 1.

Using this normalization method, the following statements will always be true: the minimum value in the dataset is mapped to 0, and the maximum value is mapped to 1.

When to Normalize Data

Often we normalize variables when performing some type of analysis in which we have multiple variables that are measured on different scales and we want each of the variables to have the same range.

This prevents one variable from being overly influential, especially if it’s measured in different units (e.g. if one variable is measured in inches and another is measured in yards).

It’s also worth noting that we used a method known as min-max normalization in this tutorial to normalize the data values.

The two most common normalization methods are as follows:

1. Min-Max Normalization

2. Mean Normalization

2 Easy Ways to Normalize data in Python

In this tutorial, we are going to learn about how to normalize data in Python. While normalizing we change the scale of the data. Data is most commonly rescaled to fall between 0-1.

Why Do We Need To Normalize Data in Python?

Machine learning algorithms tend to perform better or converge faster when the different features (variables) are on a smaller scale. Therefore it is common practice to normalize the data before training machine learning models on it.

Normalization also makes the training process less sensitive to the scale of the features. This results in getting better coefficients after training.

This process of making features more suitable for training by rescaling is called feature scaling.

The formula for normalization is given below:

x_normalized = (x – min(x)) / (max(x) – min(x))

We subtract the minimum value from each entry and then divide the result by the range, where the range is the difference between the maximum value and the minimum value.

Steps to Normalize Data in Python

We are going to discuss two different ways to normalize data in python.

The first one is by using the method ‘normalize()’ under sklearn.

Using normalize() from sklearn

Let’s start by importing preprocessing from sklearn.

Now, let’s create an array using Numpy.

Now we can use the normalize() method on the array. This method normalizes data along a row. Let’s see the method in action.

Complete code

Here’s the complete code from this section :

Output :

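We can see that all the values are now between 0 and 1. Note that normalize() does not apply the min-max formula shown earlier: by default it divides each row by its L2 (Euclidean) norm, so every row ends up with unit length, and non-negative inputs fall between 0 and 1.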
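For illustration, here is a rough plain-Python sketch of row-wise L2 scaling, which is the scaling normalize() applies with its default settings. The helper name and the sample row are hypothetical, and the sketch deliberately avoids calling sklearn so that the arithmetic stays visible.

```python
import math


def l2_normalize_row(row):
    """Divide every entry by the row's Euclidean (L2) norm."""
    norm = math.sqrt(sum(x * x for x in row))
    if norm == 0:
        return list(row)  # choice made in this sketch: keep an all-zero row as is
    return [x / norm for x in row]


row = [3.0, 4.0]  # made-up sample; its L2 norm is 5
unit_row = l2_normalize_row(row)
print(unit_row)  # [0.6, 0.8]
```

After scaling, the squares of the entries sum to 1, which is why the result is described as having unit length rather than a fixed minimum and maximum.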

You can also normalize columns in a dataset using this method. Let’s see how to do that next.

Normalize columns in a dataset using normalize()

Since normalize() only normalizes values along rows, we need to convert the column into an array before we apply the method.

To demonstrate we are going to use the California Housing dataset.

Let’s start by importing the dataset.

Next, we need to pick a column and convert it into an array. We are going to use the ‘total_bedrooms’ column.

How to Normalize a Dataset Without Converting Columns to Array?

Let’s see what happens when we try to normalize a dataset without converting features into arrays for processing.

Here the values are normalized along the rows, which can be very unintuitive. Normalizing along rows means that each individual sample is normalized instead of the features.

However, you can specify the axis while calling the method to normalize along a feature (column).

The value of axis parameter is set to 1 by default. If we change the value to 0, the process of normalization happens along a column.

You can see that the column for total_bedrooms in the output matches the one we got above after converting it into an array and then normalizing.
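The difference between the two axis values can be sketched without sklearn. The snippet below is an assumption-laden illustration: normalize_table is a hypothetical helper that mimics the axis convention described above (1 for rows, 0 for columns) on a tiny made-up table.

```python
import math


def l2_normalize(vector):
    """Scale a sequence of numbers to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


def normalize_table(rows, axis=1):
    """axis=1 scales each row (sample); axis=0 scales each column (feature)."""
    if axis == 1:
        return [l2_normalize(r) for r in rows]
    columns = [l2_normalize(col) for col in zip(*rows)]  # transpose, then scale
    return [list(r) for r in zip(*columns)]              # transpose back


table = [[3.0, 1.0],
         [4.0, 0.0]]  # made-up values: two samples, two features
by_row = normalize_table(table, axis=1)
by_col = normalize_table(table, axis=0)
print(by_col)  # [[0.6, 1.0], [0.8, 0.0]]
```

With axis=0 the first column [3, 4] becomes [0.6, 0.8] regardless of what the other column holds, which is usually what is wanted when each column is a feature.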

Using MinMaxScaler() to Normalize Data in Python

Sklearn provides another option when it comes to normalizing data: MinMaxScaler.

This is a more popular choice for normalizing datasets.

Here’s the code for normalizing the housing dataset using MinMaxScaler :

You can see that the values in the output are between (0 and 1).

MinMaxScaler also gives you the option to select feature range. By default, the range is set to (0,1). Let’s see how to change the range to (0,2).

The values in the output are now between (0,2).
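To make the effect of the feature range concrete, here is a small sketch of min-max scaling to an arbitrary interval. It is written in plain Python rather than with MinMaxScaler; the function name, the parameter name feature_range (borrowed from the text above) and the sample values are assumptions for illustration.

```python
def min_max_scale(values, feature_range=(0.0, 1.0)):
    """Map values linearly so that min -> range start and max -> range end."""
    lo, hi = min(values), max(values)
    start, end = feature_range
    if hi == lo:
        raise ValueError("all values are equal; the range is zero")
    return [start + (x - lo) / (hi - lo) * (end - start) for x in values]


bedrooms = [1.0, 2.0, 3.0, 5.0]  # made-up column values
scaled = min_max_scale(bedrooms, feature_range=(0.0, 2.0))
print(scaled)  # [0.0, 0.5, 1.0, 2.0]
```

The values are first mapped to 0..1 and then stretched and shifted, so changing the range never changes the relative spacing between points.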

Conclusion

These are two ways to normalize data in Python using sklearn: the normalize() function and MinMaxScaler. Hope you had fun learning with us!

How, When, and Why Should You Normalize / Standardize / Rescale Your Data?

Last Updated on May 28, 2020 by Editorial Team

Author(s): Swetha Lakshmanan

Before diving into this topic, let’s first start with some definitions.

“Rescaling” a vector means to add or subtract a constant and then multiply or divide by a constant, as you would do to change the units of measurement of the data, for example, to convert a temperature from Celsius to Fahrenheit.

“Normalizing” a vector most often means dividing by a norm of the vector. It also often refers to rescaling by the minimum and range of the vector, to make all the elements lie between 0 and 1 thus bringing all the values of numeric columns in the dataset to a common scale.

“Standardizing” a vector most often means subtracting a measure of location and dividing by a measure of scale. For example, if the vector contains random values with a Gaussian distribution, you might subtract the mean and divide by the standard deviation, thereby obtaining a “standard normal” random variable with mean 0 and standard deviation 1.

After reading this post you will know:

Let’s get started.

Why Should You Standardize / Normalize Variables:

Standardization:

Standardizing the features so that they are centered around 0 with a standard deviation of 1 is important when we compare measurements that have different units. Variables that are measured at different scales do not contribute equally to the analysis and might end up creating a bias.

For example, a variable that ranges between 0 and 1000 will outweigh a variable that ranges between 0 and 1. Using these variables without standardization effectively gives the variable with the larger range a weight of 1000 in the analysis. Transforming the data to comparable scales can prevent this problem. Typical data standardization procedures equalize the range and/or data variability.

Normalization:

Similarly, the goal of normalization is to change the values of numeric columns in the dataset to a common scale, without distorting differences in the ranges of values. For machine learning, not every dataset requires normalization. It is required only when features have different ranges.

For example, consider a data set containing two features, age (x1) and income (x2), where age ranges from 0–100, while income ranges from 0–100,000 and higher. Income is about 1,000 times larger than age, so these two features are in very different ranges. When we do further analysis, like multivariate linear regression, the income attribute will intrinsically influence the result more due to its larger values. But this doesn’t necessarily mean it is more important as a predictor. So we normalize the data to bring all the variables to the same range.

When Should You Use Normalization And Standardization:

Normalization is a good technique to use when you do not know the distribution of your data or when you know the distribution is not Gaussian (a bell curve). Normalization is useful when your data has varying scales and the algorithm you are using does not make assumptions about the distribution of your data, such as k-nearest neighbors and artificial neural networks.

Standardization assumes that your data has a Gaussian (bell curve) distribution. This does not strictly have to be true, but the technique is more effective if your attribute distribution is Gaussian. Standardization is useful when your data has varying scales and the algorithm you are using does make assumptions about your data having a Gaussian distribution, such as linear regression, logistic regression, and linear discriminant analysis.

Dataset:

I have used the Lending Club Loan Dataset from Kaggle to demonstrate examples in this article.

Importing Libraries:

Importing dataset:

Let’s import three columns — Loan amount, int_rate and installment and the first 30000 rows in the data set (to reduce the computation time)

If you import the entire data, there will be missing values in some columns. You can simply drop the rows with missing values using the pandas dropna() method.

Basic Analysis:

Let’s now analyze the basic statistical values of our dataset.

The different variables present different value ranges, therefore different magnitudes. Not only the minimum and maximum values are different, but they also spread over ranges of different widths.

Standardization (StandardScaler):

As we discussed earlier, standardization (or Z-score normalization) means centering the variable at zero and standardizing the variance at 1. The procedure involves subtracting the mean from each observation and then dividing by the standard deviation:

z = (x – μ) / σ

The result of standardization is that the features will be rescaled so that they have the mean and standard deviation of a standard normal distribution, that is, μ = 0 and σ = 1,

where μ is the mean (average) and σ is the standard deviation from the mean.

CODE:

StandardScaler from scikit-learn removes the mean and scales the data to unit variance. We can import the StandardScaler class from scikit-learn and apply it to our dataset.

Now let’s check the mean and standard deviation values

As expected, the mean of each variable is now around zero and the standard deviation is 1. Thus, all the variables are on a comparable scale.

However, the minimum and maximum values vary according to how spread out each variable was to begin with, and they are highly influenced by the presence of outliers.
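The standardization step described above can be sketched in plain Python with the standard library. This is only an illustration under stated assumptions: the function name and the sample values are made up, and the population standard deviation is used, which is also what StandardScaler divides by.

```python
import statistics


def standardize(values):
    """Return z-scores: (x - mean) / population standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # population std (divides by n, not n - 1)
    if sigma == 0:
        raise ValueError("standard deviation is zero; nothing to scale")
    return [(x - mu) / sigma for x in values]


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # made-up sample: mean 5, std 2
z = standardize(data)
print(z)
print(min(z), max(z))  # the bounds are not fixed; they depend on the data
```

Unlike min-max scaling, nothing forces the result into a fixed interval, which matches the observation that the minimum and maximum still differ between variables after standardization.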

Normalization (MinMaxScaler):

In this approach, the data is scaled to a fixed range, usually 0 to 1.

In contrast to standardization, the cost of having this bounded range is that we end up with smaller standard deviations. Because the range is defined by the minimum and maximum values, MinMaxScaler is sensitive to outliers: a single extreme value squeezes the remaining values into a narrow part of the range.

Min-max scaling is typically done via the following equation:

x_scaled = (x – min(x)) / (max(x) – min(x))

CODE:

Let’s import MinMaxScaler from scikit-learn and apply it to our dataset.

Now let’s check the mean and standard deviation values.

After MinMaxScaling, the distributions are not centered at zero and the standard deviation is not 1.

But the minimum and maximum values are standardized across variables, different from what occurs with standardization.

RobustScaler (scaling to median and quantiles):

Scaling using the median and quantiles consists of subtracting the median from all the observations and then dividing by the interquartile range. It scales features using statistics that are robust to outliers.

The interquartile range (IQR) is the difference between the 75th and 25th percentiles:

IQR = 75th percentile – 25th percentile

The equation to calculate scaled values:

x_scaled = (x – median(x)) / IQR

CODE:

First, import RobustScaler from scikit-learn.

Now check the mean and standard deviation values.

As you can see, the distributions are not centered at zero and the standard deviation is not 1.

Nor are the minimum and maximum values set to fixed upper and lower bounds, as they are with MinMaxScaler.
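The median-and-quantile scaling described in this section can be sketched with the standard library alone. The function name and the sample data are hypothetical, and the quartiles are computed with the "inclusive" method of statistics.quantiles, which interpolates linearly between data points; other quartile conventions would give slightly different numbers.

```python
import statistics


def robust_scale(values):
    """Subtract the median and divide by the interquartile range (Q3 - Q1)."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    if iqr == 0:
        raise ValueError("interquartile range is zero; nothing to scale")
    return [(x - median) / iqr for x in values]


data = [1.0, 2.0, 3.0, 4.0, 100.0]  # made-up sample with one outlier
scaled = robust_scale(data)
print(scaled)  # [-1.0, -0.5, 0.0, 0.5, 48.5]
```

The outlier stays far away from the rest after scaling, but it does not distort the scale applied to the ordinary values, which is the sense in which the method is robust.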

I hope you found this article useful. Happy learning!

How, When and Why Should You Normalize/Standardize/Rescale Your Data? was originally published in Towards AI — Multidisciplinary Science Journal on Medium, where people are continuing the conversation by highlighting and responding to this story.

How to normalize data in Excel, Tableau or any analytics tool you use

The concept of data normalization is one of the few concepts that come up over and over again during your work as an analyst. This concept is so critical that without fully understanding its importance and applications, you’ll never succeed as an analyst.

In this post I’m going to cover, in detail, the concept of normalization of data and how to normalize data in Excel and Tableau. The concepts covered in this post will be relevant for a wide range of the analyses you run in the future, no matter the tool or tools you use.

What is data normalization and why do I need to normalize data?

Imagine two farmers, Bob and John.

Bob and John have apple orchards that produce tons of apples each season. Now imagine I told you that Bob produced 10 tons of apples in 2017 compared to John who only produced 6 tons.

Without any additional information you’d have to say that Bob is the more productive farmer but this would be a very naive argument to make.

Now think as an analyst. What information would you need to determine which farmer is more productive?

As an analyst I’d want to understand all the variables involved in the output of these farmers. These variables may include:

Let’s assume that both farmers are located in the same geography but Bob has 1,000 hectares of orchards while John only has 400 hectares. Let’s assume both farmers have an equal number of farm workers in relation to the size of their farms.

We can now say that Bob manages to produce 10 tons from 1,000 hectares of land (1 ton per 100 hectares) while John manages to produce 6 tons from 400 hectares of land (1.5 tons per 100 hectares). John is now clearly the more productive farmer.
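The comparison above is simple arithmetic, and a short sketch makes the normalization step explicit. The tonnage and hectare figures come from the example; the function and variable names are made up.

```python
# (tons produced, hectares farmed) for each farmer, taken from the example above.
farmers = {"Bob": (10, 1000), "John": (6, 400)}


def tons_per_100_hectares(tons, hectares):
    """Normalize raw output by farm size."""
    return tons * 100 / hectares


yields = {name: tons_per_100_hectares(t, h) for name, (t, h) in farmers.items()}
most_productive = max(yields, key=yields.get)
print(yields)           # {'Bob': 1.0, 'John': 1.5}
print(most_productive)  # John
```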

The basic idea behind normalization of data is to bring equality to the numbers in our analyses. If we don’t take inherent unfairness into account and flatten out the variables, we will end up with incorrect results, which could lead to disastrous consequences.

If we were a bank and wanted to give a loan to the more productive farmer, and we didn’t normalize our data, we would have picked the least productive farmer.

The time element in data normalization

Another very common use case for data normalization is adjusting for time. In the example above I mentioned that the output was produced in 2017. If instead of providing a time constraint I mentioned that the tonnage was produced in the "lifetime" of each farmer you’d now need to account for time.

Time is a variable which affects all business metrics to some extent and you’ll need to constantly adjust for time.

Cohort analyses like the one shown in the image below will by their very design, normalize for time but most other data visualizations won’t and you’ll need to do some extra work.

The table above shows a cohort table with each row representing a group of X with each column representing a time period (day, week, month). Each cell when looking down is equal to the cell above it in terms of time.

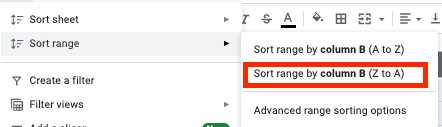

How to normalize data in Excel?

In order to normalize data in Excel you’ll need to familiarize yourself with the formulas I’ve listed below.

The IF function will help you create flags which will act as filters once we start slicing up our data. The DATEDIF function is very helpful in helping us determine how many time periods (days, weeks, months, etc) have passed between two dates. The AND function will save you a bunch of time building flags which take into account 2 or more helper columns.

Let’s take a look at an example and how these formulas will become critical in helping us normalize data in Excel.

Let’s imagine we have a data set like the one shown above. The data contains info on the amount of kilograms of apples that are harvested each day grouped by farmer and farm.

Let’s say you’re tasked with calculating which farmer was more productive in the first 30 days of the harvest from their first farm. To work this out we need to flag the relevant rows and then we can simply group by the farmer.

To determine which rows are relevant we’ll need to create some helper columns. The first would be DATEDIF to determine if the harvest day is within the first 30 days of the start of the harvest. The formula I’d use would be:

=DATEDIF(E2,A2,"d")

This would give me the number of days between the start of the harvest and the harvest day. Later I’ll check if this number is smaller or equal to 30.

The next helper column we need is a flag to determine if the row is related to the first farm. We already have such a flag in the "farm_num" column so we don’t need to create this flag.

Lastly we want a "master" flag which takes into account the two variables, "is the first farm", and datediff being smaller or equal to 30. To add this flag I’d use the following formula:

=AND(D2 = 1, F2 <= 30)

The last step in this entire process is to create a pivot table and use our new "is_relevant_row" flag as a filter.
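For readers who prefer code to spreadsheets, the same flag-then-group logic can be sketched in Python. Everything here is illustrative: the rows, dates and key names are invented to mirror the columns the text describes, and the grouping loop stands in for the pivot table.

```python
from datetime import date

# Hypothetical rows: harvest date, farmer, kilograms, farm number, harvest start.
rows = [
    {"date": date(2017, 9, 1), "farmer": "Bob", "kg": 120, "farm_num": 1, "start": date(2017, 9, 1)},
    {"date": date(2017, 10, 15), "farmer": "Bob", "kg": 200, "farm_num": 1, "start": date(2017, 9, 1)},
    {"date": date(2017, 9, 10), "farmer": "Bob", "kg": 90, "farm_num": 2, "start": date(2017, 9, 1)},
    {"date": date(2017, 9, 5), "farmer": "John", "kg": 150, "farm_num": 1, "start": date(2017, 9, 3)},
    {"date": date(2017, 10, 3), "farmer": "John", "kg": 80, "farm_num": 1, "start": date(2017, 9, 3)},
]


def is_relevant_row(row, max_days=30):
    """First farm only, and harvested within max_days of the harvest start."""
    days_since_start = (row["date"] - row["start"]).days  # like DATEDIF(..., "d")
    return row["farm_num"] == 1 and days_since_start <= max_days  # like AND(...)


# Group the relevant rows by farmer, as the pivot table would.
totals = {}
for row in rows:
    if is_relevant_row(row):
        totals[row["farmer"]] = totals.get(row["farmer"], 0) + row["kg"]

print(totals)  # {'Bob': 120, 'John': 230}
```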

The concept is the same no matter which data set you’re working with. You want to work out the variables which are relevant and then make sure you manipulate your calculations to take the differences into account.

How to normalize data in Tableau?

Normalizing data in Tableau is very similar to how you’d do it in Excel.

The formulas that you’ll use most often in this kind of work are:

Once you’ve loaded the data set into Tableau create the datediff calculated column using the formula below:

DATEDIFF('day', [Started Harvesting At], [Date])

After creating the datediff helper column go ahead and create the master flag by using the IIF formula.

Final thoughts on normalizing data

I find the goal of normalizing data during an analysis a fun challenge. There is something fun about building logic to limit your sample size in a way that makes everything fair.

Data Normalization: Converting Raw Numbers Into Revenue

Data is at the heart of all business decisions. It surrounds us at every turn. Unfortunately, the information you get directly from data sources is often unstructured, fragmented, and misleading.

You are probably sitting on a pile of dull data that could help you attract leads, improve your ROI, and increase revenue.

Data normalization will turn your raw numbers into actionable insights that drive value.

What is data normalization?

To use some big words, data normalization is the process of organizing data in such a way as to fit it into a specific range or standard forms. It helps analysts acquire new insights, minimize data redundancy, get rid of duplicates, and make data easily digestible for further analysis.

However, such wording might be complicated and confusing, so let’s boil it down to a simple and illustrative example.

Imagine you’re a gardener harvesting apples. This year, you’ve managed to harvest 500 apples from 20 trees. However, your neighbor boasts of gathering 1,000 apples and calls you an awful gardener.

If you compare your 500 apples to your neighbor’s 1,000 apples, it might seem that you are not a very skillful gardener. But your neighbor never told you that he planted a hundred apple trees to achieve such a yield.

If you normalize the data, it is revealed that your neighbor is in an unpleasant situation. With 500 apples from 20 trees, you harvest 25 apples per tree, while your neighbor gets only 10 apples per tree. So, who’s an awful gardener now?

The analysis can get muddied by lots of unnormalized data, so you can’t see the forest for the trees.

Here’s another example using gun control data. It shows us just how easy it is to fall victim to a cognitive bias without data normalization.

Without normalized insights, it’s almost impossible to build a comprehensive picture and make informed decisions on the researched topic.

Ok, but when do you need to normalize data?

Users experience different problems as a result of heterogeneous data (data with high variability of data types and formats). There are other use cases when you need to normalize data. Let’s consider this issue from the perspective of marketing analysts.

First and foremost is the unification of naming conventions. For example, while gathering data from tens of marketing channels, analysts often encounter the same metrics under different names. As a result, analysts face difficulties when mapping data.

For example, here’s how one Reddit user explains their problems with disparate data.

Another challenge is consolidating disparate data, such as currencies or timezones, into a single source of truth. You simply can’t build an insightful dashboard while your ad spend is divided into dollars, euros, pounds, and a few other currencies.

Here’s how another marketer describes this problem on Reddit.

So, when is it the right time to normalize your data?

Terms that are often confused: data normalization vs. standardization

In data transformation, standardization and normalization are two different terms that are often confused.

Normalization rescales the values of a data set to make them fall into a range of [0,1]. This process is useful when you need all parameters to be on a positive scale.

Standardization adjusts your data to have a mean of 0 and a standard deviation of 1. It doesn’t have to fall into a specific range and is much less affected by outliers.

This information is more than enough for marketing analysts to stop confusing the terms. However, if you want to dive deeper into this topic, you can find all the differences between the two concepts here.

How to normalize data and get your insights together

At its core, data normalization requires you to create a standard data format for all records in your data set. Your algorithm should store all data in a unified format without regard to the input.

Here are some examples of data normalization:

Getting your data normalized goes hand in hand with database normalization. Let’s get a brief overview of what that is.

Database normalization: a precondition for purified insights

Database normalization is the process of organizing tables and data rows inside a relational database.

The process includes creating and managing relationships between tables. While normalizing databases, analysts and data engineers rely on rules that help protect data and make it more flexible for further analysis.

All rules are declared by database normalization types or so-called “Normal Forms”.

There are seven Normal Forms in total (the first three are the most frequently used):

Let’s go through all of these Normal Forms to learn how they help normalize your data.

First Normal Form (1NF)

The first Normal Form requires a data table to meet the following conditions:

Let’s review an example. Here we have two records from the employee table.

The Department_Name cells contain more than one parameter in a cell. Thus, they violate the first rule of the 1NF.

You have to split this table into two parts to normalize your table and remove repeating groups. The normalized tables will look like this:
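Since the original tables are not reproduced here, the split can be sketched with made-up records. In this hypothetical example the department_name cell holds a comma-separated list, and the code separates it into one table of employees and one table with a single department per row.

```python
# Hypothetical records that violate 1NF: one cell holds several departments.
employees = [
    {"employee_id": 1, "name": "Alice", "department_name": "Sales, Marketing"},
    {"employee_id": 2, "name": "Bob", "department_name": "Engineering"},
]

# Table 1: one row per employee, atomic values only.
employee_table = [
    {"employee_id": e["employee_id"], "name": e["name"]} for e in employees
]

# Table 2: one row per (employee, department) pair.
employee_department = [
    {"employee_id": e["employee_id"], "department_name": dept.strip()}
    for e in employees
    for dept in e["department_name"].split(",")
]

for row in employee_department:
    print(row)
```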

The Second and Third Normal Forms revolve around dependencies between primary key columns and non-key columns.

Second Normal Form (2NF)

The main requirement of the Second Normal Form is that all of the table’s non-key attributes should depend on the whole primary key. In other words, no value in a non-key column should depend on only part of the primary key.

Note: A primary key is a unique column value that helps to identify a database record. It has some restrictions and attributes:

Additionally, the table must already be in 1NF with all partial dependencies removed and placed in a separate table.

At this stage, a composite primary key becomes the most problematic issue.

Note: A composite primary key is a primary key made out of two or more data columns.

Let’s imagine that you have a table that keeps track of the courses that your employees have taken. Different employees may enroll in the same course. That’s why you’ll need a composite key to identify the unique record. The Date column will be the additional parameter for your composite key. Take a look at the example:

However, the Description column is functionally dependent on the Name column. If you alter the course’s name, you should also change the description. So, you’ll need to create a separate table for the course description to comply with 2NF requirements.

That’s how you can give the course description a separate key and get away from using a composite key.

Third Normal Form (3NF)

The third Normal Form requires all the non-key columns in your table to depend on the primary key directly. In other words, no non-key column should be determined by another non-key column.

Here are the main requirements of the 3NF:

The main difference between 2NF and 3NF is that in 3NF, there are no transitive dependencies. A transitive dependency exists when the non-key column depends on another non-key column.

Note: A transitive dependency is an indirect relationship between values within the same table that causes a functional dependency. A functional dependency sets particular constraints between attributes. In a transitive dependency, attribute A determines the value of attribute B, and B in turn determines the value of attribute C, so C depends on A only indirectly.

Take a look at the example below:

Here, employee ID determines the department ID from our previous example, while the department ID determines the department name. Here’s where an indirect dependency between employee ID and department name occurs.

To comply with 3NF requirements, we need to split the table into multiple pieces.

With this structure, all non-key columns depend solely on the primary key.
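One possible shape for the split is sketched below with Python's built-in sqlite3 module. The table names, column names and rows are assumptions made for illustration; the point is only that the department name lives in one place and is reached through the department ID.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE department (
    department_id   INTEGER PRIMARY KEY,
    department_name TEXT NOT NULL
);
CREATE TABLE employee (
    employee_id   INTEGER PRIMARY KEY,
    employee_name TEXT NOT NULL,
    department_id INTEGER NOT NULL REFERENCES department(department_id)
);
INSERT INTO department VALUES (10, 'Sales'), (20, 'Engineering');
INSERT INTO employee VALUES (1, 'Alice', 10), (2, 'Bob', 20), (3, 'Carol', 10);
""")

# Renaming a department touches exactly one row, however many employees it has.
conn.execute("UPDATE department SET department_name = 'Revenue' WHERE department_id = 10")

rows = conn.execute("""
    SELECT e.employee_name, d.department_name
    FROM employee AS e
    JOIN department AS d ON d.department_id = e.department_id
    ORDER BY e.employee_id
""").fetchall()
print(rows)  # [('Alice', 'Revenue'), ('Bob', 'Engineering'), ('Carol', 'Revenue')]
```

Had the department name been stored on every employee row, the rename would have required updating each of those rows and risked leaving some of them inconsistent.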

Even though there are seven Normal Forms, the database is considered to be normalized after complying with 3NF requirements. We’ll do a quick overview of the remaining Normal Forms to cover the topic to the end.

Boyce-Codd Normal Form (BCNF)

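It’s a stricter version of the 3NF. A BCNF table should comply with all 3NF rules, and every determinant (a column or set of columns that other columns depend on) must be a candidate key. Violations typically arise when a table has multiple overlapping candidate keys.

Fourth Normal Form (4NF)

A table is in 4NF if it complies with BCNF and none of its records combine two or more independent multivalued facts about the same entity.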

Fifth Normal Form (5NF)

A table falls into the fifth Normal Form if it complies with 4NF requirements, and it can’t be split into smaller tables without losing data.

Sixth Normal Form (6NF)

The sixth Normal Form is intended to decompose relation variables into irreducible components. It might be important when dealing with temporal variables or other interval data.

That’s all for database normalization 101. Now that you know how everything works, you can better understand the benefits that data normalization brings to your insights.

Why is it important to normalize data?

As we’ve already mentioned, data normalization’s major goal and benefit is to reduce data redundancy and inconsistencies in databases. The less duplication you have, the fewer errors and issues that can occur during data retrieval.

However, there are less obvious benefits that assist data analysts in their workflow.

Data mapping is no longer a time suck

If you’ve ever had to deal with unnormalized data, you know that the process of mapping data from multiple tables into one is pretty tedious.

It requires joining multiple tables, dealing with duplicates, and cleaning many empty data entries.

Of course, you can normalize data manually by writing SQL queries or Python scripts. However, data mapping tools with automated data normalization capabilities will speed up the process.

For example, Oracle Integration Cloud offers data mapping functionalities. After normalizing data in the cloud, the tool then builds metadata for source schemas and creates a one-to-one record for every data object in the target schema.

Analysts working with marketing insights have their own hidden gems. Improvado’s MCDM (Marketing Common Data Model) is a Swiss army knife for marketing and sales data normalization. The tool unifies disparate naming conventions, normalizes your insights, and bridges the gap between data sources and your destination with no manual actions required.

Use data storage more efficiently

With each passing day, companies collect more and more data that take up storage space. Whether you use cloud storage or an on-premises data warehouse, you have to use it effectively.

For example, 100 TB of data stored in AWS S3 will cost you $2,580 per month. What’s more, Amazon will charge you for every query you perform on your data. Tens of gigabytes of redundant data will not only increase your invoice for storage services but also cause you to pay for the analysis of meaningless insights.

Scaling on-premises data warehouses is also expensive, so clearing out unnecessary information can help you reduce operational costs and TCO (Total Cost of Ownership).

Reduce your time-to-insights

Apart from cost reduction, analysts can also boost their analysis productivity.

Executing queries on terabytes of data takes time. While your system processes the query, you can feel free to drink a cup of coffee and discuss politics with your colleagues. But it’s a pity when the query output is pointless because you had some inconsistencies in your data set.

With normalized data, you always get the expected output, without surprises such as "N/A", "NaN", "NULL", etc. Moreover, the system carries out your queries faster when you parse only the relevant data. Who knows, maybe next time you’ll get the output before your coffee machine makes your cappuccino!

Build dashboards that you can trust

Data visualization is the best way to build a comprehensive picture of your analysis efforts. However, dashboards lack value if you build them upon harsh data. The dashboard won’t reflect the true state of affairs if you feed it duplicates.

That’s why data normalization is a top priority if you want to explain complex concepts or performance indicators through the prism of colorful charts and bars.

How to normalize data in different environments

Since data analysts work with different tools, we will explain how to normalize data in the most in-demand environments in today’s market.

How to normalize data in Python

Data scientists and analysts working with Python use several libraries to manipulate data and tidy it up. Here are the most popular among them:

We’ll review some of the functionalities of these libraries that might help you speed up your data normalization process.

Dropping columns in your data set

Raw data often contains excessive or unnecessary categories. For example, you’re working with a data set of marketing metrics that include impressions, CPC, CTR, ROAS, and conversions, but you only need conversions from this table.

If everything besides conversions isn’t important for the analysis, you need to remove excessive columns. Pandas offers an easy way to remove columns from a data set with the drop() function.

First, you have to define the list of columns you want to drop. In our case, it will look like this:

column_drop_list = ['Impressions', 'CPC', 'CTR', 'ROAS']

Then, you need to execute the function:

dataframe_name.drop(column_drop_list, inplace=True, axis=1)

In this line of code, the first parameter is our list of columns. Setting the inplace parameter to True means that Pandas applies the changes directly to your object instead of returning a new one. The axis parameter indicates whether to drop rows or columns from the data frame (0 stands for rows, that is, index labels; 1 stands for columns).

After checking the data set again, you’ll see that all redundant columns have been successfully removed.
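The steps above can be put together in a minimal sketch. The data frame and its values are made up for illustration; only the column names come from the example in the text. This version uses the columns= keyword and returns a new data frame, which is an alternative to inplace=True with axis=1.

```python
import pandas as pd

# Hypothetical sample of marketing metrics; the values are invented.
df = pd.DataFrame({
    "Impressions": [1000, 2500],
    "CPC": [0.45, 0.38],
    "CTR": [0.021, 0.034],
    "ROAS": [3.2, 4.1],
    "Conversions": [21, 85],
})

column_drop_list = ["Impressions", "CPC", "CTR", "ROAS"]

# columns= is equivalent to passing the list with axis=1.
# Without inplace=True, drop() returns a new data frame and leaves df unchanged.
conversions_only = df.drop(columns=column_drop_list)

print(list(conversions_only.columns))
```

Keeping the original data frame untouched makes it easier to check what was removed before you discard it.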

Cleaning up data fields

Another step is to tidy up data fields. It helps to increase data consistency and get data into a standardized format.

The main problem here is that you can’t be sure that the API of a marketing platform will transfer 100% accurate data. You could still encounter misplaced characters or misleading data down the line.

A single marketing campaign can only have one impression count. That’s why we need to separate the valuable numbers from any other characters in the field.

Regular expressions (regex) can help you identify all of the digits inside your dataset. An online regex generator can help you create a regular expression for your needs and test it right away.

Then, with the help of the str.extract() function, we can extract the required data from the data set as columns.

Finally, you might need to convert your column to a numeric type. Extracted values are still strings (the object type in Pandas), so converting them to numbers will simplify further calculations. You can do this with the help of the pd.to_numeric() function.
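Here is a minimal sketch of the extract-then-convert step. The sample values and the pattern (\d+) are assumptions chosen for illustration; real exports may need a different expression.

```python
import pandas as pd

# Hypothetical raw values with stray characters around the digits.
df = pd.DataFrame({"Impressions": ["1200 imps", "approx. 450", "n/a", "#3075"]})

# Capture the first run of digits in each cell; cells with no match become NaN.
# expand=False returns a Series rather than a one-column data frame.
digits = df["Impressions"].str.extract(r"(\d+)", expand=False)

# The extracted values are still strings, so convert them to numbers.
df["Impressions"] = pd.to_numeric(digits)

print(df)
```

Note that this simple pattern stops at the first non-digit, so a value written with a thousands separator, such as "1,200", would need the separator removed first.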

Renaming columns in the data frame

Data sources often transfer columns with names analysts can’t understand. For example, CTR might be called C_T_R_final for some reason.

Another problem is revealed when you merge data from different sources and analyze it as a whole. While the first data source refers to impressions as imps, another one calls it views. This makes it difficult to calculate and build a holistic picture across all data sources.

That’s why you need to rename your columns to get everything structured.

First, create a dictionary with the future names of your columns. Let’s assume that we have impressions from Google Ads and Facebook Ads with different naming conventions. In this case, our dictionary will look the following way:

'Views': 'Facebook Ads Impressions'}

Then, you should use the rename() function on your data frame:

Now, your columns will have names assigned in the dictionary.
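A minimal sketch of the renaming step follows. Only the 'Views' entry comes from the text above; the 'Imps' source name, the 'Google Ads Impressions' target name, and the sample data are assumptions for illustration.

```python
import pandas as pd

# Hypothetical merged export; 'Imps' and 'Views' are assumed source column names.
df = pd.DataFrame({
    "Campaign": ["A", "B"],
    "Imps": [1000, 2500],
    "Views": [800, 1900],
})

# Keys are the current column names, values are the new ones.
new_names = {
    "Imps": "Google Ads Impressions",       # assumed entry
    "Views": "Facebook Ads Impressions",
}

# Columns that are not listed in the dictionary keep their names.
df = df.rename(columns=new_names)

print(list(df.columns))
```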

Pandas has a lot more different functions that can help you normalize data. We recommend reading the official documentation to get a better grasp of other functionalities.

How to normalize data in Excel

Excel and Google Sheets are powerful tools loved by many analysts for their ease of use and broad capabilities. There’s no doubt that programming languages, such as R or Python, have more features to offer, but spreadsheets do a great job of analyzing data.

However, your tables might contain heterogeneous data, and Excel provides a toolset to normalize it.

Trimming extra spaces

Identifying excessive spaces in a large table is a waste of time when done manually. Fortunately, Excel and Google Sheets have a TRIM function that allows analysts to remove extra spaces in a data set with just one function. Take a look at the example below.

As you can see, the entry data has large whitespace between the words. With the TRIM function, data is put into the right format.
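For comparison, if the same data ends up in Python, a rough equivalent of TRIM can be sketched with Pandas string methods. The sample names are made up, and the sketch only handles ordinary space characters.

```python
import pandas as pd

# Hypothetical column with leading, trailing, and repeated inner spaces.
names = pd.Series(["  John    Smith ", "Anna   Lee", "Bob Stone"])

# Strip spaces at both ends, then collapse runs of spaces into a single space.
trimmed = names.str.strip(" ").str.replace(r" +", " ", regex=True)

print(trimmed.tolist())
```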

Removing empty data rows in the data set

Empty cells can escalate into a true nightmare during the analysis. That’s why you should always deal with them beforehand. Here’s how to do that.

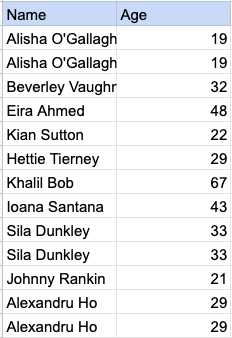

Removing duplicates

Duplicate data entries are a common problem for analysts working in Excel or Google Sheets. That’s why these tools have a dedicated feature to remove duplicates in a fast and easy way.

Google Sheets has a UNIQUE function that allows you to keep only unique data in your table.

Suppose you have this simple table with Name and Age columns that contain multiple duplicates.

You can get a clean table without any duplicate entries by feeding your data set to the UNIQUE function.
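Outside a spreadsheet, the same idea can be sketched in Pandas with drop_duplicates(). The Name and Age values below are invented for illustration.

```python
import pandas as pd

# Hypothetical table with repeated rows.
df = pd.DataFrame({
    "Name": ["Ann", "Bob", "Ann", "Cid", "Bob"],
    "Age": [31, 45, 31, 28, 45],
})

# Keep the first occurrence of each fully identical row, then renumber the index.
unique_rows = df.drop_duplicates().reset_index(drop=True)

print(unique_rows)
```

By default a row counts as a duplicate only when every column matches; the subset parameter narrows the comparison to chosen columns.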

Text case normalization

After importing data from text files, you will often find inconsistent text cases in names or titles. You can easily fix your data in Excel or Google Sheets by using the following features:

Depending on your particular use case, there are many ways to normalize Excel or Google Sheets data. These guides shed more light on Excel data normalization:

Automated data normalization tools

Programming languages offer a broad toolset to normalize your data. However, manual data normalization has its limitations.

First of all, analysts need strong engineering knowledge and hands-on experience with the required libraries. Data scientists and engineers are in high demand, and their salaries are often steep.

Moreover, coding takes time, and it’s often prone to mistakes. So, a follow-up review of the analyzed dataset is a must-have. Eventually, the analysis process may take a lot more time than intended.

Automated tools save analysts’ time and reduce manual errors. You can stream your data into the normalization tool and get clean, analysis-ready data in minutes, not days.

Let’s consider the example of Improvado. Improvado is a revenue ETL platform that helps marketing analysts and salespeople align their disparate data and store insights in one place.

The platform gathers data from 300+ sources and helps analysts to normalize them with zero effort. Today’s marketing and sales tools market is fragmented, and different platforms use different naming conventions for similar metrics.

Improvado’s Marketing Common Data Model (MCDM) is a unified data model that provides automated cross-channel mapping, deduping, and UNIONing of popular data sources. Besides, it stitches and standardizes paid media sources together, automatically transferring analysis-ready insights to your data warehouse.

For example, Improvado can automatically merge Google Ads and Bing data into one table.

Furthermore, the platform can automatically parse data and convert it to a suitable format. For example, here’s how Improvado splits Date into Day of Week, Month, and Year columns.

Improvado goes even further, allowing analysts to automatically parse the Adobe Analytics tracking codes on their website. They can extract the embedded AdGroup ID from the tracking code without manual manipulations.

Then, analysts can combine internal spreadsheets with classifications and match them based on the network ID.

Eventually, analysts get all of the tables matched by AdGroup ID and combined into the final results table.

That was just one of many use cases where Improvado can normalize data and deliver insights in a digestible way for further research.

With all insights in one place, the platform can stream them to any visualization tool of your choice. Clean, structured data makes building a comprehensive cross-channel dashboard much easier. For example, here’s a Data Studio dashboard based on Improvado’s insights:

Normalize marketing and sales data with Improvado

Data normalization takes time, but clear insights are always worth the effort. Why waste your time on data normalization if you can dive straight into analysis and dramatically cut your time-to-insight?

Improvado untangles your web of revenue data, reduces the time spent on manual data manipulations, and ensures the highest granularity of insights. With this ETL system, you can analyze trustworthy data and build real-time dashboards that demonstrate the effectiveness of your marketing dollars. Schedule a call to learn more.

Learn how a revenue ETL platform can help you exceed your marketing goals and save your analysts’ time.