How to parse HTML in Python

Web Scraping and Parsing HTML in Python with Beautiful Soup

The internet has an amazingly wide variety of information for human consumption. But this data is often difficult to access programmatically if it doesn’t come in the form of a dedicated REST API. With Python tools like Beautiful Soup, you can scrape and parse this data directly from web pages to use for your projects and applications.

Let’s use the example of scraping MIDI data from the internet to train a neural network with Magenta that can generate classic Nintendo-sounding music. In order to do this, we’ll need a set of MIDI music from old Nintendo games. Using Beautiful Soup we can get this data from the Video Game Music Archive.

Getting started and setting up dependencies

Before moving on, you will need to make sure you have an up-to-date version of Python 3 and pip installed. Make sure you create and activate a virtual environment before installing any dependencies.

You’ll need to install the Requests library for making HTTP requests to get data from the web page, and Beautiful Soup for parsing through the HTML.

With your virtual environment activated, run the following command in your terminal: pip install requests beautifulsoup4

We’re using Beautiful Soup 4 because it’s the latest version and Beautiful Soup 3 is no longer being developed or supported.

Using Requests to scrape data for Beautiful Soup to parse

First let’s write some code to grab the HTML from the web page, and look at how we can start parsing through it. The following code will send a GET request to the web page we want, and create a BeautifulSoup object with the HTML from that page:
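A minimal sketch of that step. The original snippet is not reproduced here, so the archive URL below is an assumption (check it in your browser), and the timeout and status check are additions the text does not mention:

```python
import requests
from bs4 import BeautifulSoup

# Assumption: the Video Game Music Archive's NES listing page.
VGM_URL = "https://www.vgmusic.com/music/console/nintendo/nes/"


def get_soup(url: str) -> BeautifulSoup:
    """Send a GET request to url and parse the returned HTML."""
    response = requests.get(url, timeout=10)
    # Raise on 4xx/5xx so we never parse an error page by accident.
    response.raise_for_status()
    # "html.parser" ships with Python, so no extra parser needs installing.
    return BeautifulSoup(response.text, "html.parser")


# Usage (needs network access):
# soup = get_soup(VGM_URL)
# print(soup.title)
```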

Getting familiar with Beautiful Soup

The find() and find_all() methods are among the most powerful weapons in your arsenal. soup.find() is great for cases where you know there is only one element you’re looking for, such as the body tag. On this page, soup.find(id='banner_ad').text will get you the text from the HTML element for the banner advertisement.

soup.find_all() is the most common method you will be using in your web scraping adventures. Using this you can iterate through all of the hyperlinks on the page and print their URLs:
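Both methods can be sketched against a small piece of hypothetical markup (the real page’s ids and links will differ):

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for the real page.
html = """<body>
<div id="banner_ad">Buy more cartridges!</div>
<a href="mario.mid">Super Mario Bros.</a>
<a href="zelda.mid">The Legend of Zelda</a>
</body>"""
soup = BeautifulSoup(html, "html.parser")

# find() returns the first match (or None), handy for unique elements.
banner_text = soup.find(id="banner_ad").text
print(banner_text)

# find_all() returns every match; print each hyperlink's URL.
for link in soup.find_all("a"):
    print(link.get("href"))
```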

Parsing and navigating HTML with BeautifulSoup

Before writing more code to parse the content that we want, let’s first take a look at the HTML that’s rendered by the browser. Every web page is different, and sometimes getting the right data out of them requires a bit of creativity, pattern recognition, and experimentation.

Our goal is to download a bunch of MIDI files, but there are a lot of duplicate tracks on this webpage as well as remixes of songs. We only want one of each song, and because we ultimately want to use this data to train a neural network to generate accurate Nintendo music, we don’t want to train it on user-created remixes.

When you’re writing code to parse through a web page, it’s usually helpful to use the developer tools available to you in most modern browsers. If you right-click on the element you’re interested in, you can inspect the HTML behind that element to figure out how you can programmatically access the data you want.

Let’s use the find_all method to go through all of the links on the page, but use regular expressions to filter through them so we are only getting links that contain MIDI files whose text has no parentheses, which will allow us to exclude all of the duplicates and remixes.

Create a file called nes_midi_scraper.py and add the following code to it:
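A sketch of what nes_midi_scraper.py could contain, assuming MIDI links are a tags whose href ends in .mid and that remixes and duplicates carry parentheses in their link text, as described above. The filter_tracks() helper and the sample markup are hypothetical; in the real script, pass in the soup of the downloaded page instead:

```python
import re

from bs4 import BeautifulSoup

# The href must end in ".mid" for the link to point at a MIDI file.
MIDI_HREF = re.compile(r"\.mid$")
# The link text must contain no "(" or ")"; remixes and duplicates are labeled that way.
NO_PARENS = re.compile(r"^[^()]*$")


def filter_tracks(soup):
    """Return the <a> tags for MIDI files whose link text has no parentheses."""
    return soup.find_all("a", attrs={"href": MIDI_HREF}, string=NO_PARENS)


# Hypothetical sample standing in for the downloaded page.
sample = """
<a href="smb.mid">Super Mario Bros. - Overworld</a>
<a href="smb2.mid">Super Mario Bros. - Overworld (Remix)</a>
<a href="index.html">Back to index</a>
"""
tracks = filter_tracks(BeautifulSoup(sample, "html.parser"))
for track in tracks:
    print(track)
print(len(tracks))
```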

This will filter through all of the MIDI files that we want on the page, print out the link tag corresponding to them, and then print how many files we filtered.

Downloading the MIDI files we want from the webpage

Now that we have working code to iterate through every MIDI file that we want, we have to write code to download all of them.

In this download_track function, we’re passing the Beautiful Soup object representing the HTML element of the link to the MIDI file, along with a unique number to use in the filename to avoid possible naming collisions.
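A sketch of such a function. The base URL used to resolve relative links is an assumption, and track_filename() is a hypothetical helper split out so the naming rule is easy to test:

```python
from pathlib import Path
from urllib.parse import urljoin

import requests

# Assumption: relative MIDI hrefs are resolved against the NES listing page.
BASE_URL = "https://www.vgmusic.com/music/console/nintendo/nes/"


def track_filename(track_element, number):
    """Build a file name from the link text plus a unique number."""
    # A "/" in a title would be treated as a directory separator.
    title = track_element.get_text(strip=True).replace("/", "-")
    return f"{title}_{number}.mid"


def download_track(track_element, number, base_url=BASE_URL, out_dir="."):
    """Download the MIDI file the <a> tag points at and save it to out_dir."""
    url = urljoin(base_url, track_element["href"])
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    path = Path(out_dir) / track_filename(track_element, number)
    path.write_bytes(response.content)  # MIDI is binary, so write raw bytes
    print(f"Downloaded: {path.name}")
    return path


# Usage, given a list of filtered <a> tags named tracks:
# for number, track in enumerate(tracks):
#     download_track(track, number)
```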

Run this code from a directory where you want to save all of the MIDI files, and watch your terminal screen display all 2230 MIDIs that you downloaded (at the time of writing this). This is just one specific practical example of what you can do with Beautiful Soup.

The vast expanse of the World Wide Web

Now that you can programmatically grab things from web pages, you have access to a huge source of data for whatever your projects need. One thing to keep in mind is that changes to a web page’s HTML might break your code, so make sure to keep everything up to date if you’re building applications on top of this.

If you’re looking for something to do with the data you just grabbed from the Video Game Music Archive, you can try using Python libraries like Mido to work with the MIDI data and clean it up, use Magenta to train a neural network with it, or have fun building a phone number people can call to hear Nintendo music.

I’m looking forward to seeing what you build. Feel free to reach out and share your experiences or ask any questions.

Guide to Parsing HTML with BeautifulSoup in Python

Introduction

Web scraping is programmatically collecting information from various websites. While there are many libraries and frameworks in various languages that can extract web data, Python has long been a popular choice because of its plethora of options for web scraping.

Ethical Web Scraping

Web scraping is ubiquitous and gives us data as we would get with an API. However, as good citizens of the internet, it’s our responsibility to respect the site owners we scrape from. Here are some principles that a web scraper should adhere to:

An Overview of Beautiful Soup

The HTML content of the webpages can be parsed and scraped with Beautiful Soup. In the following section, we will be covering those functions that are useful for scraping webpages.

What makes Beautiful Soup so useful is the myriad functions it provides to extract data from HTML. The image below illustrates some of the functions we can use:

Let’s get hands-on and see how we can parse HTML with Beautiful Soup. Consider the following HTML page saved to file as doc.html:

To prepare doc.html for parsing, we pass the file to the BeautifulSoup constructor. Let’s use the interactive Python shell for this so we can instantly print the contents of a specific part of the page:
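A sketch of this step. The article’s own doc.html is not reproduced here, so DOC_HTML below is a hypothetical stand-in with a title, a "title"-class paragraph, and three links:

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for doc.html.
DOC_HTML = """<!DOCTYPE html>
<html>
<head><title>Head's title</title></head>
<body>
<p class="title"><b>Body's title</b></p>
<p class="story">Line begins
<a href="http://example.com/element1" class="element" id="link1">1</a>
<a href="http://example.com/element2" class="element" id="link2">2</a>
<a href="http://example.com/avatar1" class="avatar" id="link3">3</a>
</p>
<p>line ends</p>
</body>
</html>"""

# Write the sample to disk so the example mirrors reading a real file.
with open("doc.html", "w", encoding="utf-8") as f:
    f.write(DOC_HTML)

# The constructor accepts an open file handle directly.
with open("doc.html", encoding="utf-8") as f:
    soup = BeautifulSoup(f, "html.parser")

print(soup.prettify())
```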

Now we can use Beautiful Soup to navigate our website and extract data.

Navigating to Specific Tags

From the soup object created in the previous section, let’s get the title tag of doc.html:

Here’s a breakdown of each component we used to get the title:

Beautiful Soup is powerful because our Python objects match the nested structure of the HTML document we are scraping.

To get the text of the first tag, enter this:

To get the title within the HTML’s body tag (denoted by the "title" class), type the following in your terminal:
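Navigation by attribute access can be sketched on a trimmed, hypothetical version of doc.html. Each dotted step returns the first matching descendant:

```python
from bs4 import BeautifulSoup

# Trimmed, hypothetical stand-in for doc.html.
html = """<html><head><title>Head's title</title></head>
<body><p class="title"><b>Body's title</b></p>
<p class="story"><a href="http://example.com/element1" class="element">1</a></p></body></html>"""
soup = BeautifulSoup(html, "html.parser")

print(soup.head.title)          # <title>Head's title</title>
print(soup.head.title.string)   # Head's title
print(soup.body.a.text)         # 1 (text of the first <a> in <body>)
print(soup.body.p.b)            # <b>Body's title</b>
```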

For deeply nested HTML documents, navigation could quickly become tedious. Luckily, Beautiful Soup comes with a search function so we don’t have to navigate to retrieve HTML elements.

Searching the Elements of Tags

The find_all() method takes a tag name as a string argument and returns a list of all the elements that match it. For example, if we want all a tags in doc.html:

We’ll see this list of a tags as output:

Here’s a breakdown of each component we used to search for a tag:

We can search for tags of a specific class as well by providing the class_ argument. Beautiful Soup uses class_ because class is a reserved keyword in Python. Let’s search for all a tags that have the "element" class:

As we only have two links with the "element" class, you’ll see this output:
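Both searches can be sketched against the links of a hypothetical stand-in for doc.html:

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the links in doc.html.
html = """<p class="story">
<a href="http://example.com/element1" class="element" id="link1">1</a>
<a href="http://example.com/element2" class="element" id="link2">2</a>
<a href="http://example.com/avatar1" class="avatar" id="link3">3</a>
</p>"""
soup = BeautifulSoup(html, "html.parser")

all_links = soup.find_all("a")                         # every <a>, in document order
element_links = soup.find_all("a", class_="element")   # class_ because class is a keyword

print(len(all_links), len(element_links))  # 3 2
for link in element_links:
    print(link["id"], link.get_text())
```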

What if we wanted to fetch the links embedded inside the a tags? Let’s retrieve a link’s href attribute using the find() method. It works just like find_all(), but it returns the first matching element instead of a list. Type this in your shell:
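A short sketch of reading attributes from find() results, again on hypothetical markup. Indexing a tag raises KeyError for a missing attribute, while .get() returns None, and find() itself returns None when nothing matches:

```python
from bs4 import BeautifulSoup

html = """<p class="story">
<a href="http://example.com/element1" class="element" id="link1">1</a>
<a href="http://example.com/avatar1" class="avatar" id="link3">3</a>
</p>"""
soup = BeautifulSoup(html, "html.parser")

first_link = soup.find("a")          # the first <a> only, not a list
print(first_link["href"])            # KeyError if the attribute were missing
avatar_href = soup.find("a", class_="avatar").get("href")  # None if missing
print(avatar_href)

# find() returns None when nothing matches, so check before indexing.
missing = soup.find("table")
print(missing is None)
```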

The find() and find_all() functions also accept a regular expression instead of a string. Behind the scenes, the text will be filtered using the compiled regular expression’s search() method. For example:

Iterating over the returned list yields every tag whose name starts with the character b:
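A sketch on a small hypothetical document (not the article’s exact doc.html). When a compiled pattern is passed as the first argument, it is matched against each tag’s name:

```python
import re

from bs4 import BeautifulSoup

html = "<html><body><p class='title'><b>Body's title</b></p></body></html>"
soup = BeautifulSoup(html, "html.parser")

# "^b" selects tag names beginning with "b", in document order.
names = [tag.name for tag in soup.find_all(re.compile("^b"))]
print(names)  # ['body', 'b']
```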


We’ve covered the most popular ways to get tags and their attributes. Sometimes, especially for less dynamic web pages, we just want the text. Let’s see how we can get it!

Getting the Whole Text

The get_text() function retrieves all the text from the HTML document. Let’s get all the text of the HTML document:

Your output should be like this:

Sometimes the newline characters are printed, so your output may look like this as well:
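The newlines come from whitespace between tags in the source file. A sketch on hypothetical markup with no such whitespace, plus the separator and strip arguments that give tidier output:

```python
from bs4 import BeautifulSoup

html = ("<html><head><title>Head's title</title></head>"
        "<body><p><b>Body's title</b></p><p>Line ends</p></body></html>")
soup = BeautifulSoup(html, "html.parser")

# All text nodes concatenated, with nothing inserted between them.
plain = soup.get_text()
# One stripped text node per line.
tidy = soup.get_text(separator="\n", strip=True)
print(plain)
print(tidy)
```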

Now that we have a feel for how to use Beautiful Soup, let’s scrape a website!

Now that we have mastered the components of Beautiful Soup, it’s time to put our learning to use. Let’s build a scraper to extract data from https://books.toscrape.com/ and save it to a CSV file. The site contains random data about books and is a great space to test out your web scraping techniques.

In the modules mentioned above:

Before you begin, you need to understand how the webpage’s HTML is structured. In your browser, let’s go to http://books.toscrape.com/catalogue/page-1.html. Then right-click on the components of the webpage to be scraped, and click on the inspect button to understand the hierarchy of the tags as shown below.

This will show you the underlying HTML for what you’re inspecting. The following picture illustrates these steps:

From inspecting the HTML, we learn how to access the book’s URL, cover image, title, rating, price, and other fields. Let’s write a function that scrapes a book item and extracts its data:
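A sketch of such a function. It assumes the catalogue wraps each book in an article tag with class product_pod and encodes the star rating as a class word such as "star-rating Three". Confirm these in the inspector, since markup can change. The write_rows callback is a hypothetical hook for the CSV writer:

```python
from urllib.parse import urljoin

# The rating is a class word, e.g. class="star-rating Three".
RATINGS = {"One": "1", "Two": "2", "Three": "3", "Four": "4", "Five": "5"}


def scrape_books(page_url, soup, write_rows):
    """Extract one row of strings per book on a catalogue page."""
    rows = []
    for book in soup.find_all("article", class_="product_pod"):
        link = book.h3.a
        # hrefs are relative, so resolve them against the page's own URL.
        book_url = urljoin(page_url, link["href"])
        image_url = urljoin(page_url, book.find("img")["src"])
        # The title attribute holds the full title; the link text may be cut short.
        title = link["title"]
        star_classes = book.find("p", class_="star-rating")["class"]
        rating = next((RATINGS[c] for c in star_classes if c in RATINGS), "")
        price = book.find("p", class_="price_color").get_text(strip=True)
        rows.append([book_url, image_url, title, rating, price])
    # Hand the rows to a CSV-writing function supplied by the caller.
    write_rows(rows)
```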

The last line of the above snippet points to a function to write the list of scraped strings to a CSV file. Let’s add that function now:
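A sketch of a CSV writer for those rows. The write_to_csv name and the books.csv default are hypothetical. It appends, because it is called once per catalogue page:

```python
import csv


def write_to_csv(rows, path="books.csv"):
    """Append rows (lists of strings) to a CSV file, one book per line."""
    # newline="" stops the csv module from writing blank lines on Windows.
    with open(path, "a", newline="", encoding="utf-8") as f:
        csv.writer(f).writerows(rows)
```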

As we have a function that can scrape a page and export to CSV, we want another function that crawls through the paginated website, collecting book data on each page.

To do this, let’s look at the URL we are writing this scraper for: http://books.toscrape.com/catalogue/page-1.html

The only varying element in the URL is the page number. We can format the URL dynamically so it becomes a seed URL:

For the final piece of the puzzle, we initiate the scraping flow. We define the seed_url and call browse_and_scrape() to get the data. This is done under the if __name__ == "__main__" block:
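A sketch of the seed URL and the crawl loop. It assumes every catalogue page except the last has an li tag with class next, and that a non-200 status means we ran past the end. The handle_page callback and the max_pages guard are hypothetical additions. The main-block call is shown as a comment so importing the module does not start a crawl:

```python
import requests
from bs4 import BeautifulSoup

# Only the page number changes between catalogue URLs, so it becomes a placeholder.
SEED_URL = "https://books.toscrape.com/catalogue/page-{}.html"


def browse_and_scrape(seed_url, handle_page, page_number=1, max_pages=100):
    """Visit catalogue pages in order, passing (url, soup) to handle_page.

    Returns the number of the last page that was processed.
    """
    last_page = None
    while page_number <= max_pages:
        url = seed_url.format(page_number)
        response = requests.get(url, timeout=10)
        if response.status_code != 200:
            break  # past the last page (or an error): stop crawling
        soup = BeautifulSoup(response.text, "html.parser")
        handle_page(url, soup)
        last_page = page_number
        # Every page except the final one has an <li class="next"> pager link.
        if soup.find("li", class_="next") is None:
            break
        page_number += 1
    return last_page


# if __name__ == "__main__":
#     browse_and_scrape(SEED_URL, lambda url, soup: print(url))
```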

If you’d like to learn more about the if __name__ == "__main__" block, check out our guide on how it works.

Execute the script in your terminal as shown below, and you should get output like this:

Good job! If you wanted to have a look at the scraper code as a whole, you can find it on GitHub.

Conclusion

Web scraping is a useful skill that helps in various activities such as extracting data like an API, performing QA on a website, checking for broken URLs on a website, and more. What’s the next scraper you’re going to build?

html.parser: Simple HTML and XHTML parser

This module defines a class HTMLParser which serves as the basis for parsing text files formatted in HTML (HyperText Mark-up Language) and XHTML.

class html.parser.HTMLParser(*, convert_charrefs=True)

Create a parser instance able to parse invalid markup.

If convert_charrefs is True (the default), all character references (except the ones in script / style elements) are automatically converted to the corresponding Unicode characters.

An HTMLParser instance is fed HTML data and calls handler methods when start tags, end tags, text, comments, and other markup elements are encountered. The user should subclass HTMLParser and override its methods to implement the desired behavior.

This parser does not check that end tags match start tags or call the end-tag handler for elements which are closed implicitly by closing an outer element.

Changed in version 3.4: convert_charrefs keyword argument added.

Example HTML Parser Application

As a basic example, below is a simple HTML parser that uses the HTMLParser class to print out start tags, end tags, and data as they are encountered:
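Here is a sketch in that spirit (not the documentation’s own listing): a subclass that records each start tag, end tag, and piece of data in a list and then prints them, which makes the order of the callbacks easy to check. The EventRecorder name is hypothetical:

```python
from html.parser import HTMLParser


class EventRecorder(HTMLParser):
    """Collect (event, value) pairs instead of printing them immediately."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("start", tag))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))

    def handle_data(self, data):
        self.events.append(("data", data))


parser = EventRecorder()
parser.feed("<html><head><title>Test</title></head>"
            "<body><h1>Parse me!</h1></body></html>")
parser.close()
for event in parser.events:
    print(event)
```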

The output will then be:

HTMLParser Methods

HTMLParser instances have the following methods:

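HTMLParser.feed(data)

Feed some text to the parser. It is processed insofar as it consists of complete elements; incomplete data is buffered until more data is fed or close() is called.

HTMLParser.reset()

Reset the instance. Loses all unprocessed data. This is called implicitly at instantiation time.

HTMLParser.getpos()

Return current line number and offset.

HTMLParser.get_starttag_text()

Return the text of the most recently opened start tag. This should not normally be needed for structured processing, but may be useful in dealing with HTML “as deployed” or for re-generating input with minimal changes (whitespace between attributes can be preserved, etc.).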

The following methods are called when data or markup elements are encountered and they are meant to be overridden in a subclass. The base class implementations do nothing (except for handle_startendtag() ):

HTMLParser.handle_starttag(tag, attrs)

This method is called to handle the start tag of an element (e.g. <div id="main">).

The tag argument is the name of the tag converted to lower case. The attrs argument is a list of (name, value) pairs containing the attributes found inside the tag’s <> brackets. The name will be translated to lower case, and quotes in the value have been removed, and character and entity references have been replaced.

All entity references from html.entities are replaced in the attribute values.

HTMLParser.handle_endtag(tag)

This method is called to handle the end tag of an element (e.g. </div>).

The tag argument is the name of the tag converted to lower case.

HTMLParser.handle_data(data)

This method is called to process arbitrary data (e.g. text nodes and the content of <script>...</script> and <style>...</style>).

HTMLParser.handle_entityref(name)

HTMLParser.handle_charref(name)

HTMLParser.handle_comment(data)

This method is called when a comment is encountered (e.g. <!--comment-->).

HTMLParser.handle_decl(decl)

This method is called to handle an HTML doctype declaration (e.g. <!DOCTYPE html>).

The decl parameter will be the entire contents of the declaration inside the <!...> markup (e.g. 'DOCTYPE html').

HTMLParser.handle_pi(data)

The HTMLParser class uses the SGML syntactic rules for processing instructions. An XHTML processing instruction using the trailing ‘?’ will cause the ‘?’ to be included in data.

HTMLParser.unknown_decl(data)

This method is called when an unrecognized declaration is read by the parser.

The data parameter will be the entire contents of the declaration inside the <![...]> markup. It is sometimes useful to be overridden by a derived class. The base class implementation does nothing.

Examples

The following class implements a parser that will be used to illustrate more examples:
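A sketch of such a class that collects callbacks as tuples instead of printing them (the RecordingParser name is hypothetical). The two character-reference handlers only run when convert_charrefs=False; with the default, references are converted inside the text passed to handle_data():

```python
from html.entities import name2codepoint
from html.parser import HTMLParser


class RecordingParser(HTMLParser):
    """Record every callback as a tuple for later inspection."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("start", tag, attrs))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))

    def handle_data(self, data):
        self.events.append(("data", data))

    def handle_comment(self, data):
        self.events.append(("comment", data))

    def handle_decl(self, decl):
        self.events.append(("decl", decl))

    def handle_entityref(self, name):
        self.events.append(("entity", chr(name2codepoint[name])))

    def handle_charref(self, name):
        # Hex references arrive as "x3E", decimal ones as "62".
        code = int(name[1:], 16) if name.lower().startswith("x") else int(name)
        self.events.append(("charref", chr(code)))


parser = RecordingParser()
parser.feed("<!DOCTYPE html>")
parser.feed('<img src="python-logo.png" alt="The Python logo">')
print(parser.events)

refs = RecordingParser(convert_charrefs=False)
refs.feed("&gt;&#62;&#x3E;")
print(refs.events)
```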

Parsing a doctype:

Parsing an element with a few attributes and a title:

The content of script and style elements is returned as is, without further parsing:

Parsing named and numeric character references and converting them to the correct char (note: these 3 references are all equivalent to '>'):

Feeding incomplete chunks to feed() works, but handle_data() might be called more than once (unless convert_charrefs is set to True):
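Because one text node may arrive in several handle_data() calls, a robust pattern is to collect the pieces and join them. A sketch (TextCollector is a hypothetical name) feeding the text in fragments:

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Store every string passed to handle_data, one list entry per call."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.pieces = []

    def handle_data(self, data):
        self.pieces.append(data)


parser = TextCollector()
for chunk in ["<sp", "an>buff", "ered ", "text</s", "pan>"]:
    parser.feed(chunk)
parser.close()

# Join the pieces rather than assuming the text arrives in one call.
text = "".join(parser.pieces)
print(parser.pieces)
print(text)
```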

Web Scraping with Python

Introduction

Recently, while browsing KinoPoisk, I discovered that over the years I had left more than 1000 ratings, and I thought it would be interesting to explore this data in more detail: have my tastes in film changed over time? Is there yearly or weekly seasonality in my activity? Do my ratings correlate with the ratings from KinoPoisk, IMDb, or film critics?

But before analyzing the data and building pretty charts, we need to get it. Unfortunately, many services (KinoPoisk included) have no public API, so we have to roll up our sleeves and parse HTML pages. That is exactly what I want to cover in this article: how to download and parse a website.

The article is aimed primarily at those who have always wanted to get to grips with web scraping but never got around to it or didn’t know where to start.

Off-topic: by the way, the new KinoPoisk uses requests under the hood that return rating data as JSON, so the task could also have been solved another way.

Task

Tools

Downloading the data

First attempt

Let’s start downloading the data. To begin, we’ll simply try to fetch the page by URL and save it to a local file.
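A minimal sketch of this first attempt. The article’s exact ratings URL is not shown, so RATINGS_URL is a hypothetical address and USER_ID a placeholder:

```python
import requests

# Hypothetical address; USER_ID stands for your own profile id.
RATINGS_URL = "https://www.kinopoisk.ru/user/USER_ID/votes/"


def save_page(url, path, headers=None):
    """Download url and save the HTML to path so it can be opened and inspected."""
    response = requests.get(url, headers=headers, timeout=10)
    with open(path, "w", encoding="utf-8") as f:
        f.write(response.text)
    return response.status_code


# Usage (needs network access):
# save_page(RATINGS_URL, "ratings_page_1.html")
```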

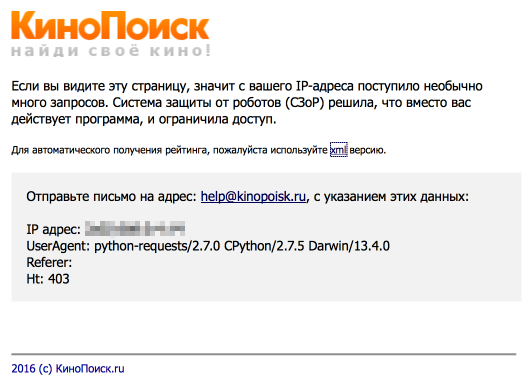

We open the resulting file and see that it isn’t that simple: the site has recognized us as a robot and is in no hurry to show the data.

Let’s figure out how the browser does it

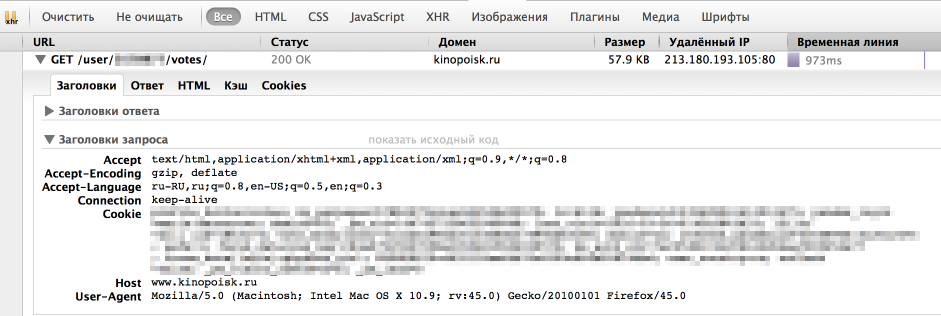

However, the browser manages to get information from the site just fine. Let’s see exactly how it sends the request. To do this, we’ll use the “Network” panel in the browser’s “Developer Tools” (I use Firebug for this); usually the request we need is the one that takes the longest.

As we can see, the browser also sends the User-Agent, cookies, and a number of other parameters in the headers. To start, let’s try simply passing a valid User-Agent in the header.

This time everything worked, and we now get the data we need. It’s worth noting that sometimes the site also checks that cookies are valid; in that case, sessions in the Requests library will help.
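A sketch combining both ideas: a browser-like User-Agent header and a requests.Session, which keeps cookies between requests. The User-Agent string is only an illustrative example; copy your own from the Network panel:

```python
import requests

# Illustrative browser string; replace it with the one your browser sends.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    ),
}


def make_session():
    """Return a Session that sends HEADERS and keeps cookies between requests."""
    session = requests.Session()
    session.headers.update(HEADERS)
    return session


# One-off request with the header:  requests.get(url, headers=HEADERS, timeout=10)
# Or, with cookies kept across calls (needs network access):
# session = make_session()
# response = session.get("https://www.kinopoisk.ru/", timeout=10)
```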

Let’s download all the ratings
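A sketch of downloading every page, assuming the ratings are split across numbered pages. PAGE_URL is a hypothetical pattern, and session can be any requests.Session (for example one configured with a browser User-Agent):

```python
import os
import time

# Hypothetical URL pattern; USER_ID stands for your own profile id.
PAGE_URL = "https://www.kinopoisk.ru/user/USER_ID/votes/list/page/{}/"


def download_all_pages(session, last_page, url_template=PAGE_URL, out_dir=".", delay=1.0):
    """Save pages 1..last_page as page_<n>.html and return the file paths."""
    paths = []
    for page in range(1, last_page + 1):
        response = session.get(url_template.format(page), timeout=10)
        response.raise_for_status()
        path = os.path.join(out_dir, f"page_{page}.html")
        with open(path, "w", encoding="utf-8") as f:
            f.write(response.text)
        paths.append(path)
        time.sleep(delay)  # pause between requests to avoid hammering the site
    return paths
```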

Parsing

A bit about XPath

XPath is a query language for XML and XHTML documents. We will use XPath selectors when working with the lxml library (documentation). Let’s look at a small example of working with XPath.
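A small XPath sketch with lxml on hypothetical markup, showing how to select text nodes and attribute values:

```python
from lxml import html

doc = html.fromstring(
    '<ul id="films">'
    '<li><a href="/film/1/">First film</a></li>'
    '<li><a href="/film/2/">Second film</a></li>'
    "</ul>"
)

# // searches at any depth, [@id="films"] filters on an attribute,
# and text() / @href select the text node and the attribute value.
titles = doc.xpath('//ul[@id="films"]/li/a/text()')
links = doc.xpath('//ul[@id="films"]/li/a/@href')
print(titles)
print(links)
```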

You can read more about XPath syntax on W3Schools.

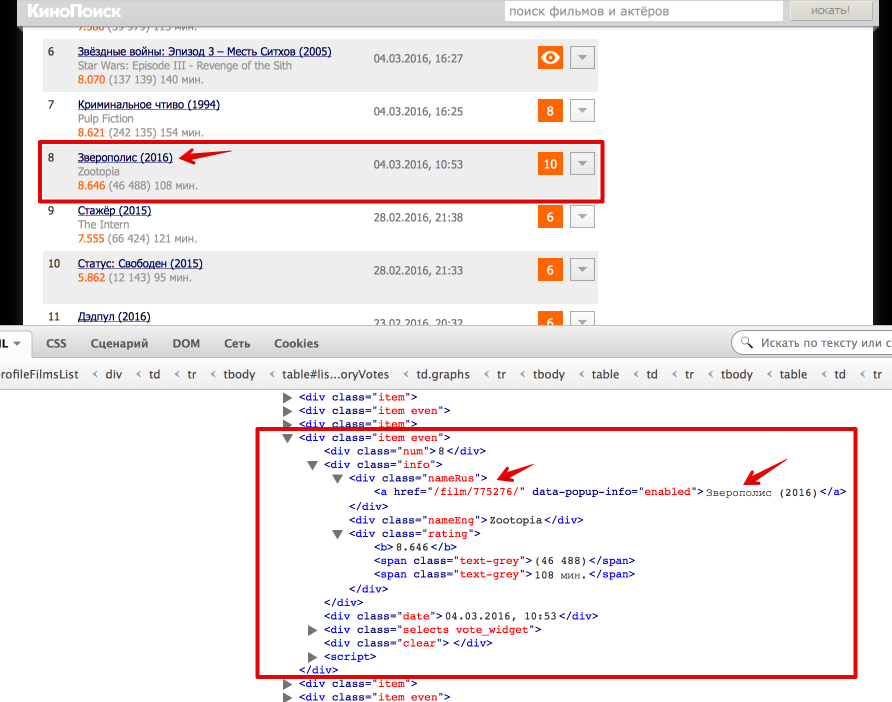

Back to our task

Let’s select this node:

Each film is represented as

Let’s look at how to extract the film’s Russian title and the link to the film’s page (we’ll also see how to get an element’s text and the value of an attribute).
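A sketch of that extraction with BeautifulSoup. The markup and the class names film-list, item, and name are hypothetical; check the real ones in the developer tools. The last line applies the debugging tip that follows: printing a tag shows its HTML.

```python
from bs4 import BeautifulSoup

# Hypothetical markup with hypothetical class names.
html = """<div class="film-list">
<div class="item"><div class="name"><a href="/film/1/">First film</a></div></div>
<div class="item even"><div class="name"><a href="/film/2/">Second film</a></div></div>
</div>"""

soup = BeautifulSoup(html, "html.parser")
film_list = soup.find("div", class_="film-list")

films = []
# class_="item" also matches class="item even", since class is multi-valued.
for item in film_list.find_all("div", class_="item"):
    link = item.find("div", class_="name").find("a")
    # .text is the element's text; .get() returns an attribute value.
    films.append((link.text, link.get("href")))

print(films)
print(film_list.find("div", class_="item"))
```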

One more small debugging hint: to see what is inside a selected node, in BeautifulSoup you can simply print it, and in lxml you can use the tostring() function of the etree module.

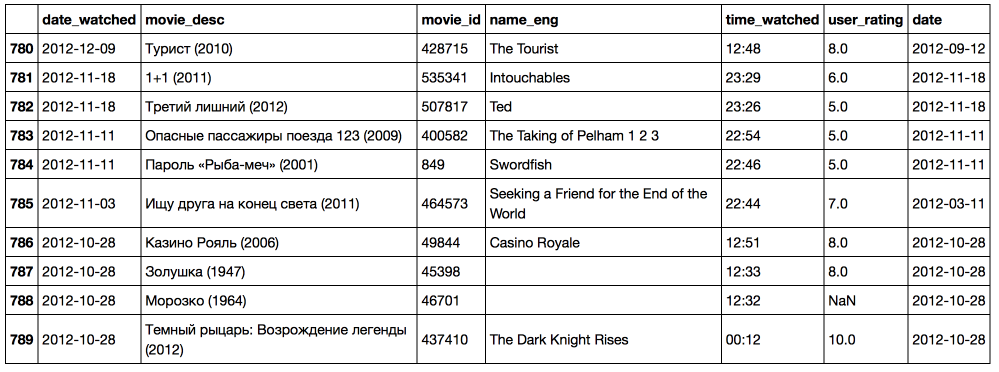

Summary

As a result, we learned how to parse websites, got acquainted with the Requests, BeautifulSoup, and lxml libraries, and obtained data about the films watched on KinoPoisk that is suitable for further analysis.

The full project code can be found on GitHub.

Parse HTML Data in Python

With the rise of web browsers, data across the web is widely available to consume and use for various purposes. However, this HTML data is difficult to use programmatically in its raw form.

We need a way to parse the HTML so that its contents become available programmatically. This article covers several ways to parse HTML data quickly with Python libraries.

Use the BeautifulSoup Module to Parse HTML Data in Python

Python offers the BeautifulSoup module to parse and pull essential data from the HTML and XML files.

This saves programmers hours by letting them navigate the document structure and fetch data from HTML or other markup in a readable format.


The BeautifulSoup module accepts HTML as a string or an open file object (it does not download web pages itself, so fetch a URL with a library such as Requests first) and returns the requested data through the search and navigation functions it provides.

Let us look at some of the functions BeautifulSoup provides through an example. We will parse the HTML file below (example.html) to extract some data.

To use the functions available in the BeautifulSoup module, we first need to install it with the following command: pip install beautifulsoup4

Once that is done, we pass the HTML file (example.html) to the module, as shown below.

The BeautifulSoup() constructor builds a parse tree of the HTML file using Python’s built-in html.parser. We can now use the resulting object (data in the code above) to traverse the HTML file.

Let us understand the HTML tag component breakup through the below diagram.

We use object.html_outer_tag.html_inner_tag to extract the data within a specific HTML tag from the entire script or web page. With the BeautifulSoup module, we can even fetch data against individual HTML tags such as title, div, p, etc.
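A sketch of this dotted access on a hypothetical stand-in for example.html (the article’s file is not reproduced here):

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for example.html.
html = """<html><head><title>Example page</title></head>
<body><div><p>First paragraph</p><p>Second paragraph</p></div></body></html>"""
soup = BeautifulSoup(html, "html.parser")

# object.outer_tag.inner_tag: each step returns the first matching tag.
page_title = soup.head.title.text
first_para = soup.body.div.p.text
# To get every <p>, not just the first, search instead of navigating.
all_paras = [p.text for p in soup.find_all("p")]
print(page_title)
print(first_para)
print(all_paras)
```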

Let us try to extract the data against different HTML tags shown below in a complete code format.

We tried to extract the data enclosed within the tag wrapped around the as the outer tag with the above code. Thus, we point the BeautifulSoup object to that tag.

Let us consider the below example to understand the parsing of HTML tags such as

Consider the below HTML code.

If we wish to display or extract the information of the tag

Thus, combined with an HTTP library such as Requests to download the page, we can scrape web pages using this module. It navigates the parsed HTML or XML and fetches the specific data we need based on the tags.

Use the PyQuery Module to Parse HTML Data in Python

The Python pyquery module is a jQuery-like library that lets us run jQuery-style queries against XML or HTML documents, making it easy to parse them and extract meaningful data.

The pyquery module offers us a PyQuery class that wraps the HTML we want to extract data from. Its constructor accepts an HTML string (or a file through the filename argument) and returns an object representing the parsed document.

This object can then be used to select the exact HTML tag whose content or text is to be parsed. Consider the HTML snippet below (demo.html).

We then import PyQuery from the pyquery module and use it to load the demo.html file.

Then, object('html_tag').text() extracts the text associated with any HTML tag.

The obj('head') call selects the head tag of the document, and the text() method retrieves the text inside that tag.

Use the lxml Library to Parse HTML Data in Python

Python offers us the lxml.html module to parse and work with HTML data efficiently. The BeautifulSoup module also parses HTML, but lxml is generally faster on large or complex documents (BeautifulSoup can itself use lxml as its underlying parser).

With the lxml.html module, we can parse HTML with the parse() function and extract the data for a particular HTML tag. parse() accepts a filename, a file object, or a URL and returns an element tree; calling getroot() on that tree gives us the root element of the document.

We can then call cssselect() on that root element with a CSS selector (such as a tag name) to get the matching elements and display their content; note that cssselect() requires the separate cssselect package. We will parse the HTML script below with the lxml.html module.

Let us have a look at the Python snippet below.

Here, we associate the object info with the HTML script (example.html) through the parse() function. Then we use the cssselect() function to display the content bound with the
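A sketch of the parse() and getroot() steps on a hypothetical stand-in for example.html. To avoid depending on the cssselect package, it uses an XPath query that selects the same div elements root.cssselect("div") would return:

```python
import lxml.html

# Hypothetical stand-in for example.html, written out so parse() has a file to read.
with open("example.html", "w", encoding="utf-8") as f:
    f.write("<html><body><div><h2>Title</h2><p>Some text</p></div></body></html>")

tree = lxml.html.parse("example.html")  # an ElementTree for the whole document
root = tree.getroot()                    # the <html> element

# Same elements as root.cssselect("div"), but with lxml alone.
div_texts = [div.text_content() for div in root.xpath("//div")]
print(div_texts)
```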

It displays all the data enclosed by the and div tags.

Use the justext Library to Parse HTML Data in Python

The Python justext module extracts a simplified form of text from HTML pages. It helps us remove boilerplate content such as headers, footers, and navigation links.

With the justext module, we can easily extract full-fledged sentences suitable for building linguistic data sources. The justext() function does not fetch pages itself: it accepts the page’s HTML and a stoplist for the target language, and splits the main content into paragraphs (English text in the example below).

Consider the below example.

We use the requests.get() function to send a GET request to the web URL passed to it. Once we have the response, we use the justext() function to parse the HTML data.

We pass the response’s content attribute (the raw bytes of the page) as the first argument to justext().

The second argument comes from the get_stoplist() function, which returns the stop words for a particular language (English, in the example below); jusText uses them to decide which paragraphs are real text.

Use the EHP Module to Parse HTML Data in Python

We have explored several Python modules for parsing HTML data, but popular modules like BeautifulSoup and PyQuery may not perform efficiently on huge or complex HTML documents. To handle broken or complex HTML, we can use the Python EHP module.

This module has a gentle learning curve and is easy to adopt. The EHP module offers us the Html() function, which creates a parser object that accepts the HTML script as input.

To make this happen, we use the feed() function to feed the HTML data to the Html() function for identification and processing. Finally, the find() method enables us to parse and extract data associated with a specific tag passed to it as a parameter.

Have a look at the below example.

Here, the HTML script is stored in the script variable. We pass it to the feed() method of the Html() object, which parses it.

We then tried to parse the HTML data and get the data against the

Conclusion

This tutorial discussed different approaches to parsing HTML data using various Python modules and libraries. We also walked through practical examples to understand the process of HTML data parsing in Python.