How to save sklearn model


How to save and load your Scikit-learn models in a minute

Have you ever built a machine learning model and wondered how to save it? In a minute, I will show you how to save your scikit-learn models to a file.

Saving a model is called serialization: we store the object as a stream of bytes on disk. Loading, or restoring, the model is called deserialization: we read the stream of bytes from disk back into a Python object.

Why should you save your model?

Tools to save and restore models in Scikit-learn

The first tool we describe is Pickle, the standard Python tool for object serialization and deserialization. Next, we look at the Joblib library, which offers easy (de)serialization of objects containing large data arrays. Finally, we present a manual approach for saving and restoring objects to and from JSON (JavaScript Object Notation). None of these approaches is optimal in every case; choose the one that fits the needs of your project.

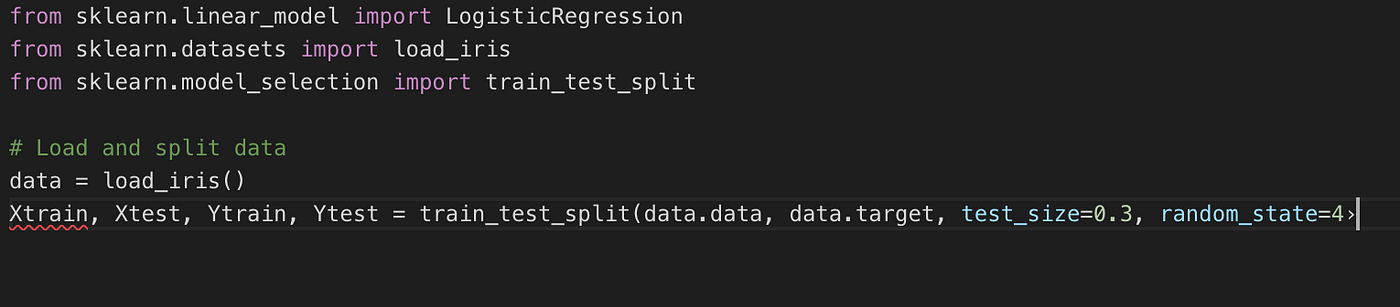

First, let's create a scikit-learn model. In our example, we'll use a Logistic Regression model and the Iris dataset. Let's import the needed libraries, load the data, and split it into training and test sets.

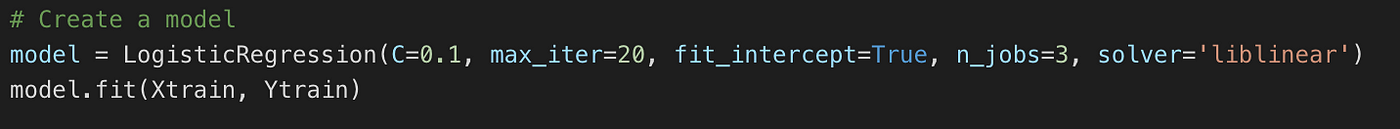

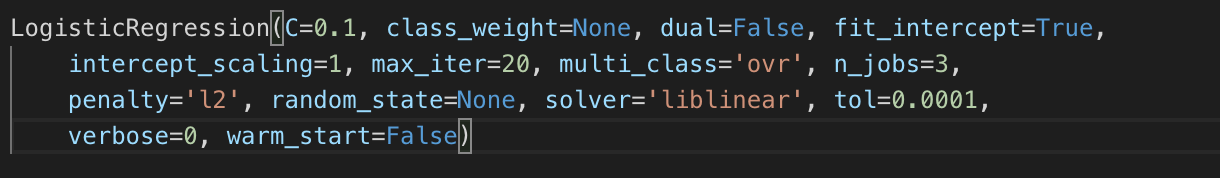

Now let’s create the model with some non-default parameters and fit it to the training data. We assume that you have previously found the optimal parameters of the model, i.e. the ones which produce the highest estimated accuracy.
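The loading, splitting, and fitting steps described above can be sketched as follows. This is a minimal sketch: `test_size=0.3`, `random_state=4`, and the hyperparameters `C=0.1` and `max_iter=1000` are illustrative stand-ins for the tuned values the text assumes, not values taken from the article.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Load the Iris dataset as NumPy arrays: 150 samples, 4 features, 3 classes
X, y = load_iris(return_X_y=True)

# Hold out 30% of the samples as a test set (illustrative split settings)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=4
)

# Non-default hyperparameters, assumed to have been tuned beforehand;
# C=0.1 (stronger regularization) and max_iter=1000 are illustrative values
model = LogisticRegression(C=0.1, max_iter=1000)
model.fit(X_train, y_train)

# Mean accuracy on the held-out test set
score = model.score(X_test, y_test)
print(X_train.shape, X_test.shape)  # (105, 4) (45, 4)
print(f"Test accuracy: {score:.3f}")
```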

Our model is now trained. We can save it and later load it to make predictions on unseen data.

Save your model with Pickle

Pickle is used for serializing and deserializing Python object structures, a process also called marshalling or flattening. Serialization refers to converting an object in memory into a byte stream that can be stored on disk or sent over a network. Later, this byte stream can be retrieved and deserialized back into a Python object. Pickle is very useful when you work with machine learning models, which you need to save in order to generate predictions later without having to rebuild everything.
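A minimal sketch of saving and restoring a model with Pickle, assuming a logistic regression fitted on the Iris data and a hypothetical file name `pickle_model.pkl` written to a temporary directory. Note that `pickle.dump` and `pickle.load` work on file objects opened in binary mode.

```python
import os
import pickle
import tempfile

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(C=0.1, max_iter=1000).fit(X, y)

# Hypothetical file name, placed in a scratch directory
path = os.path.join(tempfile.mkdtemp(), "pickle_model.pkl")

# Serialize: pickle.dump needs a file object opened in binary write mode
with open(path, "wb") as f:
    pickle.dump(model, f)

# Deserialize: open the same file in binary read mode
with open(path, "rb") as f:
    restored = pickle.load(f)

# The restored model makes the same predictions as the original
print(np.array_equal(model.predict(X), restored.predict(X)))  # True
```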

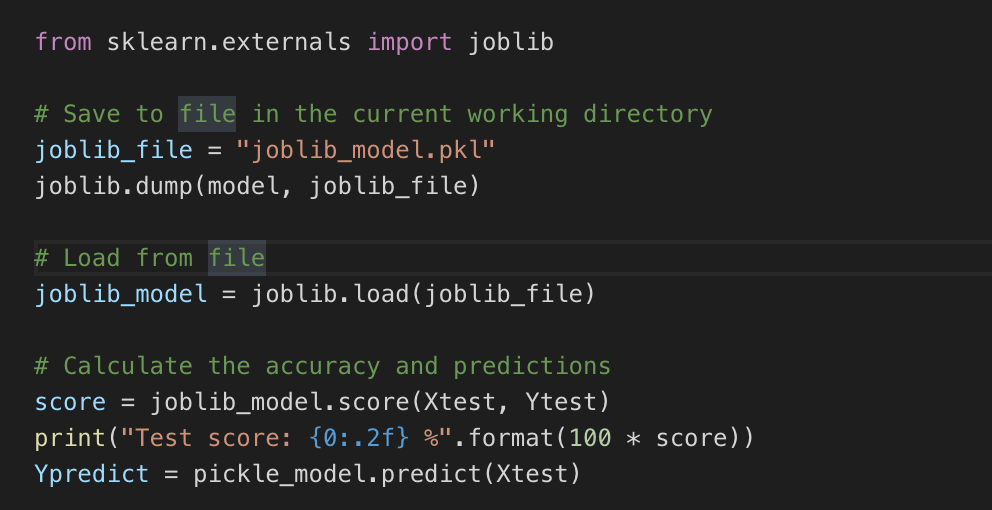

Save your model with Joblib

Joblib is part of the SciPy ecosystem and provides utilities for pipelining Python jobs. It includes utilities for efficiently saving and loading Python objects that make use of NumPy data structures. This is useful for machine learning algorithms that have many parameters or that store the entire dataset (like K-Nearest Neighbors). While Pickle requires a file object to be passed as an argument, Joblib works with both file objects and string filenames. Older Joblib versions stored each large array of a model in a separate file, while current versions write a single file; either way, the save and restore procedure stays the same.
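To illustrate, here is a sketch that persists a fitted K-Nearest Neighbors classifier, which keeps its training data in NumPy arrays, once through a string filename and once through an open file object. The file names `knn.joblib` and `knn_fileobj.joblib` are hypothetical.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
# KNN keeps the training data inside the fitted model, so the
# persisted file contains NumPy arrays holding the whole dataset
knn = KNeighborsClassifier(n_neighbors=5).fit(X, y)

tmp = tempfile.mkdtemp()

# 1) Joblib accepts a plain string filename...
path = os.path.join(tmp, "knn.joblib")
joblib.dump(knn, path)
knn_from_name = joblib.load(path)

# 2) ...or an already opened binary file object
path2 = os.path.join(tmp, "knn_fileobj.joblib")
with open(path2, "wb") as f:
    joblib.dump(knn, f)
with open(path2, "rb") as f:
    knn_from_file = joblib.load(f)

print(np.array_equal(knn_from_name.predict(X), knn_from_file.predict(X)))  # True
```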

Save your model using JSON format

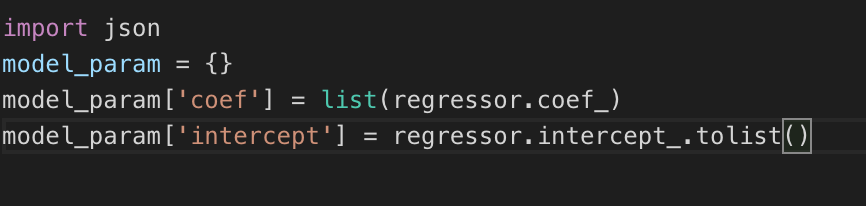

Depending on your project, you may often find Pickle and Joblib unsuitable. Some of the reasons are discussed later in the Considerations section. We first import the json library and create a dictionary containing the coefficients and intercept. The coefficients and intercept are NumPy arrays, which cannot be dumped into a JSON string directly, so we convert each array to a list and store it in the dictionary.

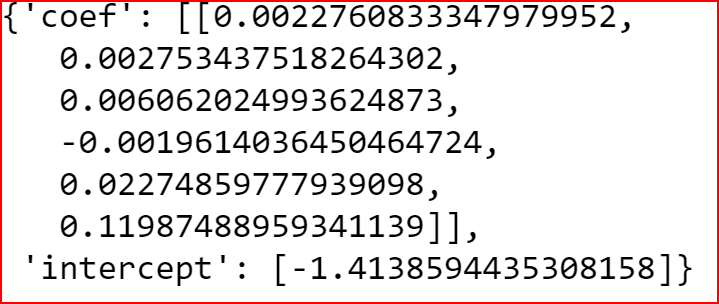

We convert the Python dictionary to a JSON string using json.dumps. To get indented output, we pass the indent parameter and set it to 4. Then we save the JSON string to a file.

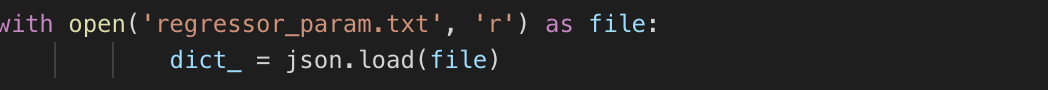

To load the parameters, we open the file in read mode, read its content as a JSON string, and load the JSON data into a Python object, which in our case is a dictionary.
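The JSON steps above can be sketched end to end, assuming a logistic regression fitted on Iris. Beyond the coefficients and intercept, this sketch also stores `classes_`, an assumption needed so the rebuilt model can map scores back to labels. Assigning fitted attributes by hand is a workaround, not an official scikit-learn loading API.

```python
import json
import os
import tempfile

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(C=0.1, max_iter=1000).fit(X, y)

# NumPy arrays are not JSON serializable, so convert them with .tolist()
model_params = {
    "coef": model.coef_.tolist(),            # shape (3, 4) -> nested lists
    "intercept": model.intercept_.tolist(),  # shape (3,) -> list of floats
    "classes": model.classes_.tolist(),      # assumption: needed by predict()
}

# Dictionary -> JSON string indented by 4 spaces -> text file
json_txt = json.dumps(model_params, indent=4)
path = os.path.join(tempfile.mkdtemp(), "regressor_param.txt")
with open(path, "w") as f:
    f.write(json_txt)

# Open the file in read mode and parse the JSON text back into a dict
with open(path, "r") as f:
    params = json.loads(f.read())

# Rebuild an equivalent model by assigning the fitted attributes by hand
restored = LogisticRegression()
restored.coef_ = np.array(params["coef"])
restored.intercept_ = np.array(params["intercept"])
restored.classes_ = np.array(params["classes"])

print(np.array_equal(model.predict(X), restored.predict(X)))
```

Because the file is plain text, you can open `regressor_param.txt` in an editor and inspect the numbers directly.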

Since JSON serialization saves the object in a text format rather than as a byte stream, the ‘regressor_param.txt’ file can be opened and modified with a text editor. Although this is convenient for the developer, it is less secure, since an intruder can view and amend the content of the JSON file.

Congratulations! You're now ready to start pickling and unpickling files with Python. You'll be able to save your machine learning models and resume work on them later. The Pickle and Joblib libraries are quick and easy to use, but the saved files can have compatibility issues across Python versions and when the code behind the model changes, for example after a library upgrade.



9. Model persistence

After training a scikit-learn model, it is desirable to have a way to persist the model for future use without having to retrain. The following sections give you some hints on how to persist a scikit-learn model.

9.1. Python specific serialization

It is possible to save a model in scikit-learn by using Python’s built-in persistence model, namely pickle:
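The pattern referred to here looks roughly like this sketch: fit an estimator, serialize it to bytes in memory with `pickle.dumps`, and rebuild it with `pickle.loads`. The choice of `svm.SVC` on Iris is illustrative.

```python
import pickle

from sklearn import datasets, svm

# Fit a support vector classifier on the Iris data
clf = svm.SVC()
X, y = datasets.load_iris(return_X_y=True)
clf.fit(X, y)

# pickle.dumps serializes to a bytes object in memory; no file involved
s = pickle.dumps(clf)

# pickle.loads rebuilds an equivalent estimator from those bytes
clf2 = pickle.loads(s)
print(clf2.predict(X[0:1]))  # [0]
print(y[0])                  # 0
```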

In the specific case of scikit-learn, it may be better to use joblib's replacement of pickle (dump & load), which is more efficient on objects that carry large NumPy arrays internally, as is often the case for fitted scikit-learn estimators, but can only pickle to the disk and not to a string:

Later you can load back the pickled model (possibly in another Python process) with:
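A sketch of the joblib variant, assuming the same kind of SVC fitted on Iris; `filename.joblib` is a placeholder name, written into a temporary directory so the example does not touch the working directory.

```python
import os
import tempfile

from joblib import dump, load
from sklearn import datasets, svm

X, y = datasets.load_iris(return_X_y=True)
clf = svm.SVC().fit(X, y)

# Placeholder file name inside a scratch directory
path = os.path.join(tempfile.mkdtemp(), "filename.joblib")

# joblib.dump writes the estimator, including its NumPy arrays, to disk
dump(clf, path)

# Later, possibly in another Python process, load it back
clf = load(path)
print(clf.predict(X[0:1]))  # [0]
```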

The dump and load functions also accept file-like objects instead of filenames. More information on data persistence with Joblib is available here.

9.1.1. Security & maintainability limitations

pickle (and joblib by extension) has some issues regarding maintainability and security. Because of this:

Never unpickle untrusted data as it could lead to malicious code being executed upon loading.

While models saved using one version of scikit-learn might load in other versions, this is entirely unsupported and inadvisable. It should also be kept in mind that operations performed on such data could give different and unexpected results.
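To make the first warning concrete, here is a deliberately harmless sketch. The hypothetical `Payload` class uses `__reduce__` to tell pickle to call the built-in `eval` while loading; a malicious file could just as easily call something destructive instead.

```python
import pickle


class Payload:
    # __reduce__ tells pickle how to rebuild an object: "call this callable
    # with these arguments". Here the callable is the built-in eval.
    def __reduce__(self):
        return (eval, ("sum(range(10))",))


data = pickle.dumps(Payload())

# pickle.loads does not give back a Payload: it calls eval("sum(range(10))").
# An untrusted file could run any code at this point.
result = pickle.loads(data)
print(result)  # 45
```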

In order to rebuild a similar model with future versions of scikit-learn, additional metadata should be saved along the pickled model:

The training data, e.g. a reference to an immutable snapshot

The python source code used to generate the model

The versions of scikit-learn and its dependencies

The cross validation score obtained on the training data

This should make it possible to check that the cross-validation score is in the same range as before.
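One possible way to keep that metadata next to the model is to bundle both in a dictionary before dumping it. The keys, the script name `train_iris.py`, and the file name below are hypothetical; the point is only that the versions and the cross-validation score travel with the model.

```python
import os
import platform
import tempfile

import joblib
import numpy as np
import sklearn
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Cross-validation score to compare against after a future rebuild
cv_score = cross_val_score(model, X, y, cv=5).mean()
model.fit(X, y)

# Hypothetical layout: the model plus the metadata listed above
bundle = {
    "model": model,
    "metadata": {
        "training_data": "iris (sklearn.datasets.load_iris)",  # data reference
        "source_code": "train_iris.py",  # hypothetical training script
        "python_version": platform.python_version(),
        "sklearn_version": sklearn.__version__,
        "numpy_version": np.__version__,
        "cv_score": float(cv_score),
    },
}

path = os.path.join(tempfile.mkdtemp(), "model_with_metadata.joblib")
joblib.dump(bundle, path)

loaded = joblib.load(path)
print(loaded["metadata"]["sklearn_version"], loaded["metadata"]["cv_score"])
```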

Aside from a few exceptions, pickled models should be portable across architectures, assuming the same versions of dependencies and Python are used. If you encounter an estimator that is not portable, please open an issue on GitHub. Pickled models are often deployed in production using containers, like Docker, in order to freeze the environment and dependencies.

If you want to know more about these issues and explore other possible serialization methods, please refer to this talk by Alex Gaynor.

9.2. Interoperable formats

For reproducibility and quality control needs, when different architectures and environments should be taken into account, exporting the model in Open Neural Network Exchange (ONNX) format or Predictive Model Markup Language (PMML) format might be a better approach than using pickle alone. These formats are helpful when you want to use your model for prediction in a different environment from the one where it was trained.

ONNX is a binary serialization of the model. It has been developed to improve the usability of the interoperable representation of data models. It aims to facilitate the conversion of data models between different machine learning frameworks and to improve their portability across computing architectures. More details are available from the ONNX tutorial. To convert a scikit-learn model to ONNX, a dedicated tool, sklearn-onnx, has been developed.

PMML is an implementation of the XML document standard defined to represent data models together with the data used to generate them. Being human and machine readable, PMML is a good option for model validation on different platforms and for long-term archiving. On the other hand, like XML in general, its verbosity does not help in production when performance is critical. To convert a scikit-learn model to PMML, you can use, for example, sklearn2pmml, distributed under the Affero GPLv3 license.

Save classifier to disk in scikit-learn

How do I save a trained Naive Bayes classifier to disk and use it to predict data?

I have the following sample program from the scikit-learn website:


Classifiers are just objects that can be pickled and dumped like any other. To continue your example:
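Continuing the asker's scenario, here is a sketch that assumes a `GaussianNB` classifier fitted on Iris and a hypothetical file name `my_dumped_classifier.pkl`:

```python
import os
import pickle
import tempfile

from sklearn import datasets
from sklearn.naive_bayes import GaussianNB

iris = datasets.load_iris()
gnb = GaussianNB().fit(iris.data, iris.target)

# Hypothetical file name for the dumped classifier
path = os.path.join(tempfile.mkdtemp(), "my_dumped_classifier.pkl")

# Save the classifier
with open(path, "wb") as fid:
    pickle.dump(gnb, fid)

# Load it again and use it to predict
with open(path, "rb") as fid:
    gnb_loaded = pickle.load(fid)

print((gnb_loaded.predict(iris.data) == gnb.predict(iris.data)).all())  # True
```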

Edit: if you are using a sklearn Pipeline in which you have custom transformers that cannot be serialized by pickle (nor by joblib), then using Neuraxle’s custom ML Pipeline saving is a solution where you can define your own custom step savers on a per-step basis. The savers are called for each step if defined upon saving, and otherwise joblib is used as default for steps without a saver.

You can also use joblib.dump and joblib.load, which are much more efficient at handling numerical arrays than the default Python pickler.

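Joblib is installed as a dependency of scikit-learn. Older scikit-learn versions also bundled it as sklearn.externals.joblib, but that module was removed in scikit-learn 0.23, so import joblib directly.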

Edit: in Python 3.8+, it's possible to use pickle for efficient pickling of objects with large numerical arrays as attributes if you use pickle protocol 5 (which was not the default before Python 3.14).
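A minimal sketch of requesting protocol 5 explicitly (Python 3.8+), assuming a `LinearRegression` fitted on random data so that `coef_` is a fairly large float64 array. The array sizes and the seed are arbitrary.

```python
import pickle

import numpy as np
from sklearn.linear_model import LinearRegression

# Random data with many features, so coef_ is a sizeable float64 array
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2000))
y = rng.normal(size=200)
model = LinearRegression().fit(X, y)

# Ask for protocol 5 explicitly instead of relying on the default
data = pickle.dumps(model, protocol=5)
restored = pickle.loads(data)

print(data[:2])  # b'\x80\x05': the PROTO opcode followed by protocol 5
print(np.array_equal(model.coef_, restored.coef_))  # True
```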

In sklearn terms, what you are looking for is called model persistence, and it is documented in the introduction and in the model persistence sections.

So you have initialized your classifier and trained it for a long time with

After this you have two options:

1) Using Pickle

2) Using Joblib

Once again, it is helpful to read the above-mentioned links.

In many cases, particularly with text classification, it is not enough to store just the classifier; you'll need to store the vectorizer as well, so that you can vectorize your input in the future.

future use case:
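A sketch of storing the vectorizer together with the classifier, assuming a tiny hypothetical corpus, a `TfidfVectorizer`, and a `MultinomialNB` classifier. The pair is pickled as one tuple so that the exact same vocabulary is used at prediction time.

```python
import os
import pickle
import tempfile

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

# Tiny hypothetical corpus with sentiment labels (1 = positive)
docs = ["great movie", "loved it", "terrible plot", "awful acting"]
labels = [1, 1, 0, 0]

vectorizer = TfidfVectorizer()
clf = MultinomialNB().fit(vectorizer.fit_transform(docs), labels)

# Dump the fitted vectorizer together with the classifier
path = os.path.join(tempfile.mkdtemp(), "text_model.pkl")
with open(path, "wb") as f:
    pickle.dump((vectorizer, clf), f)

# Future use: load both and vectorize new text exactly as in training
with open(path, "rb") as f:
    vectorizer_loaded, clf_loaded = pickle.load(f)

new_docs = ["loved the movie", "awful plot"]
print(clf_loaded.predict(vectorizer_loaded.transform(new_docs)))  # [1 0]
```

Wrapping both steps in a `sklearn.pipeline.Pipeline` and pickling that single object would be an equivalent design.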

Before dumping the vectorizer, one can delete the stop_words_ attribute of the vectorizer by:

to make dumping more efficient. Also, if your classifier's parameters are sparse (as in most text classification examples), you can convert them from dense to sparse, which makes a huge difference in memory consumption, loading, and dumping. Sparsify the model by:

This works automatically for SGDClassifier, but if you know your model is sparse (lots of zeros in clf.coef_), you can manually convert clf.coef_ into a CSR scipy sparse matrix by:

and then you can store it more efficiently.
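Both sparsification routes can be sketched as follows, assuming an `SGDClassifier` with an L1 penalty on Iris, chosen only to keep the example small. `sparsify()` and `densify()` are methods of scikit-learn's linear classifiers; the manual route assigns a `scipy.sparse.csr_matrix` to `coef_` directly.

```python
import numpy as np
import scipy.sparse as sp
from sklearn.datasets import load_iris
from sklearn.linear_model import SGDClassifier

X, y = load_iris(return_X_y=True)

# An L1 penalty tends to drive many coefficients to exactly zero
clf = SGDClassifier(penalty="l1", alpha=0.01, random_state=0).fit(X, y)
dense_pred = clf.predict(X)

# Option 1: built-in conversion of coef_ to a scipy.sparse CSR matrix
clf.sparsify()
print(sp.issparse(clf.coef_))  # True

# Option 2 (manual): go back to dense, then convert coef_ yourself
clf.densify()
clf.coef_ = sp.csr_matrix(clf.coef_)
print(sp.issparse(clf.coef_))  # True

# The storage format should not change the predictions
print(np.array_equal(dense_pred, clf.predict(X)))
```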

sklearn estimators implement methods that make it easy to save the relevant trained properties of an estimator. Some estimators implement __getstate__ methods themselves, but others, like the GMM, just use the base implementation, which simply saves the object's inner dictionary:
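To see what the base implementation returns, here is a small sketch with a fitted `LinearRegression` (an arbitrary choice). In recent scikit-learn versions, the state also carries a `_sklearn_version` entry, which is used to warn when a model is loaded under a different version.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LinearRegression

X, y = load_iris(return_X_y=True)
reg = LinearRegression().fit(X, y)

# BaseEstimator.__getstate__ returns a dict built from the instance
# __dict__: hyperparameters plus fitted attributes such as coef_
state = reg.__getstate__()
print(sorted(state))
```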

The recommended method to save your model to disk is to use the pickle module:

However, you should save additional data so you can retrain your model in the future, or suffer dire consequences (such as being locked into an old version of sklearn).

In order to rebuild a similar model with future versions of scikit-learn, additional metadata should be saved along the pickled model:

The training data, e.g. a reference to an immutable snapshot

The python source code used to generate the model

The versions of scikit-learn and its dependencies

The cross validation score obtained on the training data

This is especially true for ensemble estimators that rely on the tree.pyx module written in Cython (such as IsolationForest), since it creates a coupling to the implementation, which is not guaranteed to be stable between versions of sklearn. It has seen backward-incompatible changes in the past.

Machine Learning — How to Save and Load scikit-learn Models

In this post, we will explore how to persist a model built using scikit-learn in Python and load the saved model for prediction. We will explore three different methods: using pickle, using joblib, and storing the model parameters in a JSON file.

What is saving and loading of a model in Machine Learning?

We save the model's parameters and coefficients, i.e. the model's weights and biases, to a file on disk. We can later load the saved weights and biases to make predictions on unseen data.

Saving data is also called serialization, where we store an object as a stream of bytes on disk.


Loading or restoring the model is called deserialization, where we restore the stream of bytes from disk back into a Python object.

Why would we want to save a model and restore it later?

We would like to save and load the model

How can we save and load the models built using scikit-learn?

We will discuss three different ways to save and load scikit-learn models: using pickle, using joblib, and saving the model parameters in JSON format.

We will create a Linear Regression model, then save and load it using pickle, using joblib, and by saving and loading the model coefficients to a file in JSON format.

Below we have the code to create the Linear Regression
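The article's dataset is not reproduced here, so this sketch assumes synthetic data from `make_regression` (7 features, an arbitrary choice) as a stand-in; the variable name `lr_model` is also an assumption.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the article's dataset (assumption)
X, y = make_regression(n_samples=400, n_features=7, noise=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Create and fit the Linear Regression model
lr_model = LinearRegression()
lr_model.fit(X_train, y_train)

# One coefficient per feature, plus the R^2 score on the test set
print(lr_model.coef_.shape, round(lr_model.score(X_test, y_test), 3))
```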

Our model is now trained. We can save it and later load it to make predictions on unseen data.

Using Pickle

We will first import the library

Specify the file name and path where we want to save the model.

To save the model, open the file in write and binary mode, then pass the linear regression model we built, along with the file object, to pickle.dump.

To load the model, open the file in read and binary mode.

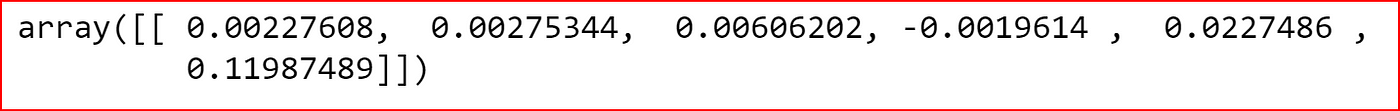

Let's check whether the loaded model has the same coefficient values.

We can now use the loaded model to make predictions for the test data.
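Put together, the Pickle steps above might look like this sketch, assuming synthetic regression data and a hypothetical file name `pickle_model.pkl`:

```python
import os
import pickle
import tempfile

import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

# Synthetic data standing in for the article's dataset (assumption)
X, y = make_regression(n_samples=400, n_features=7, noise=0.1, random_state=0)
lr_model = LinearRegression().fit(X, y)

# File name and path where we want to save the model (hypothetical)
pkl_filename = os.path.join(tempfile.mkdtemp(), "pickle_model.pkl")

# Save: open the file in write + binary mode, then dump the model into it
with open(pkl_filename, "wb") as file:
    pickle.dump(lr_model, file)

# Load: open the file in read + binary mode
with open(pkl_filename, "rb") as file:
    pickle_model = pickle.load(file)

# Same coefficients as the model we saved
print(np.array_equal(lr_model.coef_, pickle_model.coef_))  # True

# Use the loaded model to predict on a few samples
y_predict = pickle_model.predict(X[:5])
print(y_predict)
```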

Using Joblib

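First we will import the joblib library. Older code imports it from sklearn.externals, but sklearn.externals.joblib was removed in scikit-learn 0.23, so import the standalone joblib package instead.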

To save the model, we use joblib.dump, to which we pass the regression model we need to save and the filename.

Note that we are only providing the filename and not opening the file as we did for the pickle method.

Now that we have saved the model, we can load the model using joblib.load
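A sketch of the Joblib steps, importing `joblib` directly rather than from `sklearn.externals`; the synthetic data and the file name `joblib_model.pkl` are assumptions.

```python
import os
import tempfile

import joblib  # standalone package, not sklearn.externals.joblib
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

X, y = make_regression(n_samples=400, n_features=7, noise=0.1, random_state=0)
lr_model = LinearRegression().fit(X, y)

joblib_file = os.path.join(tempfile.mkdtemp(), "joblib_model.pkl")

# Only the file name is passed; joblib opens and closes the file itself
joblib.dump(lr_model, joblib_file)

# Load the model back from the same file name
joblib_model = joblib.load(joblib_file)
print(np.array_equal(lr_model.coef_, joblib_model.coef_))  # True
```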

Using JSON Format

We can also save the model parameters in a JSON file and load them back. In the example below, we will save the model coefficients and intercept and load them back.

We will first import the json library and create a dictionary containing the coefficients and intercept.

The coefficients and intercept are NumPy arrays. We cannot dump an array into a JSON string, so we convert each array to a list and store it in the dictionary.

We convert the Python dictionary to a JSON string using json.dumps. We want indented output, so we pass the indent parameter and set it to 4.

Save the JSON string to a file.

We load the content of the file into a JSON string: open the file in read mode, then load the JSON data into a Python object, which in our case is a dictionary.
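A sketch of the full JSON round trip for the linear regression, assuming synthetic data. The final step, rebuilding a `LinearRegression` by assigning `coef_` and `intercept_`, goes slightly beyond the text and is a workaround rather than an official loading API.

```python
import json
import os
import tempfile

import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

X, y = make_regression(n_samples=400, n_features=7, noise=0.1, random_state=0)
lr_model = LinearRegression().fit(X, y)

# coef_ is a NumPy array; for a single target, intercept_ is a scalar
model_param = {
    "coef": lr_model.coef_.tolist(),
    "intercept": float(lr_model.intercept_),
}

# Dictionary -> indented JSON string -> text file
json_txt = json.dumps(model_param, indent=4)
path = os.path.join(tempfile.mkdtemp(), "regressor_param.txt")
with open(path, "w") as file:
    file.write(json_txt)

# Text file -> JSON string -> dictionary
with open(path, "r") as file:
    model_param_loaded = json.loads(file.read())

# Rebuild a LinearRegression by assigning the fitted attributes directly
restored = LinearRegression()
restored.coef_ = np.array(model_param_loaded["coef"])
restored.intercept_ = model_param_loaded["intercept"]

print(np.allclose(lr_model.predict(X), restored.predict(X)))  # True
```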

Are there any other considerations when saving or loading the model?


References:

Data set from: Mohan S Acharya, Asfia Armaan, Aneeta S Antony, "A Comparison of Regression Models for Prediction of Graduate Admissions," IEEE International Conference on Computational Intelligence in Data Science, 2019.

3.4. Model persistence

After training a scikit-learn model, it is desirable to have a way to persist the model for future use without having to retrain. The following section gives you an example of how to persist a model with pickle. We’ll also review a few security and maintainability issues when working with pickle serialization.


Since a model's internal representation may differ between architectures, dumping a model on one architecture and loading it on another is not supported.


Sources:

- http://scikit-learn.org/stable/model_persistence.html

- http://stackoverflow.com/questions/10592605/save-classifier-to-disk-in-scikit-learn

- http://medium.datadriveninvestor.com/machine-learning-how-to-save-and-load-scikit-learn-models-d7b99bc32c27

- http://scikit-learn.org/0.22/modules/model_persistence.html