How to submit to Kaggle from a notebook

Submitting results to a Kaggle competition from the command line, regardless of kernel type or file name, in or out of Kaggle

Within Kaggle: How can I submit my results to a Kaggle competition regardless of kernel type or file name?

And if I am in a notebook outside Kaggle (Colab, Jupyter, Paperspace, etc.)?

Answer

Introduction (you can skip this part)

I was looking around for a method to do that. In particular, being able to submit at any point within the notebook (so you can test different approaches), a file with any name (to keep things separated), and any number of times (respecting the Kaggle limitations).

I found many websites explaining the process.

However, they fail to clarify that the kernel must be of type «Script» and not «Notebook».

That has some limitations that I haven’t fully explored.

I just wanted to be able to submit whatever file from the notebook, just like any other command within it.

The process

Well, here is the process I came up with.

Suggestions, errors, comments, improvements are welcome. Specifically I’d like to know why this method is no better than the one described above.

Q: Where do I get my kaggle credentials?

A: You get them from https://www.kaggle.com > ‘Account’ > «Create new API token»

1. Install required libraries

See Note below.

How you get the file there is up to you.

One simple way is this:

This may seem a bit cumbersome, but sooner or later your API credentials may change, and updating the file in one place (the dataset) will update it in all your notebooks.
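The copy step described above could look roughly like the following Python sketch. It assumes the token is a JSON file with `username` and `key` fields and that the Kaggle client reads `kaggle.json` from `~/.kaggle` or from the directory named in the `KAGGLE_CONFIG_DIR` environment variable. The function name `install_kaggle_token`, the paths, and the placeholder values are hypothetical.

```python
import json
import os
import shutil
import stat
import tempfile
from pathlib import Path


def install_kaggle_token(source_file, config_dir):
    """Copy an API token file into config_dir as kaggle.json (owner-only access)."""
    config_dir = Path(config_dir)
    config_dir.mkdir(parents=True, exist_ok=True)
    target = config_dir / "kaggle.json"
    shutil.copyfile(source_file, target)
    # Restrict permissions; the Kaggle client warns about tokens others can read.
    target.chmod(stat.S_IRUSR | stat.S_IWUSR)
    return target


# Demo with a throwaway token. In a Kaggle kernel the source would be a file in
# a private dataset, e.g. /kaggle/input/<your-dataset>/kaggle.json (hypothetical).
work = Path(tempfile.mkdtemp())
source = work / "downloaded_token.json"
source.write_text(json.dumps({"username": "your-username", "key": "your-api-key"}))

target = install_kaggle_token(source, work / "config")
# Tell the client where to look instead of the default ~/.kaggle directory.
os.environ["KAGGLE_CONFIG_DIR"] = str(target.parent)
```

Keeping the copy in one small function means a notebook only needs a single call when the token changes.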

3. Submit with a simple command.

The competition is identified by its code name. You can get it from the URL of the competition or from the «My submissions» section of the competition page.
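As a sketch of what the submission call can look like from Python, the helper below builds the `kaggle competitions submit` command line and, optionally, runs it. The helper names and the values `titanic`, `my_submission.csv`, and `first try` are placeholders, not part of the original answer.

```python
import subprocess


def build_submit_command(competition, file_name, message):
    """Return the kaggle CLI call that submits file_name to competition."""
    return [
        "kaggle", "competitions", "submit",
        "-c", competition,   # code name taken from the competition URL
        "-f", file_name,     # the file to upload; any name works
        "-m", message,       # free-text description of the submission
    ]


def submit(competition, file_name, message):
    """Run the submission; needs the kaggle package and valid credentials."""
    return subprocess.run(
        build_submit_command(competition, file_name, message), check=True
    )


# Placeholder values; nothing is sent until submit() is called.
command = build_submit_command("titanic", "my_submission.csv", "first try")
print(" ".join(command))
```

Inside a notebook cell the same command can also be typed directly with a leading `!`.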

Note: If you are concerned about the security of your credentials and/or want to share the kernel, you can type the 2 commands with your credentials in the «Console» instead of within the notebook (example below). They will be valid/available during that session only.

You can find the console at the bottom of your kernel.

PS: Initially this was posted here, but when the answer grew, the Markdown display broke in Kaggle (not in other places), so I had to take it out of Kaggle.

Making Your First Kaggle Submission

An easy-to-understand guide to getting started with competitions and successfully modelling and making your first submission.

In the world of Data Science, using Kaggle is almost a necessity. You use it to get datasets for your projects, view and learn from various notebooks shared generously by people who want to see you succeed in building good machine learning models, discover new insights into how to approach complex machine learning problems, the list goes on.

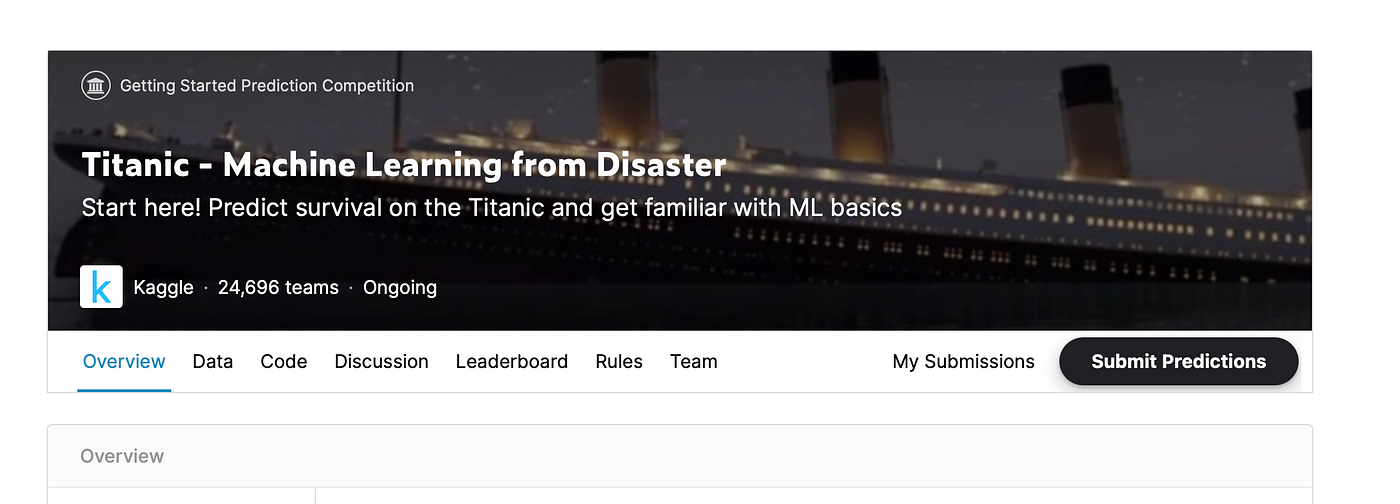

One of the best ways to try out your skills in the real world is through the competitions hosted on the website. The Titanic competition is the most wholesome, beginner friendly way to get started and obtain a nice feel of what to expect and how to approach a problem in the best possible ways.

In this article, I will walk you through making your first machine learning model and successfully entering your own ship to sail in the sea of these competitions.

Understanding the data

First — open up the competition page. You will need to be referencing it every now and then.

For this particular task, our problem statement and the end goal are clearly defined on the competition page itself:

We need to develop an ML algorithm to predict the survival outcome of passengers on the Titanic.

The outcome is measured as 0 (not survived) and 1 (survived). This is the dead giveaway that we have a binary classification problem at hand.

Well, fire up your jupyter notebook, and let’s see what the data looks like!

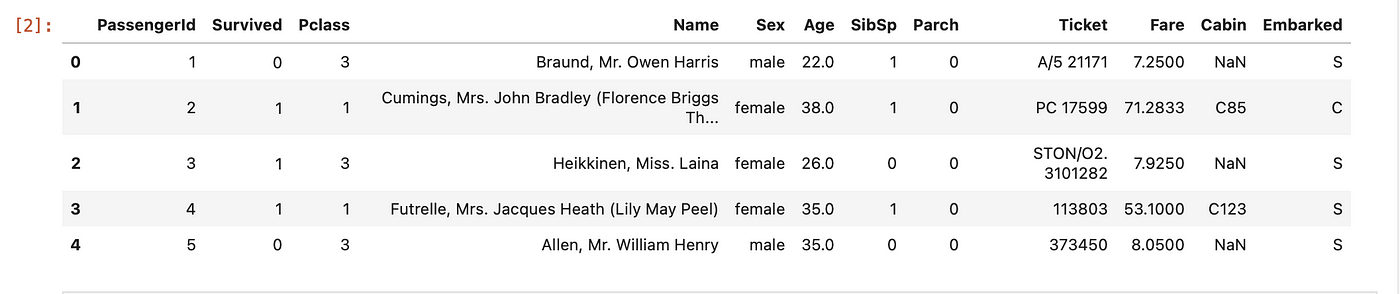

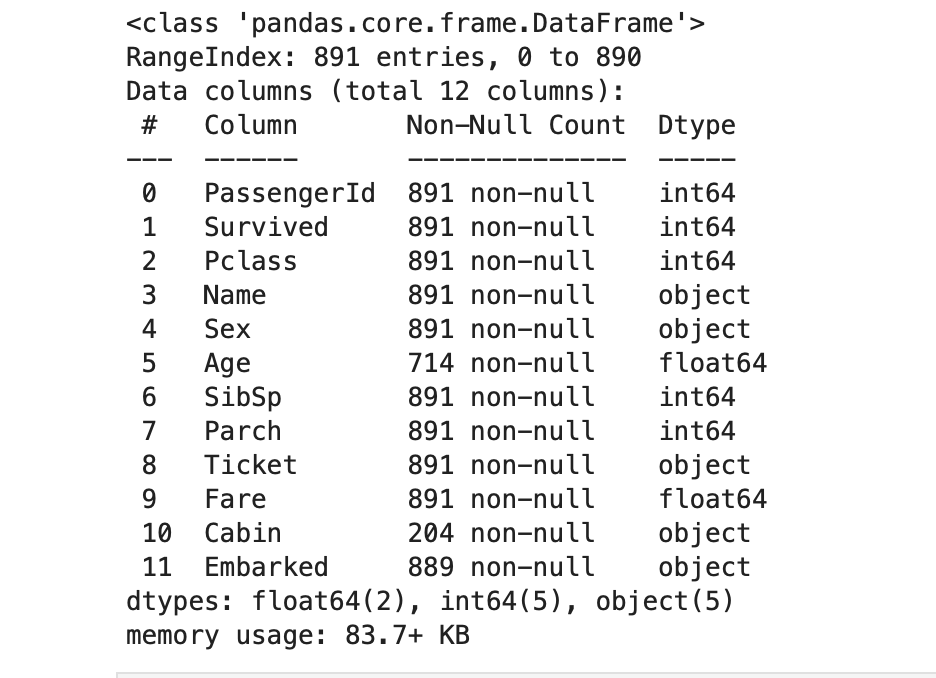

At the first glance — there is a mix of categorical and continuous features. Let’s take a look at the data types for the columns:

I won’t go into the details about what each of these features actually represent about a Titanic passenger — I’ll assume you’ve read about it by now on the Kaggle website (which you should if you haven’t).

Data cleaning

This is the backbone of our entire ML workflow, the step that makes or breaks a model. We’ll be dealing with:

of the data, as described in this wonderful article. Read it later if you want to get the most in-depth knowledge about data cleaning techniques.

Now go ahead and make a combined list of the train and test data to start our cleaning.

Our target variable will be the Survived column, so let’s keep it aside.

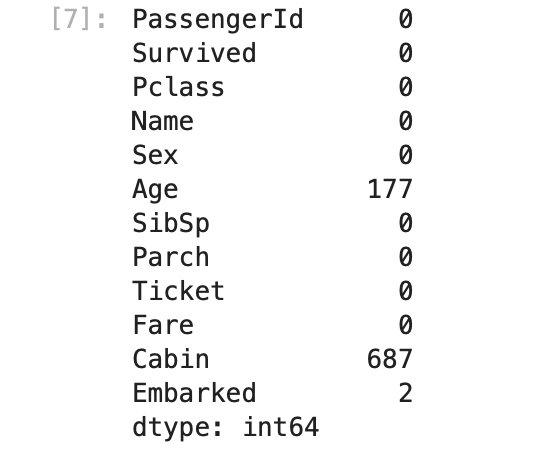

First we check for null values in columns of training data.

Right away we can observe that three columns seem quite unnecessary for modelling. Also, the Cabin column is quite sparsely populated. We could keep it and derive some kind of value from it, but for now, let’s keep it simple.

Now we move on to other columns that have null values.

We substitute the median value for nulls in Age and Fare, and the mode for nulls in Embarked (which will be S).
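The null check and the fill step can be sketched as follows. The toy frame and its values are made up for illustration; only the column names Age, Fare, and Embarked come from the article.

```python
import numpy as np
import pandas as pd

# Toy stand-in for the Titanic data; the values are made up.
df = pd.DataFrame({
    "Age": [22.0, np.nan, 38.0, 26.0],
    "Fare": [7.25, 71.28, np.nan, 8.05],
    "Embarked": ["S", "C", None, "S"],
})

print(df.isnull().sum())  # null count per column before filling

# Median for the numeric columns, most frequent value for the categorical one.
df["Age"] = df["Age"].fillna(df["Age"].median())
df["Fare"] = df["Fare"].fillna(df["Fare"].median())
df["Embarked"] = df["Embarked"].fillna(df["Embarked"].mode()[0])

remaining_nulls = int(df.isnull().sum().sum())
print(remaining_nulls)
```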

Doing that, we now have no null values in our data. Job well done!

Feature engineering

Now, let’s create some new features for our data.

We create a column called ‘familySize’, which will be the sum of the passenger’s parents, siblings, and children.

Also, we want a new column called ‘isAlone’, which indicates whether the passenger was travelling alone aboard the Titanic.
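A minimal sketch of these two features, assuming the standard Titanic columns `SibSp` (siblings/spouses aboard) and `Parch` (parents/children aboard). Whether the passenger is counted in the family size is a design choice; here the sum is taken literally, so a value of 0 means travelling alone.

```python
import pandas as pd

# Toy rows; the values are made up.
df = pd.DataFrame({"SibSp": [1, 0, 3], "Parch": [0, 0, 2]})

# Relatives aboard: siblings/spouses plus parents/children.
df["familySize"] = df["SibSp"] + df["Parch"]

# 1 if the passenger has no relatives aboard, 0 otherwise.
df["isAlone"] = (df["familySize"] == 0).astype(int)

print(df)
```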

Finally, we add one more thing, which is — the title of the passengers as a separate column.

Let’s create a new column first.
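One way to pull the title out of the Name column is a regular expression, sketched below. It assumes names follow the "Surname, Title. Given names" pattern used in the Titanic data; the column name `title` is a hypothetical choice.

```python
import pandas as pd

df = pd.DataFrame({
    "Name": [
        "Braund, Mr. Owen Harris",
        "Cumings, Mrs. John Bradley (Florence Briggs Thayer)",
        "Heikkinen, Miss. Laina",
    ]
})

# Capture the text between the comma and the first period.
df["title"] = df["Name"].str.extract(r",\s*([^.]+)\.", expand=False).str.strip()

print(df["title"].tolist())
```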

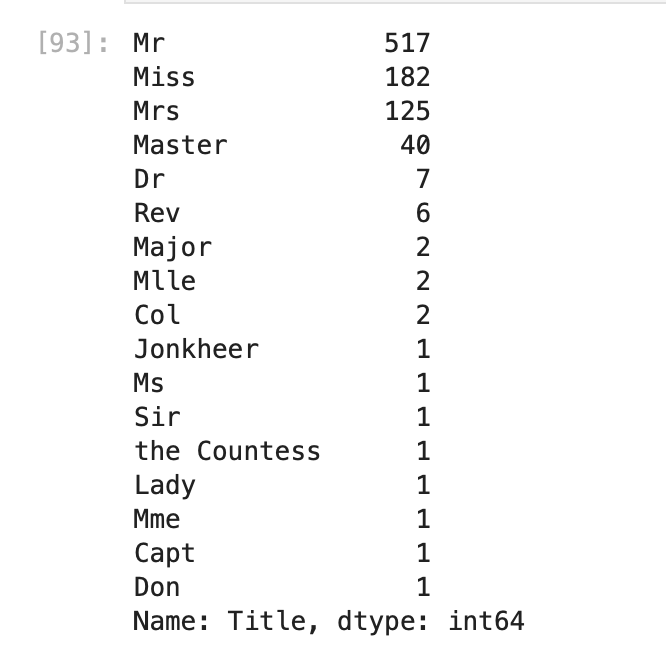

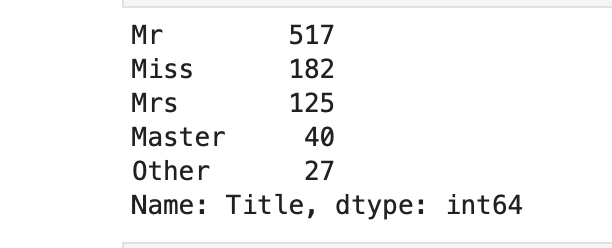

Now, let’s see how many unique titles were created.

That is quite a lot! We don’t want so many titles.

So let’s go ahead and group all titles with fewer than 10 passengers into a separate ‘Other’ category.
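Counting the titles and folding the rare ones into ‘Other’ might look like this sketch. The title counts are made up, and the threshold of 10 is the one mentioned in the text.

```python
import pandas as pd

# Made-up title distribution for illustration.
titles = pd.Series(["Mr"] * 12 + ["Miss"] * 10 + ["Dr"] * 2 + ["Rev"], name="title")

counts = titles.value_counts()
rare = counts[counts < 10].index  # titles held by fewer than 10 passengers

# Keep frequent titles as they are and replace the rare ones.
titles = titles.where(~titles.isin(rare), "Other")

grouped_counts = titles.value_counts().to_dict()
print(grouped_counts)
```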

Now we look at the modified column.

This looks way better.

Alright, let’s finally transform our two continuous columns, Age and Fare, into quartile bins. Learn more about this function here.

This makes these two columns categorical, which is exactly what we want. Now, let’s take another look at our data.
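The binning function itself is not shown in the text. A plausible candidate, used here as an assumption, is pandas `qcut`, which cuts a column into bins holding roughly equal numbers of rows. The fare values are made up.

```python
import pandas as pd

fare = pd.Series([7.25, 8.05, 13.0, 26.0, 31.0, 53.1, 71.28, 512.33], name="Fare")

# q=4 asks for quartiles; labels=False returns the bin index (0 to 3)
# instead of interval objects.
fare_bin = pd.qcut(fare, q=4, labels=False)

print(fare_bin.tolist())
```

With eight distinct values, each quartile bin receives two rows.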

Label Encoding our data

All our categorical columns can now be encoded into 0, 1, 2… etc. labels via the convenient function provided by sklearn.
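A short sketch of label encoding with scikit-learn’s `LabelEncoder`, which is presumably the function meant here. The toy frame and the choice of columns are assumptions for illustration.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

df = pd.DataFrame({
    "Sex": ["male", "female", "female"],
    "Embarked": ["S", "C", "Q"],
})

# Fit a fresh encoder per column; classes are numbered in sorted order.
for column in ["Sex", "Embarked"]:
    df[column] = LabelEncoder().fit_transform(df[column])

print(df)
```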

Alright! Now only two more steps remain. First, we drop the unnecessary columns.

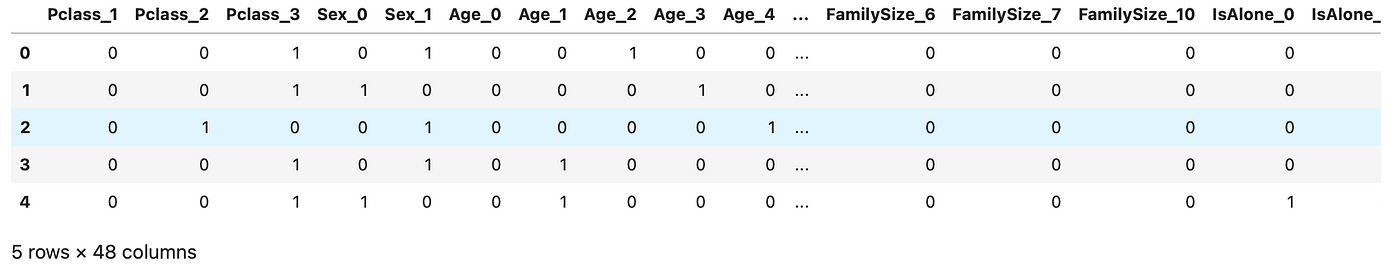

and lastly, we one-hot-encode our non-label columns with pandas’ get_dummies function.
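As an illustration of `get_dummies`, the sketch below one-hot-encodes a single column. Which columns the article encodes this way is not shown, so the `title` column here is a hypothetical example.

```python
import pandas as pd

df = pd.DataFrame({"Pclass": [1, 3, 2], "title": ["Mr", "Miss", "Mr"]})

# Each distinct value of "title" becomes its own indicator column.
encoded = pd.get_dummies(df, columns=["title"])

print(list(encoded.columns))
```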

Let’s take another look at our data now, shall we?

Awesome! Now we can begin our modelling!

Making an SVM model

Split our data into train and validation sets.

Now our training data will have the shape:

We now drop the label column from our train data.

and finally, we separate our label column from other columns.

With sklearn’s splitting function, we split our train data into train and validation sets with an 80-20 split.
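The split can be sketched with `train_test_split` as below. The arrays are synthetic stand-ins for the prepared features and labels, and `random_state=42` is an arbitrary choice made only for reproducibility.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-ins: 20 rows with 2 features each, and binary labels.
X = np.arange(40).reshape(20, 2)
y = np.array([0, 1] * 10)

# test_size=0.2 holds out 20% of the rows for validation.
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=42
)

print(X_train.shape, X_val.shape)
```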

Let’s take another look at the data shape again.

Perfect! We are ready to train our svm model.

Training the model

We import our support vector machine model:

Next, we construct a simple classifier from it and fit it on our training data and labels:

Good! We’re very near to making our final submission predictions. Great job so far!

Now, let’s validate our model on the validation set.

This looks quite nice for a simple linear svm model.
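For reference, training and validating a linear SVM can be sketched as follows. The data is synthetic (generated with `make_classification`), and `SVC(kernel="linear")` is one assumed way to get a linear SVM; the article’s exact classifier settings are not shown.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic binary classification data standing in for the prepared features.
X, y = make_classification(n_samples=200, n_features=6, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Fit a support vector classifier with a linear kernel.
clf = SVC(kernel="linear")
clf.fit(X_train, y_train)

# Share of validation rows predicted correctly.
val_predictions = clf.predict(X_val)
val_accuracy = accuracy_score(y_val, val_predictions)
print(f"validation accuracy: {val_accuracy:.3f}")
```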

Making the predictions for submission

We are finally here: the moment when we generate our first submission predictions and, with them, the file we will upload to the competition website.

Let’s use our trained classifier model to predict on the test (submission) set of the data.

Finally, we make a dataframe from the predictions.

We check the shape of our final prediction output dataframe:

Awesome! This is exactly what we need.

The last step is to make a csv file from this dataframe:
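A sketch of building the submission frame and turning it into CSV. The Titanic competition expects the columns `PassengerId` and `Survived`; the ids and predictions below are made up.

```python
import pandas as pd

# Made-up ids and predictions standing in for the real test-set output.
passenger_ids = [892, 893, 894]
predictions = [0, 1, 0]

submission = pd.DataFrame({"PassengerId": passenger_ids, "Survived": predictions})

# index=False keeps the dataframe's row index out of the file.
# Passing a path, e.g. submission.to_csv("submission.csv", index=False),
# writes to disk instead of returning a string.
csv_text = submission.to_csv(index=False)
print(csv_text)
```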

And we are done!

Making the submission

Go to the competition’s website and look for the below page to upload your csv file.

All the code from this article is available in my repo here. Although, if you’ve followed along so far, you will already have a workable codebase+file for submission.

The README file also helps with additional things like building your virtual environment and a few other things, so make sure to check it out if you want.

Easy Kaggle Offline Submission With Chaining Kernel Notebooks

A simple guide on facilitating your Kaggle submission for competitions with restricted internet access using Kaggle kernel as an intermediate step as data storage.

Are you new to Kaggle? Have you found it a fascinating world for data scientists or machine learning engineers but then you struggle with your submission? Then the following guide is right for you! I will show you how to make your experience smoother, reproducible, and extend your professional life experiences.

Quick Kaggle introduction

Kaggle is a widely known data science platform connecting machine learning (ML) enthusiasts with companies and organizations that propose challenging topics, with some financial motivation for successful solutions. As an ML engineer, you benefit from an already prepared (mostly high-quality) dataset that is ready for you to dive into. In addition, Kaggle offers discussions and lets users share and present their work as Jupyter notebooks (called Kernels) and custom datasets.

In the end, if you participate in a competition and want to rank on the leaderboard, you need to submit your solution. There are two types of competitions: they ask the user to (a) upload generated predictions in the given submission format or (b) prepare a kernel that produces predictions in the submission format. The first case is relatively trivial, so we’ll focus only on kernel submissions.

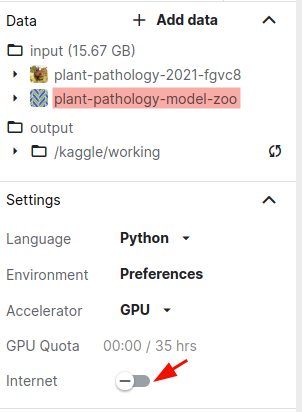

These competitions include just a minimal testing dataset so that users can verify their prediction pipeline. To prevent data leaks and for other security reasons, running kernels are not allowed to use the internet (otherwise users could upload all test data to their own storage and later overfit the dataset). On the other hand, this makes submissions harder if your kernel needs to install additional dependencies or download your latest trained model.

The dirty way with datasets

The intuitive first solution is to create storage with the required extra packages and a trained model. For this purpose, you can create a Kaggle dataset and upload all the data there. I guess you can already see that this bends a tool to a purpose it was not designed for.

Guide on Submitting Offline Kernels to a Kaggle Competition

This post presents how to create a simple Kaggle kernel for a competition submission that requires an offline environment

In the previous post, we talked about the need for hyperparameter searches, and we walked through Grid.ai Runs, which simplifies fine-tuning in the cloud. We can even monitor our training online and terminate selected runs when we choose, all with the default configuration.

Now that we have our best model, it’s time to submit our results. This challenge and several others have restricted access to the test data, which means that the real test dataset is provided only for an isolated prediction run without any external connection.

Submitting our Model to Kaggle Competition

In this challenge, the user is asked to prepare a notebook that reads a folder with test images and saves its predictions to a specific submission.csv file. You can debug the flow because a few test images are provided, and you can work with the kernel in an interactive session. When the final evaluation is performed, the dummy test folder is replaced by the true one.
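The overall shape of such a notebook can be sketched as below. This is not the competition’s actual pipeline: `predict_image` is a placeholder for real model inference, and the column names `image` and `label`, the `.jpg` extension, and the demo folder are all assumptions.

```python
import csv
import tempfile
from pathlib import Path


def predict_image(image_path):
    """Placeholder for real model inference; always returns the same label."""
    return "unknown"


def write_submission(test_dir, output_csv):
    """Predict every image in test_dir and save one row per image."""
    image_paths = sorted(Path(test_dir).glob("*.jpg"))
    with open(output_csv, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["image", "label"])  # hypothetical column names
        for path in image_paths:
            writer.writerow([path.name, predict_image(path)])
    return len(image_paths)


# Demo on a throwaway folder with two empty files standing in for test images.
work = Path(tempfile.mkdtemp())
test_dir = work / "test_images"
test_dir.mkdir()
for name in ["a.jpg", "b.jpg"]:
    (test_dir / name).touch()

submission_path = work / "submission.csv"
n_rows = write_submission(test_dir, submission_path)
print(submission_path.read_text())
```

Because the function only depends on the folder it is given, the same code runs unchanged when the dummy test folder is swapped for the real one.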

That said, the user typically has two options: prepare a kernel that

a) does the full training followed by prediction on test images;

b) uses saved checkpoint and performs the prediction only.

Making multiple iterations with scenario a) is difficult, especially since the Kaggle kernel runtime is limited. Option b) allows us to outsource the heavy computing to any other platform and come back with the trained model or even an ensemble of models.

Create a Private Kaggle Dataset for Models and Packages

To prevent test data leakage, these evaluation kernels are required to run offline, which means that all the needed packages and checkpoints must be included in the Kaggle workspace.

For this purpose, we create private storage (Kaggle calls it a dataset, even though it can hold almost anything) where we upload the required packages (all needed Python packages not included in the default Kaggle kernel) and our trained model checkpoint.
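Installing a package from such a dataset without internet access could look like the sketch below. It only builds the pip call: `--no-index` and `--find-links` are standard pip options, while the dataset folder `/kaggle/input/my-packages` and the package name `my_package` are hypothetical.

```python
import sys


def build_offline_install_command(wheel_dir, package):
    """Return a pip call that installs package using only files in wheel_dir."""
    return [
        sys.executable, "-m", "pip", "install",
        "--no-index",                    # never contact the package index
        "--find-links", str(wheel_dir),  # look for wheels in this folder instead
        package,
    ]


# Hypothetical dataset folder and package name.
command = build_offline_install_command("/kaggle/input/my-packages", "my_package")
print(" ".join(command[1:]))
```

The list can be passed to `subprocess.run(command, check=True)` inside the kernel once the wheels have been uploaded.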

How to get started on Kaggle Competitions

Step by step guide on how to structure your first Data Science projects on Kaggle

If you are starting your journey in data science and machine learning, you may have heard of Kaggle, the world’s largest data science community. With the myriad of courses, books, and tutorials addressing the subject online, it’s perfectly normal to feel overwhelmed with no clue where to start.

Although there isn’t a unanimous agreement on the best approach to take when starting to learn a skill, getting started on Kaggle from the beginning of your data science path is solid advice.

It is an amazing place to learn and share your experience and data scientists of all levels can benefit from collaboration and interaction with other users. More experienced users can keep up to date with new trends and technologies, while beginners will find a great environment to get started in the field.

Kaggle has several crash courses to help beginners train their skills. There are courses on Python, pandas, machine learning, and deep learning, to name just a few. As you gain more confidence, you can enter competitions to test your skills. In fact, after a few courses, you will be encouraged to join your first competition.

In this article, I’ll show you, in a straightforward approach, some tips on how to structure your first project. I’ll be working on the Housing Prices Competition, one of the best hands-on projects to start on Kaggle.

1. Understand the Data

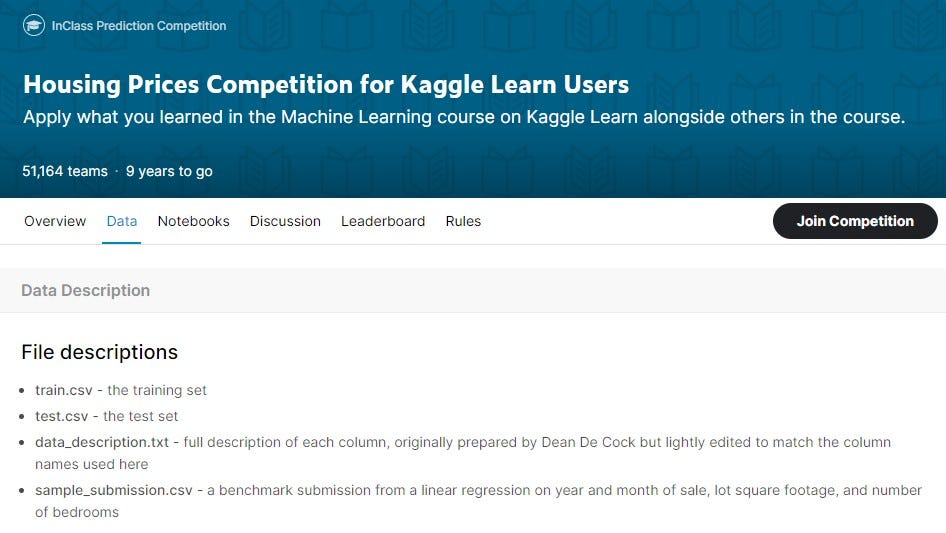

The first step when you face a new data set is to take some time to know the data. In Kaggle competitions, you’ll come across something like the sample below.

On the competition’s page, you can check the project description on Overview and you’ll find useful information about the data set on the tab Data. In Kaggle competitions, it’s common to have the training and test sets provided in separate files. On the same tab, there’s usually a summary of the features you’ll be working with and some basic statistics. It’s crucial to understand which problem needs to be addressed and the data set we have at hand.

You can use the Kaggle notebooks to execute your projects, as they are similar to Jupyter Notebooks.

2. Import the necessary libraries and data set

2.1. Libraries

The libraries used in this project are the following.

2.2. Data set

To get an overview of the data, let’s check the first rows and the size of the data set.
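A first look at the data can be sketched with pandas as follows. The inline CSV is a made-up miniature standing in for the competition’s training file; in a real notebook the path to that file would be passed to `read_csv` instead.

```python
import io

import pandas as pd

# Made-up miniature of a housing table; values are illustrative only.
csv_text = """Id,LotArea,SalePrice
1,5000,100000
2,7500,150000
3,9000,180000
"""

train = pd.read_csv(io.StringIO(csv_text), index_col="Id")

print(train.head())  # first rows
print(train.shape)   # (number of rows, number of columns)
```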
