How to visualize decision tree in python


WillKoehrsen / visualize_decision_tree.py


BarryDeCicco commented Feb 9, 2020

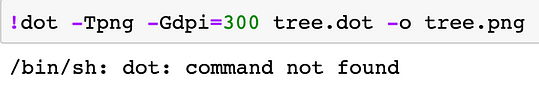

Note, this doesn’t work in my jupyter notebook running python 3.7.4

```
FileNotFoundError Traceback (most recent call last)
in
     21 # Convert to png using system command (requires Graphviz)
     22 from subprocess import call
---> 23 call(['dot', '-Tpng', 'tree.dot', '-o', 'tree.png', '-Gdpi=600'])
     24
     25 # Display in jupyter notebook

C:\ProgramData\Anaconda3\lib\subprocess.py in call(timeout, *popenargs, **kwargs)
    321     retcode = call(["ls", "-l"])
    322     """
--> 323     with Popen(*popenargs, **kwargs) as p:
    324         try:
    325             return p.wait(timeout=timeout)

C:\ProgramData\Anaconda3\lib\subprocess.py in __init__(self, args, bufsize, executable, stdin, stdout, stderr, preexec_fn, close_fds, shell, cwd, env, universal_newlines, startupinfo, creationflags, restore_signals, start_new_session, pass_fds, encoding, errors, text)
    773                                 c2pread, c2pwrite,
    774                                 errread, errwrite,
--> 775                                 restore_signals, start_new_session)
    776         except:
    777             # Cleanup if the child failed starting.

C:\ProgramData\Anaconda3\lib\subprocess.py in _execute_child(self, args, executable, preexec_fn, close_fds, pass_fds, cwd, env, startupinfo, creationflags, shell, p2cread, p2cwrite, c2pread, c2pwrite, errread, errwrite, unused_restore_signals, unused_start_new_session)
   1176                                          env,
   1177                                          os.fspath(cwd) if cwd is not None else None,
-> 1178                                          startupinfo)
   1179             finally:
   1180                 # Child is launched. Close the parent's copy of those pipe

FileNotFoundError: [WinError 2] The system cannot find the file specified
```

Visualizing Decision Trees with Python (Scikit-learn, Graphviz, Matplotlib)

Learn about how to visualize decision trees using matplotlib and Graphviz.

Decision trees are a popular supervised learning method for a variety of reasons. Benefits of decision trees include that they can be used for both regression and classification, they don’t require feature scaling, and they are relatively easy to interpret as you can visualize decision trees. This is not only a powerful way to understand your model, but also to communicate how your model works. Consequently, it would help to know how to make a visualization based on your model.

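This tutorial covers:

- How to fit a decision tree model using scikit-learn
- How to visualize decision trees using Matplotlib
- How to visualize decision trees using Graphviz
- How to visualize individual decision trees from Bagged Trees or Random Forests®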

As always, the code used in this tutorial is available on my GitHub. With that, let’s get started!

How to Fit a Decision Tree Model using Scikit-Learn

In order to visualize decision trees, we first need to fit a decision tree model using scikit-learn. If this section is not clear, I encourage you to read my Understanding Decision Trees for Classification (Python) tutorial, as I go into a lot of detail on how decision trees work and how to use them.

Import Libraries

The following import statements are what we will use for this section of the tutorial.

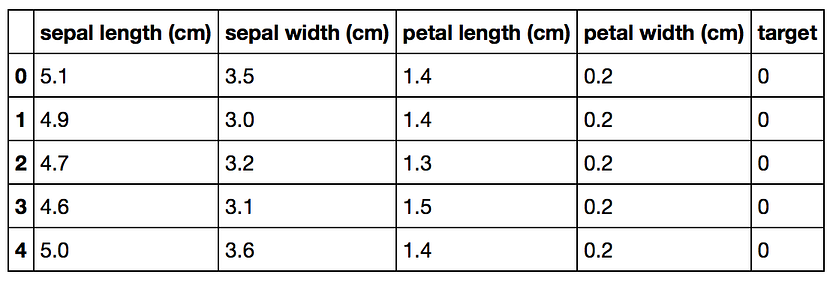

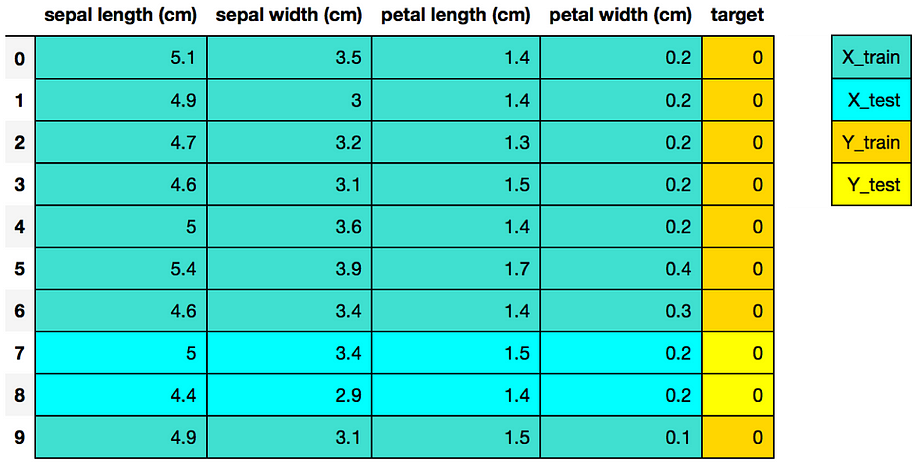

Load the Dataset

The Iris dataset is one of the datasets that ship with scikit-learn, so it does not require downloading any file from an external website. The code below loads the iris dataset.
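The original code for this step did not survive extraction, so here is a minimal sketch of one way to load the data. The `df` and `target` names are my own choices, and pandas is assumed to be installed.

```python
import pandas as pd
from sklearn.datasets import load_iris

# Load the bundled Iris data; nothing is downloaded.
data = load_iris()

# Put the four measurements in a DataFrame and append the class label.
# The column name "target" is an illustrative choice.
df = pd.DataFrame(data.data, columns=data.feature_names)
df["target"] = data.target

print(df.shape)
```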

Splitting Data into Training and Test Sets

The code below puts 75% of the data into a training set and 25% of the data into a test set.
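As a sketch of the split described above: `train_test_split` uses a 25% test set by default, and the `random_state=0` seed here is an arbitrary assumption made only so the split is repeatable.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# test_size defaults to 0.25, which gives the 75% / 25% split.
# random_state=0 is an arbitrary seed, not a recommended value.
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

print(X_train.shape, X_test.shape)
```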

Scikit-learn 4-Step Modeling Pattern
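The four steps are commonly described as import, instantiate, fit, and predict. The sketch below follows that reading; `max_depth=2` and `random_state=0` are illustrative assumptions rather than values confirmed by the text.

```python
# Step 1: import the model you want to use.
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Step 2: make an instance of the model (hyperparameters are assumptions).
clf = DecisionTreeClassifier(max_depth=2, random_state=0)

# Step 3: train the model on the training data.
clf.fit(X_train, y_train)

# Step 4: predict labels for unseen (test) data.
predictions = clf.predict(X_test)
print(predictions[:5])
```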

How to Visualize Decision Trees using Matplotlib

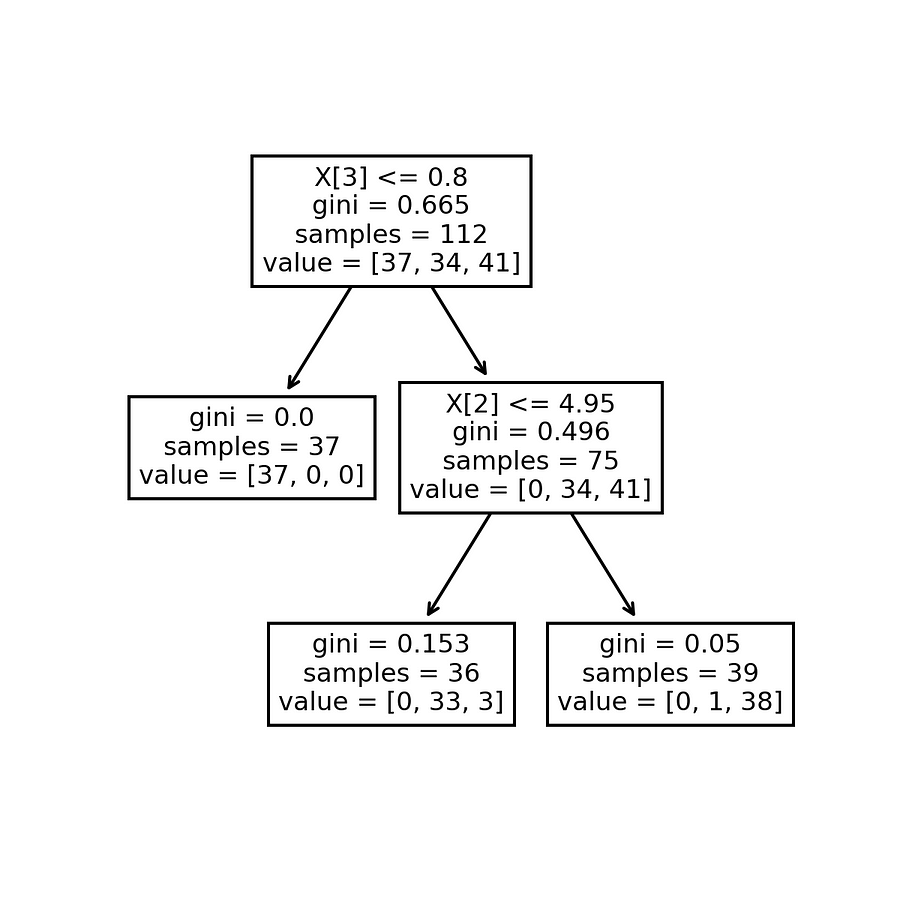

As of scikit-learn version 0.21.0 (roughly May 2019), decision trees can be plotted with matplotlib using scikit-learn’s tree.plot_tree, without relying on the dot library, a hard-to-install dependency that we will cover later in the blog post.

The code below plots a decision tree using scikit-learn.

In addition to adding the code to allow you to save your image, the code below tries to make the decision tree more interpretable by adding in feature and class names (as well as setting filled = True ).
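A minimal sketch of such a plot, assuming a small tree fit on the full iris data. The output file name, figure size, and the `Agg` backend line (only needed outside a notebook) are my own choices.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; omit this line inside Jupyter
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, plot_tree

iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

fig, ax = plt.subplots(figsize=(4, 4), dpi=300)

# feature_names and class_names label the nodes; filled=True colours
# each node by its majority class.
annotations = plot_tree(
    clf,
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    filled=True,
    ax=ax,
)

# Save the figure (file name is an illustrative choice).
fig.savefig("decision_tree_matplotlib.png")
```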

How to Visualize Decision Trees using Graphviz

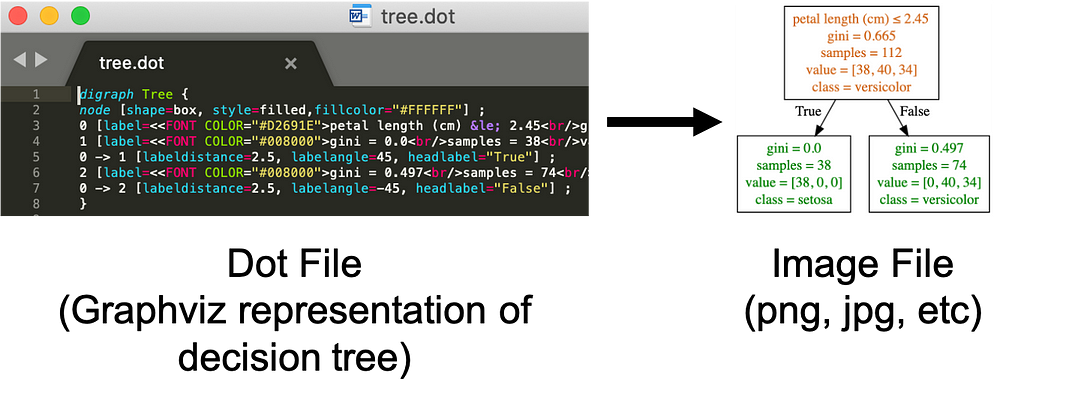

Graphviz is open source graph visualization software. Graph visualization is a way of representing structural information as diagrams of abstract graphs and networks. In data science, one use of Graphviz is to visualize decision trees. I should note that the reason I am going over Graphviz after covering Matplotlib is that getting it to work can be difficult.

The first part of this process involves creating a dot file. A dot file is a Graphviz representation of a decision tree. The problem is that using Graphviz to convert the dot file into an image file (png, jpg, etc.) can be difficult. There are a couple of ways to do this, including: installing python-graphviz through Anaconda, installing Graphviz through Homebrew (Mac), installing Graphviz executables from the official site (Windows), and using an online converter on the contents of your dot file to convert it into an image.

Export your model to a dot file
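A sketch of the export step using scikit-learn's `export_graphviz`. The `tree.dot` file name matches the one in the traceback earlier on this page; the model settings are assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_graphviz

iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

# Write a Graphviz description of the fitted tree to tree.dot.
export_graphviz(
    clf,
    out_file="tree.dot",
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    filled=True,
)

# Peek at the first line of the generated file.
with open("tree.dot") as f:
    print(f.readline().strip())
```

This step only writes text, so it works without Graphviz installed; Graphviz is needed only to turn the dot file into an image.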

Installing and Using Graphviz

How to Install and Use on Mac through Anaconda

To be able to install Graphviz on your Mac through this method, you first need to have Anaconda installed (If you don’t have Anaconda installed, you can learn how to install it here).

Open a terminal. You can do this by clicking on the Spotlight magnifying glass at the top right of the screen, typing terminal, and then clicking on the Terminal icon.

Type the command below to install Graphviz.

After that, you should be able to use the dot command below to convert the dot file into a png file.
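The conversion command has the form `dot -Tpng tree.dot -o tree.png`. If you prefer to call it from Python, the sketch below checks that `dot` is on your PATH first, which avoids the `FileNotFoundError` reported in the comment near the top of this page. `render_dot` is a hypothetical helper name, not part of Graphviz or scikit-learn.

```python
import shutil
import subprocess


def render_dot(dot_path, png_path, dot_executable="dot"):
    """Convert a dot file to PNG.

    Returns False (instead of raising FileNotFoundError) when the
    Graphviz executable cannot be found on PATH.
    """
    if shutil.which(dot_executable) is None:
        return False
    # Equivalent to running: dot -Tpng <dot_path> -o <png_path>
    subprocess.run(
        [dot_executable, "-Tpng", dot_path, "-o", png_path],
        check=True,
    )
    return True


print("dot available:", shutil.which("dot") is not None)
```

Calling `render_dot("tree.dot", "tree.png")` returns `True` once the image has been written and `False` if Graphviz still needs to be installed or added to PATH.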

How to Install and Use on Mac through Homebrew

If you don’t have Anaconda or just want another way of installing Graphviz on your Mac, you can use Homebrew. I previously wrote an article on how to install Homebrew and use it to convert a dot file into an image file here (see the Homebrew to Help Visualize Decision Trees section of the tutorial).

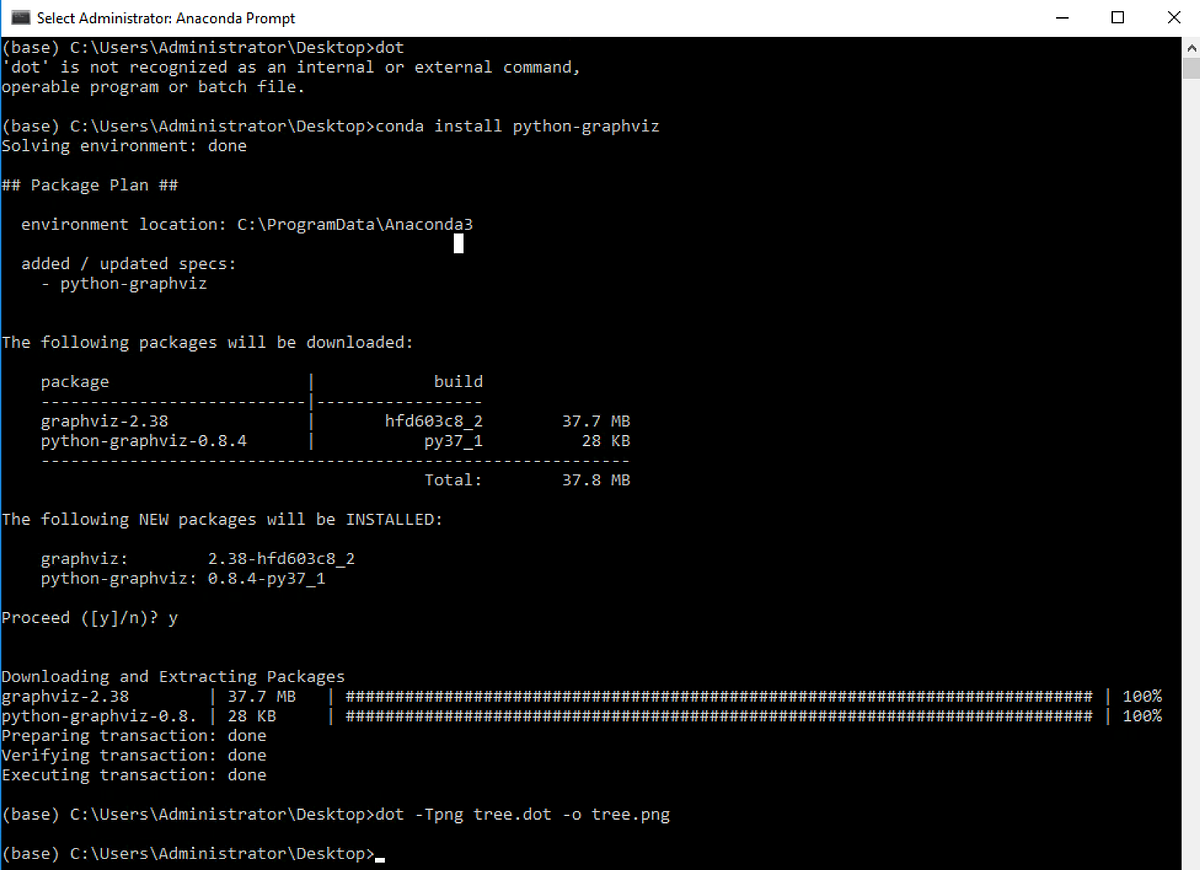

How to Install and Use on Windows through Anaconda

This is the method I prefer on Windows. To be able to install Graphviz on Windows through this method, you first need to have Anaconda installed (If you don’t have Anaconda installed, you can learn how to install it here).

Open a terminal/command prompt and enter the command below to install Graphviz.

After that, you should be able to use the dot command below to convert the dot file into a png file.

How to Install and Use on Windows through Graphviz Executable

If you don’t have Anaconda or just want another way of installing Graphviz on Windows, you can use the following link to download and install it.

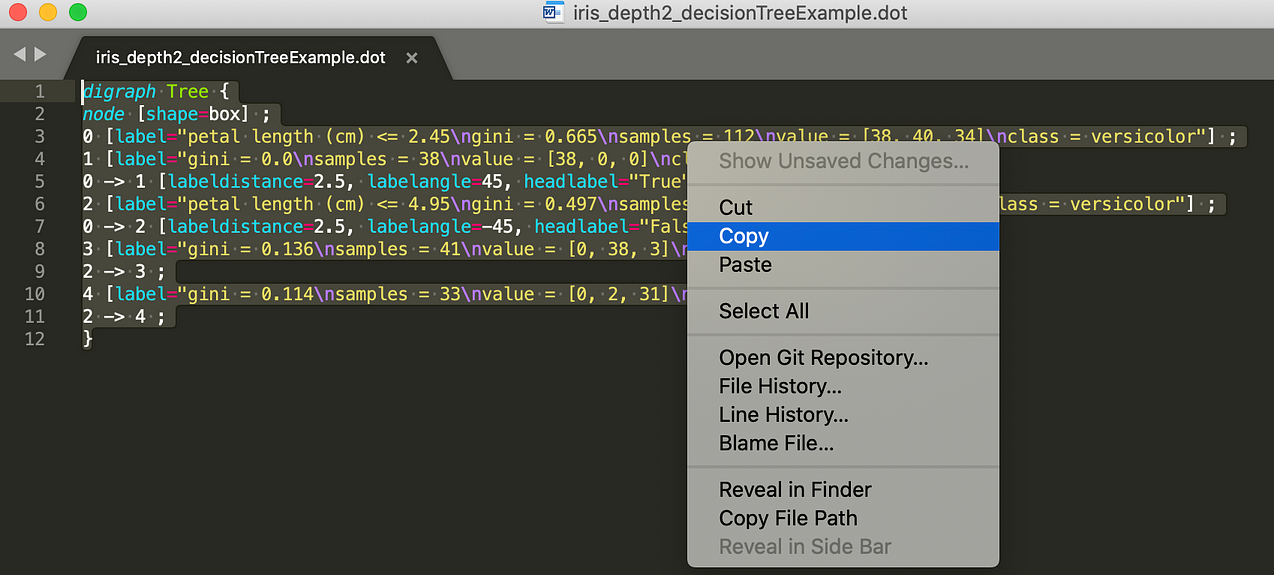

How to Use an Online Converter to Visualize your Decision Trees

If all else fails or you simply don’t want to install anything, you can use an online converter.

In the image below, I opened the file with Sublime Text (though there are many different programs that can open/read a dot file) and copied the content of the file.

In the image below, I pasted the content from the dot file onto the left side of the online converter. You can then choose what format you want and then save the image on the right side of the screen.

Keep in mind that there are other online converters that can help accomplish the same task.

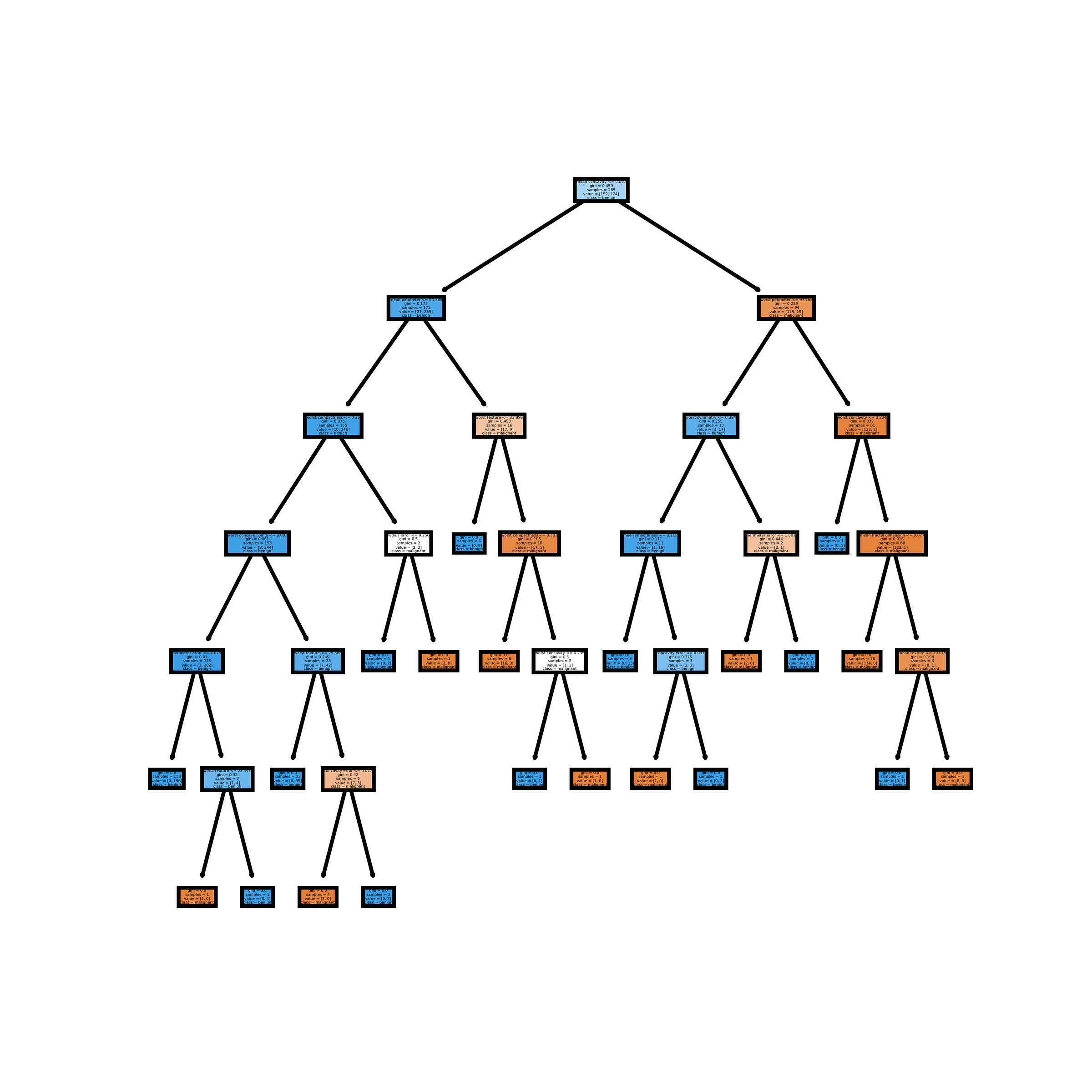

How to Visualize Individual Decision Trees from Bagged Trees or Random Forests®

A weakness of decision trees is that they don’t tend to have the best predictive accuracy. This is partially because of high variance, meaning that different splits in the training data can lead to very different trees.

The image above could be a diagram for Bagged Trees or random forest models, which are ensemble methods. An ensemble method uses multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. In this case, many trees protect each other from their individual errors. How exactly Bagged Trees and random forest models work is a subject for another blog, but what is important to note is that for both models we grow N trees, where N is the number of decision trees a user specifies. Consequently, after you fit a model, it would be nice to look at the individual decision trees that make it up.

Fit a Random Forest® Model using Scikit-Learn

In order to visualize individual decision trees, we first need to fit a Bagged Trees or Random Forest® model using scikit-learn (the code below fits the random forest algorithm model).
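A minimal sketch of the fit, using 100 estimators to match the count mentioned later in this tutorial. The seed and the train/test split are assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# n_estimators is the number of decision trees grown in the forest.
rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(X_train, y_train)

# The fitted trees are stored in the estimators_ list.
print(len(rf.estimators_))
```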

Visualizing your Estimators

You can now view all the individual trees from the fitted model. In this section, I will visualize all the decision trees using matplotlib.

You can now visualize individual trees. The code below visualizes the first decision tree.
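One way to do this, sketched under the assumption that the forest was fit on the iris data: index into `estimators_` and pass that single tree to `plot_tree`. The file name and figure settings are illustrative.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; omit this line inside Jupyter
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import plot_tree

iris = load_iris()
rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(iris.data, iris.target)

# estimators_[0] is the first fitted decision tree in the forest.
first_tree = rf.estimators_[0]

fig, ax = plt.subplots(figsize=(4, 4), dpi=300)
annotations = plot_tree(
    first_tree,
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    filled=True,
    ax=ax,
)
fig.savefig("rf_individual_tree.png")
```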

You can try to use matplotlib subplots to visualize as many of the trees as you like. The code below visualizes the first 5 decision trees. I personally don’t prefer this method as it is even harder to read.
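A sketch of the subplot approach, drawing one tree per axis. The figure size, dpi, titles, and file name are my own choices and will likely need tuning for readability.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; omit this line inside Jupyter
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import plot_tree

iris = load_iris()
rf = RandomForestClassifier(n_estimators=100, random_state=0)
rf.fit(iris.data, iris.target)

# One row of five axes, one per tree.
fig, axes = plt.subplots(nrows=1, ncols=5, figsize=(10, 2), dpi=200)

for index, ax in enumerate(axes):
    plot_tree(
        rf.estimators_[index],
        feature_names=iris.feature_names,
        class_names=list(iris.target_names),
        filled=True,
        ax=ax,
    )
    ax.set_title(f"Estimator: {index}", fontsize=11)

fig.savefig("rf_5trees.png")
```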

Create Images for each of the Decision Trees (estimators)

Keep in mind that if for some reason you want images for all your estimators (decision trees), you can do so using the code on my GitHub. If you just want to see each of the 100 estimators for the random forest algorithm model fit in this tutorial without running the code, you can look at the video below.

Concluding Remarks

This tutorial covered how to visualize decision trees using Graphviz and Matplotlib. Note that the way to visualize decision trees using Matplotlib is a newer method so it might change or be improved upon in the future. Graphviz is currently more flexible as you can always modify your dot files to make them more visually appealing like I did using the dot language or even just alter the orientation of your decision tree. One thing we didn’t cover was how to use dtreeviz which is another library that can visualize decision trees. There is an excellent post on it here.

If you have any questions or thoughts on the tutorial, feel free to reach out in the comments below or through Twitter. If you want to learn more about how to utilize Pandas, Matplotlib, or Seaborn libraries, please consider taking my Python for Data Visualization LinkedIn Learning course.

RANDOM FORESTS and RANDOMFORESTS are registered marks of Minitab, LLC.

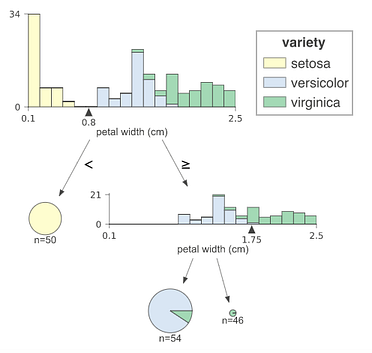

Creating and Visualizing Decision Trees with Python

Decision trees are the building blocks of some of the most powerful supervised learning methods that are used today. A decision tree is basically a binary tree flowchart where each node splits a group of observations according to some feature variable. The goal of a decision tree is to split your data into groups such that every element in one group belongs to the same category. Decision trees can also be used to approximate a continuous target variable. In that case, the tree will make splits such that each group has the lowest mean squared error.

One of the great properties of decision trees is that they are very easily interpreted. You do not need to be familiar at all with machine learning techniques to understand what a decision tree is doing. Decision tree graphs are very easily interpreted, plus they look cool! I will show you how to generate a decision tree and create a graph of it in a Jupyter Notebook (formerly known as IPython Notebooks). In this example, I will be using the classic iris dataset. Use the following code to load it.

Sklearn will generate a decision tree for your dataset using an optimized version of the CART algorithm when you run the following code.

You can also import DecisionTreeRegressor from sklearn.tree if you want to use a decision tree to predict a numerical target variable. Try switching one of the columns of df with our y variable from above and fitting a regression tree on it.
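As a sketch of that exercise, the example below predicts petal width from the other three measurements plus the species label. Which column to swap, and the `max_depth=3` setting, are assumptions made for illustration.

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeRegressor

iris = load_iris()
df = pd.DataFrame(iris.data, columns=iris.feature_names)

# Swap roles: petal width becomes the numeric target and the species
# label becomes an input feature.
target_column = "petal width (cm)"
features = df.drop(columns=[target_column]).assign(species=iris.target)

reg = DecisionTreeRegressor(max_depth=3, random_state=0)
reg.fit(features, df[target_column])

# Predicted petal widths for the first three flowers.
print(reg.predict(features.iloc[:3]))
```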

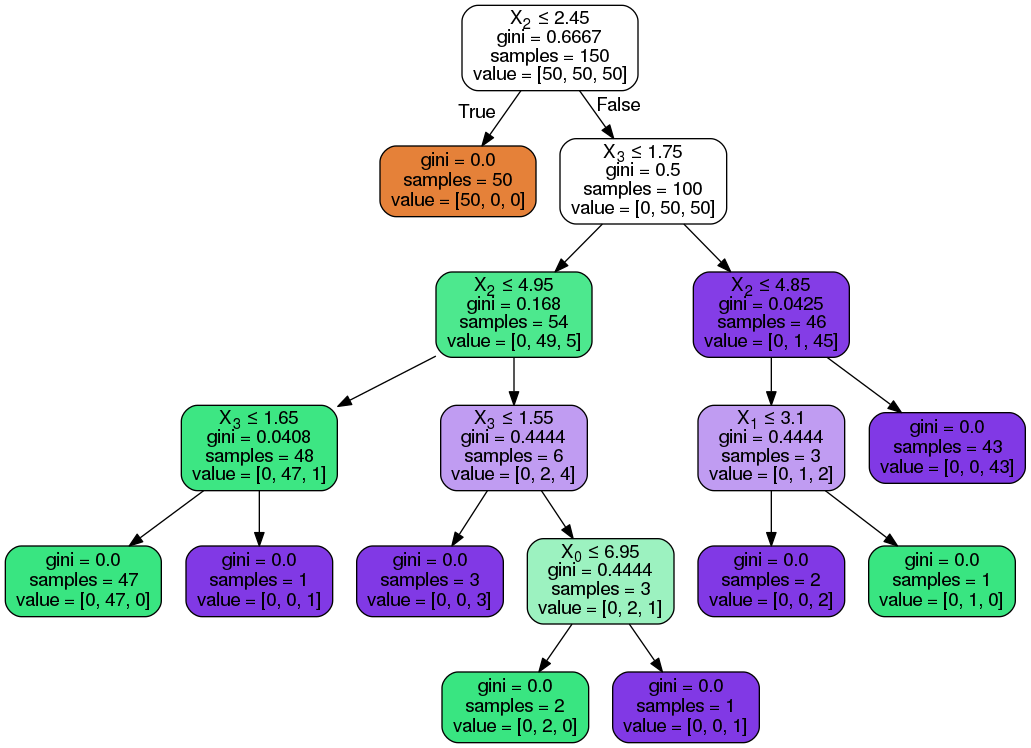

Now that we have a decision tree, we can use the pydotplus package to create a visualization for it.

The ‘value’ row in each node tells us how many of the observations that were sorted into that node fall into each of our three categories. We can see that our feature X2, which is the petal length, was able to completely distinguish one species of flower (Iris-Setosa) from the rest.

The biggest drawback to decision trees is that the splits they make at each node are optimized for the dataset they are fit to. This splitting process will rarely generalize well to other data. However, we can generate huge numbers of these decision trees, tuned in slightly different ways, and combine their predictions to create some of our best models today.

How to Visualize a Decision Tree from a Random Forest in Python using Scikit-Learn

A helpful utility for understanding your model

Here’s the complete code: just copy and paste into a Jupyter Notebook or Python script, replace with your data and run:
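The code block itself did not survive extraction. The sketch below is reconstructed from the traceback quoted near the top of this page and from the numbered explanation that follows, so treat it as an approximation. The tree index, the seed, and the PATH check around `dot` are my own additions.

```python
import shutil
import subprocess

from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import export_graphviz

iris = load_iris()

# Train a small forest and extract a single tree (index 5 is arbitrary).
model = RandomForestClassifier(n_estimators=10, random_state=0)
model.fit(iris.data, iris.target)
estimator = model.estimators_[5]

# Export the tree as a dot file.
export_graphviz(
    estimator,
    out_file="tree.dot",
    feature_names=iris.feature_names,
    class_names=list(iris.target_names),
    rounded=True,
    proportion=False,
    precision=2,
    filled=True,
)

# Convert to png using a system command (requires Graphviz).
# The PATH check is an added safeguard, not part of the original.
if shutil.which("dot"):
    subprocess.run(
        ["dot", "-Tpng", "tree.dot", "-o", "tree.png", "-Gdpi=600"],
        check=False,
    )
else:
    print("Graphviz 'dot' not found on PATH; skipping PNG conversion.")

# Display in a Jupyter notebook:
# from IPython.display import Image
# Image(filename="tree.png")
```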

The final result is a complete decision tree as an image.

Explanation of code

3. Convert dot to png using a system command: running system commands in Python can be handy for carrying out simple tasks. This requires installation of graphviz which includes the dot utility. For the complete options for conversion, take a look at the documentation.

4. Visualize: the best visualizations appear in the Jupyter Notebook. (Equivalently you can use matplotlib to show images).

Considerations

With a random forest, every tree will be built differently. I use these images to display the reasoning behind a decision tree (and subsequently a random forest) rather than for specific details.

It’s helpful to limit maximum depth in your trees when you have a lot of features. Otherwise, you end up with massive trees, which look impressive, but cannot be interpreted at all! Here’s a full example with 50 features.
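To illustrate the effect of limiting depth, the sketch below fits two small forests on a synthetic 50-feature dataset and compares the size of their first trees. The dataset from the original example is not available, so `make_classification` and all of its settings are stand-in assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for a dataset with 50 features.
X, y = make_classification(
    n_samples=500, n_features=50, n_informative=10, random_state=0
)

# Unrestricted trees grow until their leaves are pure.
deep = RandomForestClassifier(n_estimators=10, random_state=0).fit(X, y)

# max_depth=3 caps every tree at three levels of splits.
shallow = RandomForestClassifier(
    n_estimators=10, max_depth=3, random_state=0
).fit(X, y)

print("unrestricted node count:", deep.estimators_[0].tree_.node_count)
print("max_depth=3 node count:", shallow.estimators_[0].tree_.node_count)
```

A binary tree of depth 3 has at most 15 nodes, which is small enough to read in a single image.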

Conclusions

Machine learning still suffers from a black box problem, and one image is not going to solve the issue! Nonetheless, looking at an individual decision tree shows us this model (and a random forest) is not an unexplainable method, but a sequence of logical questions and answers — much as we would form when making predictions. Feel free to use and adapt this code for your data.

As always, I welcome feedback, constructive criticism, and hearing about your data science projects. I can be reached on Twitter @koehrsen_will

visualize decision tree in python with graphviz


Visualize Decision Tree

How to visualize a decision tree in Python

The decision tree classifier is one of the most widely used supervised learning algorithms. Unlike many other classification algorithms, the decision tree classifier is not a black box in the modeling phase. That means we can visualize the trained decision tree to understand how it will behave for the given input features.

So in this article, you are going to learn how to visualize the trained decision tree model in Python with Graphviz. So let’s begin with the table of contents.

Table of contents

Introduction to Decision tree classifier

The decision tree classifier is a widely used classification algorithm because of its advantages over other classification algorithms. These advantages are not about the accuracy of the trained decision tree model; they are about how easy the algorithm is to use and understand.

Decision tree advantages:

Now that we know the advantages of using the decision tree over other classification algorithms, let’s look at a basic introduction to the decision tree.

The keywords above give you a basic introduction to the decision tree classifier. If you are new to the decision tree classifier, please spend some time on the articles below before you continue reading about how to visualize the decision tree in Python.

The decision tree classifier is a classification model that creates a set of rules from the training dataset. The created rules are later used to predict the target class. To get a clear picture of the rules and of the need for visualizing them, let’s build a toy decision tree classifier and then use it to understand why visualizing a trained decision tree is useful.

Fruit classification with decision tree classifier


Fruit classification with decision tree

The decision tree classifier is trained on the apple and orange features; the trained classifier can then be used to predict the fruit label from the fruit features.

The fruit features are a dummy dataset. Below are the dataset features and the targets.

| Weight (grams) | Smooth (Range of 1 to 10) | Fruit |
| --- | --- | --- |
| 170 | 9 | 1 |
| 175 | 10 | 1 |
| 180 | 8 | 1 |
| 178 | 8 | 1 |
| 182 | 7 | 1 |
| 130 | 3 | 0 |
| 120 | 4 | 0 |
| 130 | 2 | 0 |
| 138 | 5 | 0 |
| 145 | 6 | 0 |

The dummy dataset has two features and one target.

Let’s follow the below workflow for modeling the fruit classifier.
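The workflow steps themselves are missing from this copy, so the sketch below assumes the usual sequence: load the data from the table above, fit a classifier, then predict. The variable names and the sample fruit at the end are hypothetical.

```python
from sklearn.tree import DecisionTreeClassifier

# Rows copied from the table above: [weight in grams, smoothness 1-10].
fruit_features = [
    [170, 9], [175, 10], [180, 8], [178, 8], [182, 7],
    [130, 3], [120, 4], [130, 2], [138, 5], [145, 6],
]
# Fruit column from the table (1 or 0).
fruit_labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

fruit_clf = DecisionTreeClassifier(random_state=0)
fruit_clf.fit(fruit_features, fruit_labels)

# Predict the label for a hypothetical new fruit.
print(fruit_clf.predict([[160, 8]]))
```

Because either feature alone separates the two classes in this table, the fitted tree needs only a single split.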

Sources:

- http://www.kdnuggets.com/2020/04/visualizing-decision-trees-python.html

- http://medium.com/@rnbrown/creating-and-visualizing-decision-trees-with-python-f8e8fa394176

- http://towardsdatascience.com/how-to-visualize-a-decision-tree-from-a-random-forest-in-python-using-scikit-learn-38ad2d75f21c

- http://dataaspirant.com/visualize-decision-tree-python-graphviz/