Over the past decades scientists have made major discoveries in genetic

The Top Ten Scientific Discoveries of the Decade

Breakthroughs include measuring the true nature of the universe, finding new species of human ancestors, and unlocking new ways to fight disease

Former Science Editor

December 31, 2019

Millions of new scientific research papers are published every year, shedding light on everything from the evolution of stars to the ongoing impacts of climate change to the health benefits (or detriments) of coffee to the tendency of your cat to ignore you. With so much research coming out every year, it can be difficult to know what is significant, what is interesting but largely insignificant, and what is just plain bad science. But over the course of a decade, we can look back at some of the most important and awe-inspiring areas of research, often expressed in multiple findings and research papers that lead to a true proliferation of knowledge. Here are ten of the biggest strides made by scientists in the last ten years.

New Human Relatives

The human family tree expanded significantly in the past decade, with fossils of new hominin species discovered in Africa and the Philippines. The decade began with the discovery and identification of Australopithecus sediba, a hominin species that lived nearly two million years ago in present-day South Africa. Matthew Berger, the son of paleoanthropologist Lee Berger, stumbled upon the first fossil of the species, a right clavicle, in 2008, when he was only 9 years old. A team then unearthed more fossils from the individual, a young boy, including a well-preserved skull, and A. sediba was described by Lee Berger and colleagues in 2010. The species represents a transitional phase between the genus Australopithecus and the genus Homo, with some traits of the older primate group but a style of walking that resembled that of modern humans.

Also discovered in South Africa by a team led by Berger, Homo naledi lived much more recently, some 335,000 to 236,000 years ago, meaning it may have overlapped with our own species, Homo sapiens. The species, first discovered in the Rising Star Cave system in 2013 and described in 2015, also had a mix of primitive and modern features, such as a small brain case (about one-third the size of ours) and a large body for the time, weighing approximately 100 pounds and standing up to five feet tall. The smaller Homo luzonensis (three to four feet tall) lived in the Philippines some 67,000 to 50,000 years ago, overlapping with several other hominin species. The first H. luzonensis fossils were originally identified as Homo sapiens, but a 2019 analysis determined that the bones belonged to an entirely unknown species.

These three major finds in the last ten years suggest that the bones of more species of ancient human relatives are likely hidden in the caves and sediment deposits of the world, waiting to be discovered.

Taking Measure of the Cosmos

When Albert Einstein first published the general theory of relativity in 1915, he likely couldn’t have imagined that 100 years later, astronomers would test the theory’s predictions with some of the most sophisticated instruments ever built—and the theory would pass each test. General relativity describes the universe as a “fabric” of space-time that is warped by large masses. It’s this warping that causes gravity, rather than an internal property of mass as Isaac Newton thought.

One prediction of this model is that accelerating masses can create “ripples” in space-time that propagate outward as gravitational waves. With a large enough mass, such as a black hole or a neutron star, these ripples may even be detected by astronomers on Earth. In September 2015, the LIGO and Virgo collaboration detected gravitational waves for the first time, propagating from a pair of merging black holes some 1.3 billion light-years away. Since then, the two instruments have detected several additional gravitational waves, including one from a pair of merging neutron stars.

Another prediction of general relativity, one that Einstein himself famously doubted, is that black holes exist at all: points of gravitational collapse in space with infinite density and infinitesimal volume. These objects consume all matter and light that strays too close, creating a disk of superheated material falling into the black hole. In 2017, the Event Horizon Telescope collaboration, a network of linked radio telescopes around the world, took observations that would later result in the first image of the environment around a black hole, released in April 2019.

The Hottest Years on Record

Scientists have been predicting the effects of burning coal and other fossil fuels on the temperature of the planet for over 100 years. A 1912 issue of Popular Mechanics contains an article titled “Remarkable Weather of 1911: The Effect of the Combustion of Coal on the Climate—What Scientists Predict for the Future,” which has a caption that reads: “The furnaces of the world are now burning about 2,000,000,000 tons of coal a year. When this is burned, uniting with oxygen, it adds about 7,000,000,000 tons of carbon dioxide to the atmosphere yearly. This tends to make the air a more effective blanket for the earth and to raise its temperature. The effect may be considerable in a few centuries.”

Just one century later, the effect is considerable indeed. Increased greenhouse gases in the atmosphere have produced hotter global temperatures, with the last five years (2014 to 2018) being the hottest years on record. 2016 was the hottest year since the National Oceanic and Atmospheric Administration (NOAA) started recording global temperature 139 years ago. The effects of this global change include more frequent and destructive wildfires, more common droughts, accelerating polar ice melt and increased storm surges. California is burning, Venice is flooding, urban heat deaths are on the rise, and countless coastal and island communities face an existential crisis, not to mention the ecological havoc wreaked by climate change, which stifles the planet’s ability to pull carbon back out of the atmosphere.

In 2015, the United Nations Framework Convention on Climate Change (UNFCCC) reached a consensus on climate action, known as the Paris Agreement. The central goal of the Paris Agreement is to hold the rise in global average temperature to well below 2 degrees Celsius above pre-industrial levels while pursuing efforts to limit it to 1.5 degrees Celsius. To achieve this goal, major societal transformations will be required, including replacing fossil fuels with clean energy such as wind, solar and nuclear; reforming agricultural practices to limit emissions and protect forested areas; and perhaps even building artificial means of pulling carbon dioxide out of the atmosphere.

Editing Genes

Ever since the double-helix structure of DNA was revealed in the early 1950s, scientists have hypothesized about the possibility of artificially modifying DNA to change the functions of an organism. The first approved gene therapy trial occurred in 1990, when a four-year-old girl had her own white blood cells removed, augmented with the genes that produce an enzyme called adenosine deaminase (ADA), and then reinjected into her body to treat ADA deficiency, a genetic condition that hampers the immune system’s ability to fight disease. The patient’s body began producing the ADA enzyme, but new white blood cells with the corrected gene were not produced, and she had to continue receiving injections.

Now, genetic engineering is more precise and available than ever before, thanks in large part to a new tool first used to modify eukaryotic cells (complex cells with a nucleus) in 2013: CRISPR-Cas9. The gene editing tool works by locating a targeted section of DNA and “cutting” out that section with the Cas9 enzyme. An optional third step involves replacing the deleted section of DNA with new genetic material. The technique can be used for a wide range of applications, from increasing the muscle mass of livestock, to producing resistant and fruitful crops, to treating diseases like cancer by removing a patient’s immune system cells, modifying them to better fight a disease, and reinjecting them into the patient’s body.
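To make the “locating” step concrete, here is a minimal Python sketch. It assumes the widely used Cas9 from Streptococcus pyogenes, which cuts only where a 20-letter guide sequence matches the DNA and is immediately followed by a short “NGG” motif (the PAM), and which cuts about three base pairs upstream of that motif. The function name find_cut_sites is hypothetical, and the sketch scans only one DNA strand and requires an exact match, whereas real Cas9 sometimes tolerates mismatches (one source of off-target edits).

```python
def find_cut_sites(dna: str, guide: str) -> list[int]:
    """Return positions where a simplified SpCas9 model would cut `dna`.

    A value c means the cut falls between dna[c - 1] and dna[c].
    Only the given strand is scanned.
    """
    dna = dna.upper()
    # The guide is RNA (U); the matching DNA sequence has T in place of U.
    spacer = guide.upper().replace("U", "T")
    k = len(spacer)
    cuts = []
    # Each candidate window is k bases of target followed by a 3-base PAM.
    for i in range(len(dna) - k - 2):
        protospacer = dna[i:i + k]
        pam = dna[i + k:i + k + 3]
        # Exact match required in this sketch; the PAM must read N-G-G.
        if protospacer == spacer and pam[1:] == "GG":
            # Cas9 cuts about 3 base pairs upstream of the PAM.
            cuts.append(i + k - 3)
    return cuts


target = "AAAA" + "GACGTTACCGGATCAAGCTT" + "TGG" + "CCCC"
print(find_cut_sites(target, "GACGUUACCGGAUCAAGCUU"))  # [21]
```

Changing the PAM in the example from “TGG” to “TAA” makes the same guide find nothing, which is why the PAM requirement limits where Cas9 can be aimed.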

In late 2018, Chinese researchers led by He Jiankui announced that they had used CRISPR-Cas9 to genetically modify human embryos, which were then transferred to a woman’s uterus and resulted in the birth of twin girls—the first gene-edited babies. The twins’ genomes were modified to make the girls more resistant to HIV, although the genetic alterations may have also resulted in unintended changes. The work was widely condemned by the scientific community as unethical and dangerous, revealing a need for stricter regulations for how these powerful new tools are used, particularly when it comes to changing the DNA of embryos and using those embryos to birth live children.

Mysteries of Other Worlds Revealed

Spacecraft and telescopes have revealed a wealth of information about worlds beyond our own in the last decade. In 2015, the New Horizons probe made a close pass of Pluto, taking the first nearby observations of the dwarf planet and its moons. The spacecraft revealed a surprisingly dynamic and active world, with icy mountains reaching up to nearly 20,000 feet and shifting plains that are no more than 10 million years old—meaning the geology is constantly changing. The fact that Pluto—which is an average of 3.7 billion miles from the sun, about 40 times the distance of Earth—is so geologically active suggests that even cold, distant worlds could get enough energy to heat their interiors, possibly harboring subsurface liquid water or even life.

A bit closer to home, the Cassini spacecraft orbited Saturn for 13 years, ending its mission in September 2017 when NASA intentionally plunged the spacecraft into the atmosphere of Saturn so it would burn up rather than continue orbiting the planet once it had exhausted its fuel. During its mission, Cassini discovered the processes that feed Saturn’s rings, observed a global storm encircle the gas giant, mapped the large moon Titan and found some of the ingredients for life in the plumes of icy material erupting from the watery moon Enceladus. In 2016, a year before the end of the Cassini mission, the Juno spacecraft arrived at Jupiter, where it has been measuring the magnetic field and atmospheric dynamics of the largest planet in the solar system to help scientists understand how Jupiter—and everything else around the sun—originally formed.

In 2012, the Curiosity rover landed on Mars, where it has made several significant discoveries, including new evidence of past water on the red planet, the presence of organic molecules that could be related to life, and mysterious seasonal cycles of methane and oxygen that hint at a dynamic world beneath the surface. In 2018, the European Space Agency announced that ground-penetrating radar data from the Mars Express spacecraft provided strong evidence that a reservoir of liquid water exists underground near the Martian south pole.

Meanwhile, two space telescopes, Kepler and TESS, have discovered thousands of planets orbiting other stars. Kepler launched in 2009 and ended its mission in 2018, revealing mysterious and distant planets by measuring the decrease in light when they pass in front of their stars. These planets include hot Jupiters, which orbit close to their stars in just days or hours; mini Neptunes, which are between the size of Earth and Neptune and may be gas, liquid, solid or some combination; and super Earths, which are large rocky planets that astronomers hope to study for signs of life. TESS, which launched in 2018, continues the search as Kepler’s successor. The space telescope has already discovered hundreds of worlds, and it could find 10,000 or even 20,000 before the end of the mission.
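The size of that dip in light is what lets astronomers estimate a planet’s size. As a rough sketch, assuming a uniformly bright star and a planet whose whole disk passes in front of it, the fraction of light blocked is the ratio of the two disks’ areas, (planet radius / star radius)². The radii below are standard values; the function name is illustrative.

```python
SUN_RADIUS_KM = 695_700
EARTH_RADIUS_KM = 6_371
JUPITER_RADIUS_KM = 69_911


def transit_depth(planet_radius_km: float, star_radius_km: float = SUN_RADIUS_KM) -> float:
    """Fraction of starlight blocked while the planet's disk is fully in front of the star."""
    # Blocked light is the ratio of disk areas: (pi * r**2) / (pi * R**2).
    # Ignores limb darkening and partial (grazing) transits.
    return (planet_radius_km / star_radius_km) ** 2


for name, radius in [("Earth", EARTH_RADIUS_KM), ("Jupiter", JUPITER_RADIUS_KM)]:
    depth = transit_depth(radius)
    print(f"{name}-size planet, Sun-like star: {depth * 1e6:.0f} ppm dip")
```

An Earth-size planet crossing a Sun-like star dims it by only about 84 parts per million, while a Jupiter-size planet blocks roughly 1 percent, which helps explain why giant planets were easier to spot than Earth-size ones.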

Fossilized Pigments Reveal the Colors of Dinosaurs

The decade began with a revolution in paleontology as scientists got their first look at the true colors of dinosaurs. First, in January 2010, an analysis of melanosomes—organelles that contain pigments—in the fossilized feathers of Sinosauropteryx, a dinosaur that lived in China some 120 to 125 million years ago, revealed that the prehistoric creature had “reddish-brown tones” and stripes along its tail. Shortly after, a full-body reconstruction revealed the colors of a small feathered dinosaur that lived some 160 million years ago, Anchiornis, which had black and white feathers on its body and a striking plume of red feathers on its head.

The study of fossilized pigments has continued to expose new information about prehistoric life, hinting at potential animal survival strategies by showing evidence of countershading and camouflage. In 2017, a remarkably well-preserved armored dinosaur which lived about 110 million years ago, Borealopelta, was found to have reddish-brown tones to help blend into the environment. This new ability to identify and study the colors of dinosaurs will continue to play an important role in paleontological research as scientists study the evolution of past life.

Redefining the Fundamental Unit of Mass

In November 2018, measurement scientists around the world voted to officially change the definition of a kilogram, the fundamental unit of mass. Rather than basing the kilogram on an object (a platinum-iridium alloy cylinder about the size of a golf ball), the new definition uses a constant of nature to set the unit of mass. The change replaced the last physical artifact used to define a unit of measure. (The meter bar was replaced in 1960 by a specific number of wavelengths of radiation from krypton, for example, and the meter was later redefined as the distance light travels in a tiny fraction of a second.)

By using a sophisticated weighing machine known as a Kibble balance, scientists were able to precisely measure a kilogram according to the electromagnetic force required to hold it up. This electrical measurement could then be expressed in terms of Planck’s constant, a number Max Planck introduced in 1900 to describe the discrete bundles, or quanta, of energy radiated by hot objects.
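The balance works in two modes. In weighing mode, a current I through a coil in a magnetic field produces an upward force that balances the weight m·g. In moving mode, the same coil is moved at a steady speed v and the induced voltage U is measured. Both readings depend on the same hard-to-measure magnet-and-coil factor, which cancels when they are combined, leaving m = U·I / (g·v). The sketch below assumes an idealized balance and uses made-up readings; in a real instrument, U and I are measured against quantum electrical standards (the Josephson and quantum Hall effects) whose values depend on Planck’s constant, now fixed at exactly 6.62607015 × 10⁻³⁴ joule-seconds. That dependence is what ties the measured mass to Planck’s constant.

```python
def kibble_mass(voltage_v: float, current_a: float, velocity_m_s: float, gravity_m_s2: float) -> float:
    """Mass in kilograms from the two modes of an idealized Kibble balance.

    Weighing mode: m * g = B * L * I  (coil current holds the mass up)
    Moving mode:   U = B * L * v      (moving the coil induces a voltage)
    Dividing one by the other cancels B * L, giving m = U * I / (g * v).
    """
    return voltage_v * current_a / (gravity_m_s2 * velocity_m_s)


# Hypothetical readings chosen for illustration, not real instrument data.
mass = kibble_mass(voltage_v=1.96133, current_a=0.01, velocity_m_s=0.002, gravity_m_s2=9.80665)
print(f"{mass:.6f} kg")
```

With these illustrative numbers the electrical power U·I exactly matches the mechanical power m·g·v for a 1-kilogram mass, so the result comes out at 1 kg.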

The kilogram was not the only unit of measure that was recently redefined. The changes to the International System of Units, which officially went into effect in May 2019, also changed the definition for the ampere, the standard unit of electric current; the kelvin unit of temperature; and the mole, a unit of amount of substance used in chemistry. The changes to the kilogram and other units will allow more precise measurements for small amounts of material, such as pharmaceuticals, as well as give scientists around the world access to the fundamental units, rather than defining them according to objects that must be replicated and calibrated by a small number of labs.

First Ancient Human Genome Sequenced

In 2010, scientists gained a new tool to study the ancient past and the people who inhabited it. Researchers used a hair preserved in permafrost to sequence the genome of a man who lived some 4,000 years ago in what is now Greenland, revealing the physical traits and even the blood type of a member of one of the first cultures to settle in that part of the world. The first nearly complete reconstruction of a genome from ancient DNA opened the door for anthropologists and geneticists to learn more about the cultures of the distant past than ever before.

Extracting ancient DNA is a major challenge. Even if genetic material such as hair or skin is preserved, it is often contaminated with the DNA of microbes from the environment, so sophisticated sequencing techniques must be used to isolate the ancient human’s DNA. More recently, scientists have used the petrous bone of the skull, a highly dense bone near the ear, to extract ancient DNA.

Thousands of ancient human genomes have been sequenced since the first success in 2010, revealing new details about the rise and fall of lost civilizations and the migrations of people around the globe. Studying ancient genomes has identified multiple waves of migration back and forth across the frozen Bering land bridge between Siberia and Alaska between 5,000 and 15,000 years ago. Recently, the genome of a young girl who lived in what is now Denmark was sequenced from a 5,700-year-old piece of birch tar used as chewing gum, which also contained her mouth microbes and bits of food from one of her last meals.

A Vaccine and New Treatments to Fight Ebola

This decade included the worst outbreak of Ebola virus disease in history. The epidemic is believed to have begun with a single case, an 18-month-old boy in Guinea infected by bats, in December 2013. The disease quickly spread to neighboring countries, reaching the capitals of Liberia and Sierra Leone by July 2014, providing an unprecedented opportunity for the transmission of the disease to a large number of people. Ebola virus compromises the immune system and can cause massive hemorrhaging and multiple organ failure. Two and a half years after the initial case, more than 28,600 people had been infected, resulting in at least 11,325 deaths, according to the CDC.

The epidemic prompted health officials to redouble their efforts to find an effective vaccine against Ebola. A vaccine known as Ervebo, made by the pharmaceutical company Merck, was tested in a clinical trial in Guinea toward the end of the outbreak, and results published in 2016 showed the vaccine to be effective. Another Ebola outbreak was declared in the Democratic Republic of the Congo in August 2018, and the ongoing epidemic has spread to become the deadliest since the West Africa outbreak, with 3,366 reported cases and 2,227 deaths as of December 2019. Ervebo has been used in the DRC to fight the outbreak on an expanded access or “compassionate use” basis. In November 2019, Ervebo was approved by the European Medicines Agency (EMA), and a month later it was approved in the U.S. by the FDA.

In addition to a preventative vaccine, researchers have been seeking a cure for Ebola in patients who have already been infected by the disease. Two treatments, which involve a one-time delivery of antibodies to prevent Ebola from infecting a patient’s cells, have recently shown promise in a clinical trial in the DRC. With a combination of vaccines and therapeutic treatments, healthcare officials hope to one day eradicate the viral infection for good.

CERN Detects the Higgs Boson

Over the past several decades, physicists have worked tirelessly to model the workings of the universe, developing what is known as the Standard Model. This model describes the known elementary particles and three of the four basic interactions of matter, known as the fundamental forces. Two of the four forces are familiar in everyday life: the gravitational force and the electromagnetic force, although gravity is the one force the Standard Model does not include. The other two, the strong nuclear force and the weak nuclear force, act only over subatomic distances, such as inside the nuclei of atoms.

Part of the Standard Model says that there is a universal quantum field that interacts with particles, giving them their masses. In the 1960s, theoretical physicists including François Englert and Peter Higgs described this field and its role in the Standard Model. It became known as the Higgs field, and according to the laws of quantum mechanics, all such fundamental fields should have an associated particle, which came to be known as the Higgs boson.

Decades later, in 2012, two teams using the Large Hadron Collider at CERN to conduct particle collisions reported the detection of a particle with the properties predicted for the Higgs boson, providing substantial evidence for the existence of the Higgs field and Higgs boson. In 2013, the Nobel Prize in Physics was awarded to Englert and Higgs “for the theoretical discovery of a mechanism that contributes to our understanding of the origin of mass of subatomic particles, and which recently was confirmed through the discovery of the predicted fundamental particle.” As physicists continue to refine the Standard Model, the Higgs boson will remain central to explaining how fundamental particles acquire their mass, and therefore how matter as we know it can exist at all.

A simple guide to CRISPR, one of the biggest science stories of the decade

It could revolutionize everything from medicine to agriculture. Better read up now.

One of the biggest and most important science stories of the past few years will probably also be one of the biggest science stories of the next few years. So this is as good a time as any to get acquainted with the powerful new gene editing technology known as CRISPR.

If you haven’t heard of CRISPR yet, the short explanation goes like this: In the past nine years, scientists have figured out how to exploit a quirk in the immune systems of bacteria to edit genes in other organisms — plants, mice, even humans. With CRISPR, they can now make these edits quickly and cheaply, in days rather than weeks or months. (The technology is often known as CRISPR/Cas9, but we’ll stick with CRISPR, pronounced “crisper.”)

We’re talking about a powerful new tool to control which genes get expressed in plants, animals, and even humans; the ability to delete undesirable traits and, potentially, add desirable traits with more precision than ever before.

So far scientists have used it to reduce the severity of genetic deafness in mice, suggesting it could one day be used to treat the same type of hearing loss in people. They’ve created mushrooms that don’t brown easily and edited bone marrow cells in mice to treat sickle-cell anemia. Down the road, CRISPR might help us develop drought-tolerant crops and create powerful new antibiotics. CRISPR could one day even allow us to wipe out entire populations of malaria-spreading mosquitoes or resurrect once-extinct species like the passenger pigeon.

A big concern is that while CRISPR is relatively simple and powerful, it isn’t perfect. Scientists have recently learned that the approach to gene editing can inadvertently wipe out and rearrange large swaths of DNA in ways that may imperil human health. That follows recent studies showing that CRISPR-edited cells can inadvertently trigger cancer. That’s why many scientists argue that experiments in humans are premature: The risks and uncertainties around CRISPR modification are extremely high.

On this front, 2018 brought some shocking news: In November, a scientist in China, He Jiankui, reported that he had created the world’s first human babies with CRISPR-edited genes, a pair of twin girls whose genes he said had been edited to make them resistant to HIV.

The announcement stunned scientists around the world. The director of the National Institutes of Health, Francis Collins, said the experiment was “profoundly disturbing and tramples on ethical norms.”

It also created more questions than it answered: Did He Jiankui actually pull it off? Does he deserve praise or condemnation? Do we need to pump the brakes on CRISPR research?

While independent researchers have not yet confirmed that He Jiankui was successful, other CRISPR applications are close to fruition, from new disease therapies to novel tactics for fighting malaria. So here’s a basic guide to what CRISPR is and what it can do.

What the heck is CRISPR, anyway?

If we want to understand CRISPR, we should go back to 1987, when Japanese scientists studying E. coli bacteria first came across some unusual repeating sequences in the organism’s DNA. “The biological significance of these sequences,” they wrote, “is unknown.” Over time, other researchers found similar clusters in the DNA of other bacteria (and archaea). They gave these sequences a name: Clustered Regularly Interspaced Short Palindromic Repeats — or CRISPR.

Yet the function of these CRISPR sequences was mostly a mystery until 2007, when food scientists studying the Streptococcus bacteria used to make yogurt showed that these odd clusters actually served a vital function: They’re part of the bacteria’s immune system.

See, bacteria are under constant assault from viruses, so they produce enzymes to fight off viral infections. Whenever a bacterium’s enzymes manage to kill off an invading virus, other little enzymes will come along, scoop up the remains of the virus’s genetic code and cut it into tiny bits. The enzymes then store those fragments as “spacers” between the repeats of the CRISPR sequences in the bacterium’s own genome.

Now comes the clever part: These CRISPR spacers act as a rogue’s gallery for viruses, and bacteria use the genetic information stored in them to fend off future attacks. When a new viral infection occurs, the bacteria produce special attack enzymes, known as Cas9, that carry around those stored bits of viral genetic code, copied into RNA, like a mug shot. When these Cas9 enzymes come across a virus, they check whether the virus’s DNA matches what’s in the mug shot. If there’s a match, the Cas9 enzyme starts chopping up the virus’s DNA to neutralize the threat. It looks a little like this:

CRISPR/Cas9 gene editing complex from Streptococcus pyogenes. The Cas9 nuclease protein uses a guide RNA sequence to cut DNA at a complementary site. Cas9 protein red, DNA yellow, RNA blue.

So that’s what CRISPR/Cas9 does. For a while, these discoveries weren’t of much interest to anyone except microbiologists — until a series of further breakthroughs occurred.

How did CRISPR revolutionize gene editing?

In 2011, Jennifer Doudna of the University of California, Berkeley and Emmanuelle Charpentier of Umeå University in Sweden were puzzling over how the CRISPR/Cas9 system actually worked. How did the Cas9 enzyme match the RNA in the mug shots with that in the viruses? How did the enzymes know when to start chopping?

The scientists soon discovered they could “fool” the Cas9 protein by feeding it artificial RNA (a fake mug shot). When they did that, the enzyme would search for anything with that same code, not just viruses, and start chopping. In a landmark 2012 paper, Doudna, Charpentier, and Martin Jinek showed they could use this CRISPR/Cas9 system to cut DNA at almost any place they wanted.

While the technique had only been demonstrated on molecules in test tubes at that point, the implications were breathtaking.

Further advances followed. Feng Zhang, a scientist at the Broad Institute in Cambridge, Massachusetts, co-authored a paper in Science in February 2013 showing that CRISPR/Cas9 could be used to edit the genomes of cultured mouse cells or human cells. In the same issue of Science, Harvard’s George Church and his team showed how a different CRISPR technique could be used to edit human cells.

Since then, researchers have found that CRISPR/Cas9 is amazingly versatile. Not only can scientists use CRISPR to “silence” genes by snipping them out, they can also harness repair enzymes to substitute desired genes into the “hole” left by the snippers (though this latter technique is trickier to pull off). So, for instance, scientists could tell the Cas9 enzyme to snip out a gene that causes Huntington’s disease and insert a “good” gene to replace it.

It’s now one of the hottest fields around. In 2011, there were fewer than 100 published papers on CRISPR. In 2018, there were more than 17,000 and counting, with refinements to CRISPR, new techniques for manipulating genes, improvements in precision, and more. “This has become such a fast-moving field that I even have trouble keeping up now,” says Doudna. “We’re getting to the point where the efficiencies of gene editing are at levels that are clearly going to be useful therapeutically as well as a vast number of other applications.”

There’s also been an intense legal battle over who exactly should get credit for this CRISPR technology and who owns the potentially lucrative rights. Was Doudna’s 2012 paper at the University of California, Berkeley the breakthrough, or was Zhang’s 2013 research at the Broad Institute the key advance? In September 2018, a federal appeals court rejected the University of California’s arguments that it had exclusive rights to CRISPR patents, upholding the Broad Institute’s patents on some CRISPR applications.

But the important thing is that CRISPR has arrived.

So what can we use CRISPR for?

Paul Knoepfler, an associate professor at UC Davis School of Medicine, told Vox that CRISPR makes him feel like a “kid in a candy store.”

At the most basic level, CRISPR can make it much easier for researchers to figure out what different genes in different organisms actually do — by, for instance, knocking out individual genes and seeing which traits are affected. This is important: While we’ve had a complete “map” of the human genome since 2003, we don’t really know what function all those genes serve. CRISPR can help speed up genome screening, and genetics research could advance massively as a result.

Researchers have also discovered there are numerous CRISPRs. So CRISPR is actually a pretty broad term. “It’s like the term ‘fruit’ — it describes a whole category,” said the Broad’s Zhang. When people talk about CRISPR, they are usually referring to the CRISPR/Cas9 system we’ve been talking about here. But in recent years, researchers like Zhang have found other types of CRISPR proteins that also work as gene editors. Cas13, for example, can edit DNA’s sister, RNA. “Cas9 and Cas13 are like apples and bananas,” Zhang added.

The real fun — and, potentially, the real risks — could come from using CRISPRs to edit various plants and animals. A 2016 paper in Nature Biotechnology by Rodolphe Barrangou and Doudna listed a flurry of potential future applications:

1) Edit crops to be more nutritious: Crop scientists are already looking to use CRISPR to edit the genes of various crops to make them tastier or more nutritious or better survivors of heat and stress. They could potentially use CRISPR to snip out the allergens in peanuts. Korean researchers are looking to see if CRISPR could help bananas survive a deadly fungal disease. Some scientists have shown that gene editing can create hornless dairy cows, a huge advance for animal welfare.

Recently, major companies like Monsanto and DuPont have begun licensing CRISPR technology, hoping to develop valuable new crop varieties. While this technique won’t entirely replace traditional GMO techniques, which can transplant genes from one organism to another, CRISPR is a versatile new tool that can help identify genes associated with desired crop traits much more quickly. It could also allow scientists to insert desired traits into crops more precisely than traditional breeding, which is a much messier way of swapping in genes.

“With genome editing, we can absolutely do things we couldn’t do before,” says Pamela Ronald, a plant geneticist at the University of California, Davis. That said, she cautions that it’s only one of many tools for crop modification out there, and successfully breeding new varieties could still take years of testing.

It’s also possible that these new tools could attract controversy. Foods that have had a few genes knocked out via CRISPR are currently regulated more lightly than traditional GMOs. Policymakers in Washington, DC, are currently debating whether it might make sense to rethink regulations here. This piece for Ensia by Maywa Montenegro delves into some of the debates CRISPR raises in agriculture.

2) New tools to stop genetic diseases: Scientists are now using CRISPR/Cas9 to edit the human genome and try to knock out genetic diseases like hypertrophic cardiomyopathy. They’re also looking at using it on mutations that cause Huntington’s disease or cystic fibrosis, and are talking about trying it on the BRCA1 and BRCA2 mutations linked to breast and ovarian cancers. Scientists have even shown that CRISPR can knock HIV infections out of T cells.

So far, however, scientists have only tested this on cells in the lab. There are still a few hurdles to overcome before anyone starts clinical trials on actual humans. For example, the Cas9 enzymes can occasionally “misfire” and edit DNA in unexpected places, which in human cells might lead to cancer or even create new diseases. As geneticist Allan Bradley, of England’s Wellcome Sanger Institute, told STAT, CRISPR’s ability to wreak havoc on DNA has been “seriously underestimated.”

And while there have also been major advances in improving CRISPR precision and reducing these off-target effects, scientists are urging caution on human testing. That’s a big reason why He Jiankui’s experiments in producing babies with CRISPR-edited genomes are so controversial and alarming. Researchers who examined the few findings that He publicly revealed said the results showed that the babies’ genes were not edited precisely. There’s also plenty of work to be done on actually delivering the editing molecules to particular cells, a major challenge going forward.

3) Powerful new antibiotics and antivirals: One of the most frightening public health facts around is that we are running low on effective antibiotics as bacteria evolve resistance to them. Currently, it’s difficult and costly to develop fresh antibiotics for deadly infections. But CRISPR/Cas9 systems could, in theory, be developed to eradicate certain bacteria more precisely than ever (though, again, figuring out delivery mechanisms will be a challenge). Other researchers are working on CRISPR systems that target viruses such as HIV and herpes.

4) Gene drives that could alter entire species: Scientists have also demonstrated that CRISPR could be used, in theory, to modify not just a single organism but an entire species. It’s an unnerving concept called “gene drive.”

It works like this: Normally, whenever an organism like a fruit fly mates, there’s a 50-50 chance that it will pass on any given gene to its offspring. But using CRISPR, scientists can alter these odds so that there’s a nearly 100 percent chance that a particular gene gets passed on. Using this gene drive, scientists could ensure that an altered gene propagates throughout an entire population in short order.
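To make the arithmetic concrete, here is a minimal Python sketch of how a drive allele spreads compared with ordinary Mendelian inheritance. It is a textbook-style idealization, not a model of any real drive: it assumes random mating, no fitness cost to carrying the altered gene, and a hypothetical parameter `transmission` giving the chance that a carrier with one copy passes it on (0.5 for Mendelian inheritance, 1.0 for an ideal drive). The function names are illustrative.

```python
def next_frequency(q: float, transmission: float) -> float:
    """Frequency of the altered allele in the next generation.

    Assumes random mating (Hardy-Weinberg genotype frequencies) and no
    fitness cost. Carriers with two copies always pass the allele on;
    carriers with one copy pass it on with probability `transmission`.
    """
    homozygous = q * q              # fraction of individuals with two copies
    heterozygous = 2 * q * (1 - q)  # fraction with one copy
    return homozygous + heterozygous * transmission


def simulate(q0: float, transmission: float, generations: int) -> list[float]:
    """Allele frequency in each generation, starting from q0."""
    freqs = [q0]
    for _ in range(generations):
        freqs.append(next_frequency(freqs[-1], transmission))
    return freqs


mendelian = simulate(0.01, 0.5, 10)  # ordinary 50-50 inheritance
drive = simulate(0.01, 1.0, 10)      # idealized gene drive: ~100 percent
for generation, (m, d) in enumerate(zip(mendelian, drive)):
    print(f"generation {generation:2d}: mendelian={m:.3f}  drive={d:.3f}")
```

Under these assumptions, an altered gene released in 1 percent of the population exceeds 99 percent within about ten generations with the drive, while the Mendelian version stays at 1 percent. Real drives also face resistance mutations and fitness costs that would slow this down.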

By harnessing this technique, scientists could, say, genetically modify mosquitoes to only produce male offspring — and then use a gene drive to push that trait through an entire population. Over time, the population would go extinct. “Or you could just add a gene making them resistant to the malaria parasite, preventing its transmission to humans,” Vox’s Dylan Matthews explains in his story on CRISPR gene drives for malaria.

Suffice to say, there are also hurdles to overcome before this technology is rolled out en masse — and not necessarily the ones you’d expect. “The problem of malaria gene drives is rapidly becoming a problem of politics and governance more than it is a problem of biology,” Matthews writes. Regulators will need to figure out how to handle this technology, and ethicists will need to grapple with knotty questions about its fairness.

5) Creating “designer babies”: This is the one that gets the most attention, and rightly so. It’s not entirely far-fetched to think we might one day be confident enough in CRISPR’s safety to use it to edit the human genome, whether to eliminate disease or to select for athleticism or superior intelligence.

But He Jiankui’s recent attempt to introduce protection from HIV in embryos intended for pregnancy, which involved little oversight, is not how most scientists want the field to move forward.

The concern is that there are not yet enough safeguards to prevent harm, nor enough knowledge to do definite good. In He Jiankui’s case, he also did not tell his university about his experiment ahead of time, likely did not fully inform the parents of the modified babies of the risks involved, and may have had a financial incentive through his two affiliated biotech companies.

We’re not even close to the point where scientists could safely make the complex changes needed to, for instance, improve intelligence, in part because it involves so many genes. So don’t go dreaming of Gattaca just yet.

“I think the reality is we don’t understand enough yet about the human genome, how genes interact, which genes give rise to certain traits, in most cases, to enable editing for enhancement today,” Doudna said in 2015. Still, she added: “That’ll change over time.”

Wait, should we really create designer babies?


Given all the fraught issues associated with gene editing, many scientists are advocating a slow approach here. They are also trying to keep the conversation about this technology open and transparent, build public trust, and avoid some of the mistakes that were made with GMOs. But with CRISPR’s ease of use and low costs, it’s challenging to keep rogue experiments in check.

In February 2017, a report from the National Academies of Sciences, Engineering, and Medicine said that clinical trials could be greenlit in the future “for serious conditions under stringent oversight.” But it also made clear that “genome editing for enhancement should not be allowed at this time.”

Society still needs to grapple with all the ethical considerations at play here. For example, if we edited the germline, future generations wouldn’t be able to opt out, and genetic changes might be difficult to undo. Even the report’s conditional openness to clinical trials has worried some researchers, such as Francis Collins of the National Institutes of Health, who has said the US government will not fund any genomic editing of human embryos.

In the meantime, researchers in the US who can drum up their own funding, along with others in the UK, Sweden, and China, are moving forward with their own experiments.



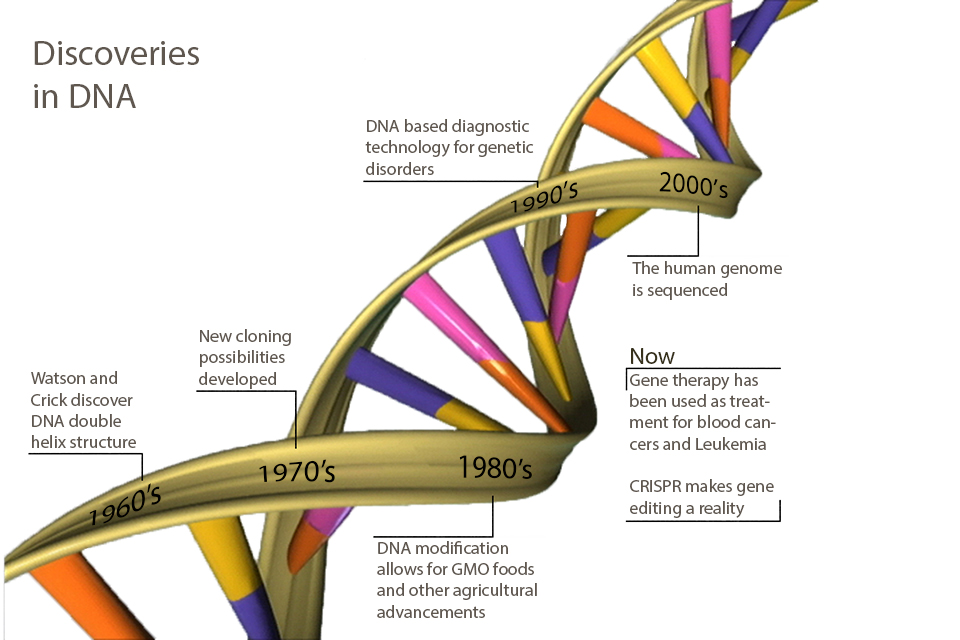

Discoveries in DNA: What’s New Since You Went to High School?

Joel Eissenberg, Ph.D., associate dean for research and professor of biochemistry and molecular biology at Saint Louis University School of Medicine, shares highlights from recent decades in the field of genetics.

Scientific and technological advances in the last 50 years have led to extraordinary progress in the field of genetics, with the sequencing of the human genome as both a high point and starting point for more breakthroughs to come.


Advances in molecular genetics have propelled progress in fields that deal with inherited diseases, cancer, personalized medicine, genetic counseling, the microbiome, diagnosis and discovery of viruses, taxonomy of species, genealogy, forensic science, epigenetics, junk DNA, gene therapy and gene editing.

For many non-scientists, a recap may be in order. With such rapid progress, the field has moved well beyond the knowledge covered in many biology classes over the years.

If you took high school biology in the 1960s, you probably learned about DNA as the genetic material and the structure of the DNA double helix (published in 1953 by Watson and Crick). You may also have learned about the genetic code, by which sequences of DNA encode amino acids (worked out by Nirenberg, Khorana and colleagues between 1961 and 1966).
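The genetic code can be illustrated with a short Python sketch that reads a DNA sequence three bases (one codon) at a time. The table below is a deliberately partial excerpt of the standard code (the full table has 64 codons), and `translate` is a hypothetical helper written for illustration; codons missing from the excerpt come out as `X`.

```python
# Partial excerpt of the standard genetic code: DNA codon -> one-letter
# amino acid. The full table has 64 codons; "*" marks a stop codon.
CODON_TABLE = {
    "ATG": "M",              # methionine, also the usual start codon
    "TTT": "F", "TTC": "F",  # phenylalanine
    "AAA": "K", "AAG": "K",  # lysine
    "GGC": "G", "GGA": "G",  # glycine
    "TGG": "W",              # tryptophan
    "GCT": "A",              # alanine
    "TAA": "*", "TAG": "*", "TGA": "*",  # stop codons
}


def translate(dna: str) -> str:
    """Translate a coding DNA sequence codon by codon until a stop codon."""
    protein = []
    usable = len(dna) - len(dna) % 3  # ignore a trailing partial codon
    for i in range(0, usable, 3):
        codon = dna[i:i + 3].upper()
        amino_acid = CODON_TABLE.get(codon, "X")  # X = not in this excerpt
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


print(translate("ATGTTTAAAGGCTGGTAA"))  # MFKGW
```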

If you took high school biology in the 1970s, you probably also learned about cloning (worked out by Paul Berg, Herb Boyer and Stanley Cohen in 1972 and 1973) and the potential for recombinant DNA technology to provide gene therapy, create novel drugs and improve agriculture.

If you took high school biology in the 1980s, you may have learned about the clinical use of recombinant human insulin for diabetes treatment (Eli Lilly’s product was approved by the FDA in the US in 1982). In agriculture, the use of Agrobacterium tumefaciens as a bacterial delivery system to transfer recombinant DNA into crops (developed by Mary-Dell Chilton and colleagues in the 1970s) marked the advent of GMO foods and other commercial plant products.

Image courtesy of the National Institutes of Health, adapted by Ellen Hutti.

If you took high school biology in the 1990s, you probably learned about the molecular basis of human genetic disorders such as Duchenne and Becker muscular dystrophy (1987), cystic fibrosis (1989), Huntington’s disease (1993) and a rapidly growing list of other single-gene disorders. You may also have learned about the correspondingly rapid growth in clinical diagnostic technology based on DNA sequence information, which enabled definitive diagnosis, sometimes before the onset of overt symptoms.

If you took high school biology in the 2000s, you probably heard about the completion of the human genome sequence. The completion of a “rough draft” was announced by President Bill Clinton and British Prime Minister Tony Blair in 2000, although a more-or-less complete sequence was not finalized until 2006. You may also have learned that this achievement heralded the arrival of the age of personalized medicine.

Although it isn’t biology, it must be acknowledged that the human genome sequencing project also required parallel advances in computer speed and storage to acquire, store and manipulate billions of nucleotides of DNA sequence. The assembly and analysis of human tumor cell genomes, many of which contain chromosome deletions, duplications and insertions, as well as single nucleotide changes, requires immense data storage capacity and high-speed computation.
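A back-of-the-envelope calculation gives a sense of the data volumes involved. The Python sketch below assumes a simple hypothetical encoding that stores each of the four bases in 2 bits (it is not the format of any particular project, and it ignores ambiguous bases such as N), which puts a single 3-billion-base haploid sequence at about 750 MB.

```python
# Hypothetical 2-bit code: each of the four bases fits in two bits.
ENCODING = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}


def pack(seq: str) -> bytes:
    """Pack a DNA string into 2 bits per base, four bases per byte."""
    out = bytearray((len(seq) + 3) // 4)
    for i, base in enumerate(seq.upper()):
        out[i // 4] |= ENCODING[base] << (2 * (i % 4))
    return bytes(out)


def packed_size_bytes(n_bases: int) -> int:
    """Bytes needed to store n_bases at 2 bits per base."""
    return (n_bases + 3) // 4


HAPLOID_GENOME = 3_000_000_000  # roughly 3 billion nucleotides

print(packed_size_bytes(HAPLOID_GENOME) / 1e6, "MB")  # 750.0 MB
print(HAPLOID_GENOME / 1e9, "GB at one text character per base")
```

Raw sequencing output, with many overlapping reads per position plus per-base quality scores, is far larger than this single compact sequence, and tumor genomes must be compared against normal ones on top of that.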

The invention of the polymerase chain reaction (PCR) by Kary Mullis and colleagues in 1985 transformed molecular genetics. It had immediate applications in DNA diagnostics: once a gene implicated in an inherited disorder had been identified and sequenced in its normal form, PCR could amplify the corresponding sequences from patient DNA in a matter of hours. Sequencing the PCR products to identify the exact mutation then took a matter of days; today it takes hours. PCR has also been used to identify emerging pathogens.
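The power of PCR comes from exponential amplification: each cycle can at most double the number of copies of the target sequence. The Python sketch below uses the standard idealized formula N = N0 * (1 + E)^n, where N0 is the starting number of copies, n the number of cycles, and E the per-cycle efficiency (1.0 meaning perfect doubling); the function name and default value are illustrative assumptions.

```python
def pcr_copies(initial_copies: int, cycles: int, efficiency: float = 1.0) -> float:
    """Idealized PCR yield: N = N0 * (1 + E) ** n.

    efficiency=1.0 means every template molecule is copied in every cycle,
    i.e. perfect doubling; real reactions run somewhat below that.
    """
    return initial_copies * (1 + efficiency) ** cycles


print(pcr_copies(1, 30))  # 1073741824.0, about a billion copies
print(f"{pcr_copies(1, 30, efficiency=0.9):.2e}")  # lower yield at 90% efficiency
```

In practice, amplification slows and plateaus in later cycles as reagents are used up, so these figures are an upper bound.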

The findings made by scientists during these decades have led to advances in many different fields. The following paragraphs look at how breakthroughs in molecular genetics are being applied in the world.

Thus far, the impact of molecular genetics on human disease has been primarily to identify specifically which genes are implicated in specific diseases. For single-gene disorders, mutations have been discovered in hundreds of genes. The current challenge is to identify which genes contribute to multifactorial conditions like obesity, heart disease, alcohol dependency, schizophrenia and autism. So far, “genome-wide association studies” have identified variant DNA sequences showing statistical association with these and other complex diseases, but demonstrating a mechanistic role for these variants has proven elusive.

Rapid and inexpensive genome sequencing, together with high-speed informatics and a large and expanding database of annotated human DNA sequence variants associated with disease risk, has made personalized medicine and customized genetic counseling possible. In a particularly famous case, actress Angelina Jolie elected to have a double mastectomy and to have her ovaries and fallopian tubes surgically removed when she learned that she carried a mutation in the BRCA1 gene that predicted an 87 percent risk of breast cancer and a 50 percent risk of ovarian cancer. Unfortunately, for the vast majority of genetic cancer associations, removing nonessential tissue is not an option. However, prior knowledge of an increased risk can lead to increased surveillance, and cancer is most curable when caught early. For example, people at increased risk for hereditary non-polyposis colorectal cancer should undergo frequent colonoscopies so that pre-cancerous colon polyps can be found and removed before they turn into full-blown colon cancer. Since 23andMe began marketing genetic testing directly to consumers, more than a dozen companies have begun offering various forms of genome sequencing and analysis.

A healthy intestine carries trillions of microorganisms, roughly as many as there are human cells in the entire body (older estimates put the ratio at about 10 to 1). The metabolic activity of these microorganisms can significantly affect health. For example, it is an important source of biotin (vitamin B7), and the composition of the gut microflora can shape the immune response, leading to sensitivity or resistance to allergies and autoimmunity. The availability of a large number of complete microbial genomes and of high-volume DNA sequencing technology has enabled the genotyping of gut microbiomes under different dietary and health conditions, leading to a new, detailed understanding of the differences between healthy and unhealthy gut microflora. Direct-to-consumer gut microflora sequencing services are available, although the benefits of this knowledge for otherwise healthy people are currently limited.

Most high school biology students learn some basic animal and plant taxonomy. The foundation of classical taxonomy is morphology. With genome sequences now available from representative species across whole phyla, rigorous quantitative measurements of genetic distance based on DNA sequence divergence have been used to test existing evolutionary trees and to reclassify organisms in all kingdoms. For example, large-scale taxonomic DNA sequence comparisons have established more rigorous relationship trees and taxonomic distances for the large and diverse class Aves (birds) and the phylum Arthropoda.
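One of the simplest ways to turn sequence divergence into a distance is the Jukes-Cantor correction, shown here as a Python sketch. It illustrates the general idea only and is not necessarily the method used in the bird or arthropod studies; it assumes the two sequences are already aligned and that all substitutions are equally likely. The raw fraction of differing sites, p, underestimates the true number of changes because a site can mutate more than once, so the correction is d = -3/4 * ln(1 - 4p/3).

```python
import math


def p_distance(seq_a: str, seq_b: str) -> float:
    """Fraction of aligned sites that differ between two sequences."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must be aligned to equal length")
    differences = sum(a != b for a, b in zip(seq_a, seq_b))
    return differences / len(seq_a)


def jukes_cantor(seq_a: str, seq_b: str) -> float:
    """Jukes-Cantor distance, correcting p for repeated substitutions."""
    p = p_distance(seq_a, seq_b)
    if p >= 0.75:
        raise ValueError("sequences too divergent for the Jukes-Cantor correction")
    return -0.75 * math.log(1 - 4 * p / 3)


a, b = "ACGTACGTAC", "ACGTTCGTAA"  # toy sequences differing at 2 of 10 sites
print(p_distance(a, b))             # 0.2
print(f"{jukes_cantor(a, b):.3f}")  # 0.233
```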

Human genome sequencing provides much more detailed and specific genealogical information. Several commercial services will provide information on likely ancestry based on combinations of DNA sequence variants known to be rare or prevalent among people originating from different regions of the world. However, it should be noted that one sometimes unwelcome outcome of genome sequences for pedigree or genealogical purposes is the discovery of non-paternity. While rates vary widely between different populations, they have ranged between 2 and 30 percent in specific studies.

Forensic science is increasingly turning to DNA sequencing to implicate or exonerate potential culprits and to identify remains. In such cases, it is usually sufficient to sequence only a subset of genomic DNA representing regions found to be most variable among individuals. This approach circumvents the much higher cost of sequencing and data management for whole genome sequencing, while providing sufficient specificity for forensic purposes.
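Why does sequencing only a few highly variable regions give enough specificity? The usual reasoning is the product rule: if the regions are inherited independently, the chance that an unrelated person matches a profile is the product of the per-region genotype frequencies. The Python sketch below assumes Hardy-Weinberg proportions and uses made-up locus names and allele frequencies purely for illustration; real casework uses standardized panels of short tandem repeat (STR) loci and population-specific frequency databases.

```python
# Made-up allele frequencies at three hypothetical loci (illustration only).
ALLELE_FREQS = {
    "locus_1": {"12": 0.10, "14": 0.20},
    "locus_2": {"8": 0.05},
    "locus_3": {"17": 0.25, "19": 0.15},
}


def genotype_frequency(freqs: dict[str, float], allele_1: str, allele_2: str) -> float:
    """Hardy-Weinberg genotype frequency: p^2 for homozygotes, 2pq otherwise."""
    p, q = freqs[allele_1], freqs[allele_2]
    return p * p if allele_1 == allele_2 else 2 * p * q


def random_match_probability(profile: dict[str, tuple[str, str]]) -> float:
    """Product rule: multiply genotype frequencies across independent loci."""
    probability = 1.0
    for locus, (allele_1, allele_2) in profile.items():
        probability *= genotype_frequency(ALLELE_FREQS[locus], allele_1, allele_2)
    return probability


EXAMPLE_PROFILE = {"locus_1": ("12", "14"), "locus_2": ("8", "8"), "locus_3": ("17", "19")}
match = random_match_probability(EXAMPLE_PROFILE)
print(f"1 in {1 / match:,.0f}")  # 1 in 133,333
```

Even with only three loci and these modest frequencies, a chance match is already rare; each additional locus multiplies the rarity further.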

High school biology students are taught Mendel’s laws of genetic inheritance. The first of these, the law of segregation, implies that a genetic trait is transmitted from one generation to the next without modification, even when it is recessive and not evident in carriers. The idea that a genetic trait could be modified by the life experience of its carrier violates this law and is typically dismissed in high school classes as the Lamarckian fallacy.
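The way a recessive trait passes silently through carriers can be checked with a small Punnett-square sketch in Python. It assumes a single gene with one dominant allele (`A`) and one recessive allele (`a`); the `cross` helper is hypothetical.

```python
from collections import Counter
from itertools import product


def cross(parent_1: str, parent_2: str) -> Counter:
    """Offspring genotype counts for a one-gene cross such as 'Aa' x 'Aa'.

    Each parent passes on one of its two alleles, and every combination is
    equally likely. Sorting writes the uppercase (dominant) allele first.
    """
    return Counter("".join(sorted(a + b)) for a, b in product(parent_1, parent_2))


offspring = cross("Aa", "Aa")  # two carriers, neither showing the trait
print(dict(offspring))  # {'AA': 1, 'Aa': 2, 'aa': 1}
print(offspring["aa"] / sum(offspring.values()))  # 0.25
```

Two carriers who do not show the trait still have, on average, one child in four who does (genotype `aa`), and the `a` allele reappears unchanged.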

Biochemists have long known that human DNA (as well as the DNA of many microbes, plants and animals) contains bases other than the canonical adenine, cytosine, guanine and thymine (ACGT). In human chromosomes, 3 to 5 percent of the cytosine bases are actually a modified form of cytosine called 5-methylcytosine. This modification is usually associated with gene repression in humans. Importantly, the extent of modification at the same gene can differ between sperm and egg, so that in the child who inherits them, dad’s copy will be expressed differently from mom’s copy. This phenomenon is called “imprinting.” Parental imprinting is important for genetic health, as failures of imprinting underlie Prader-Willi, Angelman, Beckwith-Wiedemann and Silver-Russell syndromes.

The transmission of different states of gene expression through multiple cell divisions and across generations has been termed “epigenetic,” since the underlying DNA sequence is identical in both states. While the four bases of DNA (adenine, cytosine, guanine and thymine) cannot be altered by a parent’s life experiences, scientists have discovered that one of them, cytosine, can be chemically modified (methylated) in response to environmental factors. Techniques developed over the last decade allow for “epigenomics,” the genome-wide characterization of methylation patterns. Epigenomics is presently being used to identify inherited predispositions to obesity, diabetes, cardiovascular disease, addiction and psychiatric disorders, as well as markers for aging and cancer progression.
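Genome-wide methylation maps are commonly produced by bisulfite sequencing, which exploits a chemical difference: bisulfite converts unmethylated cytosine to uracil (read as T after amplification), while 5-methylcytosine is protected and still reads as C. The Python sketch below estimates the methylated fraction at each CpG site, where most human methylation occurs, from toy reads that are assumed to be already aligned, gap-free, and from the forward strand; the function name and data are hypothetical.

```python
def methylation_levels(reference: str, reads: list[str]) -> dict[int, float]:
    """Fraction of reads reporting a methylated C at each CpG in `reference`.

    Assumes reads are already aligned to the reference without gaps, cover
    it fully, and come from the forward strand. After bisulfite treatment a
    C at a CpG position means methylated; a T means unmethylated.
    """
    levels = {}
    for i in range(len(reference) - 1):
        if reference[i:i + 2] != "CG":
            continue  # only score cytosines in the CpG context
        calls = [read[i] for read in reads if read[i] in "CT"]
        if calls:
            levels[i] = calls.count("C") / len(calls)
    return levels


REFERENCE = "ACGTTCGA"
READS = ["ACGTTCGA", "ATGTTCGA", "ATGTTCGA"]  # toy bisulfite reads
print(methylation_levels(REFERENCE, READS))  # {1: 0.3333333333333333, 5: 1.0}
```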

Drugs that inhibit or stabilize epigenetic marks are in clinical use for cancer and are being tested for other indications like sickle cell disease.

For over 50 years, it has been known that among multicellular animals and plants, genome size can vary by orders of magnitude in ways that are not explained by the apparent complexity of the organisms those genomes specify. Whole genome sequencing has made it evident that, for example, the number of genes in humans is not much greater than that of the fruit fly. Much of our genome consists of repetitive DNA sequences, transposable elements and nonfunctional relics of genes and transposons. This DNA has been termed “junk DNA” to convey its apparent lack of function.

In the past 15 years, however, detailed analysis of which regions of DNA are transcribed into RNA copies has uncovered a significant number of non-protein-coding RNAs that serve regulatory functions. So-called microRNAs have been shown to target specific protein-coding RNAs for destruction or to inhibit their translation into protein. Other RNAs, termed long noncoding RNAs, also appear to regulate expression of protein-coding RNAs, but the mechanisms are only now being worked out. That said, this still leaves a majority of our genome with no apparent function. It seems likely that the mechanisms by which DNA accumulates in genomes, through transposon jumping and genome duplications, are not balanced by a similar rate of sequence elimination, and that carrying this unpurged DNA has little or no evolutionary cost.
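How does a microRNA find its targets? A widely used rule of thumb is seed pairing: nucleotides 2 to 8 of the microRNA pair with a complementary stretch of the target RNA. The Python sketch below scans for perfect seed matches only, using a made-up microRNA and target sequence; real target prediction also weighs site type, position and evolutionary conservation.

```python
COMPLEMENT = {"A": "U", "U": "A", "G": "C", "C": "G"}


def reverse_complement(rna: str) -> str:
    """Reverse complement of an RNA sequence (A pairs with U, G with C)."""
    return "".join(COMPLEMENT[base] for base in reversed(rna))


def seed_sites(mirna: str, target: str) -> list[int]:
    """0-based positions in `target` that pair perfectly with the seed.

    The seed is taken as nucleotides 2-8 of the microRNA (mirna[1:8]); a
    site is a stretch of target RNA equal to the seed's reverse complement.
    """
    site = reverse_complement(mirna[1:8])
    return [i for i in range(len(target) - len(site) + 1) if target.startswith(site, i)]


EXAMPLE_MIRNA = "UCCAGUACGGAUGCAAGCUUAG"     # made-up 22-nt microRNA
EXAMPLE_TARGET = "AAAGUACUGGAAACCCGUACUGGUU"  # made-up target RNA fragment
print(seed_sites(EXAMPLE_MIRNA, EXAMPLE_TARGET))  # [3, 16]
```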

A major goal for gene sequencing has always been to serve as a platform for designing and implementing gene therapy. The first gene therapy was bone marrow engraftment for the treatment of leukemia and other blood cancers. The first human bone marrow transplant was performed in 1956 by E. Donnall Thomas. In these therapies, the patient’s own blood-producing bone marrow cells are ablated with radiation and chemotherapy, and blood stem cells from a twin or closely matched donor are infused. The idea is that the ablation will destroy any remnant of the cancer together with the healthy hematopoietic stem cells, and that the donor’s blood stem cells will populate the patient’s marrow and regenerate the entire red and white blood cell repertoire from healthy cells. In effect, this is gene therapy, since the donor’s genes replace the patient’s genes in the blood cell lineages.

Targeted gene therapies, however, had to wait for (1) the identification of the genes to target, (2) the cloning and/or sequencing of the relevant genes and, in some cases, the specific disease-causing variant, (3) a full understanding of the normal gene function and regulation, and (4) the development of efficient ways to deliver genes to the relevant tissues at therapeutic levels. The advent of molecular cloning, DNA sequencing and the many tools of molecular genetics and cell biology has given us sufficient knowledge of the basis for disease and the genes to target; what has limited the application of gene therapy has been the lack of efficient gene delivery systems.

Scientists realized that viruses could be the perfect tool for delivering therapeutic genes: many are already built by nature to insert their genes into our cells, and some, like retroviruses, into our DNA itself. The first successful targeted human gene therapy was reported in 2000. It used a virus to deliver a therapeutic gene to patients with X-linked severe combined immunodeficiency. The deck was stacked in favor of success with this particular disease, since it was known that the therapeutic gene only had to be expressed in a modest number of blood cells to achieve therapeutic benefit. The therapeutic gene was carried by an engineered retrovirus that was used to infect the patient’s blood cells before they were re-injected into the patient.

Thus far, targeted gene therapy successes have been very limited. Other blood disorders that have shown significant benefit from targeted gene therapy in small trials include hemophilia (specifically, factor IX deficiency), severe beta-thalassemia (a deficiency of adult beta-globin) and leukemia, where the patient’s immune cells were engineered to recognize and destroy cancer cells. Targeted gene therapy for degenerative blindness caused by Leber congenital amaurosis improved vision for a few years but failed to arrest the degeneration process.

The first approved commercial targeted gene therapy was alipogene tiparvovec (trade name Glybera), which uses a virus to deliver the human lipoprotein lipase gene to the muscle cells of patients with lipoprotein lipase deficiency. It was approved for clinical use in Europe in 2012.

For many genetic disorders, the disease results from the expression of a defective gene product, not the complete absence of the product. For example, sickle cell disease and the major form of cystic fibrosis are both associated with abnormal proteins. In such cases, editing a patient’s own gene to the normal form should provide greater benefit than merely expressing the normal protein in the presence of the abnormal one.

For gene editing to work, it is essential to target a single unique site among the 3 billion nucleotides in the haploid human genome (a single set of unpaired chromosomes). In other words, a therapeutic gene editor must efficiently edit the intended target without introducing off-target edits at sites that happen to resemble it.
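A quick calculation shows why the length of the recognized sequence matters. Assuming, unrealistically, a random genome with equal base frequencies, a specific k-nucleotide sequence is expected to occur by chance about 2 * 3 billion / 4^k times, counting both strands. The Python sketch below evaluates this for a few lengths; the function name is illustrative.

```python
def expected_random_matches(k: int, genome_length: int = 3_000_000_000) -> float:
    """Expected chance occurrences of one specific k-nucleotide sequence.

    Assumes a random genome with equal base frequencies and counts both
    strands, so the expectation is 2 * genome_length / 4**k.
    """
    return 2 * genome_length / 4 ** k


for k in (10, 12, 16, 20):
    print(f"{k}-nt target: ~{expected_random_matches(k):.3g} chance matches")
```

Under these assumptions a 10-nucleotide target would be expected thousands of times by chance, while a 20-nucleotide target would be expected far less than once (about 0.005 times). Real genomes contain repeats, and editors can tolerate some mismatches, so actual off-target risk is higher than this idealized estimate.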

Two targeting strategies are currently under development—protein-based targeting and RNA-based targeting. In both cases, the idea is to target an enzyme that cuts both strands of the double helix at a specific site. If the goal is to inactivate the target gene, the creation of the break is sufficient to trigger cellular mechanisms that lead to error-prone repair and inactivating mutations. True editing—the replacement of bad sequence with good sequence—requires the simultaneous introduction of DNA fragments containing good sequence into the same cells.

Protein-based targeting strategies rely on custom-engineered modular proteins that recognize and bind to specific DNA sequences. One approach builds on the so-called zinc finger protein fold first described in sequence-specific transcription factors. The publicly traded biotech company Sangamo BioSciences was founded in 1995 to exploit zinc finger protein engineering for gene therapy and agricultural genetic engineering. In this approach, a series of zinc finger modules, each chosen to recognize a specific 3-nucleotide motif, are fused in tandem to one another and to a nuclease subunit.
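The modular logic of zinc finger design can be sketched in a few lines of Python: split the target site into 3-nucleotide subsites and pick one finger module per subsite. The module names here (`ZF-GCG` and so on) are hypothetical placeholders, not a real module library. The sketch also reflects the fact that zinc finger arrays bind DNA antiparallel, with the N-terminal finger contacting the 3'-most triplet; the first example is the classic Zif268 binding site.

```python
def zinc_finger_array(target: str) -> list[str]:
    """List hypothetical finger modules, N- to C-terminal, for a DNA target.

    Each finger reads one 3-nt subsite. Because the array binds antiparallel,
    the N-terminal finger reads the 3'-most triplet, so triplets are walked
    from the 3' end to the 5' end. Module names are placeholders.
    """
    target = target.upper()
    if len(target) % 3:
        raise ValueError("target length must be a multiple of 3")
    triplets = [target[i:i + 3] for i in range(0, len(target), 3)]
    return [f"ZF-{triplet}" for triplet in reversed(triplets)]


print(zinc_finger_array("GCGTGGGCG"))  # ['ZF-GCG', 'ZF-TGG', 'ZF-GCG']
print(zinc_finger_array("GAAGGCTTT"))  # ['ZF-TTT', 'ZF-GGC', 'ZF-GAA']
```

In practice, neighboring fingers influence one another, so simple modular assembly like this does not always yield a protein that binds well.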

Another protein-based targeting strategy, TALENs, is based on transcription activator-like effectors (TALEs), proteins secreted by Xanthomonas bacteria. In this case, repeating modules of 33 to 34 amino acids, each specific to one of the four DNA bases, are fused in tandem to create the targeting peptide.
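In TALE repeats, the base specificity is set mainly by the amino acids at positions 12 and 13, known as the repeat-variable diresidue (RVD). A commonly used one-to-one code (NI for A, HD for C, NN for G, NG for T) makes designing the repeat array almost mechanical, as this Python sketch shows. The function name is illustrative, and real designs also account for details such as the T that usually precedes natural TALE binding sites.

```python
# Commonly used repeat-variable diresidues (amino acids 12 and 13 of a TALE
# repeat), keyed by the DNA base each one recognizes.
RVD_FOR_BASE = {"A": "NI", "C": "HD", "G": "NN", "T": "NG"}


def tale_repeats(target: str) -> list[str]:
    """RVD for each repeat, in order, needed to recognize `target` 5' to 3'."""
    return [RVD_FOR_BASE[base] for base in target.upper()]


print(tale_repeats("TGACC"))  # ['NG', 'NN', 'NI', 'HD', 'HD']
```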

More recently, the clustered regularly interspaced short palindromic repeats (CRISPR) prokaryotic immunity mechanism has been exploited for gene editing. In this case, specific DNA sequences are targeted by RNA-DNA hybridization, which directs the Cas9 enzyme to cleave DNA at the target site. This mechanism was only worked out in 2007, but it has already emerged as the front-runner technology for gene editing because of its relative simplicity and high efficiency. In human cells, zinc-finger- and TALE-mediated editing achieve efficiencies of 1 to 50 percent, while CRISPR-Cas9 editing has been reported to reach efficiencies of up to 78 percent in single-cell mouse embryos. Exciting clinical applications of gene editing include correcting the mutation in the bone marrow stem cells of patients with sickle cell disease or hemophilia.
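Because Cas9 is guided by RNA, choosing a target is largely a sequence search. For the widely used Streptococcus pyogenes Cas9, a target is a 20-nucleotide protospacer immediately followed by an NGG protospacer-adjacent motif (PAM). The Python sketch below finds such sites on the forward strand only, using a regular-expression lookahead so that overlapping sites are not missed; the function name and example sequence are hypothetical, and a real design tool would also scan the reverse complement and score potential off-target matches.

```python
import re


def find_cas9_sites(dna: str, protospacer_len: int = 20) -> list[tuple[int, str, str]]:
    """Candidate SpCas9 sites on the forward strand: protospacer + NGG PAM.

    Returns (start, protospacer, pam) tuples. The zero-width lookahead
    (?=...) lets overlapping candidate sites all be reported.
    """
    dna = dna.upper()
    pattern = re.compile(rf"(?=([ACGT]{{{protospacer_len}}})([ACGT]GG))")
    return [(m.start(), m.group(1), m.group(2)) for m in pattern.finditer(dna)]


EXAMPLE = "C" + "ACGTACGTACGTACGTACGT" + "AGG" + "TT"  # made-up sequence
print(find_cas9_sites(EXAMPLE))  # [(1, 'ACGTACGTACGTACGTACGT', 'AGG')]
```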

10 Recent Scientific Breakthroughs And Discoveries

Contemporary science is a hotbed of cutting-edge research. Astronomers across the globe have marveled at the first photographic evidence of a black hole. An unconventional in vitro fertilization (IVF) technique has come under fire from medical professionals.

Elsewhere, scientists from Japan have fired explosives at an asteroid, and in Germany, researchers have discovered a prehistoric molecule from the early years of the universe. Setting aside the negative headlines, like the Israeli spacecraft that crash-landed on the Moon or the Indian missile test that might have endangered the International Space Station, here are ten of the most fascinating breakthroughs to have made recent headlines.

10 Astronomers Have Bombed An Asteroid

A group of Japanese astronomers has bombed the asteroid Ryugu, hoping it can answer some fundamental questions about the origins of life on Earth. A cone-shaped device known as the Small Carry-on Impactor used an explosive charge to fire a baseball-sized lump of copper into the asteroid, blasting out a crater.

The device was deployed from the Hayabusa2 spacecraft, a pioneering exploration mission operated by the Japan Aerospace Exploration Agency. The spacecraft will head back to Ryugu at a later date to collect samples from beneath the asteroid’s surface that had been uncovered in the blast.

Researchers predict there is a wealth of organic material and water from the birth of the solar system preserved underground in the asteroid. By analyzing the samples from Hayabusa 2, they hope to gain a clearer understanding of the early stages of the solar system and of life on Earth. [1]

9 Can We Taste Smells?

It seems our tongues might be more capable than we originally realized. A research team from Philadelphia has suggested that receptor cells in the tongue are able to detect odors. Their work is prompting experts to reassess whether taste and smell are combined by the brain alone or whether there is some level of interaction between the two signals before they reach it.

The group, whose findings were published in the journal Chemical Senses, began by experimenting on receptors in genetically modified mice. They then moved on to human cells, which displayed properties similar to those of the mouse cells and were found to respond to aromatic compounds.

For now, it is far too early to draw any concrete conclusion, but with further development, these ideas may be applied to persuade people to eat a healthier diet. Dr. Mehmet Hakan Ozdener, a specialist at the Monell Chemical Senses Center, has suggested that mildly altering the scent of some foods could reduce sugar intake. [2]

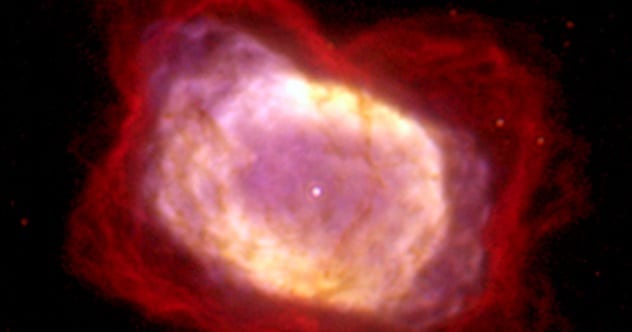

8 Molecule Detected From The ‘Dawn Of Chemistry’

After decades of scouring the cosmos, experts have successfully detected the compound helium hydride, thought to be the first molecule formed in the history of the universe. Due to the obstructive effects of the Earth’s atmosphere, researchers decided to take to the skies to make their landmark discovery. The Stratospheric Observatory for Infrared Astronomy, an airborne observatory built into a modified Boeing 747, was able to pick up infrared signals emanating from the prehistoric molecule.

In a period dubbed the “dawn of chemistry,” around 100,000 years after the Big Bang, the universe had cooled to a low enough temperature that particles began to interact and coalesce. In this era, when light atoms and molecules first came into being, it was helium hydride that paved the way for far more intricate interstellar structures to come. By continuing to investigate the elusive molecule, researchers are able to explore the expansion of the universe in its nascent stages. [3]

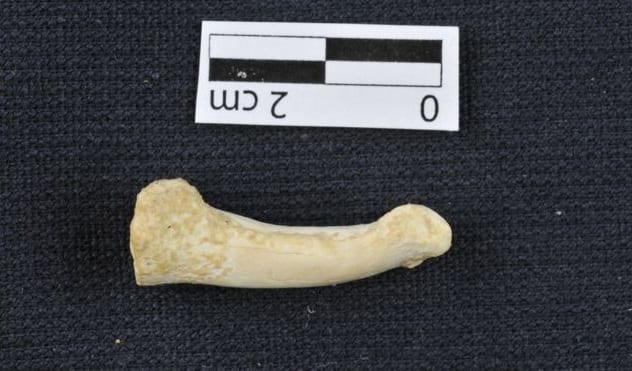

7 New Species Of Primitive Human Discovered

Another strand has been added to the history of human evolution. Remnants of an extinct relative have been found in Callao Cave on the island of Luzon in the Philippines. The primitive species, known as Homo luzonensis, is said to have resembled modern humans in some respects but in others was closer to our ancient ape-like ancestors. On top of that, they are thought to have been competent climbers, as indicated by the curved bones in their fingers and toes.

The discovery poses a number of questions about the long and complex history of our species. With only 13 teeth and bones on which to base their hypotheses, experts remain in the dark as to how Homo luzonensis came to be on the island in the first place. Furthermore, the species’ primitive features raise the possibility that hominins more primitive than Homo erectus made the journey out of Africa to Southeast Asia, an idea that contradicts current theories. [4]

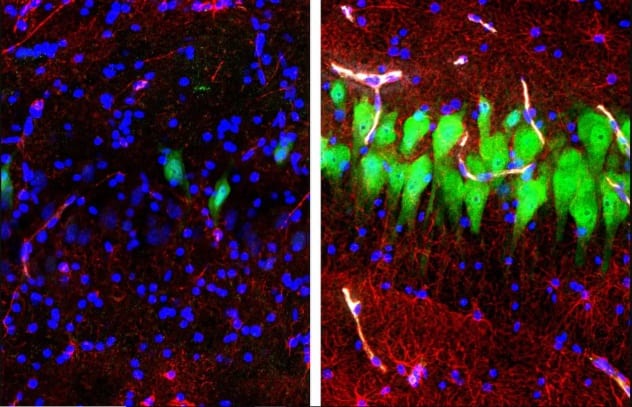

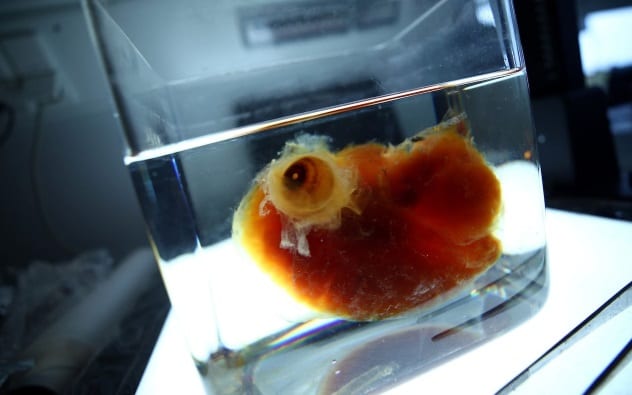

6 Pig Brain Revived After Death

The zombie pigs are said to be among us. A team of neuroscientists from the Yale University School of Medicine has demonstrated that it is possible to partially revive a pig’s brain hours after death. Their pioneering new system, BrainEx, has restored a number of basic functions, such as the ability to absorb sugars and oxygen, to over 30 dead brains. (The left picture above shows a dead brain, and the right shows a partially reactivated brain.)

The technology involved in BrainEx sends an oxygen-rich solution pulsing through the pig’s grey matter. This fluid partially revives the cells for a maximum of six hours, while also slowing down the process of deterioration after death. However, the brains are, by definition, still dead; there is no evidence of consciousness being reinstated.

This highly advanced research raises the ethical question of whether it is right to experiment on semi-living beings. The National Institutes of Health has been discussing the implications of BrainEx since 2016 through its Neuroethics Working Group and is wary of the possible consequences of using similar technology on humans. [5]

5 Scientists Create Transparent Organs

Organ transplants may soon become a thing of the past. For years, scientists have striven to create fully functioning artificial organs to address the significant shortage of donors. The dream is now one step closer, courtesy of Dr. Ali Erturk and his colleagues at Ludwig Maximilian University in Munich.

The team has successfully developed a technique to make human organs transparent, with the aim of better understanding their elaborate inner structures. Organic solvents are used to remove fats and pigments without disturbing the underlying tissue. The cleared organs can then be explored in intricate detail using a laser scanner, which allows scientists to build up a complete structural image of the body part.

Erturk is confident that, as technology improves, these scans can be used as blueprints to produce 3-D bioprinted replica organs. The team hopes to have constructed a 3-D printed kidney by 2025. [6]

4 Obesity-Resistant Genes Discovered

The link between genetics and body mass has been known for many years, but now scientists at Cambridge University have identified specific gene variants that help keep people slim. Around four million people in Britain, six percent of the population with European ancestry, carry DNA variants that protect them from gaining large amounts of weight.

Previous studies had established that the MC4R gene encodes the melanocortin 4 receptor, a protein in the brain associated with appetite. Participants in this study who carried particular variants of MC4R showed greater restraint in their appetites, making them far less likely to develop obesity or type 2 diabetes. This deeper understanding of genetics opens up the possibility of a slimming medication to combat rising levels of obesity. [7]

3 Memory Loss Reversed

By stimulating the brain with electrical pulses, scientists have been able to temporarily offset the debilitating effects of memory loss. With age, vital cognitive networks in the brain begin to lose their synchrony. This leads to the deterioration of working memory, the short-term processing system that plays a key role in tasks like facial recognition and arithmetic.

Now, neuroscientists at Boston University have found that noninvasive electrical stimulation appears to improve the connection between these networks. The researchers reported that a study group of 60- to 76-year-olds performed significantly better in a series of working memory tasks after around half an hour of pulse treatment. Those with the most pronounced memory issues showed the biggest improvements.

Further clinical trials are needed to determine whether this stimulation is a viable method for combating memory loss or dementia. [8]

2 Baby Born With DNA From Three People

In a breakthrough moment for in vitro fertilization, a baby has been born in Greece with DNA from three different people. The newborn boy was conceived using a mitochondrial donation technique, in which the mother’s egg is modified using a second egg from a donor. During the procedure, the mitochondria of the mother’s egg (tiny structures that provide power to the cell) are replaced by those of the donor. While the vast majority of the baby’s genetic material has been passed down from his parents, an extremely small amount of his DNA, around 0.2 percent, originates from the donor.

Doctors claim this is the first time that mitochondrial donation has been applied to combat fertility issues. Spanish embryologist Nuno Costa-Borges has labeled the healthy birth a “revolution in assisted reproduction” and claims that it has the potential to help a multitude of would-be mothers in the future.

However, critics of the treatment are warning women to proceed with caution. Reproductive expert Tim Child has pointed out how little is known about the risks or benefits of the technique and has dismissed Costa-Borges’s claims as unsupported by evidence. [9]

1 First Image Of A Black Hole

For the past century, astrophysicists have known black holes only through theoretical predictions and indirect observations. The cosmic giants have a gravitational pull so powerful that not even light can escape it, which has made their existence incredibly challenging to verify. And yet, in spite of these difficulties, scientists have managed to generate an image of one.

The picture in question is of the fiery disk of accreted gas surrounding the black hole at the core of the Messier 87 galaxy. With a diameter of 38 billion kilometers (23.6 billion mi), this active supermassive colossus lurks 55 million light-years from our planet. The black hole itself is impossible to literally “see,” but the dark area at the center of the ring corresponds to its shadow.

To capture the image, a task described by leading astrophysicist France Córdova as “Herculean,” scientists deployed the Event Horizon Telescope (EHT), a global network of high-precision radio telescopes. [10] The observations produced so much data that it could not be transferred over the Internet. Instead, half a ton of hard drives had to be flown to a central location, where the readings were combined using state-of-the-art processing techniques.

The image appears to confirm predictions about black holes made by Einstein’s general theory of relativity, which describes how immensely massive objects distort space and time. The EHT researchers now have their sights on Sagittarius A*, the supermassive beast at the heart of the Milky Way.

How Genetics Is Changing Our Understanding of ‘Race’

In 1972, genetic findings began to be incorporated into this argument. That year, the geneticist Richard Lewontin published an important study of variation in protein types in blood. He grouped the human populations he analyzed into seven “races” (West Eurasians, Africans, East Asians, South Asians, Native Americans, Oceanians and Australians) and found that around 85 percent of variation in the protein types could be accounted for by variation within populations and “races,” and only 15 percent by variation across them. To the extent that there was variation among humans, he concluded, most of it was because of “differences between individuals.”

In this way, a consensus was established that among human populations there are no differences large enough to support the concept of “biological race.” Instead, it was argued, race is a “social construct,” a way of categorizing people that changes over time and across countries.

It is true that race is a social construct. It is also true, as Dr. Lewontin wrote, that human populations “are remarkably similar to each other” from a genetic point of view.

But over the years this consensus has morphed, seemingly without question, into an orthodoxy. The orthodoxy maintains that the average genetic differences among people grouped according to today’s racial terms are so trivial when it comes to any meaningful biological traits that those differences can be ignored.

The orthodoxy goes further, holding that we should be anxious about any research into genetic differences among populations. The concern is that such research, no matter how well-intentioned, is located on a slippery slope that leads to the kinds of pseudoscientific arguments about biological difference that were used in the past to try to justify the slave trade, the eugenics movement and the Nazis’ murder of six million Jews.

I have deep sympathy for the concern that genetic discoveries could be misused to justify racism. But as a geneticist I also know that it is simply no longer possible to ignore average genetic differences among “races.”

Groundbreaking advances in DNA sequencing technology have been made over the last two decades. These advances enable us to measure with exquisite accuracy what fraction of an individual’s genetic ancestry traces back to, say, West Africa 500 years ago, before the mixing in the Americas of the West African and European gene pools, which had been almost completely isolated from each other for the previous 70,000 years. With the help of these tools, we are learning that while race may be a social construct, differences in genetic ancestry that happen to correlate with many of today’s racial constructs are real.

Recent genetic studies have demonstrated differences across populations not just in the genetic determinants of simple traits such as skin color, but also in more complex traits like bodily dimensions and susceptibility to diseases. For example, we now know that genetic factors help explain why northern Europeans are taller on average than southern Europeans, why multiple sclerosis is more common in European-Americans than in African-Americans, and why the reverse is true for end-stage kidney disease.

I am worried that well-meaning people who deny the possibility of substantial biological differences among human populations are digging themselves into an indefensible position, one that will not survive the onslaught of science. I am also worried that whatever discoveries are made — and we truly have no idea yet what they will be — will be cited as “scientific proof” that racist prejudices and agendas have been correct all along, and that those well-meaning people will not understand the science well enough to push back against these claims.

This is why it is important, even urgent, that we develop a candid and scientifically up-to-date way of discussing any such differences, instead of sticking our heads in the sand and being caught unprepared when they are found.

To get a sense of what modern genetic research into average biological differences across populations looks like, consider an example from my own work. Around 2003, I began exploring whether the population mixture that has occurred in the last few hundred years in the Americas could be leveraged to find risk factors for prostate cancer, a disease that occurs 1.7 times more often in self-identified African-Americans than in self-identified European-Americans. This disparity had not been explained by dietary and environmental differences, suggesting that genetic factors might play a role.

Self-identified African-Americans turn out to derive, on average, about 80 percent of their genetic ancestry from enslaved Africans brought to America between the 16th and 19th centuries. My colleagues and I searched the genomes of 1,597 African-American men with prostate cancer for locations where the fraction of genes contributed by West African ancestors was larger than it was elsewhere in the genome. In 2006, we found exactly what we were looking for: a location in the genome with about 2.8 percent more African ancestry than the average.

When we looked in more detail, we found that this region contained at least seven independent risk factors for prostate cancer, all more common in West Africans. Our findings could fully account for the higher rate of prostate cancer in African-Americans than in European-Americans. We could conclude this because African-Americans who happen to have entirely European ancestry in this small section of their genomes had about the same risk for prostate cancer as random Europeans.

Did this research rely on terms like “African-American” and “European-American” that are socially constructed, and did it label segments of the genome as being probably “West African” or “European” in origin? Yes. Did this research identify real risk factors for disease that differ in frequency across those populations, leading to discoveries with the potential to improve health and save lives? Yes.

While most people will agree that finding a genetic explanation for an elevated rate of disease is important, they often draw the line there. Finding genetic influences on a propensity for disease is one thing, they argue, but looking for such influences on behavior and cognition is another.

But whether we like it or not, that line has already been crossed. A recent study led by the economist Daniel Benjamin compiled information on the number of years of education from more than 400,000 people, almost all of whom were of European ancestry. After controlling for differences in socioeconomic background, he and his colleagues identified 74 genetic variations that are over-represented in genes known to be important in neurological development, each of which is incontrovertibly more common in Europeans with more years of education than in Europeans with fewer years of education.

It is not yet clear how these genetic variations operate. A follow-up study of Icelanders led by the geneticist Augustine Kong showed that these genetic variations also nudge people who carry them to delay having children. So these variations may explain longer schooling by affecting a behavior that has nothing to do with intelligence.

This study has been joined by others finding genetic predictors of behavior. One of these, led by the geneticist Danielle Posthuma, studied more than 70,000 people and found genetic variations in more than 20 genes that were predictive of performance on intelligence tests.

Is performance on an intelligence test or the number of years of school a person attends shaped by the way a person is brought up? Of course. But does it measure something having to do with some aspect of behavior or cognition? Almost certainly. And since all traits influenced by genetics are expected to differ across populations (because the frequencies of genetic variations are rarely exactly the same across populations), the genetic influences on behavior and cognition will differ across populations, too.

You will sometimes hear that any biological differences among populations are likely to be small, because humans have diverged too recently from common ancestors for substantial differences to have arisen under the pressure of natural selection. This is not true. The ancestors of East Asians, Europeans, West Africans and Australians were, until recently, almost completely isolated from one another for 40,000 years or longer, which is more than sufficient time for the forces of evolution to work. Indeed, the study led by Dr. Kong showed that in Iceland, there has been measurable genetic selection against the genetic variations that predict more years of education in that population just within the last century.

To understand why it is so dangerous for geneticists and anthropologists to simply repeat the old consensus about human population differences, consider what kinds of voices are filling the void that our silence is creating. Nicholas Wade, a longtime science journalist for The New York Times, rightly notes in his 2014 book, “A Troublesome Inheritance: Genes, Race and Human History,” that modern research is challenging our thinking about the nature of human population differences. But he goes on to make the unfounded and irresponsible claim that this research is suggesting that genetic factors explain traditional stereotypes.

One of Mr. Wade’s key sources, for example, is the anthropologist Henry Harpending, who has asserted that people of sub-Saharan African ancestry have no propensity to work when they don’t have to because, he claims, they did not go through the type of natural selection for hard work in the last thousands of years that some Eurasians did. There is simply no scientific evidence to support this statement. Indeed, as 139 geneticists (including myself) pointed out in a letter to The New York Times about Mr. Wade’s book, there is no genetic evidence to back up any of the racist stereotypes he promotes.

Another high-profile example is James Watson, the scientist who in 1953 co-discovered the structure of DNA, and who was forced to retire as head of Cold Spring Harbor Laboratory in 2007 after he stated in an interview, without any scientific evidence, that research had suggested that genetic factors contribute to lower intelligence in Africans than in Europeans.