The information revolution: how do you understand it?

Rethinking the information revolution

Beyond the particular needs it fulfils, all technological innovation is underpinned by a common driving force: making information flow more efficiently. Ever since the first modern humans walked the earth, we’ve assumed that their survival instinct drove innovation. It certainly did, but we forget that without the ability to pass information efficiently from one generation to the next, our ancestors would have had to reinvent the most basic things every time they needed them.

From the beginning of human civilisation until today, our aim has been to increase what might be termed brain-to-brain bandwidth. The idea encompasses not just the flow of information from one person to another but also how effectively it is transmitted, that is, how well the person receiving it understands or uses it.

We’ve come to associate the information revolution with the last 50 years. But that is only because the industrial revolution that preceded it made life easy enough for us to focus primarily on information and its transmission. Is the information revolution slowing down, though? Certainly not.

The machine of the dreamers

The personal computer was expected to make its way into every home well before the 1990s, but its limited speed and memory prevented that. For many years its main users were technology geeks, nerds and hackers.

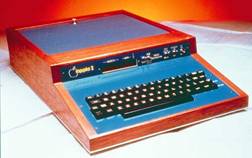

While no one doubted the Apple I’s achievement from a purely technical standpoint, giants of the field like IBM did not believe in the dream of the PC enthusiasts. In 1976 it was hard to imagine how an abstruse gadget in a wooden case, with the title “Apple I” scrawled over the headpiece, could have a large impact on ordinary life. But should it have been so difficult? The fundamental role of information in our lives seems to have been underplayed.

By the time the personal computer, as we know it*, was first built, it had already been over a decade since Gordon Moore’s prediction that the number of components on an integrated circuit would double every two years^.
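The doubling rule in Moore’s prediction is ordinary compound growth: a quantity that doubles every T years grows by a factor of 2^(t/T) after t years. The minimal sketch below only evaluates that formula; the function name is hypothetical, and the inputs are a decade with either the two-year doubling period cited above or the 18-month period discussed in the footnote.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger a quantity becomes after `years`
    if it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    decade = 10
    # Doubling every two years: five doublings in a decade.
    print(f"2-year doubling:   x{growth_factor(decade, 2.0):.0f}")
    # Doubling every 18 months (the period attributed to David House).
    print(f"18-month doubling: x{growth_factor(decade, 1.5):.0f}")
```

Over one decade the two periods differ by roughly a factor of three (about 32 versus about 102), which is why the choice of doubling period matters so much in long-range forecasts.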

A general-purpose information-processing device was bound to be in demand and to become cheap enough for many to afford. But it still took a genius and a rebel like Steve Jobs to force the incumbents to accept that the PC age had begun.

The byproduct of science

The next innovation after the PC to have a comparable impact on humanity’s brain-to-brain bandwidth was the internet. The PC offered a better way to access and manipulate information; the internet took things a step further by letting us connect that information with relative ease.

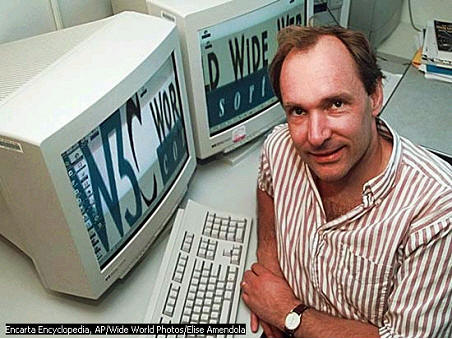

However, as with the PC before it, mass adoption took time. Sir Tim Berners-Lee’s World Wide Web, first devised at CERN as a means of sharing information between physicists, took off in the mid-1990s. Since then the internet has disrupted not just information transfer mechanisms but many other markets. From the postal system to the education system, anything that has information transfer at its heart has been changed by the internet.

The rise of social

While many might dispute that social media was the next big innovation, there is little doubt that adding a personal touch to information flow has made a huge difference. If a social networking website is defined as one that lets you create a profile page, connect with friends and view your friends’ connections, then the first was SixDegrees.com, launched in 1997.

But innovation in this sector is reaching a plateau. All social networking websites have essentially the same features: profiles, news feeds, data sharing (photos, links, documents, etc.) and many ways of bringing users together in groups or through direct communication. We’ve now reached a point where people are spending less time on social networks than before.

Virtually face to face

The next innovation in brain-to-brain bandwidth is being touted to come from wearable computing, be it smartwatches or products like Google Glass. But these look like incremental developments rather than paradigm-shifting ones.

What we really need is a virtual way to replicate the water-cooler effect. The effect is named after the way colleagues who meet by chance at the office water cooler end up serendipitously exchanging ideas. It is thought that the internet has made such chance encounters rarer and has thus slowed the pace of innovation.

This formed the core of a recent note from Marissa Mayer, Yahoo’s CEO, asking Yahoo employees to stop working from home. Many decried the note, calling her out of touch with reality. But she has a point: there is a lot of value in face-to-face communication, and no innovation has yet come close to replicating it.

Solving this problem would truly change the world. Economists have estimated that the easiest way to double world GDP would be to get rid of international borders, which is, of course, a politically implausible proposition. But if technology could make a person’s virtual presence nearly as good as their physical presence, this dividend need not remain unrealised.

And perhaps Yahoo workers could start working from home again.

* Many will dispute which exactly was the first personal computer. Perhaps it was GENIAC, built in 1955. The Apple II, released in 1977, was among the first mass-produced PCs. And one of the first PCs with the graphical user interface we have become so accustomed to was the Lisa, released in 1983.

^ The often-quoted period of 18 months was a modification by David House of Intel, who said that the growth in computing power would come not just from more transistors but also from faster ones.

Information Revolution

Foreword by Valey Kamalov

The information revolution led us to the age of the internet, where optical communication networks play a key role in delivering massive amounts of data. The world has experienced phenomenal network growth during the last decade, and further growth is imminent. The internet will continue to expand due to user population growth and internet penetration: previously inaccessible geographical regions in Africa and Asia will come online. Network growth will only be accelerated by improvements in integrated circuits. Transistor size has been halved every two years since the middle of the last century. The new internet-based global economy requires a worldwide network with high capacity and availability, which is currently limited by submarine optical communication cables.

Philosophy of Computing and Information Technology

Equity and access

The information revolution has been claimed to exacerbate inequalities in society, such as racial, class and gender inequalities, and to create a new digital divide, in which those who have the skills and opportunities to use information technology effectively reap the benefits while others are left behind. Computer ethics studies how both the design of information technologies and their embedding in society could increase inequalities, and how ethical policies may be developed that result in a fairer and more just distribution of their benefits and disadvantages. This research includes ethical analyses of the accessibility of computer systems and services for various social groups, studies of social biases in software and systems design, normative studies of education in the use of computers, and ethical studies of the digital gap between industrialized and developing countries.

Data processing

Abstract

An information revolution is currently occurring in agriculture, resulting in the production of massive datasets at different spatial and temporal scales; efficient techniques for processing and summarizing data are therefore crucial for effective precision management. With the profusion and wide diversification of data sources provided by modern technology, such as remote and proximal sensing, sensor datasets could be used as auxiliary information to supplement a sparsely sampled target variable. Remote and proximal sensing data are often massive, taken on different spatial and temporal scales, and subject to measurement error biases. Moreover, differences between the instruments are always present; nevertheless, a data fusion approach could take advantage of their complementary features by combining the sensor datasets in a statistically robust manner. Ideally, then, partial information from the diverse sources available today would be used jointly (fused) efficiently enough to achieve a more comprehensive view and knowledge of the processes under study. The chapter investigates the data fusion process in agriculture and introduces the concepts of geostatistical data fusion with applications in remote and proximal sensing.
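Geostatistical fusion is beyond a short example, but the core idea of statistically combining sensors with different measurement errors can be illustrated with inverse-variance weighting, a simpler technique than the geostatistical methods the chapter introduces. This sketch assumes each sensor gives an unbiased, independent estimate of the same quantity at the same location with a known error variance; the `Reading` type, the `fuse` function, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    value: float     # sensor estimate of the target variable
    variance: float  # assumed-known measurement error variance (> 0)


def fuse(readings: list[Reading]) -> Reading:
    """Inverse-variance weighted average of independent, unbiased readings.

    More precise sensors (smaller variance) receive larger weights, and the
    fused variance is smaller than that of any single input.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    value = sum(w * r.value for w, r in zip(weights, readings)) / total
    return Reading(value=value, variance=1.0 / total)


if __name__ == "__main__":
    # Hypothetical readings: a precise proximal sensor and a noisier remote one.
    proximal = Reading(value=0.30, variance=0.01)
    remote = Reading(value=0.36, variance=0.04)
    fused = fuse([proximal, remote])
    print(f"fused value {fused.value:.3f}, variance {fused.variance:.4f}")
```

Here the fused estimate (0.312) sits closer to the more precise sensor, and its variance (0.008) is below that of either input, which is the benefit a fusion approach aims for.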

Information Society, Geography of

The information revolution associated with the development of the digital computer is the latest revolution in communications technologies that enable a spatially extensive society to annihilate space with time, to extend control over larger areas, and to facilitate human experience with other places. A new economic geography is emerging in the information society around a spatial division of labor, between core locations where knowledge-based activities employing face-to-face communication cluster, and more peripheral locations where routine activities can be carried out cheaply, coordinated and monitored from the core. Spatial inequalities also exist in the location of, and access to, the infrastructure of information technology. The information society is having a contradictory impact on space. The relationship between the geographical propinquity of social actors and similarities in their situation with respect to other social actors is weakening. Whereas some places are becoming closer to one another, others remain distant in the spaces of telecommunication. The information society is undermining the cohesion of place, as individuals are less tied to place-based communities, but in other ways place is being reinforced. The information society is simultaneously contributing to both the empowerment of localities and the globalization of interactions, thereby reinforcing ‘glocalization.’

Information Technology and the Productivity Challenge

Interorganizational Processes

This kind of dynamic is seen throughout our economy as relationships between organizations become more information intensive. The availability of computerized data has enabled government to demand more and more detailed information from hospitals, banks, military contractors, and universities. It has led bank customers to expect 24-hour information on their accounts, and users of overnight delivery services to demand instant tracking of their packages. Firms expect to be able to tap into their suppliers’ computers to find out the status of their orders, their inventory levels, and so on. Car dealerships are required to provide the auto companies with detailed breakdowns of what sold in the last week.

Whatever the long-term implications of such phenomena, in the immediate term IT is placing greater burdens of information-work upon organizations, and firms have to make sizable investments of personnel and equipment to provide this kind of information access. In highly competitive environments, or when facing legally mandated demands, corporations may have no way of passing on the costs of all this information provision to those who demand it. Thus the burden of information provision absorbs or attenuates the benefits accruing from increased productivity elsewhere in the organization.

The hacker group

Introduction

Since the information revolution, the Internet has been a driving force behind many, if not most, social reforms. From the 1% marches to the Arab Spring, the Internet was used to fuel, coordinate, and facilitate protests. The Internet turned out to be a safe haven for liberal thinkers and was used to establish contacts with other like-minded individuals at the other end of the globe. The global nature of the Internet makes (targeted) communication accessible to anyone. This was at the core of many great revelations: WikiLeaks being the first, with The Intercept and Edward Snowden following quickly.

In the early days the Internet was a safe haven for free thinkers; there was no censorship and no laws were directly applicable. This opened up opportunities on the Internet to influence governments and their laws. However, this situation has changed: The Internet has become securitized and militarized. Whereas the Internet used to be a place aimed at free and unhindered flow of information and ideas, now it is increasingly influenced by State actors and large non-State actors. Whereas any individual could tread onto the Internet and fight for a cause, nowadays you need to tread carefully.

These different contexts require a versatile, intelligent, and very specific type of individual to fight on the digital forefront. This chapter zooms in on the cornerstone of cyber guerilla: the hacker group. Sections 1 and 2 focus on the two roles hacker group members have to be able to fulfill. Mirroring the amorphous character of cyber guerilla, group members should be able to fulfill the role of (1) social reformer and (2) combatant. These two sections describe the ideological foundations of hacker group members. Section 3 describes the composition of the hacker group and the intellectual capacities and skill sets the group needs.

Digital systems

9.5.12 Broadband ISDN and asynchronous transfer mode (ATM)

The continuing information revolution requires services with ever-increasing data rates. Even the individual consumer, who is limited by dial-up telephone lines using 56 kbps modems or ISDN, is increasingly demanding high-speed broadband access into the home to achieve the best Internet experience possible. “Broadband” is the term used to describe high-speed access to data, voice, and video signals. With this capability, Web pages appear faster, audio and video files arrive quickly, and more than one person in a household can access the Internet at the same time. Broadband technologies such as cable, satellite, and digital subscriber line (DSL) bring Internet information to the home, in any format, with quality results. A service, newer than ISDN, called broadband ISDN (B-ISDN), which is based on the asynchronous transfer mode (ATM), supports connectivity at rates up to, and soon exceeding, 155 Mbps. There are major differences between broadband ISDN and ISDN. Specifically, end-to-end communication is by asynchronous transfer mode rather than by synchronous transfer mode (STM) 42 as in ISDN. Since the early 1990s, the most important technical innovation to come out of the broadband ISDN effort is ATM. It was developed by telephone companies, primarily AT&T. It is a high-data-rate transmission technique—in some ways similar to traditional telephone circuit switching—made possible by fast and reliable computers that can switch and route very small packets of a message, called cells, over a network at rates in the hundreds of Mbps.

Generally speaking, packet switching is a technique in which a message is first divided into segments of convenient length, called packets. Once the network software has divided (fragmented) the message, the packets are transmitted individually and, if necessary, stored in a queue at network nodes 43 and transmitted in order when a time slot on a link becomes free. Finally, the packets are reassembled at the destination into the original message. What distinguishes the different network technologies is how the packets are fragmented and transmitted. For example, short-range Ethernet networks typically interconnect office computers and use packets of up to 1500 bytes called frames; the Internet’s TCP/IP uses packets of up to 64 kbytes called datagrams; and ATM uses very short packets (cells) of 53 bytes.
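The fragment, transmit, and reassemble cycle described above can be sketched in a few lines. This is a minimal illustration, assuming each packet carries only a sequence number and a slice of the message; real protocols add headers with addresses, lengths, and checksums. The `Packet` type and function names are hypothetical, and the 16-byte payload limit is arbitrary.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this segment in the original message
    payload: bytes  # at most `max_payload` bytes of the message


def fragment(message: bytes, max_payload: int) -> list[Packet]:
    """Divide a message into numbered packets of at most `max_payload` bytes."""
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    return [
        Packet(seq=i, payload=message[start:start + max_payload])
        for i, start in enumerate(range(0, len(message), max_payload))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message from packets that may have arrived out of order."""
    ordered = sorted(packets, key=lambda p: p.seq)
    return b"".join(p.payload for p in ordered)


if __name__ == "__main__":
    message = b"Packets travel independently and are reassembled at the destination."
    packets = fragment(message, max_payload=16)
    random.shuffle(packets)  # simulate packets arriving in a different order
    assert reassemble(packets) == message
    print(f"{len(packets)} packets reassembled correctly")
```

Sorting by sequence number is what lets the receiver cope with packets that were queued differently along the way and arrived out of order.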

From this point forward, when we speak of packet switching, we mean the TCP/IP connectionless service 44 used by the Internet, in which the end-to-end message transmission delay depends on the number of nodes involved and the level of traffic in the network. It can be a fraction of a second, or even take hours if network routes are busy. Since packets are sent only when needed (a link is dedicated only for the duration of a packet’s transmission), links are frequently available for other connections. Packet switching is therefore more efficient than circuit switching, but at the expense of increased transmission delays. This type of connectionless communication with variable-length packets (referred to as IP packets or datagrams) is well suited to any digital data transmission that is “bursty,” i.e., that does not need to communicate for an extended period of time and then needs to communicate large quantities of information as fast as possible, as in email and file transfer. But because of potentially large delays, packet switching was assumed to be less suitable for real-time services (streaming media) such as voice or live video, where the information flow is more even but very sensitive to delays and to when and in what order the information arrives. Because of these characteristics, separate networks have been used for voice and video (STM) and for data. This has been a contentious issue, because practically minded corporations prefer a single data network that can also carry voice, given that there is more data traffic than voice traffic.
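Why packet switching uses links more efficiently for bursty traffic can be made concrete with a small probability calculation, a common illustration of statistical multiplexing. The numbers are illustrative assumptions, not from the text: a 1 Mbps link, users who need 100 kbps while active, and each user active independently 10% of the time. The function name is hypothetical.

```python
from math import comb


def prob_overload(users: int, p_active: float, capacity: int) -> float:
    """Probability that more than `capacity` of `users` independent sources
    are active at once, when each is active with probability `p_active`."""
    return sum(
        comb(users, k) * p_active**k * (1 - p_active) ** (users - k)
        for k in range(capacity + 1, users + 1)
    )


if __name__ == "__main__":
    link_kbps, user_kbps, p_active = 1000, 100, 0.1
    # Circuit switching reserves user_kbps per user, whether active or not.
    circuit_users = link_kbps // user_kbps
    # Packet switching lets more users share the link; demand exceeds the
    # link only when more than `circuit_users` of them transmit at once.
    packet_users = 35
    p = prob_overload(packet_users, p_active, circuit_users)
    print(f"circuit switching: {circuit_users} users")
    print(f"packet switching:  {packet_users} users, overload probability {p:.4f}")
```

Under these assumptions circuit switching admits 10 users, while 35 packet-switched users exceed the link’s capacity only about 0.04% of the time; those rare overloads are when queueing delays build up.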

ATM combines the efficiency of packet switching with the reliability of circuit switching. A connection, called a virtual circuit (VC), is established between two end points before data transfer is begun and torn down when end-to-end communication is finished. ATM can guarantee each virtual circuit the quality of service (QoS) it needs. For example, data on a virtual circuit used to transmit video can experience small, almost constant delays. On the other hand, a virtual circuit used for data traffic will experience variable delays which could be large. It is this ability to transmit traffic with such different characteristics that made ATM an attractive network technology. However, because of its cost, currently it is only occasionally used and many providers are phasing it out.
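The connection-oriented life cycle described above (set up a virtual circuit, send data over it with the service level it was granted, tear it down when finished) can be sketched as a small table. This is a hypothetical illustration, assuming a single table for the whole network and just two service classes; it omits the per-switch routing state and signalling a real ATM network uses, and every class and method name is invented.

```python
import itertools
from dataclasses import dataclass
from enum import Enum


class ServiceClass(Enum):
    REAL_TIME = "real-time"      # e.g. video: small, nearly constant delay
    BEST_EFFORT = "best-effort"  # e.g. bulk data: variable delay is acceptable


@dataclass
class VirtualCircuit:
    vci: int  # hypothetical circuit identifier
    source: str
    destination: str
    service: ServiceClass


class CircuitTable:
    """Hypothetical network-wide table of open virtual circuits."""

    def __init__(self) -> None:
        self._circuits: dict[int, VirtualCircuit] = {}
        self._ids = itertools.count(1)

    def set_up(self, source: str, destination: str, service: ServiceClass) -> int:
        """Call-setup phase: open a circuit before any data is sent."""
        vci = next(self._ids)
        self._circuits[vci] = VirtualCircuit(vci, source, destination, service)
        return vci

    def send(self, vci: int, data: bytes) -> str:
        """Data transfer is allowed only over an established circuit."""
        circuit = self._circuits.get(vci)
        if circuit is None:
            raise LookupError(f"no open virtual circuit {vci}")
        return (f"{len(data)} bytes {circuit.source}->{circuit.destination} "
                f"({circuit.service.value})")

    def tear_down(self, vci: int) -> None:
        """Release the circuit once end-to-end communication is finished."""
        self._circuits.pop(vci, None)


if __name__ == "__main__":
    table = CircuitTable()
    video = table.set_up("camera", "viewer", ServiceClass.REAL_TIME)
    print(table.send(video, b"frame-data"))
    table.tear_down(video)
    try:
        table.send(video, b"late frame")
    except LookupError as err:
        print("rejected:", err)
```

The last call fails because the circuit no longer exists, mirroring the rule that data flows only between setup and teardown.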

The most recent implementation of ATM improves packet switching by putting the data into short, fixed-length packets (cells). These small packets can be switched very quickly (Gbps range) by ATM switches 45 (fast computers), are transported to the destination by the network, and are reassembled there. Many ATM switches can be connected together to build large networks. The fixed length of the ATM cells, which is 53 bytes (48 bytes of data and 5 bytes of header information), allows the information to be conveyed in a predictable manner for a large variety of different traffic types on the same network. Each stage of a link can operate asynchronously (asynchronous here means that the cells are sent at arbitrary times), but as long as all the cells reach their destination in the correct order, it is irrelevant at what precise bit rate the cells were carried. Furthermore, ATM identifies and prioritizes different virtual circuits, thus guaranteeing those connections requiring a continuous bit stream sufficient capacity to ensure against excessive time delays. Because ATM is an extremely flexible technology for routing 46 short, fixed-length packets over a network, ATM coexists with current LAN/WAN (local/wide area network) technology and smoothly integrates numerous existing network technologies such as Ethernet and TCP/IP. However, because the Internet with TCP/IP is currently the preferred service, ATM is slowly being phased out.
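The fixed 53-byte cell format (48 bytes of data plus a 5-byte header) can be illustrated by segmenting a packet into cells. This minimal sketch assumes a hypothetical header that holds only a 16-bit circuit identifier and zero-pads the final cell; it is not the real ATM header layout, and it ignores the adaptation-layer framing real ATM applies before segmentation.

```python
HEADER_BYTES = 5
PAYLOAD_BYTES = 48
CELL_BYTES = HEADER_BYTES + PAYLOAD_BYTES  # 53


def make_header(vci: int) -> bytes:
    """Hypothetical 5-byte header carrying only a 16-bit circuit identifier.

    Real ATM headers pack several fields (including a header checksum)
    into these 5 bytes; this sketch just zero-fills the remaining 3 bytes.
    """
    return vci.to_bytes(2, "big") + bytes(3)


def segment(packet: bytes, vci: int) -> list[bytes]:
    """Split a packet into 53-byte cells, zero-padding the last payload."""
    cells = []
    for start in range(0, len(packet), PAYLOAD_BYTES):
        chunk = packet[start:start + PAYLOAD_BYTES].ljust(PAYLOAD_BYTES, b"\x00")
        cells.append(make_header(vci) + chunk)
    return cells


if __name__ == "__main__":
    ethernet_payload = bytes(1500)
    cells = segment(ethernet_payload, vci=100)
    wire_bytes = len(cells) * CELL_BYTES
    print(f"{len(cells)} cells, {wire_bytes} bytes on the wire, "
          f"overhead {wire_bytes / len(ethernet_payload) - 1:.1%}")
```

For a 1500-byte Ethernet payload this gives 32 cells, or 1696 bytes on the wire, roughly 13% more than the packet itself: the price paid for a fixed, predictable cell size.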

How does ATM identify and prioritize the routing of packets through the network? In the virtual-circuit packet-switching technique that ATM uses, a call-setup phase first establishes a route before any packets are sent. It is a connection-oriented service and in that sense resembles an old-fashioned circuit-switched telephone network, because all packets use the established circuit; but it also differs from traditional circuit switching because at each node of the established route, packets are queued and must wait their turn to be transmitted onward. It is the order in which these packets are transmitted that makes ATM different from traditional packet-switching networks. By considering the quality of service required for each virtual circuit, the ATM switches prioritize the incoming cells. In this manner, delays can be kept low for real-time traffic, for example.
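The prioritization step can be sketched as a strict-priority queue at one switch output. This is a deliberate simplification, assuming just two hypothetical service classes and a switch that always sends a waiting real-time cell before any best-effort cell; real ATM switches use more elaborate scheduling and traffic control. The `PRIORITY` mapping and class names are invented for illustration.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical priority levels: a lower number is served first.
PRIORITY = {"real-time": 0, "best-effort": 1}


@dataclass(order=True)
class QueuedCell:
    priority: int
    arrival: int                      # tie-breaker: FIFO order within a class
    vci: int = field(compare=False)
    payload: bytes = field(compare=False)


class OutputPort:
    """Strict-priority output queue for one link of a simplified switch."""

    def __init__(self) -> None:
        self._heap: list[QueuedCell] = []
        self._arrivals = itertools.count()

    def enqueue(self, vci: int, service: str, payload: bytes) -> None:
        """Queue an incoming cell according to its circuit's service class."""
        cell = QueuedCell(PRIORITY[service], next(self._arrivals), vci, payload)
        heapq.heappush(self._heap, cell)

    def transmit_next(self) -> Optional[QueuedCell]:
        """Send the waiting cell with the highest priority, or None if idle."""
        return heapq.heappop(self._heap) if self._heap else None


if __name__ == "__main__":
    port = OutputPort()
    port.enqueue(vci=7, service="best-effort", payload=b"file chunk 1")
    port.enqueue(vci=5, service="real-time", payload=b"voice sample")
    port.enqueue(vci=7, service="best-effort", payload=b"file chunk 2")
    while (cell := port.transmit_next()) is not None:
        print(cell.vci, cell.payload)
```

Although the voice cell arrived second, it is sent first; the two file cells then follow in their arrival order, which keeps delay low for the real-time circuit.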

In short, ATM technology can smoothly integrate different types of traffic by using virtual circuits to switch small fixed-length packets called cells. In addition, ATM can provide quality of service guarantees to each virtual circuit although this requires sophisticated network control mechanisms whose discussion is beyond the scope of this text.

Summarizing, we can say that we have considered three different switching mechanisms: circuit switching used by the telephone network, datagram packet switching used by the Internet, and virtual circuit switching in ATM networks. Even though ATM was developed primarily as a high-speed (Gbps rates over optical fibers), connection-oriented networking technology that uses 53-byte cells, modern ATM networks accept and can deliver much larger packets. Modern ATM is very flexible and smoothly integrates different types of information and different types of networks that run at different data rates. Thus connectionless communication of large file-transfer packets, for example, can coexist with connection-oriented communication of real-time voice and video. When an ATM switch in a network receives an IP packet (up to 64 kbytes) or an Ethernet packet (1500 bytes), it simply fragments the packet into smaller ATM cells and transmits the cells to the next node. Even though this technology appeared promising, it is being phased out in favor of TCP/IP which is less costly and more flexible.

Public Health

III.E.1. Information Management

Given the “information revolution” our society is experiencing, it is not uncommon to assume that “information” implies only the involvement of computers and communication technology. In organizational settings, one often further assumes that the major issue involved is the introduction of information technology within the organization. What is often overlooked is that the introduction of information technology in an organization is much more of a social process than it is a technical one. If the people involved in information management are to coordinate the acquisition and provision of information effectively, they must understand how people process information, both as individuals and as members of organized groups or units. The real challenges in implementing successful information systems are those of managing people and their perceptions.

Digital Divide, The

III.A. Community Networks

Community networks are community-based electronic network services, provided at little or no cost to users. In essence, community networks establish a new technological infrastructure that augments and restructures the existing social infrastructure of the community.

Most community networks began as part of the Free-Net movement during the mid-1980s. According to the Victoria Free-Net Association, Free-Nets are “loosely organized, community-based, volunteer-managed electronic network services. They provide local and global information sharing and discussion at no charge to the Free-Net user or patron.” This includes discussion forums or real-time chat dealing with various social, cultural, and political topics such as upcoming activities and events, ethnic interests, or local elections, as well as informal bartering, classifieds, surveys and polls, and more. The Cleveland Free-Net, founded in 1986 by Dr. Tom Grundner, was the first community network. It grew out of the “St. Silicon’s Hospital and Information Dispensary,” an electronic bulletin board system (BBS) for health care that evolved from an earlier bulletin board system, the Chicago BBS.

In 1989, Grundner founded The National Public Telecomputing Network (NPTN) which, according to the Victoria Free-Net Association, “evolved as the public lobbying group, national organizing committee, and public policy representative for U.S.-based Free-Nets and [contributed] to the planning of world-wide Free-Nets.” NPTN grew to support as many as 163 affiliates in 42 states and 10 countries. However, in the face of rapidly declining commercial prices for Internet connectivity, and a steady increase in the demands to maintain high-quality information services, NPTN (and many of its affiliates) filed for bankruptcy in 1996. While a number of Free-Nets still exist today, many of the community networking initiatives that are presently active have incorporated some aspects of the remaining models for community technology: community technology centers and community content.

The aforementioned study at RAND’s CIRA involved the evaluation of the following five community networks: (1) Public Electronic Network, Santa Monica, CA; (2) Seattle Community Network, Seattle, WA; (3) Playing to Win Network, New York, NY; (4) LatinoNet, San Francisco, CA; and (5) Blacksburg Electronic Village (BEV), Blacksburg, VA. Their findings included increased participation in discussion and decision making among those who were politically or economically disadvantaged, in addition to the following results:

Improved access to information—Computers and the Internet allowed “individuals and groups to tap directly into vast amounts and types of information from on-line databases and from organizations that advertise or offer their products and services online.”

Restructuring of nonprofit and community-based organizations—Computers and the Internet assisted these organizations in operating more effectively.

Delivery of government services and political participation—Computers and the Internet promoted a more efficient dissemination of local and federal information and services and encouraged public awareness of, and participation in, government processes.

Examples of other community networks include Big Sky Telegraph, Dillon, Montana; National Capital Free-Net, Ottawa, Ontario; Buffalo Free-Net, Buffalo, New York; and PrairieNet, Urbana-Champaign, Illinois.

The Information Revolution

Publisher Summary

The information revolution is a period of change that might prove highly significant to people’s lives. Computer technology is at the root of this change, and continuing advancements in that technology seem to ensure that this revolution will touch everyone’s lives. Computers are unique machines: they help extend our brain power. Computerized robots have been replacing blue-collar workers, and they might soon replace white-collar workers as well. Computers are merely devices that follow sets of instructions, called computer programs or software, written by people called computer programmers. Computers offer many benefits, but there are also many dangers: they could help others invade one’s privacy or wage war, and they might turn people into button pushers and cause massive unemployment. User-friendly systems can be easily used by untrained people. The key development that made personal computers possible was the invention of the microprocessor chip at Intel in 1971.

WEEE generation and the consequences of its improper disposal

2.1 Introduction

Today, ICTs and other consumer electronics have infiltrated every nook and cranny of modern society as they are highly desirable and considered indispensable in social life. The multifunctionality of most ICTs makes them especially attractive to consumers. However, due to the relatively short lifespan of most of these devices, the accumulation of a large volume of waste electrical and electronic equipment (WEEE) has become a major problem at the local and international levels. Rapid product obsolescence within the high-tech industry has led to unprecedented generation and accumulation of WEEE. The rapid generation of WEEE and its improper management and disposal, which have significant adverse effects on human health and the biophysical environment, represent the dark side of ICTs. The adverse consequences are linked directly to the hazardous, nonbiodegradable, bioaccumulative, and persistent properties of the chemical elements and compounds found in WEEE.

E-waste poses a serious threat to the health of human and nonhuman species as well as to ecological sustainability around the globe. The sheer volume of e-waste produced and disposed of annually raises concerns about the adverse consequences for the environment and human health. What are the consequences of the rapid generation, improper management, and disposal of WEEE? This chapter is devoted to addressing this salient question. The socioeconomic, environmental, and human health consequences of WEEE are addressed in the following pages. This chapter reviews the patterns of flow of WEEE from the Global North to the Global South, the Basel Convention, the opportunities and risks associated with e-waste, and an array of adverse health and environmental consequences of improper or crude management of e-waste.

Digital systems

9.5.12 Broadband ISDN and asynchronous transfer mode (ATM)

The continuing information revolution requires services with ever-increasing data rates. Even the individual consumer, who is limited by dial-up telephone lines using 56 kbps modems or ISDN, is increasingly demanding high-speed broadband access into the home to achieve the best Internet experience possible. “Broadband” is the term used to describe high-speed access to data, voice, and video signals. With this capability, Web pages appear faster, audio and video files arrive quickly, and more than one person in a household can access the Internet at the same time. Broadband technologies such as cable, satellite, and digital subscriber line (DSL) bring Internet information to the home, in any format, with quality results. A service, newer than ISDN, called broadband ISDN (B-ISDN), which is based on the asynchronous transfer mode (ATM), supports connectivity at rates up to, and soon exceeding, 155 Mbps. There are major differences between broadband ISDN and ISDN. Specifically, end-to-end communication is by asynchronous transfer mode rather than by synchronous transfer mode (STM) 42 as in ISDN. Since the early 1990s, the most important technical innovation to come out of the broadband ISDN effort is ATM. It was developed by telephone companies, primarily AT&T. It is a high-data-rate transmission technique—in some ways similar to traditional telephone circuit switching—made possible by fast and reliable computers that can switch and route very small packets of a message, called cells, over a network at rates in the hundreds of Mbps.

Generally speaking, packet switching is a technique in which a message is first divided into segments of convenient length, called packets. Once the network software has divided (fragmented) the message, the packets are transmitted individually; if necessary, they are stored in a queue at network nodes and transmitted in order when a time slot on a link is free. Finally, the packets are reassembled at the destination into the original message. It is how the packets are fragmented and transmitted that distinguishes the different network technologies. For example, short-range Ethernet networks, which typically interconnect office computers, use packets of 1500 bytes called frames; the Internet’s TCP/IP uses packets of up to 64 kbytes called datagrams; and ATM uses very short packets (cells) of 53 bytes.
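The fragment, transmit and reassemble cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not any real protocol stack: `Packet`, `fragment` and `reassemble` are hypothetical names, and each packet simply carries a sequence number so that the destination can restore the original order.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this segment in the original message
    payload: bytes  # at most max_size bytes of the message


def fragment(message: bytes, max_size: int) -> list[Packet]:
    """Divide a message into packets of at most max_size bytes."""
    if max_size <= 0:
        raise ValueError("max_size must be positive")
    return [
        Packet(seq=i, payload=message[offset:offset + max_size])
        for i, offset in enumerate(range(0, len(message), max_size))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the original message, whatever order the packets arrived in."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


if __name__ == "__main__":
    message = b"x" * 4000
    packets = fragment(message, 1500)  # 1500-byte packets, as in the Ethernet example
    random.shuffle(packets)            # packets may arrive out of order
    assert reassemble(packets) == message
    print(len(packets))                # 3
```

Sorting on the sequence number is what lets individually routed packets be put back together; the technologies listed above differ mainly in the packet size and in how the packets travel between fragmentation and reassembly.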

From this point forward, by packet switching we mean the TCP/IP connectionless service used by the Internet, in which the end-to-end message transmission delay depends on the number of nodes involved and the level of traffic in the network. It can be a fraction of a second, or it can take hours if network routes are busy. Since packets are sent only when needed (a link is dedicated only for the duration of a packet’s transmission), links are frequently available for other connections. Packet switching is therefore more efficient than circuit switching, but at the expense of increased transmission delays. This type of connectionless communication with variable-length packets (referred to as IP packets or datagrams) is well suited to digital data that are “bursty”, i.e., traffic that has nothing to send for an extended period and then needs to send large quantities of information as fast as possible, as in email and file transfer. But because of potentially large delays, packet switching was long assumed to be unsuitable for real-time services (streaming media) such as voice or live video, where the information flow is more even but very sensitive to delays and to when and in what order the information arrives. Because of these characteristics, separate networks have been used for voice and video (STM) and for data. This has been a contentious issue: practically minded corporations prefer a single data network that can also carry voice, because there is more data traffic than voice traffic.
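To make the dependence on the number of nodes and on traffic concrete, here is a simplified store-and-forward delay model. It assumes every link runs at the same rate, ignores propagation and processing delays, and treats the queueing delay at each node as a given input (in a real network it varies with traffic). `end_to_end_delay` is a hypothetical helper, and the numbers in the usage lines are illustrative only.

```python
def end_to_end_delay(packet_bytes: int, link_rate_bps: float,
                     queueing_delays_s: list[float]) -> float:
    """Simplified store-and-forward delay of one packet along a path.

    One entry in queueing_delays_s per link on the path: the time the packet
    waits in the queue of the node feeding that link. Every link is assumed to
    run at the same rate; propagation and processing delays are ignored.
    """
    transmission_s = packet_bytes * 8 / link_rate_bps  # time to push the packet onto one link
    return sum(transmission_s + wait for wait in queueing_delays_s)


if __name__ == "__main__":
    # Hypothetical path of 5 links at 10 Mbps carrying a 1500-byte packet.
    idle = end_to_end_delay(1500, 10e6, [0.0] * 5)   # no queueing anywhere
    busy = end_to_end_delay(1500, 10e6, [0.05] * 5)  # 50 ms wait at every node
    print(f"idle: {idle:.4f} s, busy: {busy:.4f} s")  # idle: 0.0060 s, busy: 0.2560 s
```

Adding nodes adds one transmission time per hop, while traffic shows up as queueing delay, which in this toy example dominates the total once the network is busy.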

ATM combines the efficiency of packet switching with the reliability of circuit switching. A connection, called a virtual circuit (VC), is established between two end points before data transfer is begun and torn down when end-to-end communication is finished. ATM can guarantee each virtual circuit the quality of service (QoS) it needs. For example, data on a virtual circuit used to transmit video can experience small, almost constant delays. On the other hand, a virtual circuit used for data traffic will experience variable delays which could be large. It is this ability to transmit traffic with such different characteristics that made ATM an attractive network technology. However, because of its cost, currently it is only occasionally used and many providers are phasing it out.
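The establish, use, tear down lifecycle of a virtual circuit can be sketched as a toy Python model. This is an illustration under simplifying assumptions, not ATM’s actual signalling procedure: the `Network` and `VirtualCircuit` classes and the QoS labels are hypothetical, and the model only tracks which circuits exist and which QoS was requested for each.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class VirtualCircuit:
    src: str
    dst: str
    qos: str  # illustrative labels such as "video" or "data"


class Network:
    """Toy connection-oriented network: no data moves until a circuit is set up."""

    def __init__(self) -> None:
        self._circuits: dict[int, VirtualCircuit] = {}
        self._next_id = count(1)

    def setup(self, src: str, dst: str, qos: str) -> int:
        """Set-up phase: record the end points and requested QoS, return a VC id."""
        vc_id = next(self._next_id)
        self._circuits[vc_id] = VirtualCircuit(src, dst, qos)
        return vc_id

    def send(self, vc_id: int, data: bytes) -> tuple[str, str, bytes]:
        """Data transfer is only allowed on an established circuit; its
        destination and QoS apply to everything sent on it."""
        vc = self._circuits.get(vc_id)
        if vc is None:
            raise RuntimeError(f"virtual circuit {vc_id} is not established")
        return vc.dst, vc.qos, data

    def teardown(self, vc_id: int) -> None:
        """Release the circuit once end-to-end communication is finished."""
        self._circuits.pop(vc_id, None)

    def is_open(self, vc_id: int) -> bool:
        return vc_id in self._circuits


if __name__ == "__main__":
    net = Network()
    vc = net.setup("A", "B", "video")   # set-up comes before any data
    print(net.send(vc, b"frame"))       # ('B', 'video', b'frame')
    net.teardown(vc)                    # communication finished
    print(net.is_open(vc))              # False
```

Recording the QoS at set-up time is what later allows the network to treat a video circuit differently from a data circuit.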

The most recent implementation of ATM improves packet switching by putting the data into short, fixed-length packets (cells). These small packets can be switched very quickly (Gbps range) by ATM switches (fast computers), are transported to the destination by the network, and are reassembled there. Many ATM switches can be connected together to build large networks. The fixed length of ATM cells, 53 bytes (48 bytes of data and 5 bytes of header information), allows information to be conveyed in a predictable manner for a large variety of traffic types on the same network. Each stage of a link can operate asynchronously (asynchronous here means that cells are sent at arbitrary times), but as long as all the cells reach their destination in the correct order, the precise bit rate at which they were carried is irrelevant. Furthermore, ATM identifies and prioritizes different virtual circuits, guaranteeing connections that require a continuous bit stream enough capacity to protect them against excessive delays. Because ATM is an extremely flexible technology for routing short, fixed-length packets over a network, it coexists with current LAN/WAN (local/wide area network) technology and smoothly integrates numerous existing network technologies such as Ethernet and TCP/IP. However, because the Internet with TCP/IP is currently the preferred service, ATM is slowly being phased out.
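A minimal sketch of dividing data into fixed-length 53-byte cells (5-byte header plus 48-byte payload) follows. The header layout is an assumption made for illustration (a 4-byte circuit identifier and a 1-byte last-cell flag), not the real ATM header format, and the final cell is zero-padded to 48 bytes. For simplicity the receiver is told the original length so it can strip the padding; a real adaptation layer carries such information in the cell stream itself.

```python
import struct

CELL_SIZE = 53
HEADER_SIZE = 5
PAYLOAD_SIZE = CELL_SIZE - HEADER_SIZE  # 48 bytes of data per cell


def make_header(vc_id: int, last: bool) -> bytes:
    # Hypothetical 5-byte header: 4-byte circuit id + 1-byte "last cell" flag.
    # Real ATM headers use a different field layout.
    return struct.pack(">IB", vc_id, 1 if last else 0)


def segment(data: bytes, vc_id: int) -> list[bytes]:
    """Split data into 53-byte cells, zero-padding the final payload."""
    chunks = [data[i:i + PAYLOAD_SIZE] for i in range(0, len(data), PAYLOAD_SIZE)] or [b""]
    cells = []
    for n, chunk in enumerate(chunks):
        last = n == len(chunks) - 1
        payload = chunk.ljust(PAYLOAD_SIZE, b"\x00")  # every cell has the same length
        cells.append(make_header(vc_id, last) + payload)
    return cells


def reassemble(cells: list[bytes], length: int) -> bytes:
    """Strip the headers, concatenate the payloads and drop the padding."""
    return b"".join(cell[HEADER_SIZE:] for cell in cells)[:length]


if __name__ == "__main__":
    data = bytes(range(100))
    cells = segment(data, vc_id=7)
    print(len(cells), {len(c) for c in cells})  # 3 {53}
    assert reassemble(cells, len(data)) == data
```

Because every cell has exactly the same size, a switch always knows where one cell ends and the next begins, which is part of what makes fast, predictable hardware switching possible.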

How does ATM identify and prioritize the routing of packets through the network? In the virtual circuit packet-switching technique that ATM uses, a call-setup phase first establishes a route before any packets are sent. It is a connection-oriented service and in that sense is similar to an old-fashioned circuit-switched telephone network, because all packets then use the established circuit. It differs from traditional circuit switching, however, because at each node of the established route packets are queued and must wait to be forwarded. It is the order in which these queued packets are transmitted that makes ATM different from traditional packet-switching networks. By considering the quality of service required by each virtual circuit, the ATM switches prioritize the incoming cells. In this manner, delays can be kept low for real-time traffic, for example.
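One simple way for a switch to favour real-time circuits is a strict-priority output queue, sketched below. This is an assumption for illustration only: real ATM switches use more sophisticated traffic-control mechanisms, and `CellQueue`, the `PRIORITY` table and its class names are hypothetical. Cells from higher-priority circuits are sent first, while cells of the same class keep their arrival order, so each circuit’s cells still leave the switch in sequence.

```python
import heapq
from itertools import count

# Hypothetical priority per QoS class: lower number = served first.
PRIORITY = {"real-time": 0, "data": 1}


class CellQueue:
    """Output queue of one switch port that serves higher-priority circuits first."""

    def __init__(self, vc_qos: dict[int, str]) -> None:
        self._vc_qos = vc_qos     # QoS class recorded for each VC at call setup
        self._heap: list[tuple[int, int, int, bytes]] = []
        self._arrival = count()   # tie-breaker: FIFO order within a class

    def enqueue(self, vc_id: int, cell: bytes) -> None:
        prio = PRIORITY[self._vc_qos[vc_id]]
        heapq.heappush(self._heap, (prio, next(self._arrival), vc_id, cell))

    def dequeue(self) -> tuple[int, bytes]:
        _, _, vc_id, cell = heapq.heappop(self._heap)
        return vc_id, cell

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = CellQueue({1: "data", 2: "real-time"})
    for vc_id, cell in [(1, b"d1"), (2, b"v1"), (1, b"d2"), (2, b"v2")]:
        queue.enqueue(vc_id, cell)
    print([queue.dequeue() for _ in range(len(queue))])
    # [(2, b'v1'), (2, b'v2'), (1, b'd1'), (1, b'd2')]
```

Strict priority keeps delays low for the real-time circuit, but under sustained real-time load it can starve the data circuit; that trade-off is one reason practical switches need more elaborate control mechanisms.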

In short, ATM technology can smoothly integrate different types of traffic by using virtual circuits to switch small fixed-length packets called cells. In addition, ATM can provide quality of service guarantees to each virtual circuit although this requires sophisticated network control mechanisms whose discussion is beyond the scope of this text.

In summary, we have considered three different switching mechanisms: circuit switching used by the telephone network, datagram packet switching used by the Internet, and virtual circuit switching in ATM networks. Even though ATM was developed primarily as a high-speed (Gbps rates over optical fibers), connection-oriented networking technology that uses 53-byte cells, modern ATM networks accept and can deliver much larger packets. Modern ATM is very flexible and smoothly integrates different types of information and different types of networks that run at different data rates. Thus connectionless communication of large file-transfer packets, for example, can coexist with connection-oriented communication of real-time voice and video. When an ATM switch in a network receives an IP packet (up to 64 kbytes) or an Ethernet packet (1500 bytes), it simply fragments the packet into smaller ATM cells and transmits the cells to the next node. Even though this technology appeared promising, it is being phased out in favor of TCP/IP, which is less costly and more flexible.
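As a worked example of this fragmentation, consider a 1500-byte Ethernet packet, assuming the last cell is zero-padded to a full 48-byte payload and ignoring any extra adaptation-layer overhead:

```latex
\begin{aligned}
N_{\text{cells}} &= \left\lceil \frac{1500}{48} \right\rceil = \lceil 31.25 \rceil = 32 \\
\text{bytes sent} &= 32 \times 53 = 1696 \\
\text{padding} &= 32 \times 48 - 1500 = 36 \\
\text{efficiency} &= \frac{1500}{1696} \approx 0.884
\end{aligned}
```

Under these assumptions roughly 12% of the transmitted bytes (160 bytes of headers plus 36 bytes of padding) carry no user data, the price paid for the predictability of fixed-length cells.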

Public engagement and stakeholder consultation in nuclear decommissioning projects

8.9 Future trends

In a world where the information revolution has made so much data available to so many, public consultation and the involvement of local and national stakeholders will become ever more common. The old industry attitude of ‘decide, announce and defend’ is no longer appropriate in this new age of public awareness; it mars progress and confuses the issue. Well-considered stakeholder consultation is the most effective tool that site operators have when dealing with local politicians, community leaders and pressure groups. This means providing them with valid information about plans and programmes, living up to commitments, and being constantly available to answer questions and hear comments. This approach will become the norm, given that an open flow of reliable information is generally expected these days. At the same time, decommissioning and waste management projects have much more than a local profile, and strong, well-defined links to national energy and nuclear management strategy must be maintained as a top priority in any consultation process.

With the passage of time and the recent tightening of financial constraints, the task of satisfying perceived stakeholder needs and wants can only become more challenging. Clear, new thinking, with due consideration to adaptability, is required if we are to keep the ball rolling in a useful direction while maintaining stakeholder interest. ‘Consultation fatigue’ can also be an issue and must be considered. Most stakeholders give their time freely to the consultation process, and it is important that they are not discouraged by pointless meetings, confusing proposals or simple information overload.

Involving younger people, those whom nuclear decommissioning will affect long into the future, is a major challenge. Social networks such as Facebook and Twitter are new and powerful tools that can be extremely effective in this area. The current ‘younger generation’ are the ones most likely to inherit any nuclear legacy after we, the decision makers, are long gone. It is therefore vital that the important decisions now being made are analysed, documented and archived for the benefit of future generations.

Today, when there is wide acceptance that stakeholder involvement offers positive benefits for substantive, procedural and philosophical reasons, one thing has become starkly clear: the time when exchanges between management authorities and civil society were confined to rigidly imposed, impersonal mechanisms is past.

Risk Management in Gas Networks

Vulnerability 1: Information Systems

Vulnerabilities in information systems are considered critical today. The information revolution has brought big changes to many different aspects of our lives, and IT has transformed many businesses. Nowadays, the world is considered a global village in which even faraway places can communicate with each other within seconds. Information systems are also developing rapidly, and new advances occur every day. These technologies have increased the efficiency of many processes, and most businesses are so dependent on these processes that they cannot function properly without them. In fact, businesses have become so reliant on these technologies that they can be harmed by a wide variety of threats. Gas networks, like other businesses, now face significant security challenges. The wide range of vulnerabilities includes the following:

Manual systems have been widely replaced by information systems, on which gas companies now depend. There are no manual backups for automated processes, so there is no possibility of returning a system to manual operation.

Because of the competitive market, the gas industry rapidly adopts new technologies to reduce costs and increase efficiency. However, the security of these systems is an important concern.

The use of joint systems can be considered a type of vulnerability. For example, many companies are interested in sharing joint systems for their e-commerce, so a problem in one system can spread to the other systems as well, causing huge losses.

Information systems have extended access from the local to the national and international levels; as a result of this wider access, systems are exposed to more electronic vulnerabilities.

Advances in IT allow attackers to strike from almost anywhere, even from home, so it becomes difficult to determine the origin of an attack.

There is intense competition in the software market, so most vendors try to bring their products to market as soon as possible. As a result, many software products lack adequate security features. These insecure systems are exposed to a variety of sophisticated attacks and cannot provide enough security. Updating software systems with frequent security patches is therefore critical.

The gas industry makes wide use of e-commerce and is therefore exposed to a variety of virus attacks. Some antivirus programs are available, but they are reactive, and there are more viruses in the IT environment than these programs can handle.

In the gas industry, most of today’s equipment has been automated in order to increase efficiency. Gas networks are highly reliant on the Internet, intranets, and extranets, and on satellites, fiber-optic cables, microwave links, telephones, and so on. A disruption in one of these systems can even cause a gas network to fail to respond appropriately to customers, because most operations are performed automatically and depend on these communication systems.

This vulnerability has become one of the most important threats to the gas industry, and its risks are rapidly increasing. The wide availability of hacking tools means that more people, even amateurs, are using them. Hackers have also become more professional and better able to exploit vulnerabilities and attack systems.

SURFACE AND INTERFACE PHENOMENA

1.1 Background to Organic Devices

Following the announcement of highly efficient OLEDs by the Kodak group of Tang and VanSlyke in 1987, great strides have been made toward the commercialization of organic-based displays utilizing small molecules as well as polymers. In 1999, Pioneer announced the first commercial display based on the Kodak design, incorporating multilayers of tris(8-hydroxyquinoline) aluminum (Alq3), phenyl diamine (NPB), and copper phthalocyanine (CuPc) [10]. Remarkable commercial potential has also been realized for polymer-based light-emitting devices (PLEDs). The current market for these displays includes, but is not limited to, backlights for liquid crystal displays (LCDs), low-information displays (stereo displays) [10], and small multicolor displays for portable applications.

In addition to electro-optic applications, organic-based devices have shown remarkable progress toward highly efficient solar cells. In 1991, O’Regan and Grätzel demonstrated photovoltaic cells based on organic dye-sensitized colloidal TiO2 films [26]. The organic dye absorbs the light energy, and the excited-state electron is transferred to the conduction band of the colloidal TiO2 film. The dye is then regenerated by electron transfer from a redox species, which is in turn reduced at the counterelectrode. These photovoltaic cells have demonstrated light-to-electrical energy conversion efficiencies as high as 7–12%.

For all organic and organic–inorganic hybrid devices, the properties of the interface between two organic films, or between an organic molecular solid and an inorganic electrode, remain vital to device performance and to understanding device operation. It is well beyond the scope of this chapter to address in detail all of the interfaces in the different organic-based devices. Therefore, this chapter provides an overview of the issues related to organic-based interfaces, with specific examples drawn from interface studies of small molecules used in typical OLEDs. Where appropriate, comparisons will be drawn to polymer-based light-emitting devices. This approach introduces the reader to many of the issues relevant to all organic-based interfaces employed in current and future devices.

The examples in this chapter focus on an OLED design similar to the first commercial displays announced by Pioneer (based on Eastman Kodak’s design) and schematically illustrated in Figure 1a and b, where the molecular films are typically prepared by thermal evaporation onto a room-temperature substrate. Here, electrons are injected from a metal cathode into an electron-transporting organic layer (ETL), and holes are injected from a transparent, conducting anode into a hole-transporting organic layer (HTL). The electrons and holes recombine in the emissive ETL layer, producing light with the characteristic spectrum of the ETL layer (or of its fluorescent or phosphorescent dye dopants). This concept is similar to the early designs employed for polymer OLEDs (sometimes referred to as PLEDs). The molecules and polymers discussed include Alq3, NPB, CuPc, coumarin 6, PPV, and polyfluorene. As we discuss later, recent devices have utilized a number of variations on this design, and no doubt further changes will evolve as the technology matures. Again, this focus will provide the reader with the fundamental interface issues important to all current and future OLED designs. The reader should note that organic-based devices are a rapidly advancing field, and some of the performance numbers will be outdated even as this chapter is published.

Reprinted with permission from C. W. Tang and S. A. VanSlyke, Appl. Phys. Lett. 51, 913 (1987). © 1987 American Institute of Physics.

A tipping point in the information revolution

A long time ago, in a country far, far away(*), one Melvin Conway made the sociological observation that the structure of IT solutions in an organisation followed the structure of the organisation. Frederick Brooks, in his seminal book The Mythical Man-Month, christened it Conway’s Law, and the name stuck. I think that Conway’s Law, and what is happening to it, is a sign that our ‘information revolution’ is experiencing a tipping point.

In short, what Conway observed was that if — for example — an organisation had separate accounting and reporting departments, it had separate accounting and reporting IT solutions. But if — for instance — it had a combined accounting-and-reporting department, it had a combined accounting-and-reporting IT solution. In other words: the structure of IT followed the structure of the organisation.

This was generally true 50 years ago. But it is changing now, and this change suggests we have reached a tipping point in the ongoing information revolution. Let me explain.

What have we done?

If you look at the last 50 years, we have added an enormous amount of ‘logical behaviour’ to our societies (and thus to the world).

Before computers, logical behaviour was extremely rare in the world. We humans were able to do some, but our power to do it is puny compared to that of computers. We are, however, (probably) unique in nature in actually being able to do it at all, and it defines how our behavioural intelligence differs from that of other species. At least partly as a result, we are the most successful complex organism on earth, and the one that has been able to colonise the widest range of niches, from cold to hot, dry to wet, low to high, etc.

So it is not that strange that, when super-fast machine logic appeared in the 1940s, we believed it would enable us to make truly intelligent machines. After all, we believed (and most of us still do) that the essence of our intelligence is logic. That idea has, as far as I’m concerned, been pretty much debunked, whatever advances (both real and imagined) are being made in digital AI or analytics. You can read more about it in this article: Something is (still) rotten in Artificial Intelligence. But that is not the issue of this post.

The issue of this post is that — regardless of that logic being ‘intelligent’ or not — in roughly half a century, we have added an unprecedented and unbelievable volume of it to the world. And given the fundamental properties of such logic(**), this is having more and more of an effect on human society, and through human society the world.

One way a key property of logic shows itself is that large complex logical landscapes (that is us, these days) are increasingly hard to change. Large transformations in/with IT are slow, costly, and often fail. Why? Why is it that instead of getting better and better at it, we seem to get slower and slower? Because, contrary to what is often said: change is not just speeding up, it is — in the reality of organisations — often also slowing down. Changing a core IT system in your landscape takes more time and money than building a large new building. Change in IT is hard. (***)

But what makes it so hard?

Three things make it hard: (1) the sheer volume of interconnected logic; (2) the limitations of us humans when working with logic — we’re better at frisbee; and (3) the brittleness of logic.

The brittleness of logic

To start with the latter, logic, for all its strong points, has one major Achilles’ heel: it is brittle. Logic is extremely sensitive to correct inputs. Where we humans can work very well with estimates and approximations, logical machinery can’t. One wrong bit and the whole thing may not work or can even come crashing down. A little broken often means completely broken. Or, if we move over to a security example: one small vulnerability and the criminal is ‘in’.

The complexity of dependencies between all those brittle parts is more than we humans can handle. And to top it off, although we are the best logicians of all species on earth, we humans are actually pretty bad at logic. We create that logic, but we cannot foresee every combination and we make errors. Lots of them.

And no, digital computers are not going to help us out on this one. Digital computers are ultimately rule-based machines, even when the rules are hidden from view. In very short: the problem is that there are no rules to decide which rules to use. And while we can do some things to help us out here (e.g. statistics), the solutions are inefficient (look, for example, at the large energy cost of machine learning in particular) and remain brittle.

So, given that the brittleness of (machine) logic has been with us for quite a while, what do we normally do to manage the problems of using logic?

We add more logic!

In other words: to address those troublesome effects of having huge amounts of interconnected, brittle logic, we add more (interconnected, brittle) logic. With its own additional brittleness of course. There is a whiff of a dead end here.

In the ‘digital enterprise’ only a (small) part of all that machine logic is in the end about the primary function such as ‘paying a pension’ or ‘selling a book’. Most of it is about (a) keeping the whole logical edifice from falling apart or down in one way or another, and (b) remaining able to change it.

In control?

Summarising: the volume of brittle machine logic in the world has ballooned. And the whole landscape of interdependent logical behaviour is too complex for us humans to be completely in control of it. For years we have seen larger and larger top-down waterfall-like approaches (of which orthodox enterprise architecture is a part) to ‘change’ in digital landscapes, but that approach is now dying.

What we see happening instead are bottom-up approaches to address the problem, such as Agile/DevOps. This is not entirely new. Software engineering approaches such as object-orientation (which was supposed to fix the same problem for us at the application level in the 1980s) or service-oriented architecture (which was supposed to fix the same problem for us at the application-landscape level in the 1990s) have long shown a tendency towards bottom-up abstraction as a way to handle interconnected brittle complexity.

And now, these software-engineering born, bottom-up approaches have reached the level of the human organisation. The volume of IT — of machine logic — has become so large, and the inertia (‘resistance to change’) of all that interconnected brittleness has become so large, that we humans have started to adapt to the logic instead of trying to adapt the logic to us. After all, we’re the ones with flexible and robust behaviour.

Case in point: we increasingly organise ourselves in Agile/DevOps teams around IT solutions with ‘Product Owners’. We then combine various product teams into organisational entities called ‘Value Streams’ (e.g. in a leading agile framework called SAFe). In other words, instead of having IT solutions reflect the organisation (Conway’s Law), more and more we now have the human organisation reflect the structure of IT.

In a slide from my workshop:

So, whereas it used to be “business purpose leads to business structure leads to IT structure”, more and more we are seeing “business purpose leads to IT structure leads to business structure”. Inverse Conway’s Law.

This is what the buzzword phrases ‘digital enterprise’ and ‘digital transformation’ stand for: it is us (flexible) humans adapting to the weight and inertia of the massive amounts of (inflexible) brittle machine logic that we have brought into the world. The IT does not just adapt to our wishes; we adapt to the demands of using all that IT. A true tipping point. And the switch from Conway’s Law to Inverse Conway’s Law illustrates it.

What next?

What we clearly haven’t adapted to yet is the effect the new ‘primacy of logical behaviour’ is having on our social structures. As with the industrial revolution in the end spawning political movements to curb its excesses, something like that still has to happen with respect to the information revolution. [update Feb 15 2020 based on the comment by Erwin: excesses not necessarily stemming from the information inertia itself/alone].

Three questions are in my mind about that. (1): how bad will the excesses (already visible) become before we act? (2): will we be able to act (sensibly) given our human limitations? And (3): will there be enough time to curb the excesses before the excesses enable or result in irreparable damage?

[Update 15/Feb/2020] After reading several comments, I feel the need for a small update to correct some interpretations of my writings I have seen people make.

The first addition has to do with the role of all that IT, of the ‘information inertia’ effect. I do not argue that IT is driving our world instead of us humans. We are still driving it and making the choices. I think one could best compare the changed situation to skiing a slalom with a weight attached by a short string to one’s waist. The speed has gone up because of the weight. But the inertia of the weight (all that IT) makes it harder to be agile. The weight does not have an active ‘will’, though. The human now has to manipulate the weight (which is hard) in order to do the slalom.

Expanding the notion:

What I am describing, therefore, is not the (IT) tail wagging the (business) dog, just as the weight in the slalom example is not doing the slalom. But the human doing the slalom has to adapt to the inertia of large complex IT, and changing direction thus becomes harder.

Secondly, I use the notion of Value Stream as an IT-driven business structure. The notion of Value Stream is originally much older and predates massive IT. But in SAFe, it is a kind of roll-up of (IT) product teams, and so more bottom-up. That is logical, because the ‘value’ in Value Stream comes more and more from what IT does. [/Update 15/Feb/2020]


(*) 1968, the US (paraphrasing this one, of course)

The information revolution

We can characterize the period after World War Two as the «Scientific-Technical Revolution» (STR). Its essence is that science (or rather knowledge in general) becomes the leading factor of production (as opposed to «land» during the agricultural revolution, or industrial «factors of production» such as iron, coal, oil, labor-power, etc., during the Industrial Revolution). Hence the term «knowledge economy» is applied to the modern economy.

The main aspect of the STR today is the information revolution. According to a 2003 RAND report, «The information revolution is not the only technology-driven revolution under way in the world today, merely the most advanced. Advances in biotechnology and nanotechnology, and their synergies with IT, should also change the world greatly over the course of the 21st century».

We divide this presentation on the information revolution into the following sections:

I. What is «information»?

II. Brief history of information technologies (IT).

III. Trends in the development of computers.

IV. The Internet.

V. Social consequences of the IT revolution.

VI. The Information Revolution in the ex-USSR.

I. What is «information»?

The word comes from the Latin «informatio», meaning «explanation», «clarification», «exposition». The product of explaining, clarifying, or expounding something was called «an information».

«According to the Oxford English Dictionary, the earliest historical meaning of the word information in English was the act of informing, or giving form or shape to the mind, as in education, instruction, or training. A quote from 1387: “Five books come down from heaven for information of mankind.” It was also used for an item of training, e.g. a particular instruction. “Melibee had heard the great skills and reasons of Dame Prudence, and her wise informations and techniques.” (1386)» (Wikipedia)

So, the etymological definition of information is that which gives shape to the mind.

In the 1950s, the French physicist Léon Brillouin defined information as «negative entropy». Entropy means «a measure of disorder that exists in a system». Hence «negative entropy» would mean a measure of order in a system, i.e. how well it is organized, how well it is structured. For example, if a course (book, site) is informative, it removes a great deal of disorder from the minds of its users.

So, in physical terms, information is a measure of how well a system is organized.

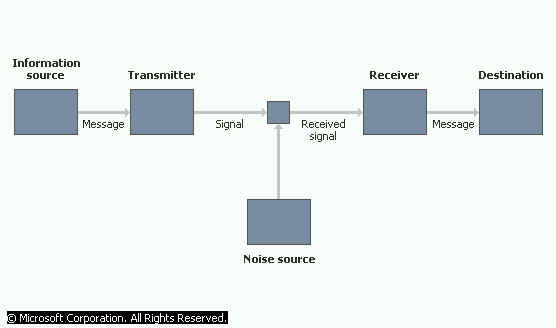

In the 1940s, the American electrical engineer Claude Shannon presented a theory of information summarized by the picture on the right. Here we see an information source transmitting a signal via a transmitter. For example, this can be a star transmitting a ray of light. The ray of light then travels through space, where it meets various obstacles and sources of interference, such as massive stars, gaseous formations, etc. These are «sources of noise». Then the ray of light is captured by a receiver, for example a telescope. Finally, it arrives at our eye, which is its «destination».

So, it appears that Shannon’s understanding of information is a signal that travels from «information source» towards some «destination», meeting several kinds of interferences on its way.

In the 1960s, the Soviet academician Viktor Glushkov defined information as: 1) the degree to which matter-energy is distributed unevenly in the time-space continuum, or how matter-energy is distributed in the time-space continuum; 2) the measure of change that accompanies all processes in the world, or how much something has changed and what kind of quantitative or qualitative changes have taken place.

So, information is a measure of change, or development, of a system.

A 1975 Soviet philosophical dictionary states that information is a measure of the organization of systems; it is a negation of uncertainty, a reflection of the processes in nature.

Microsoft «Encarta ’97» encyclopedia defines information as: 1) «a collection of facts or data»; 2) «a non-accidental signal used as input into a communications system».

So, information here is that which models, or shapes, a matter/energy complex.

The 2003 Russian encyclopedia «Cyril & Methodius» defines information as data that was initially transmitted between people, either orally or through various signals. Since the second half of the XX century, information has been transmitted between machines and people, and between machines. Information also includes signals exchanged between organisms, such as plants and animals.

So, information is data which is transmitted between various entities.

«Encarta 2004 Dictionary» defines information as «computer data that has been organized and presented in a systematic fashion to clarify the underlying meaning». Here, information is data organized to explain the meaning.

I understand «information» as the raw material of knowledge. When information is processed, it is «enriched» (like some atoms) and produces knowledge. One form of knowledge may in turn serve as «raw material» for yet another inquiry, and thus presents itself as «information».

II. Brief history of information technologies

The nature of the media used to process information influences who handles information, and hence what information is and what effect it has on society.

1) The Premechanical Age: from the Stone Age to 1450 A.D.

Homer from the British Museum

Initially, information was spread by word of mouth. The oral tradition gave rise to poems such as the «Iliad» and the «Odyssey» (the picture on the right: Homer).

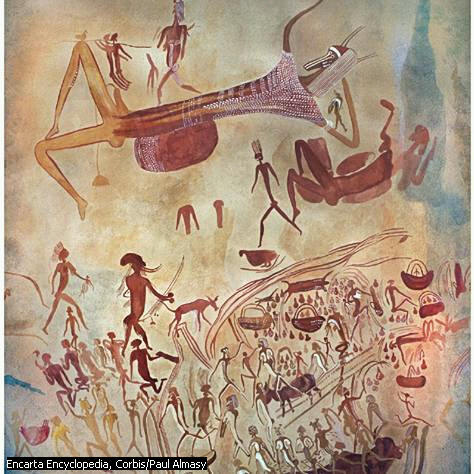

A cave painting

Alongside the oral tradition, cave painting developed. On the left is a cave painting by the San people, in what is today Zimbabwe.

Note that earlier forms of information technology do not die: they continue to live, although relegated to a secondary place. The same applies to any developing phenomenon.

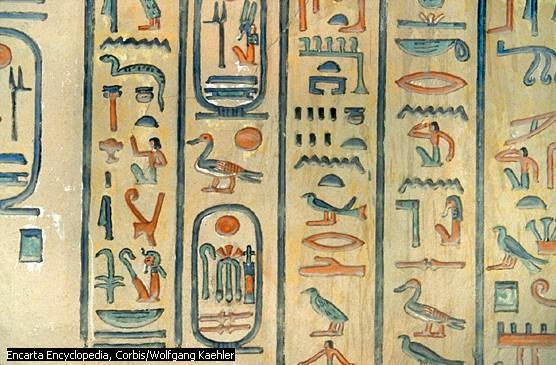

Pictorial writing developed from primitive paintings. The most famous example is Egyptian hieroglyphs.

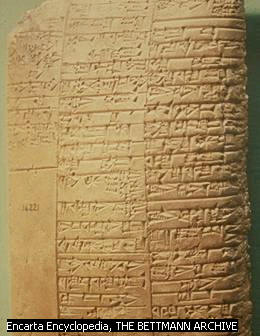

One of the earliest systems of writing was developed by the Sumerians more than 5,000 years ago. They wrote on stone and clay tablets in a script called «cuneiform» (on the left).

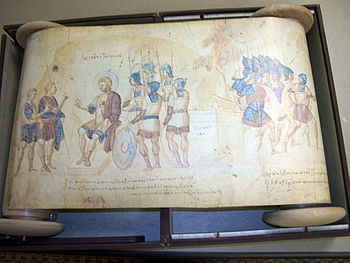

A scroll from the Byzantine Empire, 10th century

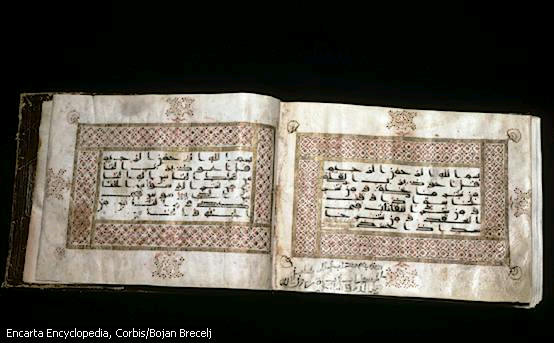

A Qur’an written on parchment

Parchment, a writing material made from animal skin, was invented in the city of Pergamum, in modern-day Turkey. Sheets of parchment were then bound together for the first time to form a prototype of the modern book. In the photo, we see a Qur’an dating from the 8th-9th century, written on parchment. This medium is more durable than papyrus.

A replica of the Gutenberg press

Johannes Gutenberg (1398-1468) invented the printing press in Europe. He was originally trained as a goldsmith. «Encarta 2004» writes: «in about 1450, Gutenberg formed another partnership, with the German merchant and moneylender Johann Fust, and set up a press on which he probably started printing the large Latin Bible associated with his name». Thus, book printing arose from a partnership between an artisan and a capitalist. We notice a similar partnership behind another key invention of the Industrial Revolution, the steam engine: James Watt was a mechanic, and Matthew Boulton was a capitalist.

The discovery of ways to harness electricity was the key advance of the electromechanical age.

Early models of the telephone include Edison’s 1879 wall-mounted phone (left), the candlestick design common in the 1920s and 1930s (bottom), and a 1937 “cradle” telephone

The telephone was invented in 1876, in the United States, by Alexander Graham Bell.

According to Kathleen Guinee, writing in «A Journey through the History of Information Technology»: «Through the first half of the century, having a private telephone line run into your house was expensive and considered a luxury. Instead of a private line, many people had what are called “party lines.” A party-line was a phone line shared by three different households. An extension would be placed in each house, and if another person who shared the line was on the phone when you wanted to make a call, you would have to ask them to please get off. As the century continued into its second half, telephone technology became less expensive for the consumer».

Alexander Popov demonstrating his radio in 1895

After the telephone, the next step in the development of information technologies was the radio. According to Western sources, such as the «Encarta» encyclopedia, the Italian inventor Guglielmo Marconi, the son of a wealthy landowner, experimenting on his own against his father's wishes, invented the radio (or «wireless telegraphy», as it was then called). In 1896 he transmitted a signal over a distance of 1.6 km. Improvements followed, the most famous case being the «Titanic» sending «SOS» distress signals by radio in 1912. However, according to Russian sources, such as the 2003 «Cyril and Methodius» encyclopedia, the radio was invented by A.S. Popov, who in 1895 transmitted signals over a distance of 600 m.

Television sets from the 1950s

The next step was television. After numerous experiments in the 1920s and 1930s, public television broadcasting started in London in 1936 and in the United States in 1939, but it was interrupted by World War II. Television reached the mass public in the 1950s: by 1955, 65% of American homes had a TV set.

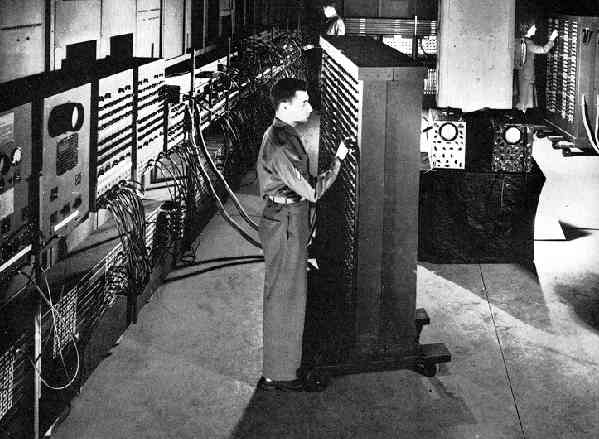

The Electronic Numerical Integrator and Computer (ENIAC) was assembled in 1946. It used vacuum tubes, rather than mechanical devices, to do its calculations, which makes it the first general-purpose electronic computer. The people behind it were the mathematician John von Neumann, the physicist John Mauchly and the electrical engineer J. Presper Eckert, as well as the U.S. government, in particular the U.S. Army.

An IBM PC in a German school in the 1980s

In 1981 the IBM PC appeared. Apple and IBM computers began to compete with each other: Apple's machines were more aesthetic, while IBM, as the market monopolist, had more useful applications written for its computers.

III. Tendencies in development of computers

A second tendency of modern computers is the constant increase in the amount of information stored. My first notebook computer, a «Packard Bell» purchased in 1995, had a 400 MB HDD. A Toshiba Satellite purchased in 2000 had a 2 GB HDD. A Toshiba Satellite purchased in 2003 had a 60 GB HDD, and the same model in 2004 featured 120 GB of storage. A Toshiba Satellite purchased in 2013 had 700 GB of storage.
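These storage figures can be turned into an average annual growth rate and a doubling time. The calculation below is a rough sketch: it assumes decimal units (1 GB = 1,000 MB, as drive manufacturers count), treats the 1995 and 2013 notebooks as comparable, and smooths the growth into a constant compound rate, which the real history certainly was not. The helper names are hypothetical.

```python
import math

# Hard-drive sizes of the notebooks listed above, in MB (decimal units assumed: 1 GB = 1000 MB).
drives = {1995: 400, 2000: 2_000, 2003: 60_000, 2004: 120_000, 2013: 700_000}

def annual_growth(start_year: int, end_year: int) -> float:
    """Compound annual growth factor of capacity between two entries of `drives`."""
    ratio = drives[end_year] / drives[start_year]
    return ratio ** (1 / (end_year - start_year))

def doubling_time(growth: float) -> float:
    """Years needed to double capacity at a constant annual growth factor."""
    return math.log(2) / math.log(growth)

g = annual_growth(1995, 2013)
print(f"1995-2013: x{drives[2013] / drives[1995]:.0f} overall, "
      f"about {100 * (g - 1):.0f}% per year, doubling every {doubling_time(g):.1f} years")
```

For the 1995 and 2013 machines this gives a 1,750-fold increase, roughly 51% per year, or a doubling about every 1.7 years.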

A third tendency is the development of multi-functionality. For example, a modern laser printer is also a scanner and a copier. This tendency suggests that computers are developing towards robots, towards active artificial intelligence.

A fourth tendency is a decrease in size and hence an increase in mobility. Users are switching from desktop models to notebooks. Palm computers have appeared; they are a fusion of computers, cell phones and video cameras. However, the keyboard of a palm computer is not very comfortable for text processing, so computers of «palm» size will require voice input of information. The screen of modern «palm» computers is also too small. One answer to this problem may be a flexible electronic screen. Another possibility is combining a «palm» with a multimedia projector producing 3-D images. For this, we will need to improve battery performance, perhaps by using different materials for storing electricity. Finally, the camera in a palm computer does not produce very good images. Perhaps biotechnology could be used to produce a device small enough to fit into a «palm», but with the quality of an eye.

If we follow the methodology proposed above, dividing information technologies according to the type of media used as carriers of information (the pre-mechanical, mechanical, electromechanical and electronic eras), and remember that the scientific and technological revolution is tending towards nanotechnology, then we may suppose that the future of information technologies lies in the molecular and atomic realm. For example, Seymour Cray, the inventor of the supercomputers of the 1970s and 80s, said in an interview on May 9, 1995:

«My view is that as machines become faster and faster they have to become smaller and smaller because we have basic communications limitations which are speed of light communication. If I extrapolate as I did at this workshop, the sizes that we have now which are in the micrometer range, to what we should be doing to accomplish the goals twenty years hence, we have to be in the nanometer dimensions. Well nanometer devices are the kinds of things that proteins are made of. They are molecular sized devices and if one lets one’s mind run on the subject and one thinks “well, we’re going to build computers in this time frame that are molecular in size”, then here we are suddenly faced with the same dimensions and structures as we have in biological molecules».

The future computer will not be a «mechanism» but an organism. It will possess artificial intelligence, enabling it, for example, to understand live speech. It will possess «an eye», for example, enabling it to «see» and take pictures. It will have some means enabling it to move about, for example artificial legs, and it will possess «arms», enabling it to perform a multiplicity of tasks, from cleaning a dwelling to loving us. And of course it will have a variety of means of communication, both with humans and other robots, such as Wi-Fi.

IV. The Internet

Information on computers is shared via the Internet. «This first network was called Arpanet. It was constructed in 1969 by building links between four different computer sites: UCLA, Stanford Research Institute, UC-Santa Barbara, and the University of Utah in Salt Lake City» (Kathleen Guinee, «A Journey through the History of Information Technology», 1995). Organizationally, the Internet was promoted by the U.S. government, specifically the Department of Defense, rather than by private capitalists, as had been the case with the key inventions of the Industrial Revolution.

The person who transformed the Internet from a scholarly network into today's World Wide Web (WWW) is Timothy Berners-Lee. His background is worth noting as we try to understand who the modern revolutionaries are: «I am the son of mathematicians. My mother and father were part of the team that programmed the world’s first commercial, stored-program computer, the Manchester University ‘Mark I,’ which was sold by Ferranti Ltd. in the early 1950s».

It is also instructive to see how the WWW was invented. In Berners-Lee's own words: «Inventing the World Wide Web involved my growing realization that there was a power in arranging ideas in an unconstrained, weblike way. And that awareness came to me through precisely that kind of process. The Web arose as the answer to an open challenge, through the swirling together of influences, ideas, and realizations from many sides, until, by the wondrous offices of the human mind, a new concept jelled. It was a process of accretion, not the linear solving of one well-defined problem after another».

The WWW was designed by Timothy Berners-Lee with the goal of sharing knowledge, not selling it: «The idea was that everybody would be putting their ideas in, as well as taking them out». Or: «the Web initially was designed to be a space within which people could work on an expression of their shared knowledge».

«Intercreativity» is the key to the present and the future of the Internet, and of learning in general. The most remarkable sites are «intercreative», i.e. created by the community of users. These include Wikipedia and YouTube. Learning in general, if it is to be real learning, i.e. something new for both the teachers and the students, should be intercreative, with teachers and students constantly creating something new for each other. Intercreativity is implicitly present in computer games, but it can and should be present in more serious learning.

The Internet came into our homes in the late 1990s. «One survey found that there were 61 million Internet users worldwide at the end of 1996, 148 million at the end of 1998, and an estimated 320 million in 2000.» In 2006, there were over 1 billion Internet users worldwide. In terms of the share of the population online, the U.S. comes first, with 59% of its population, or 175 million people, using the Net. Second place is occupied by the European Union, with 50% of its population, or 233 million people, using the Net. In China, almost 10% of the population, or 111 million people, use the Net. Latin American countries have the fastest-growing «Internet population»: the number of users there has increased by 70% compared with 2005, reaching 70 million people. There are 315 million Internet users in the Asia-Pacific region. In Ukraine, a republic of the former USSR, 3 million people were using the Internet out of a population of around 43 million, i.e. about 7% of the population. More than half of them were in Kiev, the capital.
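Shares and absolute numbers like these are linked by simple arithmetic (share = users / population), and the survey figures also give a growth rate. The sketch below uses only the numbers quoted above; it assumes smooth compound growth between survey years, and the helper names are hypothetical.

```python
def penetration(users_millions: float, population_millions: float) -> float:
    """Share of the population online, as a percentage."""
    return 100 * users_millions / population_millions

def yearly_growth(users_start: float, users_end: float, years: int) -> float:
    """Average compound annual growth of the user base, as a percentage."""
    return 100 * ((users_end / users_start) ** (1 / years) - 1)

# Ukraine: 3 million users out of roughly 43 million people.
print(f"Ukraine: {penetration(3, 43):.1f}% online")
# Worldwide users from the survey quoted above (millions): 1996 -> 1998 -> 2000.
print(f"1996-1998: {yearly_growth(61, 148, 2):.0f}% per year")
print(f"1998-2000: {yearly_growth(148, 320, 2):.0f}% per year")
```

Ukraine comes out at about 7%, and the worldwide user base grew by roughly 56% a year between 1996 and 1998 and roughly 47% a year between 1998 and 2000.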

So, one tendency is growing Internet population world-wide.

Another tendency is for the Internet to become faster, moving from «dial-up» to «broadband» connections. In 2004 «The New York Times» wrote: «These days, when the Internet teems with complex Web sites and oversized files for downloading, broadband is no longer a luxury: it’s a necessity. The need to get high-speed access to rural areas is analogous to the rural electrification project that began to transform America in the late 1930’s.»

The Internet also tends to go wireless, e.g. via Wi-Fi and satellites. In 2002, there were 10 million users of wireless Internet in the U.S., most of them aged between 18 and 34. We can therefore expect the Internet to become accessible in any part of the globe, including the oceans, the deserts, the forests, and outer space.