How to install cuda

sithu31296/CUDA-Install-Guide

CUDA Install Guide

This is a must-read guide if you want to set up a new Deep Learning PC. It covers the installation of the NVIDIA driver, CUDA Toolkit, cuDNN, TensorRT and PyCUDA.

The Debian installation method is recommended for the CUDA Toolkit, cuDNN and TensorRT.

For PyTorch, CUDA 11.0 and CUDA 10.2 are recommended.

For TensorFlow, versions up to CUDA 10.2 are supported.

TensorRT is not yet supported on Ubuntu 20.04, so Ubuntu 18.04 is recommended.

Install NVIDIA Driver

On Windows, Windows Update automatically installs and updates the NVIDIA driver.

Check latest and recommended drivers:

Install recommended driver automatically:

Or install a specific driver version using:

Verify the Installation

After reboot, verify using:
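The verification command is not reproduced above. NVIDIA drivers ship the nvidia-smi utility, which lists the driver version and the detected GPUs. The sketch below wraps it in a guard so that it prints a hint instead of failing when the driver is absent; the function name check_driver is only illustrative.

```shell
# Print driver and GPU information if the driver utility is available.
check_driver() {
  if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi
  else
    echo "nvidia-smi not found: the NVIDIA driver may not be installed"
  fi
}

check_driver
```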

Install CUDA Toolkit

The Windows exe installer for the CUDA Toolkit automatically adds the CUDA Toolkit environment variables, so Windows users can skip the following section.

Before the CUDA Toolkit can be used on a Linux system, you need to add the CUDA Toolkit path to the PATH variable.

Open a terminal and run the following command.

In addition, when using the runfile installation method, you also need to set the LD_LIBRARY_PATH variable.

For a 64-bit system:

For a 32-bit system:

Note: The above paths change when using a custom install path with the runfile installation method.
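The commands for this section are not shown above. As a minimal sketch, assuming the toolkit lives under the default prefix /usr/local/cuda (an assumption; substitute your own path if you chose a custom or versioned install directory), the two variables could be set like this:

```shell
# Assumed install prefix; adjust for a custom or versioned install path.
CUDA_HOME=/usr/local/cuda

# Prepend the toolkit's bin directory to PATH, keeping any existing entries.
export PATH="${CUDA_HOME}/bin${PATH:+:${PATH}}"

# Runfile installs only: expose the libraries as well.
# Use lib64 on a 64-bit system and lib on a 32-bit system.
export LD_LIBRARY_PATH="${CUDA_HOME}/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}"
```

To make the change persistent, the same lines can be appended to a shell startup file such as ~/.bashrc.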

Verify the Installation

Check the CUDA Toolkit version with:
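The command itself is missing here. The toolkit's compiler driver, nvcc, reports its release with the --version flag. A guarded sketch (the function name check_nvcc is illustrative) that also hints at the most common cause of failure:

```shell
# Report the toolkit version if nvcc is on PATH; otherwise explain why not.
check_nvcc() {
  if command -v nvcc >/dev/null 2>&1; then
    nvcc --version
  else
    echo "nvcc not found: check that the CUDA bin directory is on PATH"
  fi
}

check_nvcc
```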

Install cuDNN

The NVIDIA CUDA Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks. cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, pooling, normalization and activation layers.

Choose "cuDNN Library for Windows (x86)" and download it. (That is the only one available for Windows.)

Extract the downloaded zip file to a directory of your choice.

Copy the following files into the CUDA Toolkit directory.

Download the 2 files named as:

for your installed OS version.

Then, install the downloaded files with the following command:

Install TensorRT

TensorRT is meant for high-performance inference on NVIDIA GPUs. TensorRT takes a trained network, which consists of a network definition and a set of trained parameters, and produces a highly optimized runtime engine that performs inference for that network.

Download the "TensorRT 7.x.x for Ubuntu xx.04 and CUDA xx.x DEB local repo package" that matches your OS version, CUDA version and CPU architecture.

Then install with:

If you plan to use TensorRT with TensorFlow, also install:

Verify the Installation

You should see packages related to TensorRT.
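The verification command is not reproduced above. On a Debian-based system, one way to perform this check is to filter the list of installed packages, as sketched below. The function name list_tensorrt_packages is illustrative, and the case-insensitive match is a choice made for this sketch.

```shell
# List installed packages whose name or description mentions TensorRT.
list_tensorrt_packages() {
  if ! command -v dpkg >/dev/null 2>&1; then
    echo "dpkg not found: this check applies to Debian-based systems"
    return 0
  fi
  dpkg -l | grep -i tensorrt || echo "no TensorRT packages installed"
}

list_tensorrt_packages
```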

To upgrade, download and install the new version as if it were a fresh installation. You don't need to uninstall your previous version.

Install PyCUDA

PyCUDA is used within Python wrappers to access NVIDIA's CUDA APIs.

Install PyCUDA with:

If you want to upgrade PyCUDA for the newest CUDA version, or if you change the CUDA version, you need to uninstall and reinstall PyCUDA.

For that purpose, do the following:

About

Installation guide for NVIDIA driver, CUDA, cuDNN and TensorRT

How to install cuda

Minimal first-steps instructions to get CUDA running on a standard system.

1. Introduction

This guide covers the basic instructions needed to install CUDA and verify that a CUDA application can run on each supported platform.

These instructions are intended to be used on a clean installation of a supported platform. For questions which are not answered in this document, please refer to the Windows Installation Guide and Linux Installation Guide.

The CUDA installation packages can be found on the CUDA Downloads Page.

2. Windows

When installing CUDA on Windows, you can choose between the Network Installer and the Local Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. For more details, refer to the Windows Installation Guide.

2.1. Network Installer

Perform the following steps to install CUDA and verify the installation.

2.2. Local Installer

Perform the following steps to install CUDA and verify the installation.

2.3. Pip Wheels

NVIDIA provides Python Wheels for installing CUDA through pip, primarily for using CUDA with Python. These packages are intended for runtime use and do not currently include developer tools (these can be installed separately).

Please note that with this installation method, the CUDA installation environment is managed via pip, and additional care must be taken to set up your host environment to use CUDA outside the pip environment.

2.4. Conda

The Conda packages are available at https://anaconda.org/nvidia.

To perform a basic install of all CUDA Toolkit components using Conda, run the following command:

To uninstall the CUDA Toolkit using Conda, run the following command:

3. Linux

CUDA on Linux can be installed using an RPM, Debian, Runfile, or Conda package, depending on the platform being installed on.

3.1. Linux x86_64

For development on the x86_64 architecture. In some cases, x86_64 systems may act as host platforms targeting other architectures. See the Linux Installation Guide for more details.

3.1.1. Redhat / CentOS

When installing CUDA on Redhat or CentOS, you can choose between the Runfile Installer and the RPM Installer. The Runfile Installer is only available as a Local Installer. The RPM Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. In the case of the RPM installers, the instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.1.1.1. RPM Installer

Perform the following steps to install CUDA and verify the installation.

On RHEL 7 Linux only, execute the following steps to enable optional repositories.

3.1.1.2. Runfile Installer

Perform the following steps to install CUDA and verify the installation.

3.1.2. Fedora

When installing CUDA on Fedora, you can choose between the Runfile Installer and the RPM Installer. The Runfile Installer is only available as a Local Installer. The RPM Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. In the case of the RPM installers, the instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.1.2.1. RPM Installer

Perform the following steps to install CUDA and verify the installation.

3.1.2.2. Runfile Installer

Perform the following steps to install CUDA and verify the installation.

3.1.3. SUSE Linux Enterprise Server

When installing CUDA on SUSE Linux Enterprise Server, you can choose between the Runfile Installer and the RPM Installer. The Runfile Installer is only available as a Local Installer. The RPM Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. In the case of the RPM installers, the instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.1.3.1. RPM Installer

Perform the following steps to install CUDA and verify the installation.

3.1.3.2. Runfile Installer

Perform the following steps to install CUDA and verify the installation.

3.1.4. OpenSUSE

When installing CUDA on OpenSUSE, you can choose between the Runfile Installer and the RPM Installer. The Runfile Installer is only available as a Local Installer. The RPM Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. In the case of the RPM installers, the instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.1.4.1. RPM Installer

Perform the following steps to install CUDA and verify the installation.

3.1.4.2. Runfile Installer

Perform the following steps to install CUDA and verify the installation.

3.1.5. Pip Wheels

NVIDIA provides Python Wheels for installing CUDA through pip, primarily for using CUDA with Python. These packages are intended for runtime use and do not currently include developer tools (these can be installed separately).

Please note that with this installation method, the CUDA installation environment is managed via pip, and additional care must be taken to set up your host environment to use CUDA outside the pip environment.

The following metapackages will install the latest version of the named component on Linux for the indicated CUDA version. "cu11" should be read as "cuda11".

These metapackages install the following packages:

3.1.6. Conda

The Conda packages are available at https://anaconda.org/nvidia.

To perform a basic install of all CUDA Toolkit components using Conda, run the following command:

To uninstall the CUDA Toolkit using Conda, run the following command:

3.1.7. WSL

These instructions must be used if you are installing in a WSL environment. Do not use the Ubuntu instructions in this case.

When installing using the local repo:

When installing using the network repo:

Pin file to prioritize CUDA repository:

3.1.8. Ubuntu

When installing CUDA on Ubuntu, you can choose between the Runfile Installer and the Debian Installer. The Runfile Installer is only available as a Local Installer. The Debian Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. In the case of the Debian installers, the instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.1.8.1. Debian Installer

Perform the following steps to install CUDA and verify the installation.

3.1.8.2. Runfile Installer

Perform the following steps to install CUDA and verify the installation.

3.1.9. Debian

When installing CUDA on Debian 10, you can choose between the Runfile Installer and the Debian Installer. The Runfile Installer is only available as a Local Installer. The Debian Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. For more details, refer to the Linux Installation Guide.

3.1.9.1. Debian Installer

Perform the following steps to install CUDA and verify the installation.

3.1.9.2. Runfile Installer

Perform the following steps to install CUDA and verify the installation.

3.2. Linux POWER8

For development on the POWER8 architecture.

3.2.1. Ubuntu

When installing CUDA on Ubuntu on POWER8, you must use the Debian Installer. The Debian Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. The instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.2.1.1. Debian Installer

Perform the following steps to install CUDA and verify the installation.

3.2.2. Redhat / CentOS

When installing CUDA on Redhat on POWER8, you must use the RPM Installer. The RPM Installer is available as both a Local Installer and a Network Installer. The Network Installer allows you to download only the files you need. The Local Installer is a stand-alone installer with a large initial download. The instructions for the Local and Network variants are the same. For more details, refer to the Linux Installation Guide.

3.2.2.1. RPM Installer

Perform the following steps to install CUDA and verify the installation.

3.2.3. Conda

The Conda packages are available at https://anaconda.org/nvidia.

To perform a basic install of all CUDA Toolkit components using Conda, run the following command:

To uninstall the CUDA Toolkit using Conda, run the following command:

How to install cuda

The installation instructions for the CUDA Toolkit on Linux.

1. Introduction

This guide will show you how to install and check the correct operation of the CUDA development tools.

1.1. System Requirements

The CUDA development environment relies on tight integration with the host development environment, including the host compiler and C runtime libraries, and is therefore only supported on distribution versions that have been qualified for this CUDA Toolkit release.

The following table lists the supported Linux distributions. Please review the footnotes associated with the table.

(2) Note that starting with CUDA 11.0, the minimum recommended GCC compiler is at least GCC 6 due to C++11 requirements in CUDA libraries e.g. cuFFT and CUB. On distributions such as RHEL 7 or CentOS 7 that may use an older GCC toolchain by default, it is recommended to use a newer GCC toolchain with CUDA 11.0. Newer GCC toolchains are available with the Red Hat Developer Toolset. For platforms that ship a compiler version older than GCC 6 by default, linking to static cuBLAS and cuDNN using the default compiler is not supported.

(3) Minor versions of the listed compilers (GCC, ICC, NVHPC and XLC) are supported as host compilers for nvcc.

1.2. OS Support Policy

Refer to the support lifecycle for these supported OSes to know their support timelines and plan to move to newer releases accordingly.

1.3. About This Document

This document is intended for readers familiar with the Linux environment and the compilation of C programs from the command line. You do not need previous experience with CUDA or experience with parallel computation. Note: This guide covers installation only on systems with X Windows installed.

2. Pre-installation Actions

2.1. Verify You Have a CUDA-Capable GPU

To verify that your GPU is CUDA-capable, go to your distribution’s equivalent of System Properties, or, from the command line, enter:

If you do not see any settings, update the PCI hardware database that Linux maintains by entering update-pciids (generally found in /sbin) at the command line and rerun the previous lspci command.
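The lspci command referred to above is not reproduced in this copy. A common way to run the check is to filter the lspci output for NVIDIA devices, sketched here with a guard for systems where lspci is not installed (the function name list_nvidia_devices is illustrative):

```shell
# List PCI devices whose description mentions NVIDIA (case-insensitive).
list_nvidia_devices() {
  if ! command -v lspci >/dev/null 2>&1; then
    echo "lspci not found (it is usually provided by the pciutils package)"
    return 0
  fi
  lspci | grep -i nvidia || echo "no NVIDIA device listed by lspci"
}

list_nvidia_devices
```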

If your graphics card is from NVIDIA and it is listed in https://developer.nvidia.com/cuda-gpus, your GPU is CUDA-capable.

The Release Notes for the CUDA Toolkit also contain a list of supported products.

2.2. Verify You Have a Supported Version of Linux

The CUDA Development Tools are only supported on some specific distributions of Linux. These are listed in the CUDA Toolkit release notes.

To determine which distribution and release number you’re running, type the following at the command line:

You should see output similar to the following, modified for your particular system:

The x86_64 line indicates you are running on a 64-bit system. The remainder gives information about your distribution.
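The command and its sample output are missing from this copy. A sketch that prints the same two pieces of information, the machine architecture and the distribution release files, might look like this:

```shell
# Machine architecture, for example x86_64 on a 64-bit Intel/AMD system.
arch="$(uname -m)"
echo "architecture: ${arch}"

# Distribution details. The file names under /etc vary by distribution,
# so a missing file is not treated as an error here.
cat /etc/*release 2>/dev/null || echo "no /etc/*release file found"
```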

2.3. Verify the System Has gcc Installed

The gcc compiler is required for development using the CUDA Toolkit. It is not required for running CUDA applications. It is generally installed as part of the Linux installation, and in most cases the version of gcc installed with a supported version of Linux will work correctly.

To verify the version of gcc installed on your system, type the following on the command line:

If an error message displays, you need to install the development tools from your Linux distribution or obtain a version of gcc and its accompanying toolchain from the Web.
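The check described above can be sketched as follows. The guard and the function name check_gcc are additions for illustration; on a system with gcc installed, the function simply prints the compiler version.

```shell
# Print the gcc version, or a hint if gcc is not installed.
check_gcc() {
  if command -v gcc >/dev/null 2>&1; then
    gcc --version
  else
    echo "gcc not found: install your distribution's development tools"
  fi
}

check_gcc
```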

2.4. Verify the System has the Correct Kernel Headers and Development Packages Installed

The CUDA Driver requires that the kernel headers and development packages for the running version of the kernel be installed at the time of the driver installation, as well as whenever the driver is rebuilt. For example, if your system is running kernel version 3.17.4-301, the 3.17.4-301 kernel headers and development packages must also be installed.

While the Runfile installation performs no package validation, the RPM and Deb installations of the driver will make an attempt to install the kernel header and development packages if no version of these packages is currently installed. However, it will install the latest version of these packages, which may or may not match the version of the kernel your system is using. Therefore, it is best to manually ensure the correct version of the kernel headers and development packages are installed prior to installing the CUDA Drivers, as well as whenever you change the kernel version.
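The per-distribution commands are not reproduced in this copy. The sketch below shows how the running kernel version can be queried and turned into matching package names. It only prints the names and installs nothing. The naming patterns follow the usual Debian/Ubuntu and RHEL/Fedora conventions, so confirm them against your distribution's repositories.

```shell
# Version of the kernel that is currently running.
kernel="$(uname -r)"
echo "running kernel: ${kernel}"

# Package names that would match this kernel (printed, not installed).
headers_pkg="linux-headers-${kernel}"
echo "Debian/Ubuntu: ${headers_pkg}"
echo "RHEL/Fedora:   kernel-devel-${kernel} kernel-headers-${kernel}"
```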

RHEL 7 / CentOS 7

Fedora / RHEL 8 / Rocky Linux 8

OpenSUSE / SLES

The kernel development packages for the currently running kernel can be installed with:

This section does not need to be performed for WSL.

Ubuntu

2.5. Install MLNX_OFED

GPUDirect Storage (GDS) packages can be installed using the CUDA packaging guide. Follow the instructions in MLNX_OFED Requirements and Installation.

GDS is supported in two different modes: GDS (default/full perf mode) and Compatibility mode. Installation instructions for them differ slightly. Compatibility mode is the only mode that is supported on certain distributions due to software dependency limitations.

Full GDS support is restricted to the following Linux distros:

2.6. Choose an Installation Method

The CUDA Toolkit can be installed using either of two different installation mechanisms: distribution-specific packages (RPM and Deb packages), or a distribution-independent package (runfile packages).

The distribution-independent package has the advantage of working across a wider set of Linux distributions, but does not update the distribution’s native package management system. The distribution-specific packages interface with the distribution’s native package management system. It is recommended to use the distribution-specific packages, where possible.

2.7. Download the NVIDIA CUDA Toolkit

Choose the platform you are using and download the NVIDIA CUDA Toolkit.

The CUDA Toolkit contains the CUDA driver and tools needed to create, build and run a CUDA application as well as libraries, header files, and other resources.

Download Verification

The download can be verified by comparing the MD5 checksum posted at https://developer.download.nvidia.com/compute/cuda/11.6.2/docs/sidebar/md5sum.txt with that of the downloaded file. If the two checksums differ, the downloaded file is corrupt and needs to be downloaded again.
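As a sketch of the comparison, the helper below computes a file's MD5 digest with md5sum and compares it with an expected value. The function name verify_md5 and the throwaway demo file are hypothetical stand-ins. In practice the file would be the downloaded installer and the expected value would come from the posted checksum list.

```shell
# verify_md5 FILE EXPECTED_MD5
# Returns 0 when the file's MD5 digest equals the expected hex string.
verify_md5() {
  actual="$(md5sum "$1" | awk '{print $1}')"
  [ "$actual" = "$2" ]
}

# Demonstration on a throwaway file (stand-in for the downloaded installer).
demo_file="$(mktemp)"
printf 'hello\n' > "$demo_file"
expected="$(md5sum "$demo_file" | awk '{print $1}')"

if verify_md5 "$demo_file" "$expected"; then
  echo "checksum OK"
else
  echo "checksum mismatch: download the file again"
fi
```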

2.8. Address Custom xorg.conf, If Applicable

2.9. Handle Conflicting Installation Methods

Before installing CUDA, any previous installations that could conflict should be uninstalled. This will not affect systems which have not had CUDA installed previously, or systems where the installation method has been preserved (RPM/Deb vs. Runfile). See the following charts for specifics.

Use the following command to uninstall a Toolkit runfile installation:

3. Package Manager Installation

Basic instructions can be found in the Quick Start Guide. Read on for more detailed instructions.

3.1. Overview

Installation using RPM or Debian packages interfaces with your system’s package management system. When using RPM or Debian local repo installers, the downloaded package contains a repository snapshot stored on the local filesystem in /var/. Such a package only informs the package manager where to find the actual installation packages, but will not install them.

If the online network repository is enabled, RPM or Debian packages will be automatically downloaded at installation time using the package manager: apt-get, dnf, yum, or zypper.

Finally, some helpful package manager capabilities are detailed.

These instructions are for native development only. For cross-platform development, see the CUDA Cross-Platform Environment section.

3.2. RHEL 7 / CentOS 7

3.2.1. Prepare RHEL 7 / CentOS 7

Satisfy third-party package dependency:

Enable optional repos:

On RHEL 7 Linux only, execute the following steps to enable optional repositories.

Optional – Remove Outdated Signing Key:

Choose an installation method: local repo or network repo.

3.2.2. Local Repo Installation for RHEL 7 / CentOS 7

Install local repository onto file system:

3.2.3. Network Repo Installation for RHEL 7 / CentOS 7

Enable the network repo:

Install the new CUDA public GPG key:

The new GPG public key for the CUDA repository (RPM-based distros) is d42d0685.

On a fresh installation of RHEL, the yum package manager will prompt the user to accept new keys when installing packages the first time. Indicate you accept the change when prompted.

3.2.4. Common Installation Instructions for RHEL 7 / CentOS 7

These instructions apply to both local and network installation.

Add libcuda.so symbolic link, if necessary:

3.2.5. Installing a Previous NVIDIA Driver Branch on RHEL 7

To perform a network install of a previous NVIDIA driver branch on RHEL 7, use the commands below:

Where DRIVER_VERSION is the full version, for example, 470.82.01, and DRIVER_BRANCH is, for example, 470.
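The install commands themselves are not reproduced in this copy. As a small sketch of how the two values relate, and assuming (from the example above) that the branch is the part of the version before the first dot, the branch can be derived from the full version with shell parameter expansion:

```shell
# Example value taken from the text above.
DRIVER_VERSION="470.82.01"

# Assumption: the branch is everything before the first dot.
DRIVER_BRANCH="${DRIVER_VERSION%%.*}"

echo "version=${DRIVER_VERSION} branch=${DRIVER_BRANCH}"
```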

3.3. RHEL 8 / Rocky 8

3.3.1. Prepare RHEL 8 / Rocky 8

On RHEL 8 Linux only, execute the following steps to enable optional repositories.

Remove Outdated Signing Key:

3.3.2. Local Repo Installation for RHEL 8 / Rocky 8

3.3.3. Network Repo Installation for RHEL 8 / Rocky 8

Install the new CUDA public GPG key:

The new GPG public key for the CUDA repository (RPM-based distros) is d42d0685.

On a fresh installation of RHEL, the dnf package manager will prompt the user to accept new keys when installing packages the first time. Indicate you accept the change when prompted.

3.3.4. Common Instructions for RHEL 8 / Rocky 8

These instructions apply to both local and network installation.

Install CUDA SDK:

3.4. Fedora

3.4.1. Prepare Fedora

3.4.2. Local Repo Installation for Fedora

3.4.3. Network Repo Installation for Fedora

Install the new CUDA public GPG key:

The new GPG public key for the CUDA repository (RPM-based distros) is d42d0685.

On a fresh installation of Fedora, the dnf package manager will prompt the user to accept new keys when installing packages the first time. Indicate you accept the change when prompted.

3.4.4. Common Installation Instructions for Fedora

These instructions apply to both local and network installation for Fedora.

NOTE: The CUDA driver installation may fail if the RPMFusion non-free repository is enabled. In this case, CUDA installations should temporarily disable the RPMFusion non-free repository:

It may be necessary to rebuild the grub configuration files, particularly if you use a non-default partition scheme. If so, run the command below and reboot the system:

3.5. SLES

3.5.1. Prepare SLES

On SLES12 SP4, install the Mesa-libGL-devel Linux packages before proceeding. See Mesa-libGL-devel.

Remove Outdated Signing Key:

3.5.2. Local Repo Installation for SLES

Install local repository on file system:

3.5.3. Network Repo Installation for SLES

Install the new CUDA public GPG key:

The new GPG public key for the CUDA repository (RPM-based distros) is d42d0685.

On a fresh installation of SLES, the zypper package manager will prompt the user to accept new keys when installing packages the first time. Indicate you accept the change when prompted.

3.5.4. Common Installation Instructions for SLES

These instructions apply to both local and network installation for SLES.

3.6. OpenSUSE

3.6.1. Prepare OpenSUSE

Remove Outdated Signing Key:

3.6.2. Local Repo Installation for OpenSUSE

Install local repository on file system:

3.6.3. Network Repo Installation for OpenSUSE

Enable the network repo:

Install the new CUDA public GPG key:

The new GPG public key for the CUDA repository (RPM-based distros) is d42d0685. On a fresh installation of openSUSE, the zypper package manager will prompt the user to accept new keys when installing packages the first time. Indicate you accept the change when prompted.

Refresh Zypper repository cache:

3.6.4. Common Installation Instructions for OpenSUSE

These instructions apply to both local and network installation for OpenSUSE.

3.7. WSL

These instructions must be used if you are installing in a WSL environment. Do not use the Ubuntu instructions in this case; it is important to not install the cuda-drivers packages within the WSL environment.

3.7.1. Prepare WSL

Remove Outdated Signing Key:

3.7.2. Local Repo Installation for WSL

3.7.3. Network Repo Installation for WSL

The new GPG public key for the CUDA repository (Debian-based distros) is 3bf863cc. This must be enrolled on the system, either using the cuda-keyring package or manually; the apt-key command is deprecated and not recommended.

Install the new cuda-keyring package:

3.7.4. Common Installation Instructions for WSL

These instructions apply to both local and network installation for WSL.

3.8. Ubuntu

3.8.1. Prepare Ubuntu

Remove Outdated Signing Key:

3.8.2. Local Repo Installation for Ubuntu

Install local repository on file system:

3.8.3. Network Repo Installation for Ubuntu

The new GPG public key for the CUDA repository is 3bf863cc. This must be enrolled on the system, either using the cuda-keyring package or manually; the apt-key command is deprecated and not recommended.

Install the new cuda-keyring package:

3.8.4. Common Installation Instructions for Ubuntu

These instructions apply to both local and network installation for Ubuntu.

3.9. Debian

3.9.1. Prepare Debian

Enable the contrib repository:

Remove Outdated Signing Key:

3.9.2. Local Repo Installation for Debian

Install local repository on file system:

3.9.3. Network Repo Installation for Debian

The new GPG public key for the CUDA repository (Debian-based distros) is 3bf863cc. This must be enrolled on the system, either using the cuda-keyring package or manually; the apt-key command is deprecated and not recommended.

Install the new cuda-keyring package:

3.9.4. Common Installation Instructions for Debian

These instructions apply to both local and network installation for Debian.

Update the Apt repository cache:

3.10. Additional Package Manager Capabilities

Below are some additional capabilities of the package manager that users can take advantage of.

3.10.1. Available Packages

The recommended installation package is the cuda package. This package will install the full set of other CUDA packages required for native development and should cover most scenarios.

The cuda package installs all the available packages for native development. That includes the compiler, the debugger, the profiler, the math libraries, and so on. For x86_64 platforms, this also includes Nsight Eclipse Edition and the visual profilers. It also includes the NVIDIA driver package.

On supported platforms, the cuda-cross-aarch64 and cuda-cross-ppc64el packages install all the packages required for cross-platform development to ARMv8 and POWER8, respectively. The libraries and header files of the target architecture’s display driver package are also installed to enable the cross compilation of driver applications. The cuda-cross-<arch> packages do not install the native display driver.

The packages installed by the packages above can also be installed individually by specifying their names explicitly. The list of available packages can be obtained with:

These packages provide 32-bit driver libraries needed for things such as Steam (popular game app store/launcher), older video games, and some compute applications.

Where

is the driver version, for example 495.

For Fedora and RHEL8:

No extra installation is required, the nvidia-glG05 package already contains the 32-bit libraries.

3.10.3. Package Upgrades

The cuda package points to the latest stable release of the CUDA Toolkit. When a new version is available, use the following commands to upgrade the toolkit and driver:

The cuda-drivers package points to the latest driver release available in the CUDA repository. When a new version is available, use the following commands to upgrade the driver:

Some desktop environments, such as GNOME or KDE, will display a notification alert when new packages are available.

To avoid any automatic upgrade, and lock down the toolkit installation to the X.Y release, install the cuda-X-Y or cuda-cross-<arch>-X-Y package.

Side-by-side installations are supported. For instance, to install both the X.Y CUDA Toolkit and the X.Y+1 CUDA Toolkit, install the cuda-X-Y and cuda-X-(Y+1) packages.

3.10.4. Meta Packages

Meta packages are RPM/Deb/Conda packages which contain no (or few) files but have multiple dependencies. They are used to install many CUDA packages when you may not know the details of the packages you want. Below is the list of meta packages.

4. Driver Installation

This section is for users who want to install a specific driver version.

For Debian and Ubuntu:

This allows you to get the highest version in the specified branch.

For Fedora and RHEL8:

To uninstall or change streams on Fedora and RHEL8:

5. NVIDIA Open GPU Kernel Modules

The NVIDIA Linux GPU Driver contains several kernel modules:

To install NVIDIA Open GPU Kernel Modules, follow the instructions below.

5.1. CUDA Runfile

5.2. Debian

5.3. Fedora

5.4. RHEL 8 / Rocky 8

5.5. RHEL 7 / CentOS 7

5.6. OpenSUSE / SLES

5.7. Ubuntu

6. Precompiled Streams

Packaging templates and instructions are provided on GitHub to allow you to maintain your own precompiled kernel module packages for custom kernels and derivative Linux distros: NVIDIA/yum-packaging-precompiled-kmod

To use the new driver packages on RHEL 8:

Choose one of the four options below depending on the desired driver:

latest always updates to the highest versioned driver (precompiled):

locks the driver updates to the specified driver branch (precompiled):

latest-dkms always updates to the highest versioned driver (non-precompiled):

-dkms locks the driver updates to the specified driver branch (non-precompiled):

6.1. Precompiled Streams Support Matrix

| NVIDIA Driver | Precompiled Stream | Legacy DKMS Stream |
|---|---|---|
| Highest version | latest | latest-dkms |
| Locked at 455.x | 455 | 455-dkms |
| Locked at 450.x | 450 | 450-dkms |
| Locked at 440.x | 440 | 440-dkms |
| Locked at 418.x | 418 | 418-dkms |

6.2. Modularity Profiles

| Stream | Profile | Use Case |
|---|---|---|
| Default | /default | Installs all the driver packages in a stream. |
| Kickstart | /ks | Performs unattended Linux OS installation using a config file. |
| NVSwitch Fabric | /fm | Installs all the driver packages plus components required for bootstrapping an NVSwitch system (including the Fabric Manager and NSCQ telemetry). |
| Source | /src | Source headers for compilation (precompiled streams only). |
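The two tables above combine into a single module specification of the form `nvidia-driver:<stream>/<profile>`. The following is a minimal sketch of that naming scheme, not NVIDIA's tooling: the function name `driver_module_spec` and its parameters are hypothetical, and the `module:stream/profile` form is the general DNF modularity syntax assumed to apply here.

```python
# Hypothetical helper: compose a DNF module spec from the stream and
# profile names listed in the tables above.
VALID_PROFILES = {"default", "ks", "fm", "src"}


def driver_module_spec(branch=None, dkms=False, profile="default"):
    """Return 'nvidia-driver:<stream>/<profile>'.

    branch  -- driver branch to lock to (e.g. 450), or None for 'latest'
    dkms    -- True selects the legacy DKMS (non-precompiled) stream
    profile -- one of the profiles from the Modularity Profiles table
    """
    if profile not in VALID_PROFILES:
        raise ValueError(f"unknown profile: {profile!r}")
    if dkms and profile == "src":
        # The table lists /src for precompiled streams only.
        raise ValueError("the src profile applies to precompiled streams only")
    stream = "latest" if branch is None else str(branch)
    if dkms:
        stream += "-dkms"
    return f"nvidia-driver:{stream}/{profile}"


if __name__ == "__main__":
    print(driver_module_spec())                              # nvidia-driver:latest/default
    print(driver_module_spec(450, dkms=True, profile="ks"))  # nvidia-driver:450-dkms/ks
```

The resulting string is the kind of argument one would expect to pass to `dnf module install`; check the stream list on your system before relying on a particular branch number.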

7. Kickstart Installation

7.1. RHEL8 / Rocky Linux 8

8. Runfile Installation

Basic instructions can be found in the Quick Start Guide. Read on for more detailed instructions.

This section describes the installation and configuration of CUDA when using the standalone installer. The standalone installer is a ".run" file and is completely self-contained.

8.1. Overview

The Runfile installation installs the NVIDIA Driver and CUDA Toolkit via an interactive ncurses-based interface.

The installation steps are listed below. Distribution-specific instructions on disabling the Nouveau drivers as well as steps for verifying device node creation are also provided.

Finally, advanced options for the installer and uninstallation steps are detailed below.

The Runfile installation does not include support for cross-platform development. For cross-platform development, see the CUDA Cross-Platform Environment section.

8.2. Installation

Reboot into text mode (runlevel 3).

This can usually be accomplished by adding the number "3" to the end of the system’s kernel boot parameters.

Since the NVIDIA drivers are not yet installed, the text terminals may not display correctly. Temporarily adding "nomodeset" to the system’s kernel boot parameters may fix this issue.

Consult your system’s bootloader documentation for information on how to make the above boot parameter changes.

The reboot is required to completely unload the Nouveau drivers and prevent the graphical interface from loading. The CUDA driver cannot be installed while the Nouveau drivers are loaded or while the graphical interface is active.

Verify that the Nouveau drivers are not loaded. If the Nouveau drivers are still loaded, consult your distribution’s documentation to see if further steps are needed to disable Nouveau.
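One way to check whether Nouveau is still loaded is to look for it in the kernel's module list. The sketch below assumes a Linux system that exposes `/proc/modules`, where each line starts with a module name; the function names are illustrative and not part of any NVIDIA tool.

```python
import os


def module_is_loaded(name, modules_text):
    """Return True if `name` is the first field of any line in modules_text."""
    for line in modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            return True
    return False


def nouveau_is_loaded(proc_modules="/proc/modules"):
    """Check the live module list; returns None if the file is unavailable."""
    if not os.path.exists(proc_modules):
        return None
    with open(proc_modules, encoding="utf-8") as fh:
        return module_is_loaded("nouveau", fh.read())


if __name__ == "__main__":
    print("nouveau loaded:", nouveau_is_loaded())
```

Matching on the first field only avoids false positives from lines where `nouveau` merely appears as a dependent of another module.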

The installer will prompt for the following:

CUDA Driver installation

CUDA Toolkit installation, location, and /usr/local/cuda symbolic link

The default installation location for the toolkit is /usr/local/cuda-11.7:

The /usr/local/cuda symbolic link points to the location where the CUDA Toolkit was installed. This link allows projects to use the latest CUDA Toolkit without any configuration file update.
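To see which toolkit directory the symbolic link currently resolves to, something like the following sketch can be used. It relies only on the standard library; the function name `active_cuda_toolkit` is hypothetical, and the default path is the one named in the text above.

```python
import os


def active_cuda_toolkit(link="/usr/local/cuda"):
    """Return the real directory behind the symlink, or None if it does not exist.

    os.path.realpath follows every level of indirection, so this also works
    when the link points at another link rather than directly at the toolkit.
    """
    if not os.path.exists(link):
        return None
    return os.path.realpath(link)


if __name__ == "__main__":
    target = active_cuda_toolkit()
    print(target if target else "no /usr/local/cuda link found")
```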

The installer must be executed with sufficient privileges to perform some actions. When the current privileges are insufficient to perform an action, the installer will ask for the user’s password to attempt to install with root privileges. Actions that cause the installer to attempt to install with root privileges are:

installing the CUDA Driver

installing the CUDA Toolkit to a location the user does not have permission to write to

creating the /usr/local/cuda symbolic link

Running the installer with sudo, as shown above, will give permission to install to directories that require root permissions. Directories and files created while running the installer with sudo will have root ownership.

Verify the device nodes are created properly.

8.3. Disabling Nouveau

To install the Display Driver, the Nouveau drivers must first be disabled. Each distribution of Linux has a different method for disabling Nouveau.

8.3.1. Fedora

8.3.2. RHEL/CentOS

8.3.3. OpenSUSE

8.3.4. SLES

No actions to disable Nouveau are required as Nouveau is not installed on SLES.

8.3.5. WSL

No actions to disable Nouveau are required as Nouveau is not installed on WSL.

8.3.6. Ubuntu

8.3.7. Debian

8.4. Device Node Verification

Check that the device files /dev/nvidia* exist and have the correct (0666) file permissions. These files are used by the CUDA Driver to communicate with the kernel-mode portion of the NVIDIA Driver. Applications that use the NVIDIA driver, such as a CUDA application or the X server (if any), will normally automatically create these files if they are missing using the nvidia-modprobe tool that is bundled with the NVIDIA Driver. However, some systems disallow setuid binaries, so if these files do not exist, you can create them manually by using a startup script such as the one below:
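The permission check described above can be sketched as follows. This only inspects the device nodes; it does not create them, and it is not the startup script from the original guide. The function name and return shape are illustrative assumptions.

```python
import glob
import os
import stat


def find_bad_device_nodes(pattern="/dev/nvidia*", expected_mode=0o666):
    """Return (path, mode) pairs for nodes whose permission bits differ.

    Directories matching the pattern are skipped. An empty result means
    either that every node has the expected mode or that no node matched,
    so check the match count separately if that distinction matters.
    """
    bad = []
    for path in sorted(glob.glob(pattern)):
        st = os.stat(path)
        if stat.S_ISDIR(st.st_mode):
            continue
        mode = stat.S_IMODE(st.st_mode)  # keep only the permission bits
        if mode != expected_mode:
            bad.append((path, oct(mode)))
    return bad


if __name__ == "__main__":
    nodes = glob.glob("/dev/nvidia*")
    print(f"{len(nodes)} node(s) found")
    for path, mode in find_bad_device_nodes():
        print(f"{path}: mode {mode}, expected 0o666")
```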

8.5. Advanced Options

directory. If not provided, the default path of /usr/local/cuda-11.7 is used.

directory. If the

is not provided, then the default path of your distribution is used. This only applies to the libraries installed outside of the CUDA Toolkit path.

Extracts to the

the following: the driver runfile, the raw files of the toolkit to

This is especially useful when one wants to install the driver using one or more of the command-line options provided by the driver installer which are not exposed in this installer.

as the kernel source directory when building the NVIDIA kernel module. Required for systems where the kernel source is installed to a non-standard location.

8.6. Uninstallation

9. Conda Installation

9.1. Conda Overview

9.2. Installation

To perform a basic install of all CUDA Toolkit components using Conda, run the following command:

9.3. Uninstallation

To uninstall the CUDA Toolkit using Conda, run the following command:

9.4. Installing Previous CUDA Releases

All Conda packages released under a specific CUDA version are labeled with that release version. To install a previous version, include that label in the install command such as:

Some CUDA releases do not move to new versions of all installable components. When this is the case these components will be moved to the new label, and you may need to modify the install command to include both labels such as:

This example will install all packages released as part of CUDA 11.3.0.
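The labeling scheme can be illustrated by building the argument list for the install command. This is a sketch under assumptions: it presumes the packages live in a channel named `nvidia` with labels of the form `nvidia/label/cuda-<version>`, and the helper name `conda_install_args` is hypothetical. Verify the channel and label names against the channel listing before use.

```python
def conda_install_args(labels=()):
    """Build a conda argument list for installing the `cuda` package.

    labels -- release labels such as "11.3.0"; empty means the default
              (unlabeled) channel. Channel naming here is an assumption.
    """
    args = ["conda", "install", "cuda"]
    if not labels:
        return args + ["-c", "nvidia"]
    for label in labels:
        args += ["-c", f"nvidia/label/cuda-{label}"]
    return args


if __name__ == "__main__":
    # One label for a single release, two when components span releases.
    print(" ".join(conda_install_args(["11.3.0"])))
    print(" ".join(conda_install_args(["11.3.0", "11.3.1"])))
```

The list form can be handed to `subprocess.run` without shell quoting concerns.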

10. Pip Wheels

NVIDIA provides Python Wheels for installing CUDA through pip, primarily for using CUDA with Python. These packages are intended for runtime use and do not currently include developer tools (these can be installed separately).

Please note that with this installation method, CUDA installation environment is managed via pip and additional care must be taken to set up your host environment to use CUDA outside the pip environment.

The following metapackages will install the latest version of the named component on Linux for the indicated CUDA version. "cu11" should be read as "cuda11".

These metapackages install the following packages:

11. Tarball and Zip Archive Deliverables

For each release, a JSON manifest is provided such as redistrib_11.4.2.json, which corresponds to the CUDA 11.4.2 release label (CUDA 11.4 update 2) which includes the release date, the name of each component, license name, relative URL for each platform and checksums.

Package maintainers are advised to check the provided LICENSE for each component prior to redistribution. Instructions for developers using CMake and Bazel build systems are provided in the next sections.

11.1. Parsing Redistrib JSON
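A minimal sketch of reading such a manifest is shown below. The layout is an assumption based on the description above: top-level metadata keys sit beside one object per component, and each component holds `name`, `license`, and one object per platform with `relative_path` and `sha256`. The sample values are placeholders, not real checksums or paths.

```python
import json


def list_archives(manifest, platform="linux-x86_64"):
    """Return one row per component that ships an archive for `platform`."""
    rows = []
    for key, entry in manifest.items():
        # Skip scalar metadata such as the release date.
        if not isinstance(entry, dict) or platform not in entry:
            continue
        pkg = entry[platform]
        rows.append({
            "component": key,
            "name": entry.get("name"),
            "license": entry.get("license"),
            "relative_path": pkg.get("relative_path"),
            "sha256": pkg.get("sha256"),
        })
    return rows


# Placeholder manifest illustrating the assumed layout.
SAMPLE = json.loads("""
{
  "release_date": "2021-09-27",
  "example_component": {
    "name": "Example Component",
    "license": "Example License",
    "linux-x86_64": {
      "relative_path": "example_component/linux-x86_64/example.tar.xz",
      "sha256": "<sha256>"
    }
  }
}
""")

if __name__ == "__main__":
    for row in list_archives(SAMPLE):
        print(row["component"], row["relative_path"])
```

For a real manifest, load the file with `json.load` and join each `relative_path` with the base URL of the directory that hosts the manifest.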

11.2. Importing Tarballs into CMake

The recommended module for importing these tarballs into the CMake build system is via FindCUDAToolkit (3.17 and newer).

The path to the extraction location can be specified with the CUDAToolkit_ROOT environmental variable. For example CMakeLists.txt and commands, see cmake/1_FindCUDAToolkit/.

For older versions of CMake, the ExternalProject_Add module is an alternative method. For example CMakeLists.txt file and commands, see cmake/2_ExternalProject/.

11.3. Importing Tarballs into Bazel

The recommended method of importing these tarballs into the Bazel build system is using http_archive and pkg_tar.

12. CUDA Cross-Platform Environment

Cross-platform development is only supported on Ubuntu systems, and is only provided via the Package Manager installation process.

We recommend selecting Ubuntu 18.04 as your cross-platform development environment. This selection helps prevent host/target incompatibilities, such as GCC or GLIBC version mismatches.

12.1. CUDA Cross-Platform Installation

Some of the following steps may have already been performed as part of the native Ubuntu installation. Such steps can safely be skipped.

These steps should be performed on the x86_64 host system, rather than the target system. To install the native CUDA Toolkit on the target system, refer to the native Ubuntu installation section.

where indicates the operating system, architecture, and/or the version of the package.

12.2. CUDA Cross-Platform Samples

CUDA Samples are now located in https://github.com/nvidia/cuda-samples, which includes instructions for obtaining, building, and running the samples.

13. Post-installation Actions

The post-installation actions must be manually performed. These actions are split into mandatory, recommended, and optional sections.

13.1. Mandatory Actions

Some actions must be taken after the installation before the CUDA Toolkit and Driver can be used.

13.1.1. Environment Setup

To add this path to the PATH variable:

In addition, when using the runfile installation method, the LD_LIBRARY_PATH variable needs to contain /usr/local/cuda-11.7/lib64 on a 64-bit system, or /usr/local/cuda-11.7/lib on a 32-bit system.

To change the environment variables for 64-bit operating systems:

To change the environment variables for 32-bit operating systems:

Note that the above paths change when using a custom install path with the runfile installation method.
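The rule described in this section amounts to prepending the toolkit directories to any existing value. The sketch below computes the resulting strings without modifying the environment. It assumes the toolkit binaries live in a `bin` subdirectory of the install path, which the text above does not state explicitly; the function names are hypothetical.

```python
def prepend_dir(new_dir, current=""):
    """Put new_dir first, adding a ':' separator only if a value already exists."""
    return new_dir if not current else f"{new_dir}:{current}"


def cuda_environment(version="11.7", bits=64, env=None):
    """Return the PATH and LD_LIBRARY_PATH values for a default install.

    version -- toolkit version used in /usr/local/cuda-<version>
    bits    -- 64 selects lib64, anything else selects lib
    env     -- mapping with the current values (defaults to empty)
    """
    env = {} if env is None else env
    root = f"/usr/local/cuda-{version}"
    lib = "lib64" if bits == 64 else "lib"
    return {
        # Assumption: executables are under <root>/bin.
        "PATH": prepend_dir(f"{root}/bin", env.get("PATH", "")),
        "LD_LIBRARY_PATH": prepend_dir(f"{root}/{lib}", env.get("LD_LIBRARY_PATH", "")),
    }


if __name__ == "__main__":
    import os
    for name, value in cuda_environment(env=os.environ).items():
        print(f"export {name}={value}")
```

With a custom runfile install path, replace the `root` value accordingly.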

13.1.2. POWER9 Setup

Because of the addition of new features specific to the NVIDIA POWER9 CUDA driver, there are some additional setup requirements in order for the driver to function properly. These additional steps are not handled by the installation of CUDA packages, and failure to ensure these extra requirements are met will result in a non-functional CUDA driver installation.

Disable a udev rule installed by default in some Linux distributions that causes hot-pluggable memory to be automatically onlined when it is physically probed. This behavior prevents NVIDIA software from bringing NVIDIA device memory online with non-default settings. This udev rule must be disabled in order for the NVIDIA CUDA driver to function properly on POWER9 systems.

You will need to reboot the system to initialize the above changes.

13.2. Recommended Actions

Other actions are recommended to verify the integrity of the installation.

13.2.1. Install Persistence Daemon

NVIDIA is providing a user-space daemon on Linux to support persistence of driver state across CUDA job runs. The daemon approach provides a more elegant and robust solution to this problem than persistence mode. For more details on the NVIDIA Persistence Daemon, see the documentation here.

13.2.2. Install Writable Samples

CUDA Samples are now located in https://github.com/nvidia/cuda-samples, which includes instructions for obtaining, building, and running the samples.

13.2.3. Verify the Installation

Before continuing, it is important to verify that the CUDA toolkit can find and communicate correctly with the CUDA-capable hardware. To do this, you need to compile and run some of the sample programs, located in https://github.com/nvidia/cuda-samples.

13.2.3.1. Verify the Driver Version

If you installed the driver, verify that the correct version of it is loaded. If you did not install the driver, or are using an operating system where the driver is not loaded via a kernel module, such as L4T, skip this step.
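When the driver is loaded as a kernel module, its version is typically reported in `/proc/driver/nvidia/version`. The sketch below extracts the version number from that text. The exact line format is an assumption and the sample string is illustrative only; the parser simply takes the first dotted number on the line that begins with `NVRM version:`.

```python
import os
import re


def parse_driver_version(text):
    """Return the first dotted version number on the 'NVRM version:' line."""
    for line in text.splitlines():
        if line.startswith("NVRM version:"):
            match = re.search(r"\b\d+\.\d+(?:\.\d+)?\b", line)
            return match.group(0) if match else None
    return None


def loaded_driver_version(path="/proc/driver/nvidia/version"):
    """Read the live file; returns None if the driver is not loaded."""
    if not os.path.exists(path):
        return None
    with open(path, encoding="utf-8") as fh:
        return parse_driver_version(fh.read())


if __name__ == "__main__":
    print("driver version:", loaded_driver_version())
```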

13.2.3.2. Running the Binaries

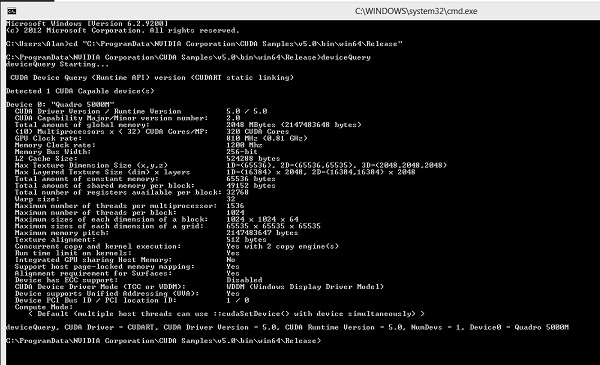

After compilation, find and run deviceQuery from https://github.com/nvidia/cuda-samples. If the CUDA software is installed and configured correctly, the output for deviceQuery should look similar to that shown in Figure 1.

The exact appearance and the output lines might be different on your system. The important outcomes are that a device was found (the first highlighted line), that the device matches the one on your system (the second highlighted line), and that the test passed (the final highlighted line).

If a CUDA-capable device and the CUDA Driver are installed but deviceQuery reports that no CUDA-capable devices are present, this likely means that the /dev/nvidia* files are missing or have the wrong permissions.

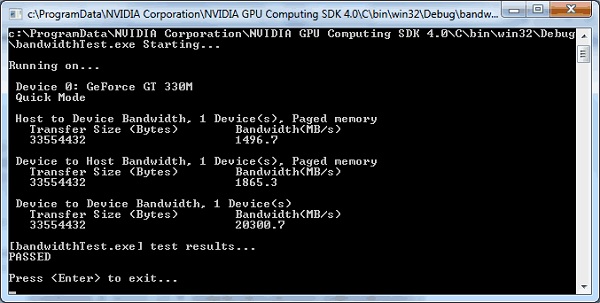

Running the bandwidthTest program ensures that the system and the CUDA-capable device are able to communicate correctly. Its output is shown in Figure 2.

Note that the measurements for your CUDA-capable device description will vary from system to system. The important point is that you obtain measurements, and that the second-to-last line (in Figure 2) confirms that all necessary tests passed.

Should the tests not pass, make sure you have a CUDA-capable NVIDIA GPU on your system and make sure it is properly installed.

If you run into difficulties with the link step (such as libraries not being found), consult the Linux Release Notes found in https://github.com/nvidia/cuda-samples.

13.2.4. Install Nsight Eclipse Plugins

13.3. Optional Actions

Other options are not necessary to use the CUDA Toolkit, but are available to provide additional features.

13.3.1. Install Third-party Libraries

Some CUDA samples use third-party libraries which may not be installed by default on your system. These samples attempt to detect any required libraries when building.

RHEL 7 / CentOS 7

RHEL 8 / Rocky Linux 8

Fedora

OpenSUSE

Ubuntu

Debian

13.3.2. Install the Source Code for cuda-gdb

The cuda-gdb source must be explicitly selected for installation with the runfile installation method. During the installation, in the component selection page, expand the component "CUDA Tools 11.0" and select cuda-gdb-src for installation. It is unchecked by default.

To obtain a copy of the source code for cuda-gdb using the RPM and Debian installation methods, the cuda-gdb-src package must be installed.

The source code is installed as a tarball in the /usr/local/cuda-11.7/extras directory.

13.3.3. Select the Active Version of CUDA

To show the active version of CUDA and all available versions:

To show the active minor version of a given major CUDA release:

To update the active version of CUDA:

14. Advanced Setup

Below is information on some advanced setup scenarios which are not covered in the basic instructions above.

| Scenario | Instructions |
|---|---|
| Install CUDA using the Package Manager installation method without installing the NVIDIA GL libraries. | Fedora |

Install CUDA using the following command:

Follow the instructions here to ensure that Nouveau is disabled.

If performing an upgrade over a previous installation, the NVIDIA kernel module may need to be rebuilt by following the instructions here.

On some system configurations the NVIDIA GL libraries may need to be locked before installation using:

Install CUDA using the following command:

Follow the instructions here to ensure that Nouveau is disabled.

This functionality isn’t supported on Ubuntu. Instead, the driver packages integrate with the Bumblebee framework to provide a solution for users who wish to control what applications the NVIDIA drivers are used for. See Ubuntu’s Bumblebee wiki for more information.

Remove diagnostic packages using the following command:

Follow the instructions here to continue installation as normal.

Remove diagnostic packages using the following command:

Follow the instructions here to continue installation as normal.

Remove diagnostic packages using the following command:

Follow the instructions here to continue installation as normal.

Remove diagnostic packages using the following command:

Follow the instructions here to continue installation as normal.

You will need to install the packages in the correct dependency order; this task is normally taken care of by the package managers. For example, if package "foo" has a dependency on package "bar", you should install package "bar" first, and package "foo" second. You can check the dependencies of an RPM package as follows:

Note that the driver packages cannot be relocated.
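The dependency-ordering rule described above (install "bar" before "foo") is a topological sort. As a small illustration, and not a replacement for querying the package manager, the sketch below uses Python's standard `graphlib` module (Python 3.9 or newer); the package names and the `install_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def install_order(dependencies):
    """Return package names ordered so that dependencies come first.

    dependencies -- mapping of package name to the set of packages it needs.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    # "foo" depends on "bar", so "bar" must be installed first.
    print(install_order({"foo": {"bar"}}))  # ['bar', 'foo']
```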

The Deb packages do not support custom install locations. It is however possible to extract the contents of the Deb packages and move the files to the desired install location. See the next scenario for more details on extracting Deb packages.

The Driver Runfile can be extracted by running:

The RPM packages can be extracted by running:

The Deb packages can be extracted by running:

Modify Ubuntu’s apt package manager to query specific architectures for specific repositories.

This is useful when a foreign architecture has been added, causing "404 Not Found" errors to appear when the repository meta-data is updated.

Each repository you wish to restrict to specific architectures must have its sources.list entry modified. This is done by modifying the /etc/apt/sources.list file and any files containing repositories you wish to restrict under the /etc/apt/sources.list.d/ directory. Normally, it is sufficient to modify only the entries in /etc/apt/sources.list

For more details, see the sources.list manpage.
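In the one-line sources.list format, options such as the architecture list go in square brackets between the entry type and the URI. The sketch below rewrites a single entry to carry an `arch=` option. The helper name, the default architecture list, and the example URL are illustrative assumptions; it does not handle the `arch+=` or `arch-=` forms or the deb822 `.sources` format.

```python
import re

# type, optional [options], then the rest of the entry (URI, suite, components)
_DEB_LINE = re.compile(r"^(deb(?:-src)?)\s+(?:\[([^\]]*)\]\s+)?(\S.*)$")


def restrict_architectures(line, archs=("amd64", "i386")):
    """Return the entry with an arch= option set, replacing any existing one.

    Comments, blank lines, and unrecognized lines are returned unchanged.
    """
    match = _DEB_LINE.match(line.strip())
    if match is None:
        return line
    kind, options, rest = match.groups()
    kept = [opt for opt in (options or "").split() if not opt.startswith("arch=")]
    kept.insert(0, "arch=" + ",".join(archs))
    return f"{kind} [{' '.join(kept)}] {rest}"


if __name__ == "__main__":
    print(restrict_architectures("deb http://archive.ubuntu.com/ubuntu focal main"))
    # deb [arch=amd64,i386] http://archive.ubuntu.com/ubuntu focal main
```

Back up `/etc/apt/sources.list` before applying any rewritten entries, and run the repository update afterwards to confirm the errors are gone.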

The nvidia.ko kernel module fails to load, saying some symbols are unknown.

Check to see if there are any optionally installable modules that might provide these symbols which are not currently installed.

For the example of the drm_open symbol, check to see if there are any packages which provide drm_open and are not already installed. For instance, on Ubuntu 14.04, the linux-image-extra package provides the DRM kernel module (which provides drm_open). This package is optional even though the kernel headers reflect the availability of DRM regardless of whether this package is installed or not.

The runfile installer fails to extract due to limited space in the TMP directory.

Re-enable Wayland after installing the RPM driver on Fedora.

This can occur when installing CUDA after uninstalling a different version. Use the following command before installation:

15. Frequently Asked Questions

How do I install the Toolkit in a different location?

The RPM and Deb packages cannot be installed to a custom install location directly using the package managers. See the "Install CUDA to a specific directory using the Package Manager installation method" scenario in the Advanced Setup section for more information.

Why do I see "nvcc: No such file or directory" when I try to build a CUDA application?

Why do I see multiple "404 Not Found" errors when updating my repository meta-data on Ubuntu?

These errors occur after adding a foreign architecture because apt is attempting to query for each architecture within each repository listed in the system’s sources.list file. Repositories that do not host packages for the newly added architecture will present this error. While noisy, the error itself does no harm. Please see the Advanced Setup section for details on how to modify your sources.list file to prevent these errors.

How can I tell X to ignore a GPU for compute-only use?

To make sure X doesn’t use a certain GPU for display, you need to specify which other GPU to use for display. For more information, please refer to the "Use a specific GPU for rendering the display" scenario in the Advanced Setup section.

Why doesn’t the cuda-repo package install the CUDA Toolkit and Drivers?

When using RPM or Deb, the downloaded package is a repository package. Such a package only informs the package manager where to find the actual installation packages, but will not install them.

See the Package Manager Installation section for more details.

How do I get CUDA to work on a laptop with an iGPU and a dGPU running Ubuntu 14.04?

What do I do if the display does not load, or CUDA does not work, after performing a system update?

System updates may include an updated Linux kernel. In many cases, a new Linux kernel will be installed without properly updating the required Linux kernel headers and development packages. To ensure the CUDA driver continues to work when performing a system update, rerun the commands in the Kernel Headers and Development Packages section.

How do I install a CUDA driver with a version less than 367 using a network repo?

To install a CUDA driver at a version earlier than 367 using a network repo, the required packages will need to be explicitly installed at the desired version. For example, to install 352.99, instead of installing the cuda-drivers metapackage at version 352.99, you will need to install all required packages of cuda-drivers at version 352.99.

How do I install an older CUDA version using a network repo?

Depending on your system configuration, you may not be able to install old versions of CUDA using the cuda metapackage. In order to install a specific version of CUDA, you may need to specify all of the packages that would normally be installed by the cuda metapackage at the version you want to install.

Why does the installation on SUSE install the Mesa-dri-nouveau dependency?

How do I handle «Errors were encountered while processing: glx-diversions»?

16. Additional Considerations

A number of helpful development tools are included in the CUDA Toolkit to assist you as you develop your CUDA programs, such as NVIDIA® Nsight™ Eclipse Edition, NVIDIA Visual Profiler, CUDA-GDB, and CUDA-MEMCHECK.

For technical support on programming questions, consult and participate in the developer forums at https://developer.nvidia.com/cuda/.

17. Removing CUDA Toolkit and Driver

Follow the below steps to properly uninstall the CUDA Toolkit and NVIDIA Drivers from your system. These steps will ensure that the uninstallation will be clean.

RHEL 8 / Rocky Linux 8

RHEL 7 / CentOS 7

Fedora

OpenSUSE / SLES

Ubuntu and Debian

In this chapter, we will learn how to install CUDA.

For installing the CUDA toolkit on Windows, you’ll need −

Note that CUDA natively supports only 64-bit applications. That is, you cannot develop 32-bit CUDA applications natively (the exception is the GeForce series GPUs, the only ones on which they can be developed). 32-bit applications can be developed on x86_64 using the cross-development capabilities of the CUDA toolkit. To compile CUDA programs for 32-bit, follow these steps −

Step 1 − Add \bin to your path.

Step 3 − Link with the 32-bit libs in \lib (instead of \lib64).

You can download the latest CUDA toolkit from here.

Compatibility

| Windows version | Native x86_64 support | x86_32 support on x86_64 (cross) |
|---|---|---|
| Windows 10 | YES | YES |
| Windows 8.1 | YES | YES |
| Windows 7 | YES | YES |
| Windows Server 2016 | YES | NO |
| Windows Server 2012 R2 | YES | NO |

| Visual Studio Version | Native x86_64 support | x86_32 support on x86_64 (cross) |
|---|---|---|
| 2017 | YES | NO |
| 2015 | YES | NO |
| 2015 Community edition | YES | NO |
| 2013 | YES | YES |
| 2012 | YES | YES |
| 2010 | YES | YES |

As can be seen from the above tables, support for x86_32 is limited. Presently, only the GeForce series is supported for 32b CUDA applications. If you have a supported version of Windows and Visual Studio, then proceed. Otherwise, first install the required software.

Verifying that your system has a CUDA-capable GPU − Open the Run dialog and run the command control /name Microsoft.DeviceManager. This opens Device Manager; look for an NVIDIA GPU under Display adapters. If you do not have a GPU, or it is not CUDA-capable, stop here.

Installing the Latest CUDA Toolkit

In this section, we will see how to install the latest CUDA toolkit.

Step 1 − Visit − https://developer.nvidia.com and select the desired operating system.

Step 2 − Select the type of installation that you would like to perform. The network installer is initially a very small executable that downloads the required files when run. The standalone installer is a single large download that contains every required file, so it does not need an Internet connection during installation.

Step 3 − Download the base installer.

The CUDA toolkit installer also installs the required GPU drivers, along with the libraries and header files needed to develop CUDA applications, and some sample code to help beginners. If you run the executable by double-clicking it, just follow the on-screen directions and the toolkit will be installed. The downside of this graphical method is that you have no control over which packages are installed. You can avoid this by installing the toolkit from the command line. Here is a list of the packages that you can select −

| nvcc_9.1 | cuobjdump_9.1 | nvprune_9.1 | cupti_9.1 |
| demo_suite_9.1 | documentation_9.1 | cublas_9.1 | gpu-library-advisor_9.1 |
| curand_dev_9.1 | nvgraph_9.1 | cublas_dev_9.1 | memcheck_9.1 |
| cusolver_9.1 | nvgraph_dev_9.1 | cudart_9.1 | nvdisasm_9.1 |
| cusolver_dev_9.1 | npp_9.1 | cufft_9.1 | nvprof_9.1 |
| cusparse_9.1 | npp_dev_9.1 | cufft_dev_9.1 | visual_profiler_9.1 |

For example, to install only the compiler and the occupancy calculator, use the following command −
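As a rough illustration of such a command-line install, the Python sketch below only assembles the argument list for a silent, package-selective run. It rests on several assumptions that are not confirmed by this text: that the installer accepts a -s (silent) switch followed by package names, that the occupancy calculator package is named occupancy_calculator_9.1 by analogy with the names listed above, and that the installer file is called cuda_9.1_windows.exe (a hypothetical name; use the file you actually downloaded).

```python
def build_silent_install_command(installer_path, packages):
    """Return the argument list for a silent install of the given packages.

    Assumption: the installer takes "-s" for a silent run, followed by the
    names of the packages to install as plain arguments.
    """
    if not packages:
        raise ValueError("list at least one package name")
    return [installer_path, "-s", *packages]


# Hypothetical installer file name and an assumed package name for the
# occupancy calculator; adjust both to match your download.
command = build_silent_install_command(
    "cuda_9.1_windows.exe",
    ["nvcc_9.1", "occupancy_calculator_9.1"],
)
print(" ".join(command))
```

On a Windows machine the resulting list could be handed to subprocess.run(command, check=True); printing it first makes it easy to review what will be installed.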

Verifying the Installation

Follow these steps to verify the installation −

Step 2 − Run deviceQuery, located at C:\ProgramData\NVIDIA Corporation\CUDA Samples\v9.1\bin\win64\Release, to view your GPU's information.

Step 3 − Run bandwidthTest, located at C:\ProgramData\NVIDIA Corporation\CUDA Samples\v9.1\bin\win64\Release. This confirms that the host and the device can communicate properly with each other.

If any of the above tests fail, it means the toolkit has not been installed properly. Re-install by following the above instructions.
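In addition to the sample programs, the compiler itself can be asked for its version. The following is a minimal Python sketch, assuming nvcc is on PATH and that its --version output contains the text "release X.Y"; the helper names are illustrative and not part of the toolkit.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(text):
    """Extract the release number (for example "9.1") from nvcc's version text."""
    match = re.search(r"release\s+(\d+\.\d+)", text)
    return match.group(1) if match else None


def installed_cuda_release():
    """Return the release reported by nvcc, or None if nvcc is not on PATH."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
    return parse_nvcc_release(result.stdout)


release = installed_cuda_release()
if release is None:
    print("nvcc was not found on PATH or its version could not be read")
else:
    print("CUDA toolkit release:", release)
```

If this reports that nvcc was not found even though the toolkit is installed, the toolkit's \bin directory is probably missing from PATH.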

Uninstalling

CUDA can be uninstalled without any fuss from the ‘Control Panel’ of Windows.

At this point, the CUDA toolkit is installed. You can get started by running the sample programs provided in the toolkit.

Setting-up Visual Studio for CUDA

To develop CUDA applications in Visual Studio, the project needs to be configured. Go to File → New | Project, then choose NVIDIA → CUDA and select a template for your CUDA Toolkit version (we are using 9.1 in this tutorial). To specify a custom CUDA Toolkit location, under CUDA C/C++, select Common, and set the CUDA Toolkit Custom Directory.

TensorFlow 1.5 was recently released with CUDA 9 support, so TensorFlow and Keras can now be moved to the new CUDA version. In this article I explain how to install CUDA 9 and cuDNN 7 on Windows 10. Installation on Linux will be covered in a separate article.

What you need to install

For TensorFlow and Keras to use the GPU on Windows, three components must be installed: Microsoft Visual Studio, NVIDIA CUDA, and cuDNN.

CUDA works only with NVIDIA GPUs; see NVIDIA's list of supported GPUs. If you have an AMD or Intel GPU, CUDA will not work and TensorFlow, unfortunately, cannot use such GPUs.

TensorFlow requires a graphics card with CUDA Compute Capability 3.0 or higher. You can look up the Compute Capability of your card on the NVIDIA website. If you have an older GPU with Compute Capability below 3.0, you can use Theano instead of TensorFlow, since it has lower GPU requirements. However, the speed-up on such GPUs will not be very large compared with the CPU.
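The Compute Capability requirement can be expressed as a simple version comparison. The sketch below is illustrative only: it assumes you have already looked up your card's Compute Capability as a "major.minor" string, and the function names are hypothetical.

```python
# Minimum Compute Capability quoted in this article for TensorFlow.
MIN_COMPUTE_CAPABILITY = (3, 0)


def parse_capability(value):
    """Turn a string such as "6.1" into a comparable (major, minor) tuple."""
    major, minor = value.strip().split(".")
    return int(major), int(minor)


def supports_tensorflow_gpu(capability):
    """Return True if the capability meets the minimum stated above."""
    return parse_capability(capability) >= MIN_COMPUTE_CAPABILITY


for capability in ("2.1", "3.0", "6.1"):
    verdict = "OK" if supports_tensorflow_gpu(capability) else "too old"
    print(capability, verdict)
```

Comparing tuples rather than floats avoids mistakes with values such as "3.10", which would otherwise compare as smaller than "3.5".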

Installing Microsoft Visual Studio

On Windows, NVIDIA CUDA uses Microsoft Visual Studio to generate code for the CPU. The list of Visual Studio versions supported by CUDA 9 is given on the NVIDIA website. At the time of writing, the following versions are supported:

The only free version is Visual Studio Community 2015, which is the one I installed. Visual Studio Community 2015 can be downloaded from my.visualstudio.com; you need to register first.

If you have Visual Studio 2017, you can use it. Note, however, that the updated Visual Studio 2017 with the Visual C++ 15.5 compiler is not yet supported. Officially, CUDA 9 works only with Visual C++ 15.0.

I recommend installing the English-language version of Visual Studio. Theano has problems with the Russian-language Visual Studio. I have not run into similar problems with TensorFlow so far, but perhaps I was just lucky.

When installing Visual Studio, select the C++ development tools; install the rest as you see fit.

After installation, add the path to the Visual C++ compiler (cl.exe) to the PATH variable.
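One way to confirm that the PATH change took effect is to search PATH for the compiler from a new terminal session. This is a minimal Python sketch using the standard library's shutil.which; the find_compiler helper is a hypothetical name introduced here for illustration.

```python
import shutil


def find_compiler(name="cl", search_path=None):
    """Return the full path of the compiler executable, or None if not found.

    With search_path=None, the current PATH environment variable is searched.
    """
    return shutil.which(name, path=search_path)


location = find_compiler()
if location:
    print("Visual C++ compiler found at", location)
else:
    print("cl.exe is not on PATH; add the directory that contains it")
```

Run the check in a freshly opened terminal, because terminals that were already open keep the old value of PATH.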

Installing NVIDIA CUDA

At the time of writing, the current CUDA version is 9.1. However, TensorFlow 1.5 supports only CUDA 9.0. If you install 9.1, you will get an error. Odd behavior, but there is nothing to be done about it.

cuDNN can be downloaded for free from the NVIDIA website. You first need to register as an NVIDIA developer, which is also free and quick to do on the same site.

After registering, you will be offered several versions of cuDNN for different CUDA versions. Choose cuDNN 7 for CUDA 9.0. TensorFlow 1.5 will not work with other versions of cuDNN and CUDA.
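The version constraints described above can be written down as a small check to run before installing anything. This is a sketch under the assumption that you supply the version strings yourself (for example from the installer file names); the names REQUIRED and check_versions are illustrative.

```python
# Versions this article reports as working with TensorFlow 1.5.
REQUIRED = {"cuda": "9.0", "cudnn_major": "7"}


def check_versions(cuda_version, cudnn_version):
    """Return a list of human-readable problems; an empty list means compatible."""
    problems = []
    if cuda_version != REQUIRED["cuda"]:
        problems.append(
            f"CUDA {cuda_version} found, but TensorFlow 1.5 needs CUDA {REQUIRED['cuda']}"
        )
    if cudnn_version.split(".")[0] != REQUIRED["cudnn_major"]:
        problems.append(
            f"cuDNN {cudnn_version} found, but TensorFlow 1.5 needs cuDNN {REQUIRED['cudnn_major']}"
        )
    return problems


for cuda, cudnn in (("9.0", "7.0.5"), ("9.1", "7.0.5")):
    issues = check_versions(cuda, cudnn)
    print(cuda, cudnn, "compatible" if not issues else "; ".join(issues))
```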

cuDNN is an archive that contains just three files:

After installing Microsoft Visual Studio, NVIDIA CUDA, and cuDNN, restart the computer.