How to setup nginx

How to setup nginx

Search Results

No Results

Filters

Getting Started with NGINX (Part 1): Installation and Basic Setup

This guide is the first of a four-part series. Parts One and Two will walk you through installing NGINX Open Source from the NGINX repositories and making some configuration changes to increase performance and security. Parts Three and Four set up NGINX to serve your site over HTTPS and harden the TLS connection.

Before You Begin

Install NGINX

Stable Versus Mainline

The first decision to make about your installation is whether you want the Stable or Mainline version of NGINX Open Source. Stable is recommended, and will be what this series of guides uses. More on NGINX versions here.

Binary Versus Compiling from Source

There are three primary ways to install NGINX Open Source:

A pre-built binary from your Linux distribution’s repositories. This is the easiest installation method because you use your package manager to install the nginx package. However, for distributions which provide binaries (as opposed to build scripts), you’ll be running an older version of NGINX than the current stable or mainline release. Patches can also be slower to land in distro repositories from upstream.

Compiling from source. This is the most complicated method of installation but still not impractical when following NGINX’s documentation. Source code is updated frequently with patches and maintained at the newest stable or mainline releases, and building can be easily automated. This is the most customizable installation method because you can include or omit any compiling options and flags you choose. For example, one common reason people compile their own NGINX build is so they can use the server with a newer version of OpenSSL than what their Linux distribution provides.

Installation Instructions

The NGINX admin guide gives clear and accurate instructions for any installation method and NGINX version you choose, so we won’t mirror them here. When your installation completes, return here to continue the series.

Configuration Notes

As use of the NGINX web server has grown, NGINX, Inc. has worked to distance NGINX from configurations and terminology that were used in the past when trying to ease adoption for people already accustomed to Apache.

Sure, it can. The NGINX packages in Debian and Ubuntu repositories have changed their configurations to this for quite a while now, so serving sites whose configuration files are stored in /sites-available/ and symlinked to /sites-enabled/ is certainly a working setup. However it is unnecessary, and the Debian Linux family is the only one which does it. Do not force Apache configurations onto NGINX.

Finally, as the NGINX docs point out, the term Virtual Host is an Apache term, even though it’s used in the nginx.conf file supplied from the Debian and Ubuntu repositories, and some of NGINX’s old documentation. A Server Block is the NGINX equivalent, so that is the phrase you’ll see in this series on NGINX.

NGINX Configuration Best Practices

There is a large variety of customizations you can do to NGINX to fit it better to your needs. Many of those will be exclusive to your use case though; what works great for one person may not work at all for another.

This series will provide configurations that are general enough to be useful in just about any production scenario, but which you can build on for your own specialized setup. Everything in the section below is considered a best practice and none are reliant on each other. They’re not essential to the function of your site or server, but they can have unintended and undesirable consequences if disregarded.

Two quick points:

Before going further, first preserve the default nginx.conf file so you have something to restore to if your customizations get so convoluted that NGINX breaks.

After implementing a change below, reload your configuration with:

Use Multiple Worker Processes

For more information, see the sections on worker processes in the NGINX docs and this NGINX blog post.

Disable Server Tokens

NGINX’s version number is visible by default with any connection made to the server, whether by a successful 201 connection by cURL, or a 404 returned to a browser. Disabling server tokens makes it more difficult to determine NGINX’s version, and therefore more difficult for an attacker to execute version-specific attacks.

Server tokens enabled:

Server tokens disabled:

Add the following line to the http block of /etc/nginx/nginx.conf :

Set Your Site’s Root Directory

This series will use /var/www/example.com/ in its examples. Replace example.com where you see it with the IP address or domain name of your Linode.

The root directory for your site or sites should be added to the corresponding server block of /etc/nginx/conf.d/example.com.conf :

Then create that directory:

Serve Content Over IPv4 and IPv6

Default NGINX configurations listen on port 80 and on all IPv4 addresses. Unless you intend your site to be inaccessible over IPv6 (or are unable to provide it for some reason), you should tell NGINX to also listen for incoming IPv6 traffic.

Add a second listen directive for IPv6 to the server block of /etc/nginx/conf.d/example.com.conf :

If your site uses SSL/TLS, you would add:

Static Content Compression

You do not want to universally enable gzip compression because, depending on your site’s content and whether you set session cookies, you risk vulnerability to the CRIME and BREACH exploits.

Compression has been disabled by default in NGINX for years now, so it’s not vulnerable to CRIME out of the box. Modern browsers have also taken steps against these exploits, but web servers can still be configured irresponsibly.

On the other hand, if you leave gzip compression totally disabled, you rule out those vulnerabilities and use fewer CPU cycles, but at the expense of decreasing your site’s performance. There are various server-side mitigations possible and the release of TLS 1.3 will further contribute to that. For now, and unless you know what you’re doing, the best solution is to compress only static site content such as images, HTML, and CSS.

In cases where NGINX is serving multiple websites, some using SSl/TLS and some not, an example would look like below. The gzip directive is added to the HTTP site’s server block, which ensures it remains disabled for the HTTPS site.

Setting Up an NGINX Demo Environment

Configure NGINX Open Source as a web server and NGINX Plus as a load balancer, as required for the sample deployments in NGINX deployment guides.

The instructions in this guide explain how to set up a simple demo environment that uses NGINX Plus to load balance web servers that run NGINX Open Source and serve two distinct web applications. It is referenced by some of our deployment guides for implementing highly availability of NGINX Plus and NGINX Open Source in cloud environments.

Prerequisites

This guide assumes you have already provisioned a number of host systems (physical servers, virtual machines, containers, or cloud instances) required for a deployment guide (if applicable) and installed NGINX Open Source or NGINX Plus on each instance as appropriate. For installation instructions, see the NGINX Plus Admin Guide.

Some commands require root privilege. If appropriate for your environment, prefix commands with the sudo command.

Configuring NGINX Open Source for Web Serving

The steps in this section configure an NGINX Open Source instance as a web server to return a page like the following, which specifies the server name, address, and other information. The page is defined in the demo-index.html configuration file you create in Step 4 below.

If you are using these instructions to satisfy the prerequisites for one of our deployment guides, the Appendix in the guide specifies the name of each NGINX Open Source instance and whether to configure App 1 or App 2.

Note: Some commands require root privilege. If appropriate for your environment, prefix commands with the sudo command.

Open a connection to the NGINX Open Source instance and change the directory to **/etc/nginx/conf.d:

Rename default.conf to default.conf.bak so that NGINX Plus does not use it.

Create a new file called app.conf with the following contents.

Include the following directive in the top‑level (“main”) context in /etc/nginx/nginx.conf, if it does not already appear there.

In the /usr/share/nginx/html directory, create a new file called demo-index.html with the following contents, which define the default web page that appears when users access the instance.

In the tag, replace the comment with 1 or 2 depending on whether the instance is serving App 1 or App 2.

Configuring NGINX Plus for Load Balancing

The steps in this section configure an NGINX Plus instance to load balance requests across the group of NGINX Open Source web servers you configured in the previous section.

If you are using these instructions to satisfy the prerequisites for one of our deployment guides, the Appendix in the guide specifies the names of the NGINX Plus instances used in it.

Repeat these instructions on each instance. Alternatively, you can configure one instance and share the configuration with its peers in a cluster. See the NGINX Plus Admin Guide.

Open a connection to the NGINX Plus instance and change the directory to **/etc/nginx/conf.d:

Rename default.conf to default.conf.bak so that NGINX Plus does not use it.

Create a new file called lb.conf with the following contents.

Note: In the upstream blocks, include a server directive for each NGINX Open Source instance that serves the relevant application.

How to Setup Nginx Server On Ubuntu:18.04 /Dockerfile

Nginx is one of the most popular web servers in the world and is responsible for hosting some of the largest and highest-traffic sites on the internet. It is more resource-friendly than Apache in most cases and can be used as a web server or reverse proxy.

In this guide, we’ll discuss how to install Nginx on your Ubuntu 18.04 server.

Before installing new software, it is strongly recommended to update your local software database. Updating helps to make sure you’re installing the latest and best-patched software available.

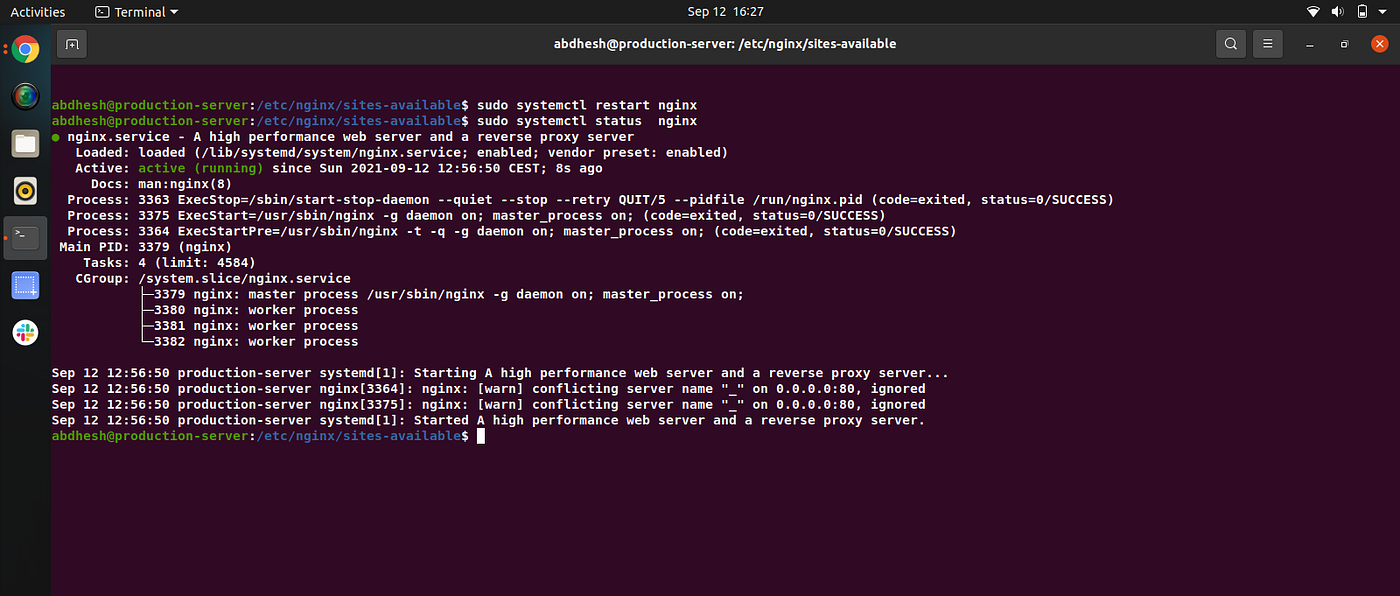

$ sudo systemctl status nginx

Start nginx webservices

$ sudo systemctl start nginx

You can also use the following commands in place of start:

Step3: Allow Nginx Traffic through a Firewall:

You can generate a list of the firewall rules using the following command:

This should generate a list of application profiles. On the list, you should see four entries related to Nginx:

To allow normal HTTP traffic to your Nginx server, use the Nginx HTTP profile with the following command:

To check the status of your firewall, use the following command:

It should display a list of the kind of HTTP web traffic allowed to different services. Nginx HTTP should be listed as ALLOW and Anywhere.

Step-4: Familiar with Important Nginx Files & Directories.

Now that you know how to manage the Nginx service itself, you should take a few minutes to familiarize yourself with a few important directories and files.

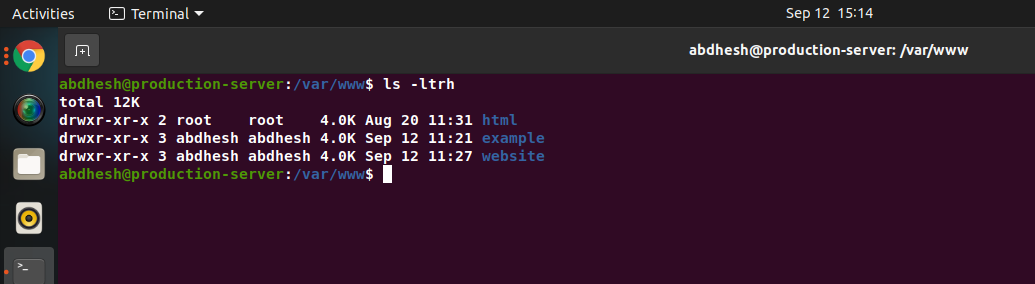

/var/www/html: The actual web content, which by default only consists of the default Nginx page you saw earlier, is served out of the /var/www/html directory. This can be changed by altering Nginx configuration files.

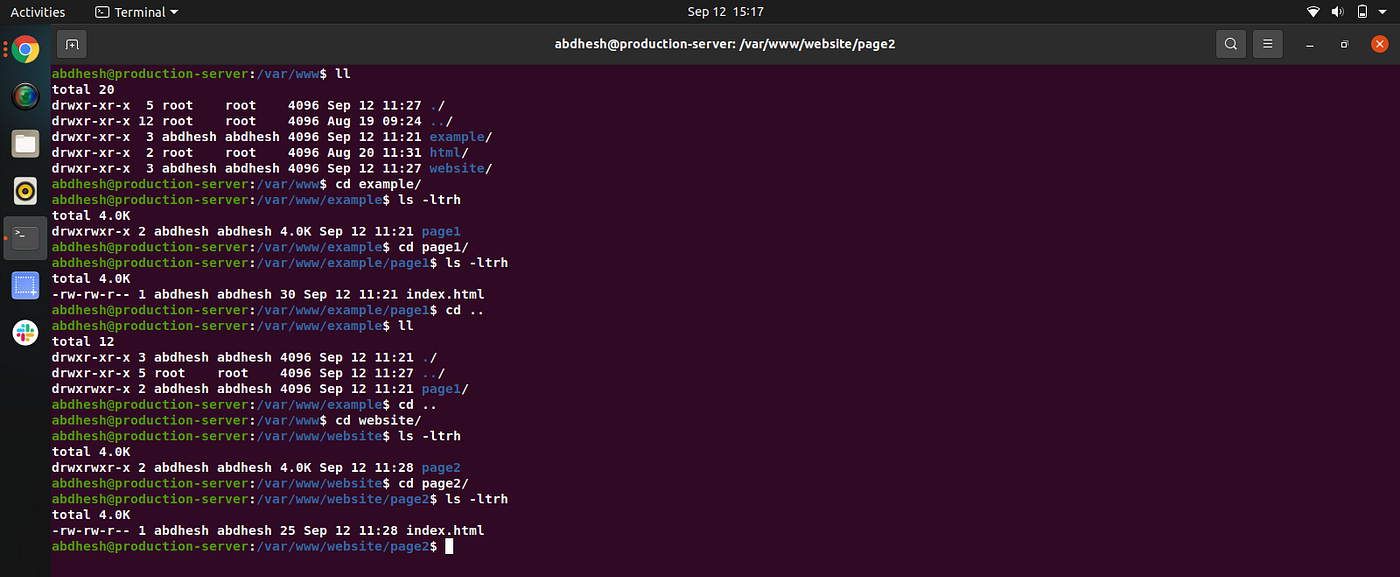

Step-5: In my case I am going to create to directories:

Inside this directories I am going to create two sub directories.

I have already created above types of scenario.

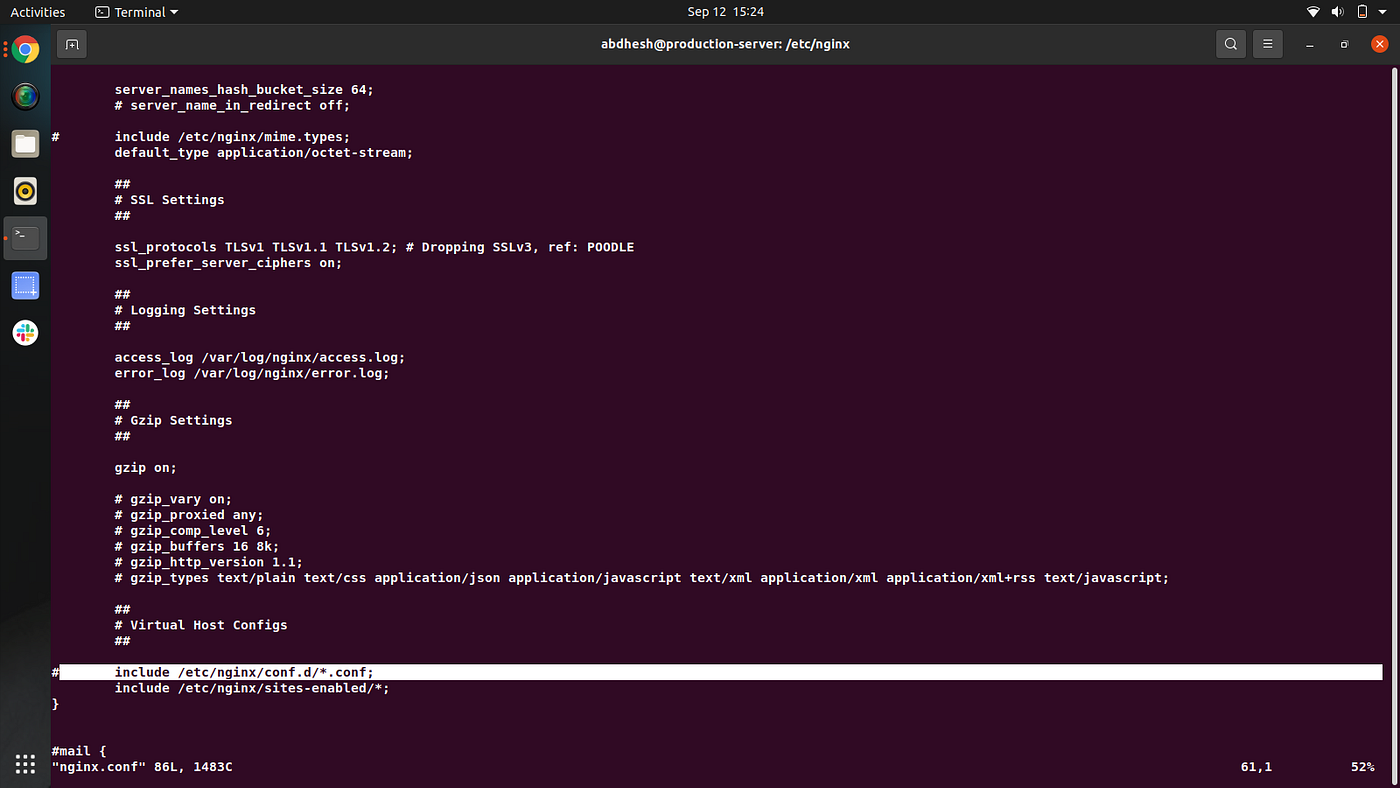

I am going to edit nginx configuration file.

I have commented /etc/nginx/conf.d/conf file location in this configuration.

Step-7: I’m go to /etc/nginx/sites-available directories.

I am create to different different configuration files.

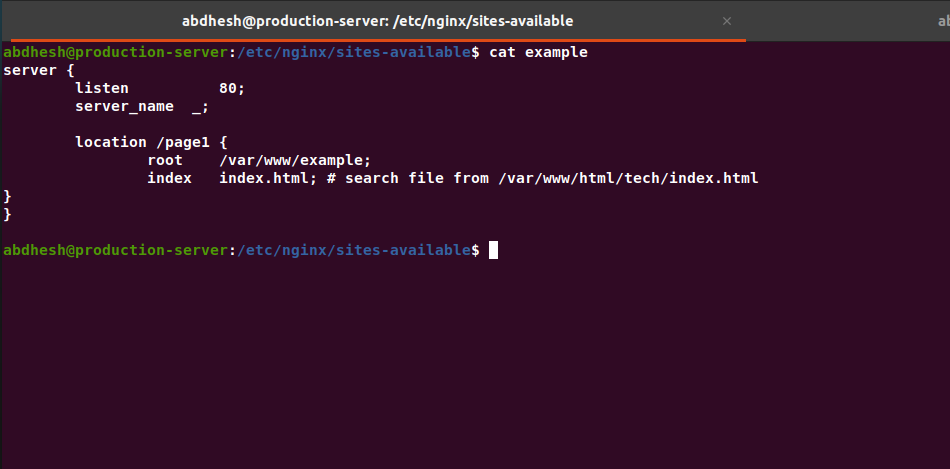

First: example file configuration

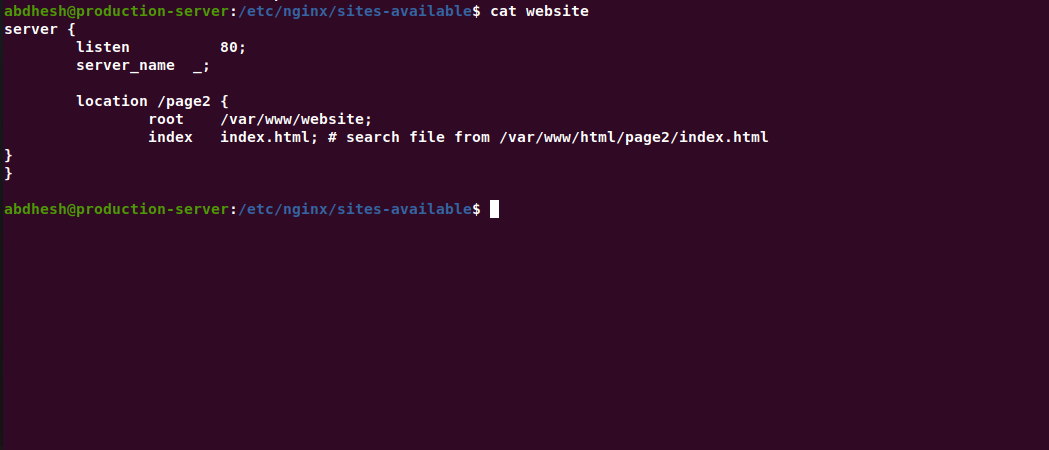

Second: website file configuration

Step-8: Enable site in Nginx Server:

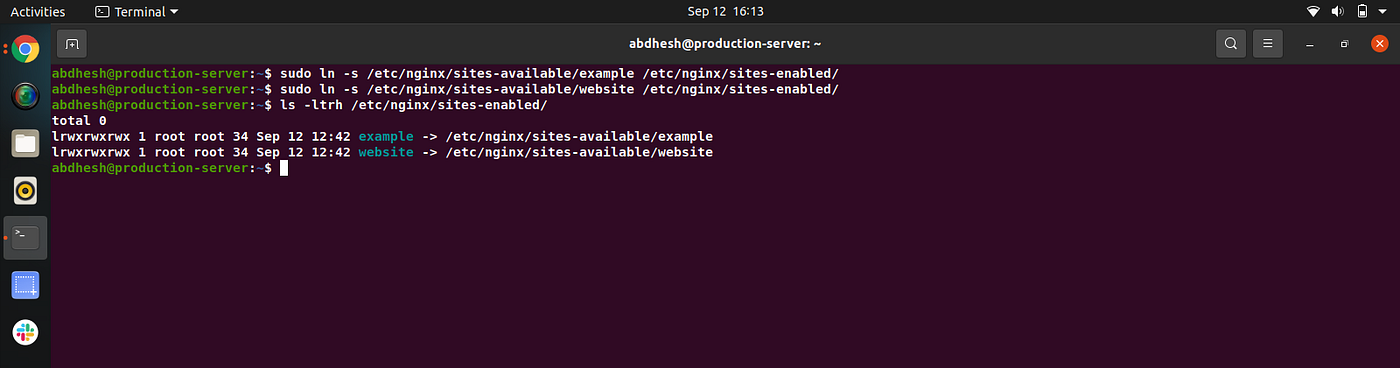

To enable a specific virtual host file, we have to use ln command to create a symlink. A symlink is kind of a shortcut to the original file. It means that you can edit your virtual host file inside sites-available directory and the changes will be applied in sites-enabled directory too.

Let’s create a symlink of one of our example site from sites-available directory to sites-enabled directory. Execute the following command to perform this task.

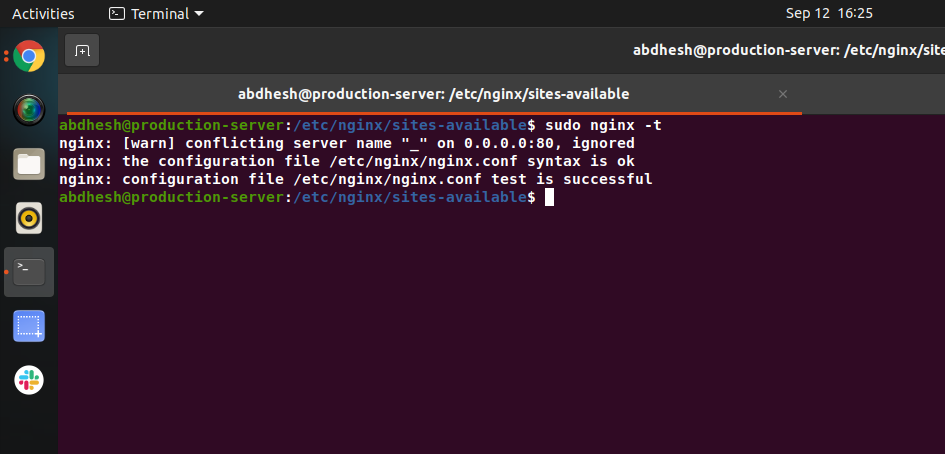

Verifying nginx configuration files.

Step-9: Restart Nginx Service.

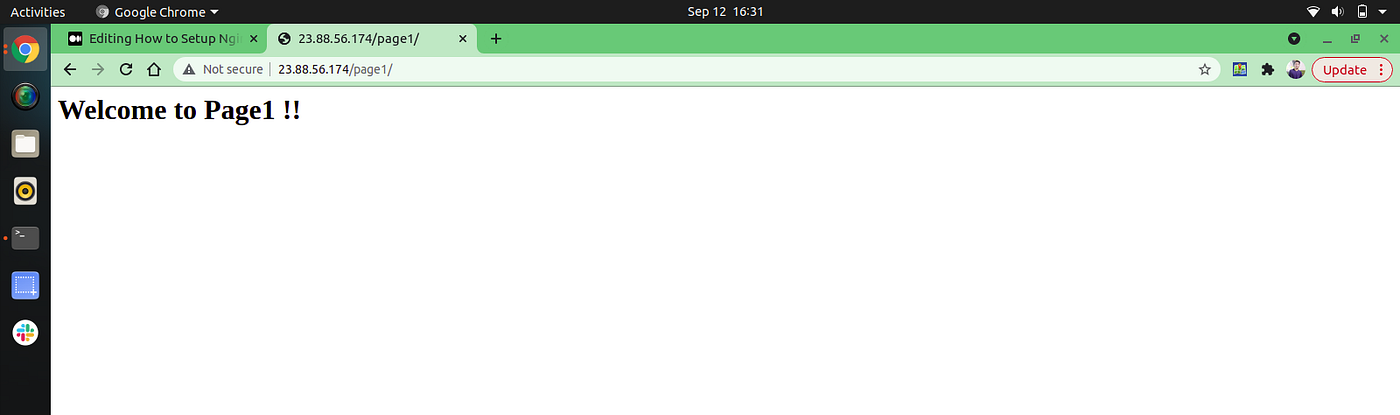

Step-10: Go to Web Browser and Access the page.

ip address: 23.88.56.174

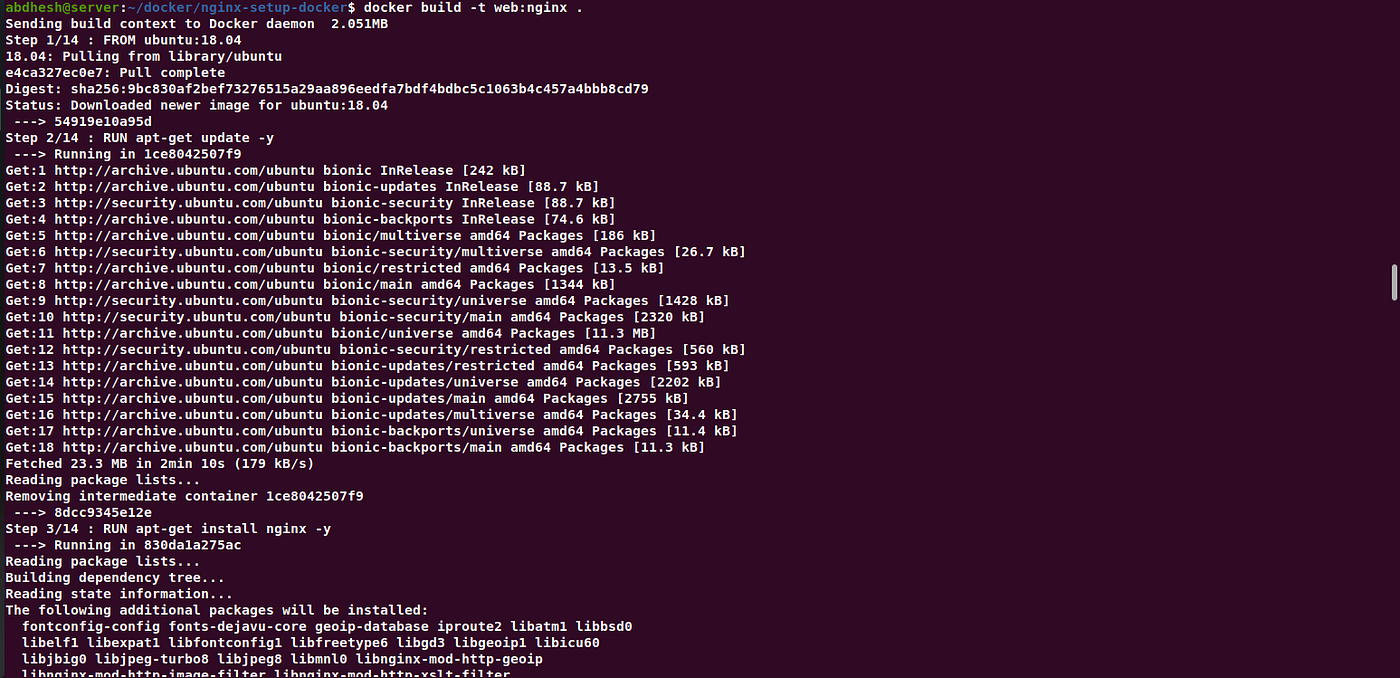

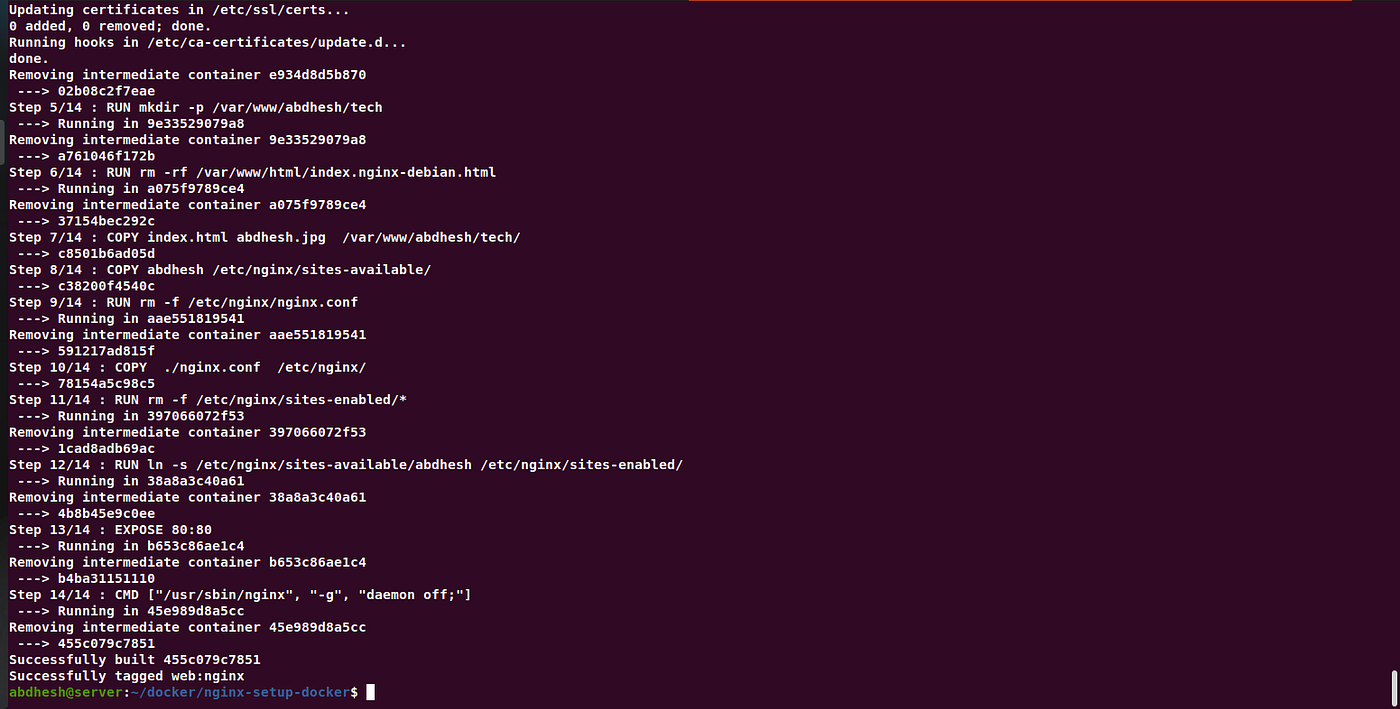

I’m going to create Dockerfile in docker_WIP workspace.

Step-2: build docker nginx image.

I’am going to run following command to build docker images name called web:nginx

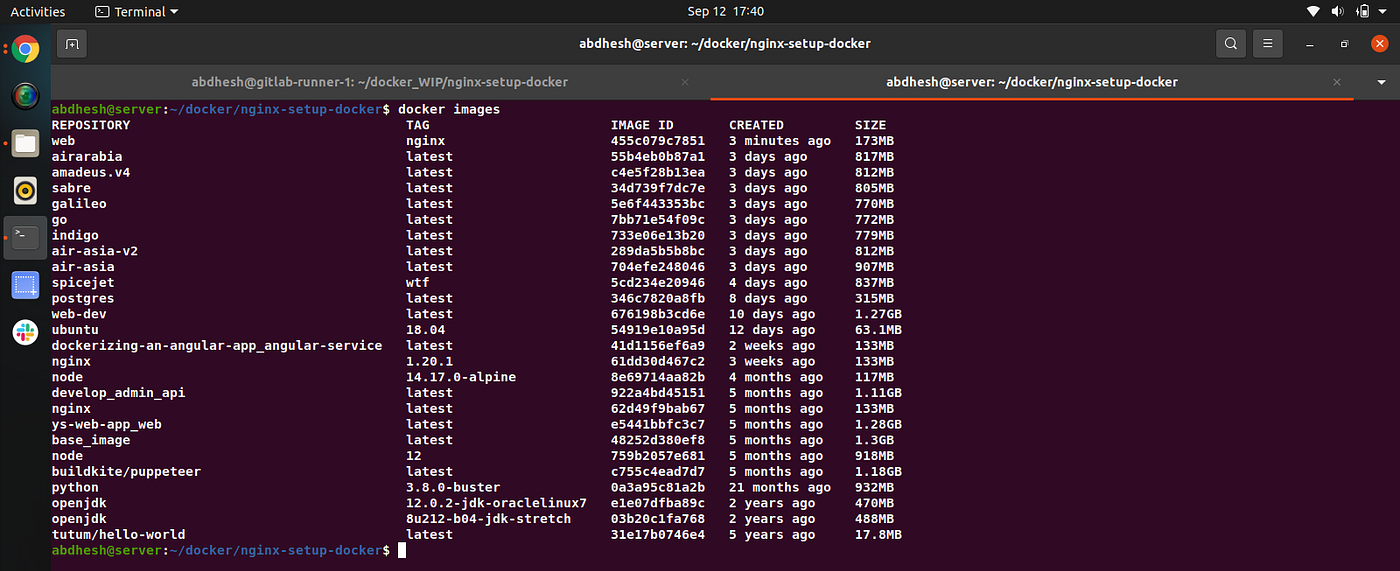

I have successfully build docker images called web:nginx

Step-4: showing Image successfully build or not.

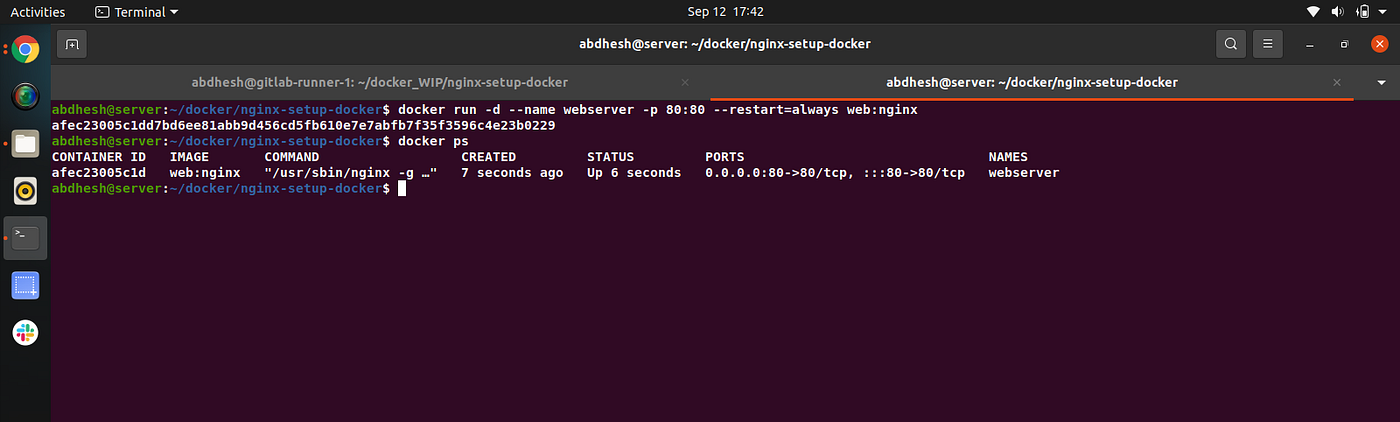

Step-3: Launch Container called webserver from web:nginx image.

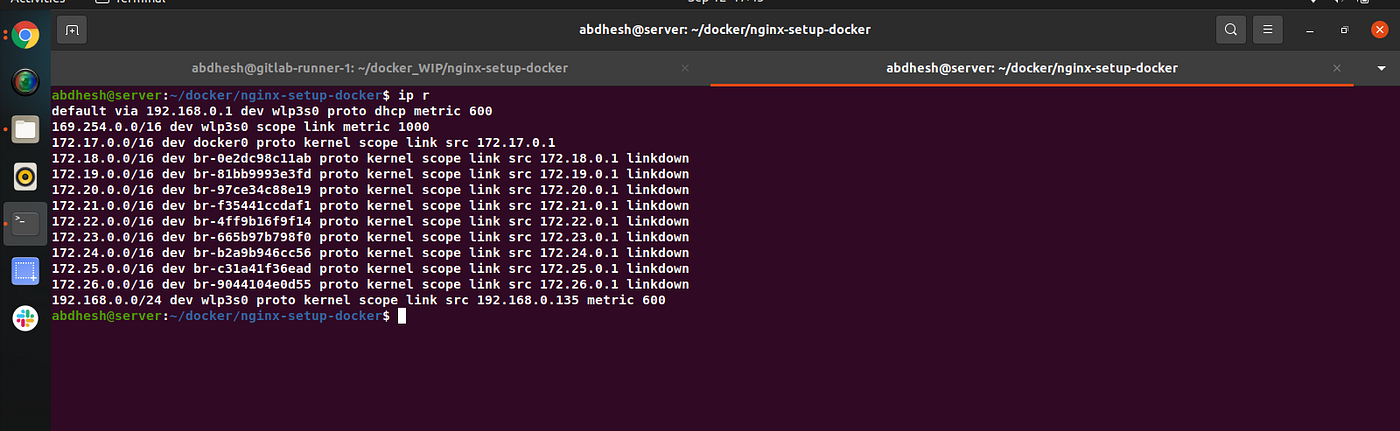

Step-4: Server local Server IP

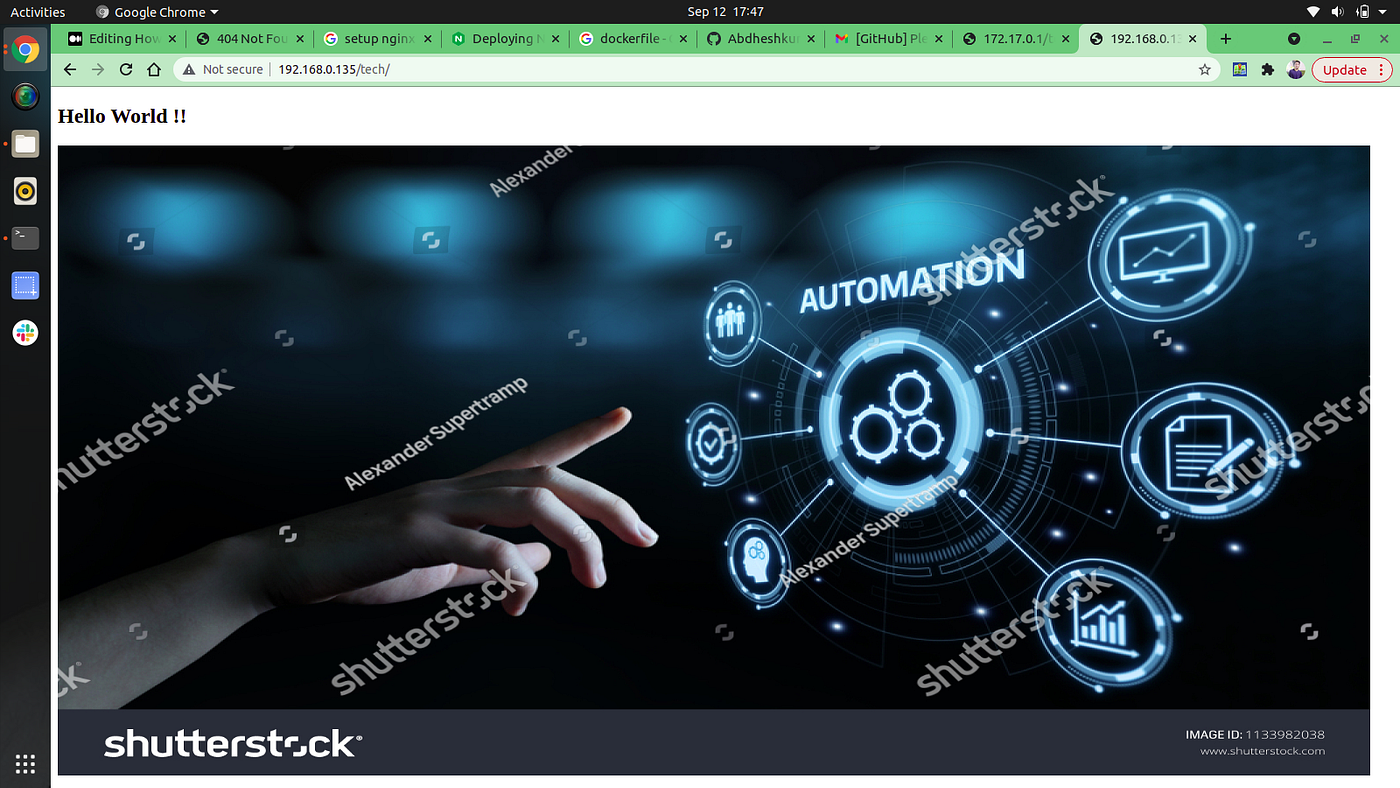

Step-5: go to browser & access page http://172.17.0.1/tech

“I hope I have explained everything, and if you have any doubts or suggestions, you can comment on this blog or contact me on my LinkedIn.”

Настройка nginx

Тема правильной настройки nginx очень велика, и, боюсь, в рамки одной статьи на хабре никак не помещается. В этом тексте я постарался рассказать про общую структуру конфига, более интересные мелочи и частности, возможно, будут позже. 🙂

Неплохой начальной точкой для настройки nginx является конфиг, который идёт в комплекте с дистрибутивом, но очень многие возможности этого сервера в нём даже не упоминаются. Значительно более подробный пример есть на сайте Игоря Сысоева: sysoev.ru/nginx/docs/example.html. Однако, давайте лучше попробуем собрать с нуля свой конфиг, с бриджем и поэтессами. 🙂

Начнём с общих настроек. Сначала укажем пользователя, от имени которого будет работать nginx (от рута работать плохо, все знают 🙂 )

user nobody;

Теперь скажем nginx-у, какое количество рабочих процессов породить. Обычно, хорошим выбором бывает число процессов, равное числу процессорных ядер в вашем сервере, но с этой настройкой имеет смысл поэкспериментировать. Если ожидается высокая нагрузка на жёсткий диск, можно сделать по процессу на каждый физический жёсткий диск, поскольку вся работа будет всё-равно ограничена его производительностью.

worker_processes 2;

Уточним, куда писать логи ошибок. Потом, для отдельных виртуальных серверов, этот параметр можно переопределить, так что в этот лог будут сыпаться только «глобальные» ошибки, например, связанные со стартом сервера.

error_log /spool/logs/nginx/nginx.error_log notice; # уровень уведомлений «notice», конечно, можно менять

Теперь идёт очень интересная секция «events». В ней можно задать максимальное количество соединений, которые одновременно будет обрабатывать один процесс-воркер, и метод, который будет использоваться для получения асинхронных уведомлений о событиях в ОС. Конечно же, можно выбрать только те методы, которые доступны на вашей ОС и были включены при компиляции.

Эти параметры могут оказать значительное влияние на производительность вашего сервера. Их надо подбирать индивидуально, в зависимости от ОС и железа. Я могу привести только несколько общих правил.

Модули работы с событиями:

— select и poll обычно медленнее и довольно сильно нагружают процессор, зато доступны практически везде, и работают практически всегда;

— kqueue и epoll — более эффективны, но доступны только во FreeBSD и Linux 2.6, соответственно;

— rtsig — довольно эффективный метод, и поддерживается даже очень старыми линуксами, но может вызывать проблемы при большом числе подключений;

— /dev/poll — насколько мне известно, работает в несколько более экзотических системах, типа соляриса, и в нём довольно эффективен;

Параметр worker_connections:

— Общее максимальное количество обслуживаемых клиентов будет равно worker_processes * worker_connections;

— Иногда могут сработать в положительную сторону даже самые экстремальные значения, вроде 128 процессов, по 128 коннектов на процесс, или 1 процесса, но с параметром worker_connections=16384. В последнем случае, впрочем, скорее всего понадобится тюнить ОС.

events <

worker_connections 2048;

use kqueue; # У нас BSD 🙂

>

Следующая секция — самая большая, и содержит самое интересное. Это описание виртуальных серверов, и некоторых параметров, общих для них всех. Я опущу стандартные настройки, которые есть в каждом конфиге, типа путей к логам.

Системный вызов sendfile появился в Linux относительно недавно. Он позволяет отправить данные в сеть, минуя этап их копирования в адресное пространство приложения. Во многих случаях это существенно повышает производительность сервера, так что параметр sendfile лучше всегда включать.

sendfile on;

Параметр keepalive_timeout отвечает за максимальное время поддержания keepalive-соединения, в случае, если пользователь по нему ничего не запрашивает. Обдумайте, как именно на вашем сайте посылаются запросы, и исправьте этот параметр. Для сайтов, активно использующих AJAX, соединение лучше держать подольше, для статических страничек, которые пользователи будут долго читать, соединение лучше разрывать пораньше. Учтите, что поддерживая неактивное keepalive-соединение, вы занимаете коннекшн, который мог бы использоваться по-другому. 🙂

keepalive_timeout 15;

Отдельно стоит выделить настройки проксирования nginx. Чаще всего, nginx используется именно как сервер-прокси, соответственно они имеют довольно большое значение. В частности, размер буфера для проксируемых запросов имеет смысл устанавливать не менее, чем ожидаемый размер ответа от сервера-бэкенда. При медленных (или, наоборот, очень быстрых) бэкендах, имеет смысл изменить таймауты ожидания ответа от бэкенда. Помните, чем больше эти таймауты, тем дольше будут ждать ответа ваши пользователе, при тормозах бэкенда.

proxy_buffers 8 64k;

proxy_intercept_errors on;

proxy_connect_timeout 1s;

proxy_read_timeout 3s;

proxy_send_timeout 3s;

Небольшой трюк. В случае, если nginx обслуживает более чем один виртуальный хост, имеет смысл создать «виртуальный хост по-умолчанию», который будет обрабатывать запросы в тех случаях, когда сервер не сможет найти другой альтернативы по заголовку Host в запросе клиента.

# default virtual host

server <

listen 80 default;

server_name localhost;

deny all;

>

Далее может следовать одна (или несколько) секций «server». В каждой из них описывается виртуальный хост (чаще всего, name-based). Для владельцев множества сайтов на одном хостинге, или для хостеров здесь может быть что-то, типа директивы

include /spool/users/nginx/*.conf;

Остальные, скорее всего опишут свой виртуальный хост прямо в основном конфиге.

server <

listen 80;

charset utf-8;

И скажем, что мы не хотим принимать от клиентов запросы, длиной более чем 1 мегабайт.

client_max_body_size 1m;

Включим для сервера SSI и попросим для SSI-переменных резервировать не более 1 килобайта.

ssi on;

ssi_value_length 1024;

И, наконец, опишем два локейшна, один из которых будет вести на бэкенд, к апачу, запущенному на порту 9999, а второй отдавать статические картинки с локальной файловой системы. Для двух локейшнов это малоосмысленно, но для большего их числа имеет смысл также сразу определить переменную, в которой будет храниться корневой каталог сервера, и потом использовать её в описаниях локаций.

How To Install Nginx on Ubuntu 16.04

Introduction

Nginx is one of the most popular web servers in the world and is responsible for hosting some of the largest and highest-traffic sites on the internet. It is more resource-friendly than Apache in most cases and can be used as a web server or a reverse proxy.

In this guide, we’ll discuss how to get Nginx installed on your Ubuntu 16.04 server.

Prerequisites

Before you begin this guide, you should have a regular, non-root user with sudo privileges configured on your server. You can learn how to configure a regular user account by following our initial server setup guide for Ubuntu 16.04.

When you have an account available, log in as your non-root user to begin.

Step 1: Install Nginx

Nginx is available in Ubuntu’s default repositories, so the installation is rather straight forward.

Since this is our first interaction with the apt packaging system in this session, we will update our local package index so that we have access to the most recent package listings. Afterwards, we can install nginx :

After accepting the procedure, apt-get will install Nginx and any required dependencies to your server.

Step 2: Adjust the Firewall

We can list the applications configurations that ufw knows how to work with by typing:

You should get a listing of the application profiles:

As you can see, there are three profiles available for Nginx:

It is recommended that you enable the most restrictive profile that will still allow the traffic you’ve configured. Since we haven’t configured SSL for our server yet, in this guide, we will only need to allow traffic on port 80.

You can enable this by typing:

You can verify the change by typing:

You should see HTTP traffic allowed in the displayed output:

Step 3: Check your Web Server

At the end of the installation process, Ubuntu 16.04 starts Nginx. The web server should already be up and running.

We can check with the systemd init system to make sure the service is running by typing:

As you can see above, the service appears to have started successfully. However, the best way to test this is to actually request a page from Nginx.

You can access the default Nginx landing page to confirm that the software is running properly. You can access this through your server’s domain name or IP address.

If you do not have a domain name set up for your server, you can learn how to set up a domain with DigitalOcean here.

If you do not want to set up a domain name for your server, you can use your server’s public IP address. If you do not know your server’s IP address, you can get it a few different ways from the command line.

Try typing this at your server’s command prompt:

You will get back a few lines. You can try each in your web browser to see if they work.

An alternative is typing this, which should give you your public IP address as seen from another location on the internet:

When you have your server’s IP address or domain, enter it into your browser’s address bar:

You should see the default Nginx landing page, which should look something like this:

This page is simply included with Nginx to show you that the server is running correctly.

Step 4: Manage the Nginx Process

Now that you have your web server up and running, we can go over some basic management commands.

To stop your web server, you can type:

To start the web server when it is stopped, type:

To stop and then start the service again, type:

If you are simply making configuration changes, Nginx can often reload without dropping connections. To do this, this command can be used:

By default, Nginx is configured to start automatically when the server boots. If this is not what you want, you can disable this behavior by typing:

To re-enable the service to start up at boot, you can type:

Step 5: Get Familiar with Important Nginx Files and Directories

Now that you know how to manage the service itself, you should take a few minutes to familiarize yourself with a few important directories and files.

Content

Server Configuration

Server Logs

Conclusion

Now that you have your web server installed, you have many options for the type of content to serve and the technologies you want to use to create a richer experience.

Learn how to use Nginx server blocks here. If you’d like to build out a more complete application stack, check out this article on how to configure a LEMP stack on Ubuntu 16.04.

Want to learn more? Join the DigitalOcean Community!

Join our DigitalOcean community of over a million developers for free! Get help and share knowledge in our Questions & Answers section, find tutorials and tools that will help you grow as a developer and scale your project or business, and subscribe to topics of interest.