How to update Docker Compose

Compose file versions and upgrading


The Compose file is a YAML file defining services, networks, and volumes for a Docker application.

The Compose file formats are now described in these references, specific to each version.

| Reference file | What changed in this version |
|---|---|
| Compose Specification (most current, and recommended) | Versioning |
| Version 3 | Version 3 updates |
| Version 2 | Version 2 updates |
| Version 1 (Deprecated) | Version 1 updates |

The topics below explain the differences among the versions, Docker Engine compatibility, and how to upgrade.

Compatibility matrix

There are several versions of the Compose file format: 1, 2, 2.x, and 3.x.

This table shows which Compose file versions support specific Docker releases.

| Compose file format | Docker Engine release |
|---|---|
| Compose specification | 19.03.0+ |
| 3.8 | 19.03.0+ |
| 3.7 | 18.06.0+ |
| 3.6 | 18.02.0+ |
| 3.5 | 17.12.0+ |
| 3.4 | 17.09.0+ |
| 3.3 | 17.06.0+ |
| 3.2 | 17.04.0+ |
| 3.1 | 1.13.1+ |
| 3.0 | 1.13.0+ |
| 2.4 | 17.12.0+ |
| 2.3 | 17.06.0+ |
| 2.2 | 1.13.0+ |
| 2.1 | 1.12.0+ |
| 2.0 | 1.10.0+ |
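To make the matrix easier to apply, here is a minimal Python sketch that transcribes the table into a dictionary and checks whether a given Docker Engine version meets the minimum for a declared file format. The helper names are hypothetical and not part of Docker's tooling. It assumes plain numeric Engine versions (a suffix such as -ce would need stripping first) and leaves out the Compose Specification row, which has no format number.

```python
# Minimum Docker Engine release per Compose file format, transcribed from the table above.
MIN_ENGINE = {
    "3.8": "19.03.0", "3.7": "18.06.0", "3.6": "18.02.0", "3.5": "17.12.0",
    "3.4": "17.09.0", "3.3": "17.06.0", "3.2": "17.04.0", "3.1": "1.13.1",
    "3.0": "1.13.0", "2.4": "17.12.0", "2.3": "17.06.0", "2.2": "1.13.0",
    "2.1": "1.12.0", "2.0": "1.10.0",
}


def normalize_format(version: str) -> str:
    """Return "major.minor"; a bare major number gets minor 0, as Compose reads it."""
    parts = version.strip().strip("'\"").split(".")
    minor = parts[1] if len(parts) > 1 else "0"
    return f"{parts[0]}.{minor}"


def parse_engine(version: str) -> tuple:
    """Turn a plain numeric Engine version such as "19.03.0" into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


def engine_supports(file_format: str, engine_version: str) -> bool:
    """True if engine_version meets the minimum listed for file_format."""
    minimum = MIN_ENGINE[normalize_format(file_format)]
    return parse_engine(engine_version) >= parse_engine(minimum)


if __name__ == "__main__":
    print(normalize_format("3"))              # 3.0
    print(engine_supports("3.7", "18.09.1"))  # True
    print(engine_supports("3.8", "18.09.1"))  # False
```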

In addition to the Compose file format versions shown in the table, Compose itself is on a release schedule, as shown in Compose releases, but file format versions do not necessarily increment with each release. For example, Compose file format 3.0 was first introduced in Compose release 1.10.0 and versioned gradually in subsequent releases.

The latest Compose file format is defined by the Compose Specification and is implemented by Docker Compose 1.27.0+.

Looking for more detail on Docker and Compose compatibility?

We recommend keeping up-to-date with newer releases as much as possible. However, if you are using an older version of Docker and want to determine which Compose release is compatible, refer to the Compose release notes. Each set of release notes gives details on which versions of Docker Engine are supported, along with compatible Compose file format versions. (See also, the discussion in issue #3404.)

For details on versions and how to upgrade, see Versioning and Upgrading.

Versioning

There are three legacy versions of the Compose file format:

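Version 1. This is specified by omitting a version key at the root of the YAML.

Version 2.x. This is specified with a version: "2" or version: "2.1" (and so on) entry at the root of the YAML.

Version 3.x. This is specified with a version: "3" or version: "3.1" (and so on) entry at the root of the YAML.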

The latest and recommended version of the Compose file format is defined by the Compose Specification. This format merges the 2.x and 3.x versions and is implemented by Compose 1.27.0+.

v2 and v3 Declaration

Note: When specifying the Compose file version to use, make sure to specify both the major and minor numbers. If no minor version is given, 0 is used by default and not the latest minor version.

The Compatibility Matrix shows Compose file versions mapped to Docker Engine releases.

To move your project to a later version, see the Upgrading section.

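Several things differ depending on which version you use: the structure and permitted configuration keys, the minimum Docker Engine version you must be running, and Compose’s behavior with regard to networking.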

These differences are explained below.

Version 1 (Deprecated)

Compose files that do not declare a version are considered “version 1”. In those files, all the services are declared at the root of the document.

Version 1 is supported by Compose up to 1.6.x. It will be deprecated in a future Compose release.

Version 1 files cannot declare named volumes, networks or build arguments.

Compose does not take advantage of networking when you use version 1: every container is placed on the default bridge network and is reachable from every other container at its IP address. You need to use links to enable discovery between containers.

Version 2

Compose files using the version 2 syntax must indicate the version number at the root of the document. All services must be declared under the services key.

Version 2 files are supported by Compose 1.6.0+ and require a Docker Engine of version 1.10.0+.

Named volumes can be declared under the volumes key, and networks can be declared under the networks key.

By default, every container joins an application-wide default network, and is discoverable at a hostname that’s the same as the service name. This means links are largely unnecessary. For more details, see Networking in Compose.

When specifying the Compose file version to use, make sure to specify both the major and minor numbers. If no minor version is given, 0 is used by default and not the latest minor version. As a result, features added in later minor versions will not be supported. For example, version: "2" is treated as version: "2.0", not as version: "2.4".
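The rule above is easy to check mechanically. This is a hypothetical Python helper, assuming the version key sits unindented on a single line of the file: it reads the declared format from a compose file’s text, fills in a missing minor number with 0 as Compose does, and emits a warning when that happens.

```python
import re
import warnings
from typing import Optional

# A top-level (unindented) version key, e.g.  version: "2"  or  version: '3.7'
VERSION_RE = re.compile(r"""^version:\s*["']?(\d+)(?:\.(\d+))?["']?\s*$""", re.MULTILINE)


def declared_format(compose_text: str) -> Optional[tuple]:
    """Return (major, minor) for the declared format, or None if there is no version key.

    A missing minor number is read as 0, mirroring Compose, and a warning is
    emitted because features from later minor versions will not be available.
    """
    match = VERSION_RE.search(compose_text)
    if match is None:
        return None
    major = int(match.group(1))
    if match.group(2) is None:
        warnings.warn(f'version "{major}" is read as "{major}.0", not the latest {major}.x')
        return (major, 0)
    return (major, int(match.group(2)))
```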

How to Update Docker Compose on a Synology NAS

Today we are going to take a look at how you can update Docker Compose on a Synology NAS.

First off, if you aren’t sure how to use Docker Compose on a Synology NAS, I created a tutorial on how you can do it. The tutorial will walk you through Docker entirely and help you decide if Docker Compose is the right choice for you. This tutorial will focus on how you can update Docker Compose since the Docker Compose version shipped with a Synology NAS is fairly old.

Instructions

1. Ensure that you have Docker installed. Docker Compose is automatically installed on a Synology NAS, but the device must have Docker installed.

2. Ensure you can SSH into your Synology NAS. Open Control Panel, select Terminal & SNMP, and Enable SSH service. If you are using Synology’s Firewall, ensure that you allow port 22 traffic. I created a video on how to SSH into your Synology NAS if you have any problems.

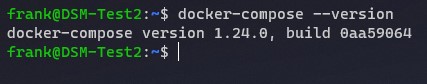

3. After you SSH into the device, run the command below to determine the version of Docker Compose you’re currently running.

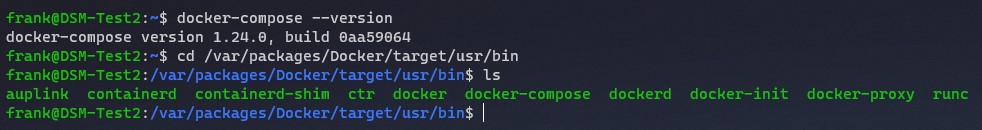
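If you’d rather script this check than read the output by eye, here is a minimal Python sketch. It assumes Python 3.7 or later is available in your SSH session and that docker-compose is on the PATH. It runs docker-compose --version and extracts the X.Y.Z number from the output; the helper names are hypothetical.

```python
import re
import subprocess
from typing import Optional


def parse_compose_version(output: str) -> Optional[str]:
    """Pull "X.Y.Z" out of text like 'docker-compose version 1.27.4, build 40524192'."""
    match = re.search(r"(\d+\.\d+\.\d+)", output)
    return match.group(1) if match else None


def installed_compose_version() -> Optional[str]:
    """Run `docker-compose --version` and return the version it reports."""
    result = subprocess.run(["docker-compose", "--version"],
                            capture_output=True, text=True, check=True)
    return parse_compose_version(result.stdout)
```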

4. Navigate to where the Docker Compose directory exists.

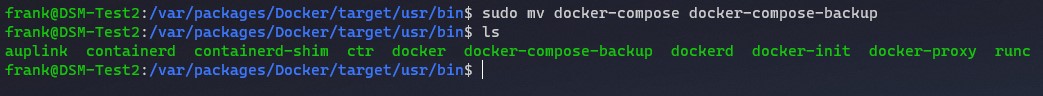

5. Back up the current directory by renaming it. This keeps a copy on your Synology NAS that will not be overwritten by the update.

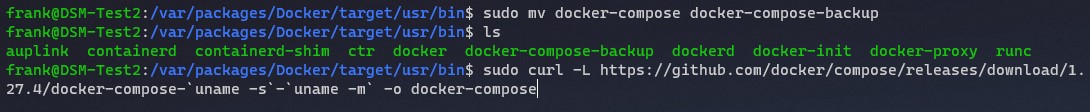

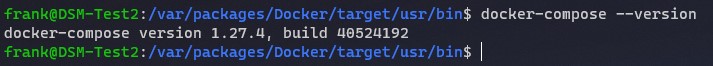

6. Now that the directory has been renamed, we can run the script below, which automatically updates Docker Compose. Note that at the time this tutorial was written, version 1.27.4 was the latest release. I would err on the side of caution and install only the latest release, but you must replace the version in the command below with the latest version. You can find the latest version on the Docker Compose releases page.

NOTE: If you update to a version that doesn’t appear to work, delete the folder by running the command below, then rerun the command above with the prior version.

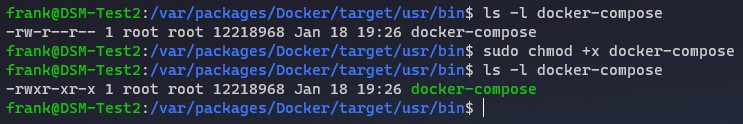
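The download step can also be sketched in Python. This assumes the GitHub release-asset naming used for Compose 1.x (docker-compose-<uname -s>-<uname -m>, for example docker-compose-Linux-x86_64). Later releases name their files differently, so check the releases page first. The destination is the path the old copy was moved away from in step 5, which is not spelled out here, and the function names are hypothetical.

```python
import platform
import urllib.request


def compose_download_url(version: str, system: str, machine: str) -> str:
    """GitHub release URL for a Compose 1.x binary, e.g. .../1.27.4/docker-compose-Linux-x86_64."""
    return ("https://github.com/docker/compose/releases/download/"
            f"{version}/docker-compose-{system}-{machine}")


def download_compose(version: str, destination: str) -> None:
    """Download the binary matching this machine to destination (overwrites it)."""
    # platform.system() and platform.machine() report what `uname -s` and `uname -m` print.
    url = compose_download_url(version, platform.system(), platform.machine())
    urllib.request.urlretrieve(url, destination)
```

For example, download_compose("1.27.4", "docker-compose"), run from the directory in step 4, would fetch release 1.27.4 into that folder.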

7. Update the permissions so that the folder can be executed. This command gives execute permission to the folder on top of the permissions that it currently has.
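The permission change amounts to adding the execute bits without touching the existing ones. A Python equivalent, with a hypothetical function name, looks like this. It sets execute for user, group and others, which is what chmod +x does under the usual 022 umask.

```python
import os
import stat


def add_execute_permission(path: str) -> None:
    """Add execute permission for user, group and others, keeping the existing bits."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
```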

8. Docker Compose is now updated! You can run the command below again to confirm that you’re on version 1.27.4. If you’re interested in learning why you might want to use Docker Compose as opposed to Synology’s Docker GUI, I explain it in greater detail in the video instructions!

Conclusion – Synology NAS Update Docker Compose

If you’ve made it this far, you can learn how to create Docker Compose containers on a Synology NAS in this tutorial. I also have a guide that will show you how to update Docker Compose containers. Some people find it easier to use Synology’s GUI, but Docker Compose opens a lot of possibilities for extremely easy container management!

As always, thank you for reading the tutorial. If you have any questions, please leave them in the comments!

Updating and Backing Up Docker Containers With Version Control

Issues with the latest tag

There are various reasons why an update of containers can go wrong. New images may have issues or new app or package versions may not be compatible with existing data (our images are automatically built and published and although our Jenkins does certain tests on the new image before publishing, they are not very extensive and do not cover updating with existing data). Currently, when an update breaks things, our recommendation is to go back to the previous tag. In most cases, the user has no idea which previous image they were using (as they know them only by the latest tag, rather than the versioned tags). So they would have to dig through the tag listings on Docker Hub and try older versioned tags and see if any of them work. This is not only time consuming, but also requires a higher degree of knowledge of Docker Hub and the tag concept.

Scripting versioned backups and updates

I will share with you a set of scripts I came up with to mitigate this problem: one command backs up the data and updates the containers, another restores the previously working state, and a third resumes updating to latest. Let’s start with the individual components and concepts first, and we’ll put together the whole script at the end.

Update concept

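Before we update the containers, we need to preserve the current working state. There are three components to that: the exact image versions (repo digests), the app data, and the container configuration (the docker compose yaml).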

With these three components, we can create an exact replica of our current working containers.

Backup concept

App data can be backed up via tar and stored in an archive file on the same machine or a remote machine. App data can also be transferred to another machine via rsync or any other copy protocol. In this case, we will use a tar archive but feel free to substitute your own method.
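Here is a sketch of the tar approach: two hypothetical Python helpers built on the standard tarfile module. The archive name appdatabackup.tar.gz and its location one folder above the app data folder follow the script described later. Everything else is an assumption, and the original script uses the tar command rather than Python.

```python
import os
import tarfile


def backup_appdata(appdata_dir: str) -> str:
    """Archive appdata_dir as appdatabackup.tar.gz one folder up; return the archive path."""
    appdata_dir = os.path.abspath(appdata_dir)
    archive = os.path.join(os.path.dirname(appdata_dir), "appdatabackup.tar.gz")
    with tarfile.open(archive, "w:gz") as tar:
        # Store entries under the folder's own name rather than its absolute path.
        tar.add(appdata_dir, arcname=os.path.basename(appdata_dir))
    return archive


def restore_appdata(archive: str, parent_dir: str) -> None:
    """Extract the archive into parent_dir, recreating the app data folder there."""
    # extractall trusts the paths stored in the archive: only use archives you made.
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(parent_dir)
```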

Repo digest
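A repo digest (name@sha256:<hash>) identifies the exact image content, whereas a tag such as latest can point at a different image after the next pull. One way to read it is docker image inspect with a Go template, sketched here in Python with hypothetical helper names. It only works for images pulled from (or pushed to) a registry. A purely local build has no repo digest, so the template fails.

```python
import subprocess
from typing import List


def digest_command(image: str) -> List[str]:
    """docker CLI call that prints the first repo digest recorded for a local image."""
    return ["docker", "image", "inspect",
            "--format", "{{index .RepoDigests 0}}", image]


def repo_digest(image: str) -> str:
    """Return something like 'linuxserver/nginx@sha256:<hash>' for a pulled image."""
    result = subprocess.run(digest_command(image),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```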

Saving the docker compose yaml is as simple as copying and renaming the file into our backup location for our reference later.

Restore concept

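If something breaks, we can restore the last working state with the following steps: stop and remove the current containers, move the broken app data aside, restore the app data from the backup, point the docker compose yaml at the saved repo digests, and recreate the containers.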

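Resume concept

Once we are ready to go back to the latest tags, we put the original image names and tags back into the docker compose yaml, then pull the images and recreate the containers.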

Constructing the script

Script prerequisites & assumptions

Now let’s try to put that whole structure into a somewhat automated script. But first, let’s establish some prerequisites and assumptions:

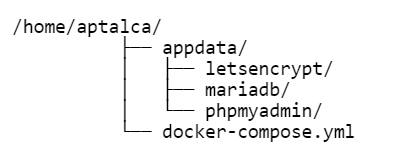

Here’s my folder structure that works with this script:

User variables

First we’ll set our user defined variables:

Functions

Update function

As mentioned above, our update function will do three things: 1) save the versions, 2) back up the app data, and 3) update images and recreate containers.

First, let’s make sure yq is installed:

Here’s how we figure out and save the versions:

Essentially, this part of the script retrieves and saves three pieces of information for each container (the container name, the original image name and tag, and the image’s repo digest) as comma-separated values, one line per container.

It will look something like this:

This versions.txt will be stored inside the app data folder so it can be backed up alongside the app data.
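Here is a minimal Python sketch of writing and reading versions.txt with the csv module. The column order (container, image, digest) is an assumption, because the original listing is not shown. The sketch writes plain \n line endings so shell tools can read the file as well.

```python
import csv
from typing import Dict, List, NamedTuple


class VersionRecord(NamedTuple):
    container: str  # container/service name
    image: str      # original image reference, e.g. linuxserver/nginx:latest
    digest: str     # repo digest saved at backup time


def write_versions(path: str, records: List[VersionRecord]) -> None:
    """One comma-separated line per container, no header, no spaces."""
    with open(path, "w", newline="") as fh:
        csv.writer(fh, lineterminator="\n").writerows(records)


def read_versions(path: str) -> Dict[str, VersionRecord]:
    """Load versions.txt into a dict keyed by container name, skipping blank lines."""
    with open(path, newline="") as fh:
        return {row[0]: VersionRecord(*row) for row in csv.reader(fh) if row}
```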

Alternative method without yq

You can enter all of your container and image names in the above format, comma separated, no spaces, and one container per line.

Continuing with the update function

Although the second step in the update is backing up the app data folder, we first need to stop the running containers. I also like to update the images before stopping the containers to minimize container downtime, as pulling images can take a significant amount of time for larger images and/or on slow connections.

Then we save a copy of our docker compose yaml inside the app data folder as well and back it up:

This will create an appdatabackup.tar.gz one folder up from our app data folder. Then we create the new containers as soon as backup is completed (to minimize downtime), and then fix our permissions and remove stale docker images:

Now we have updated our images and containers, and we have also preserved all the information needed to recreate our last working state in a tar archive.
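A Python sketch of the update steps in order might look like this. The backup step is passed in as a callable (for example, copying the compose yaml and archiving the app data), and the command runner can be swapped out so the order can be checked without Docker. The permission fix is left out because its owner and paths depend on your setup. All names are hypothetical.

```python
import subprocess
from typing import Callable, List

Command = List[str]


def run_checked(cmd: Command) -> None:
    """Run one command, raising CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)


def update(compose_file: str, backup: Callable[[], None],
           run: Callable[[Command], None] = run_checked) -> None:
    """Pull, stop, back up, recreate, prune: the order described above."""
    compose = ["docker-compose", "-f", compose_file]
    run(compose + ["pull"])                  # fetch new images while the old containers still run
    run(compose + ["stop"])                  # stop containers so app data is quiet during the backup
    backup()                                 # e.g. copy the compose yaml and tar the app data folder
    run(compose + ["up", "-d"])              # recreate containers from the newly pulled images
    run(["docker", "image", "prune", "-f"])  # remove dangling images left over from the update
```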

Restore function

First we stop and remove the existing containers:

Then we move/rename the current (potentially broken) app data folder by appending an 8-digit random string to its name (for reference):
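Moving the folder aside can be done like this in Python. The sketch uses secrets.token_hex(4), which yields 8 random hexadecimal characters rather than digits; any short random suffix serves the same purpose. The function name is hypothetical.

```python
import os
import secrets


def set_aside(appdata_dir: str) -> str:
    """Rename appdata_dir to '<appdata_dir>-<8 random hex chars>' and return the new path."""
    suffix = secrets.token_hex(4)  # 4 random bytes -> 8 hexadecimal characters
    moved = f"{appdata_dir.rstrip(os.sep)}-{suffix}"
    os.rename(appdata_dir, moved)
    return moved
```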

Now we can restore our app data folder from the backup:

And we can read the previously working image repo digests from the versions.txt file we saved before, and modify our docker compose yaml to pull those images:
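The original script edits the yaml with yq, one service at a time. As a dependency-free stand-in, here is a Python sketch that swaps image: values by matching them against a mapping built from versions.txt. It is a line-based approximation: it assumes one image: key per line with no trailing comment, and it matches on the image value rather than on the service name. The function name is hypothetical.

```python
import re
from typing import Dict

# An image: key at any indentation, with an optional matching quote around a value without spaces.
IMAGE_LINE = re.compile(r"""^([ \t]*image:[ \t]*)(['"]?)([^\s'"]+)\2[ \t]*$""", re.MULTILINE)


def swap_images(compose_text: str, mapping: Dict[str, str]) -> str:
    """Replace every image: value that appears as a key in mapping; leave the rest as-is."""
    def replace(match):
        prefix, quote, image = match.groups()
        return f"{prefix}{quote}{mapping.get(image, image)}{quote}"

    return IMAGE_LINE.sub(replace, compose_text)
```

For the restore, build the mapping as {image: digest}. For the resume step, invert it to {digest: image} and run the same function.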

Now that we have restored the last working app data and specified the last working image tags, we can create our containers to restore our last working state:

Resume function

Once we are ready to go back to the latest tags and resume updates, we can run the following function, which reads the original image names and tags and puts them back into our compose yaml:

Then we pull the images and recreate the containers:

After this point, the update function will continue pulling the latest images.

Full Script

Developing on Staxmanade

As a newcomer to the tooling, I find docker-compose great for getting started: a simple docker-compose up brings up all the service containers. However, what if you want to replace an existing container without tearing down the entire suite of containers?

For example: I have a docker-compose project that has the following containers.

I had made a small configuration change to the CouchDB container and wanted to rebuild and restart it, but wasn’t sure how to do that.

Here’s how I did it with little down time.

I’m hoping there are better ways to go about this (I’m still learning), but the following steps are what I used to replace a running docker container with the latest build.

Once the change has been made and container re-built, we need to get that new container running (without affecting the other containers that were started by docker-compose).
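The exact steps from the original post are not reproduced here. One common way to rebuild and restart a single Compose service while leaving the others running is docker-compose up -d --no-deps --build <service>. Below is a Python sketch that builds and runs that command; the function names are hypothetical and "couchdb" is just an example service name.

```python
import subprocess
from typing import List


def rebuild_command(service: str) -> List[str]:
    """docker-compose command that rebuilds and restarts one service only."""
    return ["docker-compose", "up", "-d",
            "--no-deps",  # don't also start the services this one links to or depends on
            "--build",    # build the service's image before starting its container
            service]


def rebuild(service: str) -> None:
    """Run the command in the current directory (the compose project); raise if it fails."""
    subprocess.run(rebuild_command(service), check=True)
```

Calling rebuild("couchdb") from the project directory would rebuild and restart only that container.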

That’s it. At least for me, this has worked to update my running containers to the latest version without tearing down the entire docker-compose set of services.

Again, if you know of a faster/better way to go about this, I’d love to hear it. Or if you know of any down-sides to this approach, I’d love to hear about it before I have to learn the hard way on a production environment.

UPDATE:

Happy Container Updating!

Posted by Jason Jarrett Sep 8th 2016 Docker docker-compose

Updating Docker Containers With Zero or Minimum Downtime

Suppose you are running a service in a container and a new version of the service is available as a Docker image. In that case, you will want to update the Docker container.

Updating a Docker container is not an issue, but updating it without downtime is challenging.

Confused? Let me show you both ways one by one.

Method 1: Updating docker container to the latest image (results in downtime)

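This method basically consists of these steps: pull the latest version of the image, stop the container running the old image, and run a new container from the updated image with the same parameters.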

Want the commands? Here you go.

List the Docker images and find the one that has an update. Get the latest changes to this image using the docker pull command:

Now get the container ID or name of the container that is running the older docker image. Use the docker ps command for this purpose.

Once you have the details, stop this container:

The next step is to run a new container with the same parameters you used for running the previous container. I believe you know what those parameters are, because you set them in the first place.
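The Method 1 commands can be laid out as a Python sketch. It adds a docker rm step that the text doesn’t mention: Docker refuses to create a container with a name that is still in use, so the stopped container has to be removed before its name can be reused. The image, container name and run arguments are placeholders, and the function names are hypothetical.

```python
import subprocess
from typing import List


def recreate_plan(image: str, container: str, run_args: List[str]) -> List[List[str]]:
    """Method 1 as a list of docker commands, in order."""
    return [
        ["docker", "pull", image],      # fetch the updated image
        ["docker", "stop", container],  # downtime starts here...
        ["docker", "rm", container],    # free the name so it can be reused
        ["docker", "run", "-d", "--name", container, *run_args, image],  # ...and ends here
    ]


def execute(plan: List[List[str]]) -> None:
    """Run each command in turn, stopping at the first failure."""
    for cmd in plan:
        subprocess.run(cmd, check=True)
```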

Do you see the problem with this approach? You have to stop the running container and then create a new one, which results in downtime for the running service.

The downtime, even if it lasts only a minute, can have a big impact on a mission-critical project or a high-traffic web service.

Want to know a safer and better approach for this problem? Read the next section.

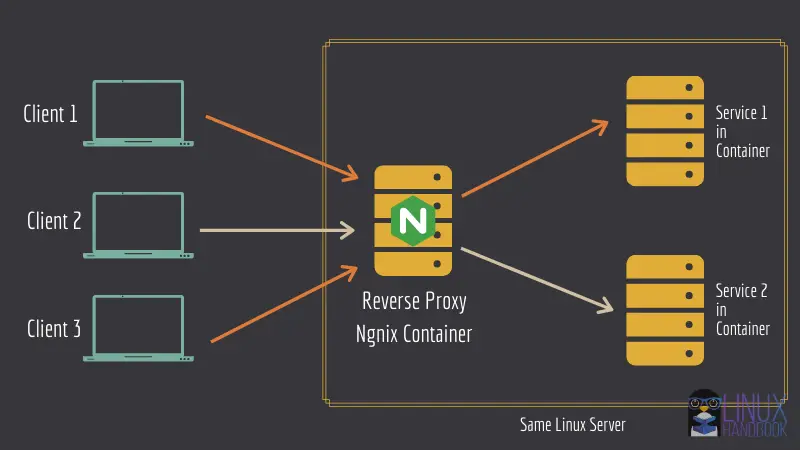

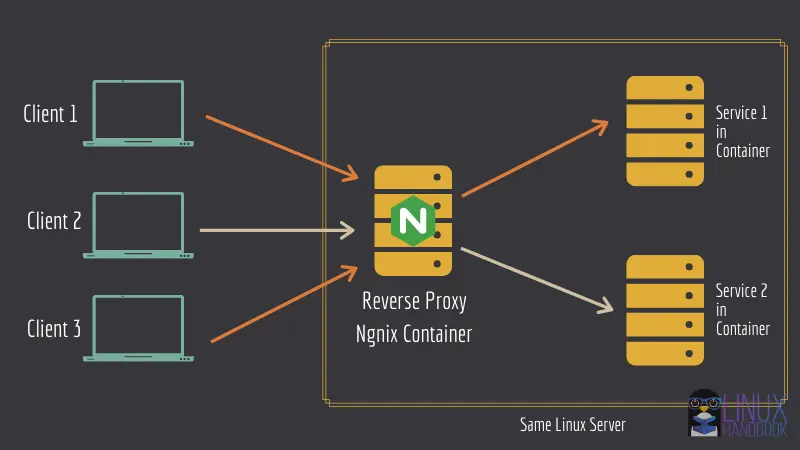

Method 2: Updating docker container in a reverse proxy setup (with zero or minimal downtime)

If you were looking for a straightforward solution, sorry to disappoint you: this one isn’t, because you’ll have to deploy your containers in a reverse proxy architecture with Docker Compose.

If you are looking to manage critical services using docker containers, the reverse proxy method will help you a great deal in the long run.

Let me list three main advantages of the reverse proxy setup:

If you’re curious to learn more, you can check out the official Nginx glossary that highlights the common uses of a reverse proxy and how it compares with a load balancer.

We have a great in-depth tutorial on setting up an Nginx reverse proxy to host multiple instances of web services running in containers on the same server, so I am not going to discuss it here again. You should go and set up your containers using this architecture first. Trust me, it is worth the trouble.

In this tutorial, I have designed a step-by-step methodology that can be very helpful in your day-to-day DevOps activities. It is useful not only when you update your containers but also when you need to make an important change to any of your running apps without sacrificing valuable uptime.

From here onwards, we assume that you are running your web applications under a reverse proxy setup that would ensure that rerouting works for the new up-to-date container as expected after the configuration changes that we are going to do.

I’ll show the steps of this method first, followed by a real life example.

Step 1: Update the docker compose file

First of all, you need to edit the existing docker compose file with the version number of the latest image. You can find it on Docker Hub, under the "Tags" section of the application’s page.

Move into the application directory and edit the docker-compose file with a command line text editor. I used Nano here.

You might wonder why I don’t just use the latest tag instead of specifying the version number manually. I did it on purpose: I’ve noticed that while updating containers, there can be an intermittent delay before the latest tag actually picks up the newest version of the dockerized application. When you use the version number directly, you can always be sure which version you get.

Step 2: Scale up a new container

When you use the following command, a new container is created based on the new changes made in the docker compose file.

Step 3: Remove the old container

After step 2, give it around 15-20 seconds for the new changes to take effect, and then remove the old container:

How the changes show up after you run the above command differs from one web app to another (discussed as a bonus tip at the very bottom of this tutorial).

Step 4: Scale down to the single container setup as before

For the final step, you scale down to the single container setup once again:
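Steps 2 to 4 can be strung together in a Python sketch like the one below. It assumes that step 1 is done, that it runs from the compose project directory, that a reverse proxy routes requests to whichever container is up, and that you know the old container’s name (for example from docker ps). The function name is hypothetical, and the runner can be swapped out so the sequence can be checked without Docker. It stops the old container with docker stop before removing it, so the shutdown is graceful.

```python
import subprocess
import time
from typing import Callable, List

Command = List[str]


def run_checked(cmd: Command) -> None:
    """Run one command, raising CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)


def zero_downtime_update(service: str, old_container: str,
                         run: Callable[[Command], None] = run_checked,
                         settle_seconds: float = 20) -> None:
    """Steps 2-4: scale to two containers, retire the old one, scale back to one."""
    # Step 2: start a second container from the edited compose file;
    # --no-recreate keeps Compose from replacing the old, still-serving container.
    run(["docker-compose", "up", "-d", "--scale", f"{service}=2", "--no-recreate"])
    # Give the new container (and the proxy) time to come up: 15-20 s in the text above.
    time.sleep(settle_seconds)
    # Step 3: stop the old container gracefully, then remove it.
    run(["docker", "stop", old_container])
    run(["docker", "rm", old_container])
    # Step 4: declare a single container for the service again.
    run(["docker-compose", "up", "-d", "--scale", f"{service}=1", "--no-recreate"])
```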

I have tested this method with Ghost, WordPress, Rocket.Chat and Nextcloud instances. Apart from Nextcloud, which switches to maintenance mode for a few seconds, the procedure works extremely well for the other three.

Discourse, however, is another story and can be a very tricky exception due to its hybrid model.

The bottom line is: the more the web app uses standard docker practice when dockerizing it, the more convenient it becomes to manage all web app containers on a day to day basis.

Real life example: Updating a live Ghost instance without downtime

As promised, here is a real-life example: updating Ghost running in a Docker container to a newer version without any downtime.

Ghost is a CMS and we use it for Linux Handbook. The example shown here is what we use to update our Ghost instance running this website.

Say I have an existing configuration based on an older version, located at /home/avimanyu/ghost:

When I check it with docker ps:

At this time of writing, this is an older version of Ghost. Time to update it to the latest version 3.37.1! So, I revise it in the image section as:

Now to put the scaling method to good use:

With the above command, the older container remains unaffected but a new one joins in with the same configuration but based on the latest version of Ghost:

Recreation (what docker-compose up does by default when a service’s configuration has changed) involves removing the older container and creating a new one in its place with the same settings. This is when downtime occurs and the site becomes inaccessible.

So now I have two containers running based on the same ghost configuration. This is the crucial part where we avoid downtime:

But for the Ghost blog itself, there is no downtime at all! So, in this manner you can ensure zero downtime when updating Ghost blogs.

Finally, scale down the configuration to its original setting:

As mentioned earlier, after removing old containers, the changes are reflected in the respective web apps, but they obviously behave differently due to diverse app designs.

Here are a few observations:

On WordPress: Ensure that you add define( 'AUTOMATIC_UPDATER_DISABLED', true ); as the last line of the wp-config.php file located at /var/www/html, and mount /var/www/html/wp-content instead of /var/www/html as a volume. Check here for details. After step 3, the WordPress admin panel will show that your WordPress is up to date and will ask you to proceed and update your database. The update happens quickly without any downtime on the WordPress site, and that’s it!

On Rocket.Chat: It can take around 15-20 seconds for the Admin>Info page to show that you are running the latest version even before you do step 3. No downtime again!

Bonus tips

Here are a few tips and things to keep in mind while following this method.

Tip 1

To keep downtime to a minimum, or even at zero, across different applications, give the newly scaled, up-to-date containers enough time for the Nginx containers to acknowledge them before you remove the old ones in step 3.

Before you move on to step 3, it is advisable to observe your application’s behavior in your browser (both as a page refresh after login and as a fresh page load in a private, cache-free browser window) after doing step 2.

Since each application is designed differently, this measure would prove very helpful before you bring down your old containers.

Tip 2

While this is a very useful method for updating your containers to the latest versions of the apps they run, note that you can use the same method to make configuration changes or modify environment settings without downtime.

This can be crucial if you must troubleshoot an issue or perform a change that you may deem necessary in a live container, but do not want to bring it down while doing so. After you make your changes and are sure that the issue is fixed, you can bring down the older one with ease.

We faced this ourselves when we found that log rotation wasn’t enabled in one of our live containers. We made the necessary changes to enable it and, thanks to this method, avoided any downtime while doing so.

Tip 3

If your docker compose file gives the service a custom name with container_name, comment out that line (with a # prefix) in the service definition before using this method, because Docker cannot run two containers with the same name.

After you create the new container in step 2, the old container (which was left running to avoid downtime) keeps the name you specified earlier with container_name, so that is the container to remove in step 3. You can also check the name with docker ps before removing the old container.
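Commenting out container_name can also be scripted. This hypothetical Python helper prefixes every container_name key with "# ". It touches every service in the file, so restrict it to the service you are updating if other services rely on fixed names.

```python
import re

# Any container_name key, keeping its indentation.
CONTAINER_NAME = re.compile(r"^([ \t]*)(container_name:)", re.MULTILINE)


def comment_out_container_names(compose_text: str) -> str:
    """Prefix every container_name key with '# ' so the service can run two containers."""
    return CONTAINER_NAME.sub(r"\1# \2", compose_text)
```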

Did you learn something new?

I know this article is a bit lengthy, but I do hope it helps our DevOps community manage downtime on production servers running dockerized web applications. Downtime has a huge impact at both the individual and the commercial level, which is why covering this topic was an absolute necessity.

Please join the discussion and share your thoughts, feedback or suggestions in the comments section below.

Sources:

- http://www.wundertech.net/how-to-update-docker-compose-on-a-synology-nas/

- http://www.linuxserver.io/blog/2019-10-01-updating-and-backing-up-docker-containers-with-version-control

- http://staxmanade.com/2016/09/how-to-update-a-single-running-docker-compose-container/

- http://linuxhandbook.com/update-docker-container-zero-downtime/